Chapter 1. A

Abacus

The abacus is perhaps the earliest mathematical device, if you don’t count making marks in the sand or cave paintings. It’s the ancient equivalent of a pocket calculator. The “hackers” of the Bronze Age definitely used abacuses.

The full story of the invention of the abacus is unknown. Historical records of its use date back as far as 2700 BC in Mesopotamia (approximately where Iraq and parts of Iran, Kuwait, Syria, and Turkey exist today), as well as ancient Egypt, Greece, Rome, China, India, Persia (the precursor to modern-day Iran), Japan, Russia, and Korea. The revolutionary abacus traveled the world centuries before motor engines and the printing press! But the device might be even older.

Abacuses are still manufactured, and they are frequently seen in young children’s classrooms. Some modern abacuses are also designed for blind people, such as the Cranmer Abacus, invented by Terence Cranmer of the Kentucky Division of Rehabilitation Services for the Blind in 1962.

Abacus designs vary, but their defining feature is a set of bars in rows or columns, usually in some sort of frame, with beads or similar objects as counters that can slide across each bar to change position horizontally (on a row) or vertically (on a column).

Here’s how an abacus works. Each bar represents a position in a numeral system, and the number of counters slid into place on that bar gives the digit for that position. For example, an abacus suited to the base 10, or decimal, numeral system we’re most familiar with in the modern world could have 10 bars and 10 counters on each bar. On the bar farthest to the right (the ones position), I could slide seven counters up. On the bar to its left (the tens position), I could slide two counters up. And on the bar left of that (the hundreds position), I could slide three counters up. That value represents 327. If I wanted to perform a very basic arithmetic operation, like addition or subtraction, I could reposition the counters accordingly. To add 21, I could slide two more counters up on the tens bar, next to the two already there, and one more counter up on the ones bar. So I could see that 327 plus 21 equals 348.
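The same counting rule works for any sum, as long as you handle carrying. Here’s a small Python sketch that models an abacus as a list of counter counts per bar, leftmost bar first. The function name and the choice to raise an error when the abacus runs out of bars are illustrative assumptions, not a standard method. When a bar would need ten or more counters, the sketch slides that bar back down by ten and adds one counter to the bar on its left: the carry step that the 327 + 21 example never needed.

```python
from typing import List


def abacus_add(bars: List[int], addend: int, base: int = 10) -> List[int]:
    """Add a non-negative integer to an abacus state.

    bars[i] is the number of counters slid up on bar i, with the leftmost
    (most significant) bar first, so [3, 2, 7] means 327.
    """
    if addend < 0:
        raise ValueError("this sketch only adds non-negative numbers")
    result = list(bars)  # don't modify the caller's abacus
    carry = 0
    # Work from the rightmost (ones) bar toward the left.
    for i in range(len(result) - 1, -1, -1):
        addend, digit = divmod(addend, base)  # peel off the addend's lowest digit
        total = result[i] + digit + carry
        result[i] = total % base  # counters left standing on this bar
        carry = total // base  # a full bar becomes one counter on the bar to its left
    if addend or carry:
        raise OverflowError("the sum needs more bars than this abacus has")
    return result


print(abacus_add([3, 2, 7], 21))  # [3, 4, 8], i.e. 327 + 21 = 348
print(abacus_add([3, 2, 7], 85))  # [4, 1, 2], i.e. 327 + 85 = 412, with two carries
```

Passing `base=2` turns the same sketch into a binary abacus: `abacus_add([1, 0, 1], 1, base=2)` returns `[1, 1, 0]`, which is 5 + 1 = 6.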

There are a lot of other arithmetic and algebraic methodologies that can be applied to an abacus, as well as different numeral systems. If you’re an imaginative math whiz, you could even create some of your own. Now you’re thinking like a hacker!

See also “Binary”, “Hexadecimal numbering”

Abandonware

Activision

Activision is one of the biggest names in video games. Its founders, David Crane, Larry Kaplan, Alan Miller, and Bob Whitehead, were definitely hackers. In the earliest era of console game development, they had to invent the craft almost from scratch. They started their careers making games for the Atari 2600, the most commercially successful second-generation video game console, which debuted in 1977. (The first generation consisted of the Magnavox Odyssey and a variety of home Pong clones released throughout the 1970s. The third generation was defined by Nintendo.)

After a couple of years, Crane, Kaplan, Miller, and Whitehead realized that the games they developed accounted for 60% of all Atari 2600 game sales. Their games made Atari about $20 million, whereas their salaries were about $20,000 a year. “The Gang of Four” felt significantly underpaid and wanted to be credited, so gamers would know who crafted their games. They left Atari in 1979 to form Activision, the very first third-party console game developer.

In 1983, however, the video game market crashed in North America, partly triggered by a plethora of low-quality Atari 2600 games. The crash forced Activision to diversify if it wanted to survive, so it started to produce games for home computers. In the late 1980s the company rebranded as Mediagenic and expanded to games for the Nintendo Entertainment System (NES) and for Commodore and Sega systems, as well as text-adventure games (as Infocom) and business software (as Ten Point O).

Mediagenic struggled to make a profit as the video game console and home PC markets evolved. In 1991, Bobby Kotick and a team of investors bought Mediagenic for about $500,000 and restored the company’s original name and its focus on video games. Activision rapidly grew and became profitable throughout the 1990s and 2000s. Notable titles from that era include Quake, the MechWarrior series, the Tony Hawk series, the Wolfenstein series, Spyro the Dragon, and the Call of Duty series.

In 2008, Activision merged with Vivendi Games to form Activision Blizzard, which Microsoft announced plans to acquire in 2022. As of this writing, Kotick still leads Activision Blizzard and is one of the wealthiest people in the industry.

See also “Atari”, “Commodore”, “Microsoft”, “Nintendo”, “Sega”

Adleman, Leonard

Adleman was born in San Francisco in 1945. According to his bio on the University of Southern California Viterbi School of Engineering’s website, a very young Leonard Adleman was inspired to study chemistry and medicine by the children’s science show Mr. Wizard. Adleman would actually become known for his innovations in computer science, but his destiny would cross into medicine later on in a peculiar way.

Adleman earned his first degree, a bachelor’s in mathematics, from the University of California, Berkeley, in 1968. Back then, mathematics degrees often led to computer programming jobs, and after graduation Adleman worked as a computer programmer at the Federal Reserve Bank in San Francisco. He returned to Berkeley soon after and earned his PhD in computer science in 1976.

With his doctorate in hand, Adleman went right to MIT to teach mathematics. There he began collaborating with his colleagues Ron Rivest and Adi Shamir, who shared his enthusiasm for public-key cryptography and the Diffie-Hellman key exchange. The three developed RSA (Rivest-Shamir-Adleman) cryptography in 1977. RSA’s big innovation was a practical way to encrypt messages with a key that can be published openly, so that only the holder of the matching private key can decrypt them. The sender and recipient never have to share a secret key in advance. This made public-facing cryptography, like the kind we use on the internet, a lot more feasible.
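The core math of RSA fits in a few lines. Here’s a toy Python sketch of textbook RSA using the tiny primes 61 and 53 and the public exponent 17, values that often appear in teaching examples (they’re illustrative choices, not from this entry). Real RSA uses primes hundreds of digits long plus a padding scheme such as OAEP; this unpadded version is insecure and only shows how a public key (n, e) encrypts and a private exponent d decrypts. It assumes Python 3.8 or later, where `pow(e, -1, phi)` computes a modular inverse.

```python
# Textbook RSA with tiny primes: for illustration only, never for real use.


def make_keypair(p: int, q: int, e: int):
    n = p * q  # public modulus
    phi = (p - 1) * (q - 1)  # Euler's totient of n
    d = pow(e, -1, phi)  # private exponent: the inverse of e modulo phi
    return (n, e), (n, d)


def encrypt(public_key, m: int) -> int:
    n, e = public_key
    return pow(m, e, n)  # anyone holding the public key can do this


def decrypt(private_key, c: int) -> int:
    n, d = private_key
    return pow(c, d, n)  # only the holder of d can undo it


public, private = make_keypair(61, 53, 17)
c = encrypt(public, 65)
print(public, private, c, decrypt(private, c))  # (3233, 17) (3233, 2753) 2790 65
```

Anyone can compute `pow(m, e, n)` with the published pair (n, e), but undoing it requires d, and deriving d from (n, e) requires factoring n into p and q, which, as far as anyone knows, is infeasible when the primes are large enough.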

In 1980, Adleman moved to Los Angeles to join the University of Southern California, and he later became a pioneer of DNA computing, likely inspired by his childhood memories of Mr. Wizard. The Viterbi School says, “He is the father of the field of DNA computation. DNA can store information and proteins can modify that information. These two features assure us that DNA can be used to compute all things that are computable by silicon based computers.”

In 2002, Rivest, Shamir, and Adleman received a Turing Award, the highest honor in computer science, for their work. Mr. Wizard would indeed be proud. All three of the inventors of RSA encryption have entries in this book: Ron Rivest, Adi Shamir, and Leonard Adleman.

See also “Berkeley, University of California”, “Cryptography”, “Rivest-Shamir-Adleman (RSA) cryptography”, “Rivest, Ron”, “Shamir, Adi”

Advanced persistent threat (APT)

APT stands for advanced persistent threat, a cool-sounding name for teams of malicious cyberattackers with advanced technological skills and knowledge. Most are nation-state-sponsored cyberwarfare units or sophisticated organized-crime entities. APTs create their own malware, find their own exploits, and do technologically complex reconnaissance work—that’s the “advanced” part.

Different cybersecurity vendors and organizations have different ways to describe the phases of an APT attack. But it all boils down to infiltrating a targeted network, establishing persistence, and spreading the attack to more parts of the targeted network. Here’s the National Institute of Standards and Technology’s definition:

An adversary that possesses sophisticated levels of expertise and significant resources that allow it to create opportunities to achieve its objectives by using multiple attack vectors including, for example, cyber, physical, and deception. These objectives typically include establishing and extending footholds within the IT infrastructure of the targeted organizations for purposes of exfiltrating information, undermining or impeding critical aspects of a mission, program, or organization…. The advanced persistent threat pursues its objectives repeatedly over an extended period; adapts to defenders’ efforts to resist it; and is determined to maintain the level of interaction needed to execute its objectives.1

Among cybersecurity people, “script kiddie” is a term for a generally low-skilled threat actor: someone who conducts attacks by running malicious scripts or applications developed by other people. APTs are the polar opposite of that!

APTs are “persistent” in that they don’t strike and run, like a computerized drive-by shooting. They can maintain an unauthorized presence in a computer network for a few weeks or even years.

APTs are threats in the fullest sense: they have malicious intentions, and they’re capable of doing great harm. They rarely bother with ordinary computer users. Instead, APTs are behind attacks on industrial facilities, such as the 2010 Stuxnet attack on a uranium enrichment plant in Iran, and thefts of terabytes’ worth of sensitive data from financial institutions.

See also “Cybersecurity”, “Stuxnet”

Agile methodology

See “DevOps”

Airgapping

No computer is 100% secure. The closest we can get is by airgapping: that is, restricting access to a computer’s data as much as possible by not having any connections to any networks whatsoever. Not to the internet, not to any internal networks, not even to any printer devices or network-attached storage. That’s the most literal network-security definition, at any rate.

If you want to do a really proper job, you should physically remove any network interface cards and disable any USB ports and optical drives that aren’t absolutely necessary for the computer’s authorized use. USB ports and optical drives aren’t network connections, but they are potential cyberattack surfaces: places where a threat actor could send malicious data. Ideally, an airgapped computer should also be in a room protected by excellent physical security, including locked physical doors that require the user to authenticate.
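As a rough aid for checking the first of those steps, here’s a minimal Python sketch that lists any network interfaces the operating system still reports besides loopback. It uses the standard library’s `socket.if_nameindex()` (available on Unix and, since Python 3.8, on Windows); treating `lo` and `lo0` as the loopback names is an assumption based on common Linux and macOS naming, and the helper name is hypothetical. It only sees what the OS lists as network interfaces, so it says nothing about USB ports, optical drives, or anything else the OS doesn’t report as an interface, and virtual interfaces (such as those created by container software) will show up too.

```python
import socket

# Names that conventionally denote the loopback device
# (assumption: "lo" on Linux, "lo0" on macOS and BSD).
LOOPBACK_NAMES = {"lo", "lo0"}


def non_loopback_interfaces(interfaces):
    """Return the names of interfaces other than loopback.

    interfaces: iterable of (index, name) pairs, as produced by
    socket.if_nameindex().
    """
    return [name for _index, name in interfaces if name not in LOOPBACK_NAMES]


if __name__ == "__main__":
    leftovers = non_loopback_interfaces(socket.if_nameindex())
    if leftovers:
        print("The OS still sees these interfaces:", ", ".join(leftovers))
    else:
        print("Only loopback is present.")
```

An empty result is a necessary sign of a properly airgapped machine, not proof of one.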

As you might have gathered by now, airgapping a computer makes it very inconvenient to use. For that reason, airgapped computers are typically used only for highly sensitive data in situations with extremely strict security standards, like military and national security agencies or industrial control systems in power plants. They also have applications in digital forensics and incident response (DFIR), where digital forensics specialists need to assure a court of law that their evidence hasn’t been tampered with through networks or other means of data transmission.

As with all cybersecurity techniques, there’s a constant battle between security professionals and those who seek to outwit them. Mordechai Guri, director of the Cybersecurity Research Center at Ben-Gurion University, is well known for coming up with ways to exfiltrate data from airgapped computers, in hopes of staying several steps ahead of attackers. If you’re curious about his ideas, I wrote about Guri’s work for the Tripwire blog in 2017, and Discover magazine reported on another of his scenarios in 2022.

See also “Cybersecurity”

Akihabara, Tokyo

Akihabara, a neighborhood of Tokyo, Japan, is just as relevant to hacker culture as Silicon Valley is.

Some hackers are total weeaboos (Westerners who are fans of Japanese popular culture), as I am. And in Japan, an otaku is pretty much any sort of obsessive nerd, including those with technological fixations. Akihabara is a hub for Japanese hackers and otaku culture.

During the Meiji period in 1869, a massive fire destroyed large parts of Tokyo, including what is now Akihabara. Railways were part of the city’s reconstruction. In 1890, Akihabara train station was built, and the surrounding neighborhood was named after the station, which is still a major fixture of the area.

When World War II ended in 1945, an unregulated “black market” for radio components appeared in Akihabara, earning it the nickname “Radio Town.” Until 1955, when Sony launched the TR-55 radio, hobbyists in Japan typically built their own radios. If you wanted to find the postwar equivalent to hackers, they’d be in Akihabara, feeding their passion. Akihabara was also close to Tokyo Denki University, and bright young minds from the technical school poured into the neighborhood’s radio component shops.

Radio broadcasting boomed in 1950s Japan, becoming an important source of news and entertainment, and the growing demand for radio equipment fed Akihabara’s economy. The General Headquarters (GHQ) of the US-led Allied occupation, which governed Japan from 1945 to 1952, directed electronics shops to move to Akihabara. This expanded the variety of nerdy goods sold in the neighborhood, which soon became known as “Electric Town.”

This was also the period in which anime and manga culture started to thrive. The seeds of that phenomenon were sown through the genius of Osamu Tezuka, known as the God of Manga. Tezuka pioneered manga and anime, and many other Japanese artists followed his lead. His 1950s Astro Boy and Princess Knight manga series kicked off the widespread popularity of Japanese comics. In the 1960s, TV shows based on his work, such as Astro Boy and Kimba the White Lion, made anime a commercially profitable medium.

From the 1970s until roughly 1995, Akihabara was still known as Electric Town. The built environment went from a series of rustic electronics flea markets to impressive multistory retail buildings covered in neon signs. Then, in 1995, the launch of Windows 95 brought thousands of computer hackers to the neighborhood, eager for their own copy of Microsoft’s operating system. More electronics shops opened, selling more PC and computer networking equipment, as well as video game consoles.

Video games also began to incorporate anime and manga art and culture, most notably in Japanese role-playing games (JRPGs) and visual novels. For instance, Akira Toriyama created the Dragon Ball manga series and illustrated the Dragon Quest series, Japan’s most popular JRPG franchise. Once shops in Akihabara started selling video games, anime and manga products of all kinds followed.

In the 2020s, Akihabara remains a hacker paradise where you can pick up PC hardware, electronics components, PS5 games, and even a ¥30,000 figure from The Melancholy of Haruhi Suzumiya for good measure.

See also “Atlus”, “Nintendo”, “Sega”, “Tokyo Denki University”

Alderson, Elliot

Alphabet Inc.

Alphabet Inc. is the holding company that owns Google and thus has a massive amount of control over the internet. Google founders Larry Page and Sergey Brin founded Alphabet in 2015 to enable a variety of projects, including:

- Calico: a project to improve human health and longevity via technology

- CapitalG: a venture-capital endeavor

- Waymo: formerly known as the “Google Self-Driving Car Project”

- Google Fiber: an internet service provider and telecommunications company

- DeepMind: an artificial intelligence research company

See also “Google”

Amazon Web Services (AWS)

Amazon Web Services (AWS) is a major provider of cloud computing infrastructure and services for businesses. In the early 2000s, Amazon built on its success as one of the earliest online retailers by offering datacenter services to other major US retailers, such as Target. Many of these big retailers had started as brick-and-mortar stores and needed Amazon’s help to scale their online offerings.

Scaling, in this context, means expanding to meet demand. If a company needs 1x storage and bandwidth one month, 8x storage and bandwidth the next, and 3x storage and bandwidth the following month, the degree to which that infrastructure adjusts accordingly is called its scalability. Big retailers need a datacenter backend that is huge, scalable, and flexible, and creating that was a real challenge. As John Furrier writes in Forbes, “The idea grew organically out of [Amazon’s] frustration with its ability to launch new projects and support customers.”
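To make the 1x, 8x, 3x example concrete, here’s a back-of-the-envelope Python sketch comparing two hypothetical setups: a fixed datacenter that must be built for the busiest month and keeps that capacity all quarter, and an elastic backend that is resized every month. It assumes, purely for illustration, that cost is proportional to provisioned capacity; real cloud pricing is more complicated, and the function names are made up for this example.

```python
# Hypothetical monthly demand, in multiples of a baseline ("1x") amount of
# storage and bandwidth: the 1x / 8x / 3x example from the text.
MONTHLY_DEMAND = [1, 8, 3]


def fixed_capacity(demand):
    """Capacity paid for when infrastructure is sized for the peak and never shrinks."""
    return max(demand) * len(demand)


def elastic_capacity(demand):
    """Capacity paid for when infrastructure scales to each month's actual demand."""
    return sum(demand)


def utilization(demand, provisioned):
    """Fraction of provisioned capacity that was actually used."""
    return sum(demand) / provisioned


fixed = fixed_capacity(MONTHLY_DEMAND)  # 8 * 3 = 24 unit-months
elastic = elastic_capacity(MONTHLY_DEMAND)  # 1 + 8 + 3 = 12 unit-months
print(fixed, elastic, utilization(MONTHLY_DEMAND, fixed))  # 24 12 0.5
```

In this toy model, the fixed setup sits half idle over the quarter, which is the waste a scalable backend is meant to avoid.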

In 2006, Simple Storage Service (S3), the first major AWS component, went online. AWS features have been expanding ever since. In the wake of the success of AWS, other major tech companies launched cloud platforms and services. AWS’s two biggest competitors, Google Cloud Platform and Microsoft Azure, both trace their origins to 2008, when Google launched App Engine in preview and Microsoft announced what was then called Windows Azure.

See also “Cloud”

AMD (Advanced Micro Devices)

Advanced Micro Devices (AMD) was founded in 1969 in Sunnyvale, California, in the heart of what is now Silicon Valley. AMD makes billions from graphics processors (GPUs), chipsets, and motherboard components, but its competition with Intel is what made AMD the huge tech company it is today.

The x86 CPU architecture debuted with Intel’s groundbreaking 8086 processor in 1978, and it has been predominant in PCs ever since. In desktops and laptops, the two main CPU competitors are Intel and AMD.

In 1982, IBM wanted at least two different suppliers of CPUs, so that it would have redundancy in its supply chain in case something happened with either supplier that would slow down production. Intel couldn’t afford to lose IBM as a customer, so it made an agreement with AMD for both companies to produce processors based on 8086 and 8088 technology. But by 1984, the agreement had fallen apart; Intel stopped sharing its research and development with AMD. In 1987, AMD sued Intel for developing the 386 without cooperating with AMD. After a long battle through the courts, the Supreme Court of California ruled in AMD’s favor in 1994.

Intel released its first 486 CPU in April 1989. AMD’s equivalent, the Am486, didn’t come out until 1993, but Acer and Compaq, PC manufacturing giants at the time, used it frequently in their products. In 2006, AMD bought ATI for a whopping $5.4 billion and became a major competitor to NVIDIA.

AMD’s Ryzen CPU series, launched in 2017, has been AMD’s most commercially successful foray into the gaming CPU market. It features x86-64 architecture and Zen microarchitecture, for you CPU nerds out there.

See also “CPU (Central processing unit)”, “IBM”, “Intel”

Amiga

The Commodore 64, first released in 1982, was the world’s top-selling PC by 1984. It was technologically impressive for its time and had a large third-party software library that included business and programming utilities and a huge variety of games. But Commodore knew it needed to produce PCs with more advanced and innovative technology to compete with Atari, IBM, and Apple.

Activision cofounder Larry Kaplan approached Atari hardware designer Jay Miner about starting a new company to produce a new video game hardware platform with its own proprietary operating system. That produced Amiga in 1982, with Miner leading its research and development team in creating a Motorola-68000-based prototype, code-named “Lorraine.” Trouble was brewing in Commodore’s C-suite, however, despite the massive success of the Commodore 64. Founder Jack Tramiel fought with primary shareholder Irving Gould and was dismissed from the company in 1984. Tramiel moved to Atari, and many top Commodore engineers followed him.

January 1984 was probably the most important month in the history of hacker culture. Tramiel left Commodore, 2600 Magazine published its debut issue, the historic Apple Super Bowl commercial launched the Macintosh, the author of this book was born, and the Amiga team presented their working prototype at the Consumer Electronics Show. Attendees were mesmerized by the Boing Ball, a red-and-white checkerboard-patterned 3D graphic that showcased the Amiga’s astonishing graphical capabilities.

Commodore bought Amiga later in 1984 and released the Amiga 1000 in 1985. PC World called the Amiga 1000 “the world’s first multimedia, multitasking personal computer.” It featured the fully graphical and mouse-navigable AmigaOS 1.0, cutting-edge graphics, a Motorola 68000 CPU, and built-in audio. Its variety of audio and video outputs made it great for gaming, but also for digital video editing. There had never been such a graphically advanced PC before, and NewTek’s Video Toaster, a video production system for the Amiga, became a professional standard for digital video editing. Commodore couldn’t produce enough Amiga 1000s to meet demand.

But by 1993, Commodore was losing market share to multimedia “IBM-compatible” PCs (and, to a lesser extent, the Apple Macintosh line). In a dramatic move, Commodore reconfigured its Amiga PC hardware into the Amiga CD32 game console, which (probably deliberately) looked a bit like the Sega Genesis. It wasn’t enough; in 1994, Commodore went into voluntary bankruptcy liquidation. What a sad end! But hackers look back on the Amiga fondly.

See also “Activision”, “Atari”, “Commodore”, “Consumer Electronics Show (CES)”

Android operating system

(For androids as in humanoid robots, see “Robotics”.)

There is only one mobile operating system that gives Apple’s iOS any real competition: Android. If iOS is the Coca-Cola of the mobile OS world, Android is its Pepsi.

The world’s first mobile OS was the short-lived PenPoint, introduced for PDAs in 1991.2 It was followed in 1993 by Apple’s Newton OS and then Symbian, a joint venture between several mobile phone manufacturers that claimed 67% of the smartphone market share in 2006. But Apple introduced the iPhone in 2007, and iOS became the first mobile platform to sell devices in the hundreds of millions (and then billions). Apple operating systems like iOS and macOS are only supposed to run on Apple hardware, although hackers have been installing them on non-Apple hardware for years.

Android Inc. was founded in 2003 by Rich Miner, Nick Sears, Chris White, and Andy Rubin, who originally conceived of it as an operating system for digital cameras. Google bought the company in 2005. In November 2007, hoping to compete with the iPhone, Google founded the Open Handset Alliance with HTC, LG, Sony, Motorola, and Samsung.

Android first launched in 2008, running on the HTC Dream, a touchscreen smartphone that relied on a slide-out physical keyboard for typing. Like Symbian, Android was designed to run on devices made by multiple manufacturers. It is based on a Linux kernel. Android entered the market at the right time and replicated some of what Apple was doing right.

The 2009 Android 1.5 Cupcake was the first Android version to have an on-screen touchscreen keyboard, and 2010’s Android 2.3 Gingerbread really established Android’s “visual language,” as a UI designer might say. The 2015 Android 6.0 Marshmallow introduced the ability for users to decide which permissions to grant which apps (for instance, you can deny an app camera access), a big step forward for privacy. The 2019 Android 10 went further, letting users grant location access only while an app is in use.

Anonymous

Anonymous is the most notorious hacktivist group ever. Laypeople may not have heard of LulzSec or the Cult of the Dead Cow. But if they read “We are Anonymous. We are Legion. We do not forgive. We do not forget,” and see Guy Fawkes masks, their imaginations run wild.

The origin of Anonymous can be traced back to the infamous 4chan forum in 2003. In 4chan culture, it’s very poor form to enter a username when you post. To be able to generate posts for the “lulz” without the inconvenience of accountability, you’d better leave the name field blank. So whoever you are and wherever you are in the world, your name is posted as “Anonymous.” And all the l33t posters on 4chan are “Anonymous.”

One of the earliest Anonymous “ops” to get public attention was 2008’s Operation Chanology. Operation Chanology was a massive online and offline protest campaign against the Church of Scientology. That’s how I first got involved. Offline protests took place in various US, Canadian, Australian, British, and Dutch cities between February and May of that year.

Some other campaigns that have been attributed to Anonymous include 2010’s Operation Payback, against the Recording Industry Association of America (RIAA) and the Motion Picture Association of America (MPAA); 2011’s Operation Tunisia, in support of the Arab Spring; 2014’s Operation Ferguson, in response to the racism-motivated police murder of Michael Brown in Ferguson, Missouri; and 2020’s vandalism of the United Nations’ website to post a web page supporting Taiwan.

Here’s a little secret that’s actually not a secret at all: Anonymous doesn’t have strictly verifiable membership, like a motorcycle gang, a law enforcement agency, or the Girl Scouts would. Anyone can engage in hacktivism online or protest offline in the name of Anonymous. Anyone who posts on 4chan is “Anonymous,” and that extends to the hacktivist group. Do not forgive and do not forget, ’kay?

See also “Hacktivism”

Apache/Apache License

The world’s first web server was launched by Tim Berners-Lee in December 1990. Another early web server, NCSA httpd, was released in 1993 by the National Center for Supercomputing Applications (NCSA). However, as the Apache HTTP Server Project website explains, “development of that httpd had stalled after [developer] Rob [McCool] left NCSA in mid-1994, and many webmasters had developed their own extensions and bug fixes that were in need of a common distribution. A small group...gathered together for the purpose of coordinating their changes (in the form of ‘patches’).” Eight of those core contributors (known as the Apache Group) went on to launch Apache HTTP Server in 1995, replacing NCSA httpd.

Microsoft launched its proprietary IIS (Internet Information Services) software the same year, and the two have dominated web-server market share ever since. IIS is optimized to run on Windows Server, whereas Apache was originally built for UNIX-like operating systems such as Linux; today there are versions of Apache for Windows Server as well. Apache is open source under its own Apache License. Anyone who develops their own open source software may use the Apache License if they choose.

See also “Berners-Lee, Tim”, “Open source”

Apple

Apple, a little tech company you might have heard of, became the world’s first $3 trillion company in 2022. It has more money than many nations now, but when Steve Jobs and Steve Wozniak founded it on April 1, 1976, Apple was indeed little (and it was no April Fool’s joke, either). Not long before Jobs and Wozniak founded Apple, they were phone phreaking and attending meetings of the Homebrew Computer Club. As Jobs said in an interview: “If it hadn’t been for the Blue Boxes, there would have been no Apple. I’m 100% sure of that. Woz and I learned how to work together, and we gained the confidence that we could solve technical problems and actually put something into production.”

Wozniak designed the first Apple product, the Apple I, as a kit. Users could buy the motherboard but had to add their own case, peripherals, and even a monitor. Homebrew, indeed.

In 1977, Woz and Jobs debuted the Apple II at the first West Coast Computer Faire in San Francisco. The Apple II was more user friendly, with a case, a keyboard, and floppy-disk peripherals. But the really exciting feature that set it apart from the Commodore PET (which also debuted at the Faire) was its color graphics. When color television was introduced in the 1950s, NBC designed its peacock logo to show off the network’s color broadcasting; similarly, the original rainbow Apple logo was designed to show off the Apple II’s colors. The Apple II was the first Apple product to become widely successful. It inspired many early game developers.

In 1983, Jobs chose former Pepsi president John Sculley to be CEO of Apple. According to biographer Walter Isaacson,3 Jobs asked Sculley, “Do you want to spend the rest of your life selling sugared water, or do you want a chance to change the world?”

Earlier in 1983, shortly before Sculley arrived, Apple had introduced the Lisa, the first Apple product to have an operating system with a graphical user interface (GUI). It was ambitious but a commercial failure. It was priced at US$9,995 (a whopping US$27,000 today) and marketed to businesses, but only sold about 10,000 units.

The following year, Apple launched the Macintosh (known today as the Macintosh 128K) with a George Orwell-themed Super Bowl commercial that only aired once. The ad declared, “On January 24th, Apple Computer will introduce Macintosh. And you’ll see why 1984 won’t be like 1984.”

At US$2,495, or about US$6,500 today, the Macintosh was still expensive, but Apple was targeting hobbyists with some disposable income. It featured a Motorola 68000 CPU, 128 KB of RAM, a built-in display and floppy drive, and the first version of (Classic) Mac OS, then called System 1 or Macintosh System Software. It even came with a mouse and keyboard. The Macintosh sold very well in its first few months, but then sales tapered off.

In 1985, Wozniak stopped working for Apple in a regular capacity, though he never stopped being an Apple employee. Steve Jobs also left that year, citing his and Sculley’s conflicting visions for the company. Sculley was replaced as CEO by Michael Spindler in 1993, followed by Gil Amelio (formerly of Bell Labs) in 1996. Amelio laid off a lot of Apple’s workforce and engaged in aggressive cost cutting. I’m not a fan of the reputation Steve Jobs has for mistreating employees, but it seems like Amelio was an even more destructive leader.

Many different Macintosh models were released before Jobs rejoined Apple in 1997. The Macintosh II came out in March 1987, featuring a Motorola 68020 CPU, a whopping 1 MB of RAM, and System 4.1. It showed how much PC hardware had improved since 1984’s Macintosh, full colors and all. Yes, the Apple II also had colors, but not the Macintosh II’s computing power!

The 1980s and 1990s brought the Macintosh Portable (1989), Apple’s first proper laptop computer, followed by the Macintosh PowerBook series (1991–97) and, for businesses, the Macintosh Quadra (1991–95) and Power Macintosh (1994–98) lines.

Apple also produced some experimental yet commercially released mobile devices, such as the Apple Newton line of personal digital assistants (1993–98). Although 1997’s eMate 300 had a physical keyboard, most Newton devices used touchscreen input with a stylus. However, Newton OS’s handwriting recognition feature didn’t work well. Such mistakes definitely informed Apple’s later development of iPods, iPhones, and iPads.

In early 1997, Apple bought NeXT, the company Jobs had founded after leaving Apple, and Jobs became Apple’s interim CEO later that year. One of his first moves was to get rid of 70% of Apple’s product line.

The iMac (1998) ushered in the new vision Jobs had for Apple products, featuring striking designs by Jony Ive. Its monitor and motherboard resided in one unit, available in a variety of candy colors at a time when most PCs were some shade of beige. Ive also designed Apple Park, the corporate campus, in Cupertino, California.

A new operating system, Mac OS X, debuted in 2001, and so did the iPod. For the first time, Apple was selling mobile devices in the hundreds of millions of units, as well as entering digital-music sales. From the late 1990s to the mid-2000s, iMacs and iPods made Apple an extremely profitable company. The first MacBook line launched in May 2006 and is still Apple’s top-selling Mac line as of this writing.

The first iPhone launched in 2007 with its own OS, iPhone OS 1.0. Some were skeptical that consumers would want a smartphone without a physical keyboard, but Apple had the last laugh, since now we all carry phones that are just one solid touchscreen interface with a motherboard and lithium-ion battery behind it. The iPhone line continues to sell like hotcakes. Meanwhile, the first iPad debuted in April 2010, and by the time this book is published, the iPad will be on its eleventh generation.

In 2003, Jobs was diagnosed with pancreatic cancer. As his health worsened, Jobs became Apple’s chairman and made Tim Cook CEO. Jobs died in 2011; Cook is still CEO as of this writing, and billions more iPhones, iPads, and MacBooks have been sold.

See also “Captain Crunch (John Draper)”, “Commodore”, “Graphical user interface (GUI)”, “Homebrew Computer Club”, “Jobs, Steve”, “Personal computers”, “Silicon Valley”, “Wozniak, Steve”

ARM (Advanced RISC Machines)

ARM (Advanced RISC Machines) is a family of CPU architectures used predominantly in mobile devices and embedded computers. (RISC stands for “reduced instruction set computer.”) The vast majority of non-Apple PCs these days use x86 CPUs, which are now only produced by two companies: Intel and AMD. (Apple has been putting its own ARM architecture CPUs in its MacBooks these days.) But ARM licenses its architectures to lots of semiconductor brands—kind of like how Android phones are produced by a wide range of companies. Small single-board computers designed for hobbyist hackers, such as the Raspberry Pi series, also use CPUs with ARM architecture.

ARM’s design makes advanced computing possible while typically consuming a lot less electricity and producing a lot less heat than x86 CPUs. That’s a big reason why we’ve got computers in our pockets that are more powerful than PCs from 10 years ago, yet don’t need fans or external vents for thermal regulation.

Acorn Computers (1978–2015), a mighty British tech company people seldom talk about anymore, is the reason ARM technology drives our very small computers. Its first major product was the BBC Micro line of PCs (1981), made for the British Broadcasting Corporation’s Computer Literacy Project. In 1985, Acorn developed its first RISC CPU, the ARM1. Toward the end of the 1980s, Acorn worked with Apple on a CPU for Apple’s Newton line of touchscreen mobile devices (a precursor to iPhones); in 1990, that collaboration became Advanced RISC Machines Ltd., a joint venture of Acorn, Apple, and chipmaker VLSI Technology.

See also “AMD (Advanced Micro Devices)”, “CPU (Central processing unit)”, “Intel”, “Raspberry Pi”

ARPAnet

The Advanced Research Projects Agency Network (ARPAnet) was the precursor to the modern internet, developed in 1969 under what is now the US Defense Advanced Research Projects Agency (DARPA).

One of ARPAnet’s earliest tests came on October 29, 1969, when Charley Kline, a student programmer working under professor Leonard Kleinrock at the University of California, Los Angeles (UCLA), sent the first packet-switched message from a UCLA computer to one at the Stanford Research Institute (SRI). The system crashed before Kline could type the “G” in “LOGIN”! They eventually worked out the bugs and proved that ARPAnet could send short messages. ARPAnet gradually came to connect US military facilities, academic computer science departments, and technological development facilities in the private sector. By 1976, ARPAnet had 63 connected hosts.

One of the main differences between ARPAnet and the modern internet is that the latter is built on TCP/IP, the standard internet protocol suite that makes it possible for devices and applications from any makers to communicate. ARPAnet was a technological mess in its earliest years, because there was little technological standardization between the hosts that were trying to send each other packets.
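To make “packet-switched” a little more concrete, here’s a toy model in Python. It is a sketch under simplifying assumptions, not real TCP/IP: the function names (to_packets, deliver, reassemble) are made up for illustration, and the pretend network never loses a packet; it only shuffles their order. The idea it shows is one that TCP relies on: a message is chopped into numbered packets that may arrive in any order, and the receiver uses the sequence numbers to rebuild the original.

```python
import random

# Toy model of packet switching (NOT real TCP/IP). Each packet is a
# (sequence_number, payload) pair; the function names are hypothetical.

def to_packets(message: str, size: int) -> list[tuple[int, str]]:
    """Split a message into numbered packets of at most `size` characters."""
    return [(seq, message[start:start + size])
            for seq, start in enumerate(range(0, len(message), size))]


def deliver(packets: list[tuple[int, str]], seed: int) -> list[tuple[int, str]]:
    """Pretend network: delivers every packet, but in a shuffled order."""
    in_transit = list(packets)
    random.Random(seed).shuffle(in_transit)
    return in_transit


def reassemble(packets: list[tuple[int, str]]) -> str:
    """Put packets back in sequence-number order and join their payloads."""
    return "".join(payload for _, payload in sorted(packets))


packets = to_packets("LOGIN", size=2)   # [(0, 'LO'), (1, 'GI'), (2, 'N')]
arrived = deliver(packets, seed=1969)   # same packets, possibly reordered
print(reassemble(arrived))              # LOGIN
```

Real TCP also acknowledges packets, retransmits lost ones, and controls how fast data is sent; none of that is modeled here.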

In 1973, Vinton Cerf and Bob Kahn started work on the development of TCP/IP to solve that problem. In 1982, the US Department of Defense declared TCP/IP to be the protocol standard for all military computer networking. ARPAnet was completely migrated from its original Network Control Program (NCP) to TCP/IP on January 1, 1983, the date many computer historians consider the birthdate of the internet. (ARPAnet was a Scorpio, but the modern internet is a Capricorn.) The ARPAnet project was decommissioned in 1990. From 1983 to 1990, ARPAnet and the internet overlapped, and toward the end of that period, Tim Berners-Lee began developing the World Wide Web.

See also “DARPA (Defense Advanced Research Projects Agency)”, “Internet”, “TCP/IP (Transmission Control Protocol/Internet Protocol)”, “World Wide Web”

Artificial intelligence (AI)

Artificial intelligence (AI) is all about making computers think. There was a lot of buzz around OpenAI’s ChatGPT project in the early 2020s, but AI research and development began in the 1950s. Improvements in computer technology over the decades have made AI more versatile and sophisticated. Most people encounter AI every day, in everything from Google search suggestions to computer-controlled bad guys in video games.

In 1963, DARPA began funding AI research at MIT. The 1968 film 2001: A Space Odyssey imagined that machines would match or exceed human intelligence by 2001. A major milestone in AI development came when IBM’s Deep Blue computer beat world chess champion Garry Kasparov in a six-game match in 1997.

A foundational concept in AI is the Turing test, based on Alan Turing’s 1950 paper “Computing Machinery and Intelligence”. His proposal was about answering the question “Can machines think?” Today the term “Turing test” commonly refers to whether or not a computer program can fool someone into thinking that it’s human. (Turing imagined the conversation taking place through typed text, so an AI doesn’t need a human-like body to pass the Turing test.) Advanced AI chatbots like ChatGPT can often pass for human in conversation, unless you simply ask whether they’re human. ChatGPT’s reply to that was, “No, I’m not a human. I’m an artificial intelligence language model created by OpenAI called ChatGPT. My purpose is to understand natural language and provide helpful responses to people’s queries.”

See also “ChatGPT”, “DARPA (Defense Advanced Research Projects Agency)”, “IBM”, “Massachusetts Institute of Technology (MIT)”, “Turing, Alan”

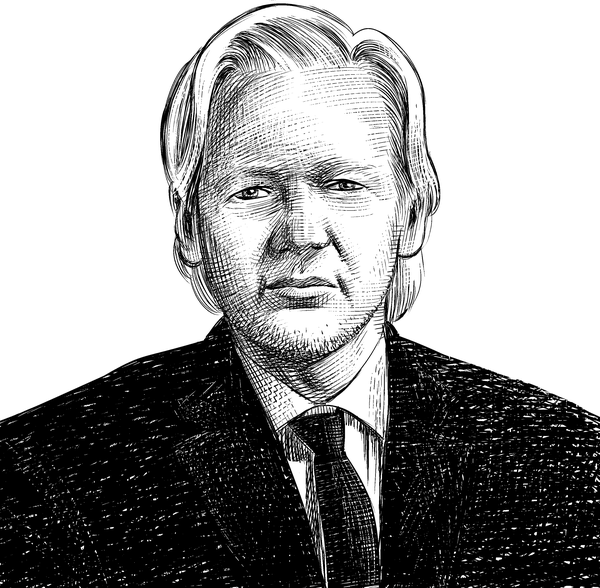

Assange, Julian

Julian Assange (1971–) is most notable for founding WikiLeaks, a controversial online platform for journalists and sources to expose classified information to the masses. Born in Townsville, Queensland, Australia, Assange has spent his life exposing dark truths hidden by many of the most powerful people, militaries, and intelligence agencies in the world.

Assange’s childhood featured a lot of homeschooling and correspondence courses as his family traveled around Australia. He had an unusual talent for computers and, in the late 1980s, joined International Subversives, an early hacktivist group. Under the handle “Mendax,” Assange hacked into some impressively prominent networks, including those of NASA and the Pentagon.

This got the Australian authorities’ attention. Assange was arrested in 1991, eventually charged with 31 counts of cybercrime, and, after pleading guilty to most of them in 1996, sentenced to a small fine. (In the early 1990s, people who weren’t computer professionals likely would not have understood computer networks very well, so perhaps the judge didn’t comprehend the serious implications of hacking into the Pentagon!)

Assange launched WikiLeaks in 2006 and began publishing sensitive data that December. The site became world-famous in 2010, when US Army intelligence analyst Chelsea Manning, a hacker herself, leaked nearly half a million classified documents to WikiLeaks that exposed horrific US Army activities in Iraq and Afghanistan. For doing so, Manning was imprisoned from 2010 to 2017, much of that time in profoundly psychologically traumatizing solitary confinement. She was subpoenaed to testify against Assange in February 2019 but, with much integrity and conviction, refused. A judge found her in contempt of court, and Manning spent most of the following year back in jail.

Courts in the Five Eyes countries of Australia, the United Kingdom, and the United States became harsher toward Assange in the wake of the Iraq and Afghanistan leaks. In 2010, Sweden issued an international warrant for Assange’s arrest on rape allegations.4 Assange was arrested in London, held for a little over a week, and then granted bail. In May 2012, however, a British court ruled that Assange should be extradited to Sweden to face questioning over the rape allegations. Instead, he sought refuge in the Ecuadorean embassy in London. Ecuador granted him asylum, and Assange lived in the building for nearly seven years! London Metropolitan Police entered the embassy and arrested him in 2019 for “failing to surrender to the court.” As of this writing, he has been incarcerated at HM Prison Belmarsh in London ever since, and has been infected with COVID at least once.

The US Department of Justice has been working since 2019 to extradite Assange to the United States, where he could be harshly punished for the Iraq and Afghanistan leaks. Social justice activists and his lawyers have been fighting his extradition, and even Australian Prime Minister Anthony Albanese has suggested that Assange has suffered enough and implied that it might not be right to extradite him to the United States.

See also “Manning, Chelsea”, “WikiLeaks”

Assembly

The vast majority of computers that came after ENIAC (the early digital computer, from 1945) have some sort of CPU: game consoles, smartphones, PCs, internet servers, and even the embedded computers in your internet-connected car and in the elevators in my apartment building. ENIAC didn’t really have a CPU per se; 1949’s BINAC (Binary Automatic Computer) introduced vacuum-tube-based CPUs. When microprocessors emerged in the 1970s, CPUs began to take the form we’re familiar with today.

Computer programming languages can be classified according to how much abstraction they have from a CPU’s instruction set. The most abstracted, high-level programming languages, such as Perl and Python, are the most human readable and intuitive. Low-level programming languages, by contrast, speak the most directly to the CPU. Most of them are assembly languages, arguably the most esoteric of programming languages. An assembly language uses human-readable mnemonics (short codes such as MOV, ADD, or CALL), each of which usually stands for a single machine instruction. Because every CPU family has its own instruction set, there are different assembly languages for different types of CPUs.
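To see what “one mnemonic per machine instruction” means in practice, here’s a toy assembler and CPU in Python. Everything about this machine is hypothetical: the LOAD/ADD/SUB/PRINT/HALT mnemonics and their opcode numbers are invented for illustration and don’t correspond to any real processor, and real machine code is raw binary rather than a Python list of numbers. It’s a sketch of the idea, not a model of how a real assembler such as MASM works.

```python
# Hypothetical toy CPU, invented for illustration only: these mnemonics and
# opcode numbers do not belong to any real processor. As in real assembly
# languages, each source line maps to exactly one machine instruction.
OPCODES = {"LOAD": 1, "ADD": 2, "SUB": 3, "PRINT": 4, "HALT": 0}
TAKES_OPERAND = {"LOAD", "ADD", "SUB"}


def assemble(source: str) -> list[int]:
    """Translate lines like 'ADD 21' into a flat list of numbers (machine code)."""
    machine_code = []
    for raw_line in source.splitlines():
        line = raw_line.split(";")[0].strip()   # drop comments and whitespace
        if not line:
            continue
        mnemonic, *operands = line.split()
        mnemonic = mnemonic.upper()
        machine_code.append(OPCODES[mnemonic])
        if mnemonic in TAKES_OPERAND:
            machine_code.append(int(operands[0]))
    return machine_code


def run(machine_code: list[int]) -> list[int]:
    """Execute machine code on a one-register (accumulator) machine."""
    acc, pc, printed = 0, 0, []
    while True:
        opcode = machine_code[pc]
        if opcode == 0:                  # HALT: stop and return the output
            return printed
        elif opcode == 4:                # PRINT: record the accumulator
            printed.append(acc)
            pc += 1
        elif opcode in (1, 2, 3):        # instructions with one operand
            operand = machine_code[pc + 1]
            if opcode == 1:              # LOAD n: acc = n
                acc = operand
            elif opcode == 2:            # ADD n: acc = acc + n
                acc += operand
            else:                        # SUB n: acc = acc - n
                acc -= operand
            pc += 2
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc}")


program = """
LOAD 327   ; put 327 in the accumulator
ADD 21     ; add 21 to it
PRINT      ; output the result
HALT
"""
code = assemble(program)
print(code)       # [1, 327, 2, 21, 4, 0]
print(run(code))  # [348]
```

The assembler’s only job is lookup and translation; all the actual work happens when the CPU (here, the run function) steps through the numbers one instruction at a time.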

Hello World programs illustrate how different high-level programming languages are from assembly code. Here’s a Hello World program for 64-bit Windows by René Nyffenegger, written for Microsoft Macro Assembler (MASM), Microsoft’s assembler for x86 and x64 processors, with the comments removed. Rather than printing to a console, it pops up a Windows message box:

EXTRN __imp_ExitProcess:PROC
EXTRN __imp_MessageBoxA:PROC
_DATA SEGMENT
$HELLO DB 'Hello...', 00H
ORG $+7
$WORLD DB '...world', 00H
_DATA ENDS
_TEXT SEGMENT
start PROC
    sub rsp, 40 ; 00000028H
    xor ecx, ecx
    lea rdx, OFFSET $WORLD
    lea r8, OFFSET $HELLO
    xor r9d, r9d
    call QWORD PTR __imp_MessageBoxA
    xor ecx, ecx
    call QWORD PTR __imp_ExitProcess
    add rsp, 40
    ret 0
start ENDP
_TEXT ENDS
END

Compare that to how simple it is to create a Hello World program in Python 3:

print('Hello, world!')
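Python code never turns into assembly directly, but the standard CPython interpreter does compile it into lower-level bytecode instructions for its own virtual machine, and the standard library’s dis module can list them. The sketch below is a rough analogy, not real CPU machine code: it shows the one-liner broken into a short, assembly-like sequence of steps. The exact instruction names and their count differ between Python versions, so no sample output is shown.

```python
import dis

# Compile the one-line Hello World without running it.
source = "print('Hello, world!')"
code = compile(source, "<hello>", "exec")

# Print the bytecode listing: one instruction per line, with its argument.
dis.dis(code)

# The same instructions as objects, e.g. to check which names and constants are used.
instructions = list(dis.get_instructions(code))
arguments = [instr.argval for instr in instructions]
```

Even this tiny program needs several bytecode steps (look up the name print, load the string constant, call the function), which hints at how much work a high-level language does on the programmer’s behalf.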

The technologically sophisticated software we use these days would be nearly impossible to create without high-level programming languages. But there’s probably some assembly-language code on your phone and on your PC, where it’s most often used to develop hardware drivers (which let your devices talk to their operating systems) and bootloaders (which boot up your machine and check its hardware).

Some older personal computers and video game consoles, like the Commodore 64 and the Nintendo Entertainment System, had so little memory and processing power that most of their games were written in assembly language, even though the Commodore 64 shipped with a built-in BASIC interpreter. Those early game developers must have been very patient people.

See also “ENIAC (Electronic Numerical Integrator and Computer)”, “Hello World”, “Programming”, “Syntax”, “Turing, Alan”

Atari

The Atari company, founded in 1972 by Nolan Bushnell and Ted Dabney, was integral to the creation of today’s video game industry. Atari led the direction of tropes, mechanics, and standards in arcade-game machines and, later on, video game consoles. Today, it’s a mere shell (or shell-company-owned shell) of its former self, with the value of the Atari brand completely anchored to nostalgia. But early Atari was saturated with hacker culture and the hacker spirit.

Many historians point to Spacewar! as the very first video game. In the game, players control dot spaceships that shoot at each other. Spacewar! was developed in 1962 by hackers at MIT on the DEC PDP-1, a groundbreaking computer that, like MIT’s earlier TX-0, played a revolutionary role in the development of computer science. Its display was a CRT-based scope using output technology developed for military aircraft. It was made by hackers, for hackers.

Bushnell and Dabney formed Syzygy Engineering to produce the game Computer Space, their own version of Spacewar!, in late 1971, and formed Atari Inc. in June 1972. Bushnell’s background in electronic engineering and amusement parks helped him see the commercial potential of video games. At the time, the hardware and overall technology were so expensive that home consoles would have been impractical, so the business revolved around placing game machines in public spaces where players could gather: arcades.

The pioneering research and development work of Ralph Baer in the 1960s was also instrumental in Atari’s early arcade games and consoles. Baer conceptualized a simple table-tennis video game in November 1967, five years before Atari released Pong. Baer also created a “Brown Box” prototype in 1969, which formed the basis of the Magnavox Odyssey, the very first home video game console.

Through the 1970s, Atari released the arcade games Space Race, Gotcha, Rebound, Tank!, PinPong, Gran Trak 10, and Breakout, which Steve Wozniak helped to design in the months before he co-founded Apple with Steve Jobs. Bushnell sold Atari to Warner Communications in 1976.

Likely inspired by the Magnavox Odyssey’s commercial success, Atari released its first home game console in 1977. It was first known as the Video Computer System (VCS), then renamed the Atari 2600.

Atari’s in-house game developers included David Crane, Larry Kaplan, Alan Miller, and Bob Whitehead. Unhappy with their pay and treatment at Atari, in 1979 the four went on to found Activision, the very first third-party video game developer and publisher, where they continued to make games for the Atari 2600.

Atari released its 400 and 800 home computers in November 1979, but the competition was stiff as the early 1980s brought the Commodore 64 and the Apple Macintosh. (Commodore founder Jack Tramiel would eventually buy Atari.) Home consoles and, later, handheld consoles from Nintendo and Sega dominated the market into the early 1990s. Atari’s glory days pretty much ended there.

See also “Activision”, “Baer, Ralph”, “Commodore”, “Nintendo”, “Sega”, “Wozniak, Steve”

Atlus

Atlus, founded in 1986, was one of the top Japanese video game development and publishing companies before it was acquired by Sega in 2013. While US-based companies like Activision and Electronic Arts developed out of Silicon Valley’s hacker community, Japan has a hacker culture of its own, based in the Akihabara area of Tokyo.

Atlus’s first original game was 1987’s Digital Devil Story: Megami Tensei. Its plot was based on a novel by Aya Nishitani in which the protagonist summons demons from a computer, unleashing horrors on the world. Players must negotiate with the demons as well as fight them. Megami Tensei/Shin Megami Tensei became a massive game series (I’m a huge fan, personally). Its most recent entry as of this writing is 2021’s Shin Megami Tensei V. Because only the more recent games have been officially localized into English, hackers created unofficial fan translations, distributed as ROM hacks, so that people could play the older games in English.

The Persona series, a Shin Megami Tensei spin-off that started in 1996, features dark, psychological stories set in high schools. It has surpassed the original series in worldwide sales and popularity.

See also “Akihabara, Tokyo”, “Nintendo”, “Sega”

Augmented and virtual reality

Augmented reality (AR) is often compared and contrasted with virtual reality (VR). When you put on a VR headset, everything you perceive in your environment is generated by a computer: you’re inside the video game. In AR, you see the real world around you, but it’s augmented with digital elements.

AR caught on in the public consciousness in 2016, with the release of the mobile game Pokémon GO. The game uses the phone’s rear camera to show the world behind it. For example, if you were playing Pokémon GO and standing in front of a rosebush, you’d see the rosebush exactly as you’d see it in your phone’s camera when taking video—but, if you were lucky, an AR-generated Charmander might appear, and you could throw an AR-generated Pokéball to catch it. That summer, it seemed like everyone and their uncle was wandering around towns and cities, desperate to catch an Eevee or a Squirtle. Some people still play Pokémon GO today, but 2016 marked the height of the game’s popularity.
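The core trick is simple to sketch: take the frame the camera just captured and draw computer-generated pixels on top of it, letting the real world show through wherever the virtual object is transparent. The toy below uses small grids of characters as stand-in “images,” and the overlay function and its parameters are hypothetical. Real AR apps like Pokémon GO also have to track the phone’s position and orientation so the creature stays anchored in place, which this sketch skips entirely.

```python
# Toy sketch of AR compositing. "Images" are lists of equal-length strings;
# the overlay() function and its parameters are hypothetical.

def overlay(camera_frame: list[str], sprite: list[str], top: int, left: int,
            transparent: str = ".") -> list[str]:
    """Return a copy of camera_frame with sprite drawn at row `top`, column `left`.

    Sprite cells equal to `transparent` let the real-world pixel show through;
    parts of the sprite that fall outside the frame are clipped.
    """
    result = [list(row) for row in camera_frame]
    for r, sprite_row in enumerate(sprite):
        for c, pixel in enumerate(sprite_row):
            y, x = top + r, left + c
            if pixel != transparent and 0 <= y < len(result) and 0 <= x < len(result[y]):
                result[y][x] = pixel
    return ["".join(row) for row in result]


camera = [            # what the rear camera sees
    "~~~~~~~~",       # sky
    "~~~~~~~~",
    "##R#R###",       # rosebush (R = roses)
    "########",
]
creature = [          # computer-generated creature
    ".@.",
    "@@@",
]

for row in overlay(camera, creature, top=1, left=3):
    print(row)
```

In VR, by contrast, there would be no camera frame at all: every “pixel” would come from the computer.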

In 1968, Ivan Sutherland created the “Sword of Damocles”, a head-mounted device that looked like a contraption you might find in an optometrist’s clinic. It could display cubes and other very simple graphical objects within the user’s field of vision.

VR and AR overlapped in a 1974 experiment by the University of Connecticut’s Myron Krueger. A 1985 demonstration video of his Videoplace “artificial reality” lab shows a silhouette of a real person, moving in front of the viewer in real time, while interacting within a completely computer-generated world.

Boeing’s Tom Caudell coined the term augmented reality in 1990. The US Air Force’s Armstrong Research Lab sponsored AR research, hoping to improve piloting tools and training. There, in 1992, Louis Rosenberg developed his Virtual Fixtures system, which involved a lot of custom hardware peripherals for the user’s head, arms, and hands. AR’s utility in aviation extended to space exploration as well: NASA created a hybrid synthetic-vision system for its X-38 spacecraft that could generate a heads-up display in front of the pilot’s view. Hirokazu Kato’s open source ARToolKit launched in 2000, opening the way for more AR video games and software utilities in the years to follow.

Google Glass, launched in 2014, was Google’s first venture into AR, but was commercially unsuccessful. Google Glass looks very much like vision-correcting glasses, but with a tiny display and camera in front of one lens. Google Glass resembles augmented reality glasses prototypes made by Steve Mann. Mann has been called “the father of wearable computing” and “the father of wearable augmented reality.”

With Google Glass, users could see graphical user interfaces (GUIs) for applications in their field of vision. The release of Google Glass provoked some public backlash when it emerged that users could take photos and film video without alerting anyone around them. Its $1,500 price tag probably didn’t help sales, either. Google is continuing to develop AR glasses and peripherals, perhaps with lessons learned from Google Glass.

See also “Google”

Autistic

Many people believe that there’s an association between hacking skills and autistic traits, and I speculate that many hackers are autistic, including some of the people I mention in this book.

Being autistic means having a distinct sort of neurology that makes people more interested in creative, intellectual, and sensory pursuits than pointless small talk and group conformity. Autistic people often have intense sensory sensitivities, loving or avoiding certain sensations or foods (it’s different for everyone). Some autistics need direct support with their daily activities; some are nonspeaking or situationally nonspeaking. Nonautistics often fail to recognize the intelligence of nonspeaking autistics because nonspeaking autistics don’t share their ideas in societally accepted ways. Each and every autistic person is unique, and autism presents uniquely in each of us.

I’m autistic, and I’m fully verbal. I live and work alone, communicating with colleagues via emails and Zoom chats. In public, conscious of being watched, I use body language and posture that are generally perceived as “normal.” In private, I stim with broken earbud cords while pounding away at my laptop keyboard,5 engrossed in my work. I learn quickly from computers and books, and can focus on my interests with greater depth than nonautistics. While that’s led me into a successful career as a cybersecurity researcher and writer, autistic people shouldn’t be judged according to whether or not we have marketable talents.

Modern medicine has pathologized autism, treating it as a disease. It has been defined, studied, and treated almost exclusively by nonautistic so-called experts. We are not treated as the experts on ourselves, and it’s no coincidence that almost all autism treatments, both mainstream and fringe, have been tremendously harmful.

The harm caused by such “treatments” is the reason why I and many other autistic people reject being called terms like “person with autism” and “high functioning/low functioning,”6 as well as Lorna Wing’s “spectrum” model. There’s no such thing as being “more autistic” or “less autistic.” Either you’re autistic or you’re not.

As an autistic critic of capitalism and ableism, I have two coinciding ideas. The first is that, although not all autistic people are technically inclined, eliminating us and our traits would significantly slow down technological progress. The second is that each and every autistic life has value, and each and every autistic should be accepted as they are. Our value as a people should not be determined by how useful we are to capitalism or whether our talents can be monetized. Defining our value in these absurd ways has had tragic results, including many autistic suicides.

I believe that official diagnosis by nonautistic “experts” will one day be considered as absurd as treating homosexuality as a psychiatric disorder. I hope that in future generations, people will discover that they’re autistic the same way LGBTQ+ people today discover their sexual orientations: through self-discovery and a community of their peers.

AWS

Azure

Microsoft Azure, launched in 2008, is Microsoft’s cloud platform and a major competitor to Amazon’s AWS.

Azure originates from Microsoft’s acquisition of Groove Networks in 2005. It appears that Microsoft hoped originally to spin Groove into something that would look like a combination of today’s Office 365 software as a service (SaaS) and Microsoft Teams. Instead, Microsoft used the infrastructure and technology from Groove as the foundation of Azure. (Groove cofounder Ray Ozzie also created Lotus Notes.)

Azure was launched as Windows Azure. Microsoft originally marketed it toward developers with niche web deployment needs, as an alternative to Amazon EC2 (an AWS service that provides virtual machines) and Google App Engine. Rather than offering only infrastructure as a service (IaaS), which simply provides server machines and networking hardware, Azure launched as a platform as a service (PaaS), which adds a managed computing platform layer (operating system, runtime, and related services) on top of the infrastructure.

Between 2009 and 2014, Windows Azure gradually launched features and services for content delivery networks (CDNs), Java, PHP, SQL databases, its own proprietary .NET Framework, a Python SDK (software development kit), and Windows and Linux virtual machines.

Possibly because its features went well beyond what people think of in the Windows desktop and Windows Server realms, it was rebranded in 2014 as Microsoft Azure. By 2019, Azure had datacenters on all major continents (Antarctica doesn’t count!), and it beat AWS and Google Cloud Platform to become the first major cloud platform with a physical presence in Africa, launching datacenters in Cape Town and Johannesburg, South Africa.

See also “Cloud”, “Microsoft”

1 National Institute of Standards and Technology (NIST), Special Publication 800-160 (Developing Cyber-Resilient Systems).

2 Personal digital assistants (PDAs), such as the BlackBerry and PalmPilot, were handheld mini-tablet devices for notes and calendars.

3 Walter Isaacson, Steve Jobs (Simon & Schuster, 2015), pp. 386–87.

4 Is Assange really a rapist, or were the allegations made up to arrest him for the Iraq and Afghanistan leaks? I honestly don’t know, and I can only speculate, but I definitely think that people should be believed when they say they were raped. Rape victims are usually treated horribly and seldom receive justice in the courts, as the advocacy group Rape, Abuse & Incest National Network (RAINN) has documented.

5 Many autistic people do self-stimulation movements that aid us in focusing. When nonautistic people do this, it’s often called “fidgeting.” In autistic culture, we call it “stimming.”

6 Another term best avoided is Asperger syndrome, named for Hans Asperger, who conducted his autism research under the auspices of Nazi Germany. Unfortunately, even current research often falls into the pseudoscience of eugenics.
