Chapter 1. Introduction to Green Software

You wouldn’t like me when I’m angry.

Dr. Bruce Banner, green scientist

We can see why activists might be angry. Few industries have moved fast enough to support the energy transition, and that includes the tech sector.

But we are beginning to change.

What Does It Mean to Be Green in IT?

According to the Green Software Foundation (GSF), the definition of green software (or sustainable software) is software that causes minimal emissions of carbon when it is run. In other words:

-

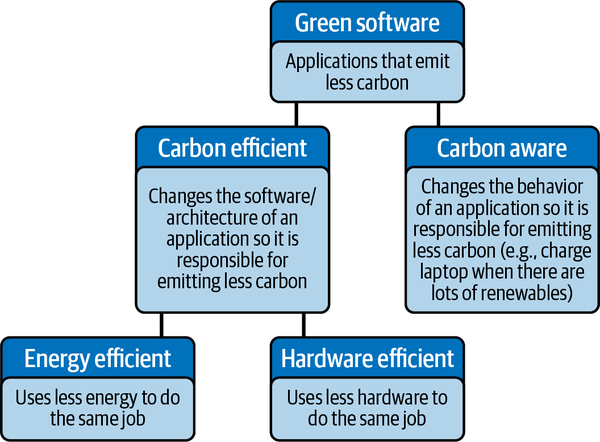

Green software is designed to require less power and hardware per unit of work. This is known as carbon efficiency on the assumption that both the generation of power and the building of hardware tend to result in carbon emissions.

-

Green software also attempts to shift its operations, and therefore its power draw, to times and places where the available electricity is from low-carbon sources like wind, solar, geothermal, hydro, or nuclear. Alternatively, it aims to do less at times when the available grid electricity is carbon intensive. For example, it might reduce its quality of service in the middle of a windless night when the only available power is being generated from coal. This is called carbon awareness.

Being energy efficient, hardware efficient, and carbon aware are the fundamental principles of green computing (see Figure 1-1).

Figure 1-1. The Green Software Foundation’s definition of green software
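To make the "do less when the grid is dirty" side of carbon awareness concrete, here is a minimal Python sketch of a service choosing its quality of service from the current carbon intensity of its grid. The `ServiceLevel` tiers, the threshold values, and the assumption that you already have a carbon-intensity reading in gCO2e/kWh are all hypothetical; where that reading comes from, and what counts as "high" on your grid, are up to you.

```python
from enum import Enum

class ServiceLevel(Enum):
    FULL = "full"          # everything on, e.g. high-quality media
    REDUCED = "reduced"    # non-essential features switched off
    MINIMAL = "minimal"    # core functionality only

# Hypothetical thresholds in grams of CO2e per kWh; tune them for your grid.
LOW_CARBON_MAX = 200.0
MEDIUM_CARBON_MAX = 500.0

def choose_service_level(carbon_intensity: float) -> ServiceLevel:
    """Map the grid's current carbon intensity (gCO2e/kWh) to a quality of service."""
    if carbon_intensity < 0:
        raise ValueError("carbon intensity cannot be negative")
    if carbon_intensity <= LOW_CARBON_MAX:
        return ServiceLevel.FULL
    if carbon_intensity <= MEDIUM_CARBON_MAX:
        return ServiceLevel.REDUCED
    return ServiceLevel.MINIMAL

# A windless night on a coal-heavy grid versus a sunny afternoon (made-up readings).
print(choose_service_level(750.0))  # ServiceLevel.MINIMAL
print(choose_service_level(120.0))  # ServiceLevel.FULL
```

The same switch could just as well lower image quality instead of disabling features; the point is that the decision is driven by the grid, not only by load.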

Now that we know what green software is, how do we go about creating it?

What We Reckon

This book is made up of 13 technical chapters:

- Introduction to Green Software
- Building Blocks
- Code Efficiency
- Operational Efficiency
- Carbon Awareness
- Hardware Efficiency
- Networking
- Greener Machine Learning, AI, and LLMs
- Measurement
- Monitoring
- Co-Benefits
- The Green Software Maturity Matrix
- Where Do We Go from Here?

We’ll now talk you through each of the upcoming chapters and give you the key takeaways.

Chapter 2: Building Blocks

Before we dive in, there is one thing everyone in the tech industry knows is essential to grok about any new problem: the jargon.

In Chapter 2, “Building Blocks”, we explain what all the climate talk actually means, starting with carbon. Throughout this book, we use carbon as a shorthand to describe all greenhouse gases, which are any gases in the atmosphere that trap heat. Most are naturally occurring, but their overabundance from human activities means we’re having to fight global temperature rises to avoid those pesky catastrophic climate disasters.

Next, we will cover some knowledge you should have in your back pocket, ready to convince friends and colleagues about the importance of building climate solutions. We’ll review the difference between climate and weather, how global warming contrasts with climate change, and how the international community monitors it all. We’ll also look at how the Greenhouse Gas Protocol’s emission scopes (scope 1, 2, and 3) apply to software systems.

The next building block we will cover is electricity. Most of us studied electricity at school, and if you still remember that far back, you can skip this section. For the rest of us who need a refresher (like the authors), we will review the basic concepts of electricity and energy and how they relate to software. We will also briefly review energy production and compare and contrast high- and low-carbon energy sources.

The final building block we will go over is hardware. You’re probably wondering why you (let’s say a web developer) need to learn anything about hardware. TL;DR: you need to.

Hardware is essential to all things software, and all hardware has carbon associated with it, even before it starts running your application. Embedded carbon, often referred to as embodied carbon, is the carbon emitted during the creation and eventual destruction of a piece of equipment.

In 2019, Apple reported that 85% of the lifetime carbon emissions associated with an iPhone occur during the production and disposal phases of the device. This is a figure we must all bear in mind when designing, developing, and deploying software. We need to make this carbon investment work harder, so user device longevity matters.
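A back-of-the-envelope calculation shows why longevity matters. The sketch below takes the 85% production-and-disposal share from the Apple figure above and applies it to a hypothetical phone whose total footprint over a three-year life is 70 kg CO2e. The 70 kg figure, the three-year baseline, and the assumption that use-phase emissions stay constant each year are illustrative, not Apple's data.

```python
def annual_footprint_kg(embodied_kg: float, use_kg_per_year: float, lifetime_years: float) -> float:
    """Average yearly emissions: embodied carbon spread over the device's life plus yearly use."""
    if lifetime_years <= 0:
        raise ValueError("lifetime must be positive")
    return embodied_kg / lifetime_years + use_kg_per_year

# Hypothetical phone: 70 kg CO2e over a 3-year life, 85% of it from production and disposal.
TOTAL_KG = 70.0
BASELINE_YEARS = 3
EMBODIED_SHARE = 0.85

embodied_kg = TOTAL_KG * EMBODIED_SHARE                             # about 59.5 kg
use_kg_per_year = TOTAL_KG * (1 - EMBODIED_SHARE) / BASELINE_YEARS  # about 3.5 kg per year

for years in (3, 6):
    per_year = annual_footprint_kg(embodied_kg, use_kg_per_year, years)
    print(f"{years}-year life: {per_year:.1f} kg CO2e per year")
```

In this example, doubling the life from three to six years cuts the average yearly footprint from roughly 23 kg to roughly 13 kg, because the dominant embodied share is spread over twice as many years.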

But what about other devices, like servers? What should we be aware of when deploying an application to an on-premises (on-prem) data center or the cloud? The good news is that in professionally run data centers, server hardware is more tightly managed and works far harder than user devices. As data center users, it’s electricity we need to worry about.

Chapter 3: Code Efficiency

In Chapter 3, “Code Efficiency”, we cover how the electricity an application requires to run is approximately a function of how much CPU/GPU it uses or indirectly causes to be used. Reducing a piece of software’s processing requirements is thus key to reducing its energy use and carbon emissions. One way we can do this is by improving its code efficiency.

However, the question we need to ask is, does code efficiency actually move the green dial or is it a painful distraction? In fact, is it the most controversial concept in green software?

Code Efficiency Is Tricky

The problem with code efficiency is that although cutting CPU/GPU use can potentially have a huge impact on carbon emissions and is well understood—the same techniques have been used for many decades in high-performance computing (HPC)—it is high effort for engineers.

You might get a hundredfold reduction in carbon emissions by switching, for example, from Python to a much more efficient language like Rust, but there will be a price to pay in productivity.

Developers really do deliver much more quickly when they are using lower machine-efficiency languages like Python. As a result, writing efficient code is unattractive to businesses, who want to devote their developer time to building new features, not writing more streamlined code. That can make it an impossible sell.

Luckily, there are code efficiency options that are aligned with business goals for speed. These include:

- Using managed services
- Using better tools, libraries, or platforms
- Just being leaner and doing less

Using managed services

Later in this book, we will discuss the real operational efficiency advantages that come from managed cloud and online services. Such services might share their platform and resources among millions of users, and they can achieve extremely high hardware and energy utilization. However, we suspect their biggest potential win comes from code efficiency.

The commercial premise behind a managed service is simple: a business that has the scale and demand to justify it puts in the huge investment required to make it operationally and code efficient. Irritatingly, that company then makes loads of money off the service because it is cheaper to operate. However, you get code efficiency without having to invest in it yourself.

Let’s face it: that’s an attractive deal.

Choosing the right tools, libraries, and platforms

The most efficient on-premises alternative to a managed service should be a well-optimized open source library or product. The trouble is that most haven’t been prioritizing energy efficiency up until now. As open source consumers, we need to start demanding that they do.

Doing less

The most efficient code is no code at all.

If you don’t fancy bolstering a hyperscaler’s bank balance by using one of its preoptimized services, an attractive alternative is to do less. According to Adrian Cockcroft, ex-VP of Sustainable Architecture at AWS, “The biggest win is often changing requirements or SLAs [service-level agreements]. Reduce retention time for log files. Relax overspecified goals.”1

The best time to spot unnecessary work is early in the product design process, because once you have promised an SLA or feature to anyone, it’s harder to roll back. Sometimes, overspecified goals (regulations that have to be complied with, for example) are unavoidable, but often they are internally driven rather than a response to external pressures or genuine user needs. If that is the case in your organization, ask your product manager to drop them until you know you require them.

What if you really can’t buy it or drop it and have to build it?

If you really have to do it yourself, there are multiple options for CPU-heavy jobs that must run at times of high carbon intensity:

- Replace inefficient custom code with efficient services or libraries.

- Replace inefficient services or libraries with better ones.

- Rewrite the code to use a more lightweight platform, framework, or language. Moving from Python to Rust has been known to result in a hundredfold cut in CPU requirements, for example, and Rust has security advantages over the more classic code efficiency options of C or C++.

- Look at language alternatives like Cython or the newer Mojo, which aim to combine C-like speed with better usability.

- Consider pushing work to client devices where the local battery has some hope of having been renewably charged. (However, this is nuanced. If it involves transmitting a load of extra data, or it encourages the user to upgrade their device, or the work is something your data center has the hardware to handle more efficiently, then pushing it to a device may be worse. As always, the design requires thought and probably product management involvement.)

- Make sure your data storage policies are frugal. Databases should be optimized (data stored should be minimized, queries tuned).

- Avoid excessive use of layers. For example, using some service meshes can be like mining Bitcoin on your servers.

Consider the Context

Delivering energy-efficient software is a lot of work, so focus your attention on applications that matter because they have a lot of usage and have to be always on.

“Scale matters,” says climate campaigner Paul Johnston. “If you’re building a high-scale cloud service, then squeeze everything you can out of your programming language. If you’re building an internal tool used by four people and the office dog, unless it’s going to be utilizing 10 MWh of electricity, it is irrelevant.”2

Green by Design

Software systems can be designed in ways that are more carbon aware or energy efficient or hardware efficient, and the impact of better design often swamps the effect of how they are coded. However, none of this happens for free.

Being green means constantly thinking about and revisiting your design, rather than just letting it evolve. So, it’s time to dust off that whiteboard and dig out that green pen, which luckily is probably the only one with any ink left.

Chapter 4: Operational Efficiency

We cover operational efficiency in Chapter 4, “Operational Efficiency”, which is arguably the most important chapter of the book.

Operational efficiency is about achieving the same output with fewer machines and resources. This can potentially cut carbon emissions five- to tenfold and is comparatively straightforward because, as we will discuss later, services and tools already exist to support operational efficiency, particularly in the cloud.

However, don’t feel left out if you are hosting on prem. Many of the techniques, such as high machine utilization, good ops practice, and multitenancy, can work for you too.

High Machine Utilization

The main operational way to reduce emissions per unit of useful work is by cutting down on idleness. We need to run systems at higher utilization for processors, memory, disk space, and networking. This is also called operating at high server density, and it improves both energy and hardware efficiency.

A good example of it can be seen in the work Google has done over the past 15 years to improve its internal system utilization. Using job encapsulation via containerization, together with detailed task labeling and a tool called a cluster scheduler, Google tightly packs its various workloads onto servers like pieces in a game of Tetris. The result is that Google uses far less hardware and power (possibly less than a third of what it would otherwise).
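Borg's real placement logic is far more sophisticated, but the core packing idea can be illustrated with a classic bin-packing heuristic, first-fit decreasing. This is a swapped-in textbook technique, not Google's algorithm. Jobs are reduced to a single CPU-core demand, and the 16-core server size and job sizes are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass
class Server:
    capacity: int                                   # CPU cores available
    jobs: list[int] = field(default_factory=list)  # core demand of each placed job

    @property
    def used(self) -> int:
        return sum(self.jobs)

    def fits(self, demand: int) -> bool:
        return self.used + demand <= self.capacity

def pack_first_fit_decreasing(demands: list[int], capacity: int) -> list[Server]:
    """Place the biggest jobs first, each on the first server with room, opening servers only when needed."""
    servers: list[Server] = []
    for demand in sorted(demands, reverse=True):
        if demand > capacity:
            raise ValueError(f"a job needing {demand} cores cannot fit on a {capacity}-core server")
        target = next((s for s in servers if s.fits(demand)), None)
        if target is None:
            target = Server(capacity)
            servers.append(target)
        target.jobs.append(demand)
    return servers

jobs = [8, 6, 5, 4, 3, 2, 2, 1, 1]  # hypothetical core demands
packed = pack_first_fit_decreasing(jobs, capacity=16)
for i, server in enumerate(packed):
    print(f"server {i}: jobs={server.jobs} utilization={server.used / server.capacity:.0%}")
```

Here nine jobs that might each get a dedicated machine in a naive setup end up on two fully used servers. Real schedulers also have to weigh memory, priorities, and failure domains, which is why they need detailed knowledge of each job.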

Note

You can read all about Google’s work in a fascinating paper published a decade ago. The authors gave the cluster scheduler a great name too: Borg. Reading the Google Borg paper was what changed Anne’s life and sent her off on the whole operationally efficient tech journey, so be warned.

BTW: Borg eventually spawned Kubernetes.

Multitenancy

All the public cloud providers invest heavily in operational efficiency. As a result, the best sustainable step you can take today may be to move your systems to the cloud and use their services.

Their high level of multitenancy, or machine sharing between multiple users, is what enables the cloud’s machine utilization rates to significantly outstrip what is achievable on prem. Potentially, they get >65% utilization versus 10–20% average on prem (although if you just “lift and shift” onto dedicated cloud servers, you won’t get much of this benefit).
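The utilization gap translates directly into hardware. As a rough illustration, the sketch below asks how many 32-core servers a workload needing 1,000 cores of real work would occupy at 15% average utilization (inside the on-prem range quoted above) versus 65%. The workload size, server size, and the assumption that utilization is the only difference are hypothetical simplifications.

```python
import math

def servers_needed(load_cores: float, cores_per_server: int, utilization: float) -> int:
    """Servers required when each one averages the given fraction of its capacity."""
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be between 0 (exclusive) and 1")
    return math.ceil(load_cores / (cores_per_server * utilization))

on_prem = servers_needed(1000, 32, 0.15)
cloud = servers_needed(1000, 32, 0.65)
print(f"on-prem: {on_prem} servers, cloud: {cloud} servers, ratio {on_prem / cloud:.1f}x")
```

In this example the same work needs roughly four times as many machines at 15% utilization, and every one of those machines carries embodied carbon as well as drawing power while it idles.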

The hyperscalers achieve this by packing their diverse workloads onto large servers using their own smart orchestrators and schedulers if they can (i.e., if you haven’t hamstrung them by specifying dedicated servers).

Note that if you are using a well-designed microservices architecture, then even on-prem utilization rates can be significantly increased using an off-the-shelf cluster scheduler, for example, the Kubernetes scheduler or Nomad from HashiCorp.

The cluster schedulers that optimize for machine utilization require encapsulated jobs (usually jobs wrapped in a VM, a container, or a serverless function), which run on top of an orchestration layer that can start or stop them or move them from machine to machine.

To pack well, it is also vital that orchestrators and schedulers know enough to make smart placement decisions for jobs. The more a scheduler knows about the jobs it is scheduling, the better it can use resources. On clouds, you can communicate the characteristics of your workloads by picking the right instance types, and you should avoid overspecifying your resource or availability requirements (e.g., by asking for a dedicated instance when a burstable one would work).

Highly multitenant serverless solutions, like AWS Lambda, Azure Functions, or Google Cloud Functions, can also be helpful in minimizing hardware footprint. Serverless solutions also provide other operational efficiency capabilities like autoscaling (having hardware resources come online only when they are required) and automatic rightsizing.

Doing this kind of clever operational stuff on your own on-prem system is possible, but it comes with a monetary cost in terms of engineering effort to achieve the same result. For cloud providers, this is their primary business and worth the time and money. Is the same true for you?

Good Ops Practice

Simpler examples of operational efficiency include not overprovisioning systems (e.g., manually downsizing machines that are larger than necessary), or using autoscaling to avoid provisioning them before they are required.

Simpler still, close down applications and services that don’t do anything anymore. Sustainability expert Holly Cummins, engineer at Red Hat, refers to them as “zombie workloads”. Don’t let them hang around “just in case.”

If you can’t be bothered to automate starting and stopping a server, that is a sign it isn’t valuable anymore. Unmaintained, zombie workloads are bad for the environment as well as being a security risk. Shut them down.
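A simple audit for zombie workloads can start from metrics you probably already collect. The sketch below flags services whose average CPU use and request count over the audit window both fall below thresholds. The `ServiceStats` fields, the thresholds, and the sample inventory are hypothetical, and a flagged service should be confirmed with its owners (if it still has any) before it is shut down.

```python
from dataclasses import dataclass

@dataclass
class ServiceStats:
    name: str
    avg_cpu_percent: float  # average CPU utilization over the audit window
    requests: int           # requests served over the same window

def find_zombie_candidates(inventory: list[ServiceStats],
                           max_cpu_percent: float = 2.0,
                           max_requests: int = 0) -> list[str]:
    """Return names of services that are nearly idle and have served (almost) no traffic."""
    return [
        s.name
        for s in inventory
        if s.avg_cpu_percent <= max_cpu_percent and s.requests <= max_requests
    ]

inventory = [
    ServiceStats("checkout-api", avg_cpu_percent=41.0, requests=1_200_000),
    ServiceStats("legacy-report-job", avg_cpu_percent=0.4, requests=0),
    ServiceStats("old-demo-env", avg_cpu_percent=1.1, requests=0),
    ServiceStats("nightly-backup", avg_cpu_percent=1.5, requests=30),
]
print(find_zombie_candidates(inventory))
```

Note that `nightly-backup` is not flagged despite its low CPU, because it still does work; low utilization on its own points to rightsizing rather than shutdown.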

Green Operational Tools and Techniques

Even if you run your workloads on a cloud (i.e., operated by someone else), there are still operational efficiency configurations within your control:

- Spot instances on AWS or Azure (preemptible instances on GCP) are a vital part of how the public clouds achieve their high utilization. They give orchestrators and schedulers discretion over when jobs are run, which helps with packing them onto machines. In the immediate term, using spot instances everywhere you can will make your systems more hardware efficient, more electricity efficient, and a lot cheaper. In the longer term, it will help your systems be more carbon aware because spot instances will allow a cloud provider to time-shift workloads to when the electricity on the local grid is less carbon intensive (as Google describes in its recent paper on carbon-aware data center operations).

- Overprovisioning reduces hardware and energy efficiency. Machines can be rightsized using, for example, AWS Cost Explorer or Azure’s cost analysis, and a simple audit can often identify zombie services, which need to be shut off.

- Excessive redundancy can also decrease hardware efficiency. Often organizations demand duplication across regions for hot failover, when a cold failover plus GitOps would be good enough.

- Autoscaling minimizes the number of machines needed to run a system resiliently. It can be linked to CPU usage or network traffic levels, and it can even be configured predictively. Remember to autoscale down as well as up, or it’s only useful the first time! AWS offers an excellent primer on microservices-driven autoscalability. However, increasing architectural complexity by going overboard on the number of microservices can result in overprovisioning. There’s a balance here, so try to keep it simple. Read Building Microservices by Sam Newman for best practice.

- Always-on or dedicated instance types are not green. Choosing instance types that give the host more flexibility and, critically, more information about your workload will increase machine utilization and cut carbon emissions and costs. For example, AWS T3 instances, Azure B-series, and Google shared-core machine types offer interesting bursting capabilities, which are potentially an easier alternative to autoscaling.
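Autoscaling on CPU usage usually boils down to a target-tracking rule. The sketch below uses the proportional formula documented for the Kubernetes Horizontal Pod Autoscaler, desired = ceil(current_replicas * current_metric / target_metric), with a 10% tolerance band (the HPA's default) to avoid flapping. The function name, the replica limits, and the CPU figures are hypothetical, and real autoscalers add stabilization windows and other safeguards omitted here. Note that the same rule scales down as readily as it scales up.

```python
import math

def desired_replicas(current_replicas: int,
                     current_cpu_percent: float,
                     target_cpu_percent: float,
                     min_replicas: int = 1,
                     max_replicas: int = 20,
                     tolerance: float = 0.1) -> int:
    """Proportional target tracking: scale replicas so average CPU moves toward the target."""
    if target_cpu_percent <= 0:
        raise ValueError("target must be positive")
    ratio = current_cpu_percent / target_cpu_percent
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: leave things alone
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds; the lower bound is what lets us shrink safely.
    return max(min_replicas, min(max_replicas, desired))

print(desired_replicas(4, 90.0, 60.0))  # 6: busy, scale up
print(desired_replicas(8, 15.0, 60.0))  # 2: quiet, scale down
print(desired_replicas(4, 63.0, 60.0))  # 4: within tolerance, no change
```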

It is worth noting that architectures that recognize low-priority and/or delayable tasks are easier to operate at high machine utilization. In the future, the same architectures will be better at carbon awareness. These include serverless, microservice, and other asynchronous (event-driven) architectures.

According to the green tech evangelist Paul Johnston, “Always on is unsustainable.” This may be the death knell for some heavyweight legacy monoliths.

Reporting Tools

Hosting cost has always been somewhat of a proxy measure for carbon emissions. It is likely to become even more closely correlated in the future as the cloud becomes increasingly commoditized, electricity remains a key underlying cost, and dirty electricity becomes more expensive through dynamic pricing. More targeted carbon footprint reporting tools now exist too. They are rudimentary but better than nothing, and if they get used, they’ll get improved. So use them.

Chapter 5: Carbon Awareness

In Chapter 5, “Carbon Awareness”, we will cover the markers of a strong design from a carbon awareness perspective:

- Little or nothing is “always on.”

- Jobs that are not time critical (for example, machine learning or batch jobs) are split out and computed asynchronously so they can be run at times when the carbon intensity of electricity on the local grid is low (for example, when the sun is shining and there isn’t already heavy demand on the grid). This technique is often described as demand shifting, and, as we mentioned, spot or preemptible instance types are particularly amenable to it.

- The offerings of your services change based on the carbon intensity of the local grid. This is called demand shaping. For example, at times of low-carbon electricity generation, full functionality is offered, but in times of high-carbon power, your service is gracefully degraded. Many applications do something analogous to cope with bandwidth availability fluctuations, for example, by temporarily stepping down image quality.

- Genuinely time-critical, always-on tasks that will inevitably need to draw on high–carbon intensity electricity are written efficiently so as to use as little of it as possible.

- Jobs are not run at higher urgency than they need, so if they can wait for cleaner electricity, they will.

- Where possible, calculations are pushed to end-user devices and the edge to minimize network traffic, reduce the need to run on-demand processes in data centers, and take full advantage of the energy stored in device batteries. There are other benefits to this too: P2P, offline-first applications help remove the need for centralized services with a high percentage of uptime, increase application resilience to network issues, and decrease latency.

- Algorithmic precalculation and precaching are used: CPU- or GPU-intensive calculation tasks are done and saved in advance of need. Sometimes that may seem inefficient (calculations may be thrown away or superseded before they are used), but as well as speeding up response times, smart precalculation can increase hardware efficiency and help shift work to times when electricity is less carbon intensive.
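Demand shifting can be as simple as choosing when a deferrable job starts. This sketch scans an hourly carbon-intensity forecast and picks the contiguous window with the lowest average intensity that still finishes before the job's deadline. The forecast values, the hourly slot size, and the function name are hypothetical; in practice the forecast would come from whichever grid-data source you use.

```python
from datetime import datetime, timedelta

def best_start_time(forecast: list[tuple[datetime, float]],
                    duration_slots: int,
                    deadline: datetime,
                    slot: timedelta = timedelta(hours=1)) -> datetime:
    """Pick the start of the lowest-mean-intensity window that ends by the deadline.

    `forecast` holds (slot start, gCO2e/kWh) pairs, sorted by time and `slot` apart.
    """
    best_start, best_mean = None, float("inf")
    for i in range(len(forecast) - duration_slots + 1):
        start = forecast[i][0]
        if start + duration_slots * slot > deadline:
            break  # later windows finish even later
        window = forecast[i:i + duration_slots]
        mean = sum(intensity for _, intensity in window) / duration_slots
        if mean < best_mean:
            best_start, best_mean = start, mean
    if best_start is None:
        raise ValueError("no window finishes before the deadline")
    return best_start

midnight = datetime(2024, 6, 1, 0, 0)
readings = [450, 420, 380, 300, 210, 180, 190, 260]  # made-up hourly values
forecast = [(midnight + timedelta(hours=h), float(v)) for h, v in enumerate(readings)]

print(best_start_time(forecast, duration_slots=2, deadline=midnight + timedelta(hours=8)))
```

Giving the job a later deadline widens the search: with a 06:00 deadline this two-hour job would start at 04:00, while the 08:00 deadline lets it wait until 05:00, when the forecast is cleaner.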

These markers often rely on a microservices or a distributed systems architecture, but that isn’t 100% required.

Chapter 6: Hardware Efficiency

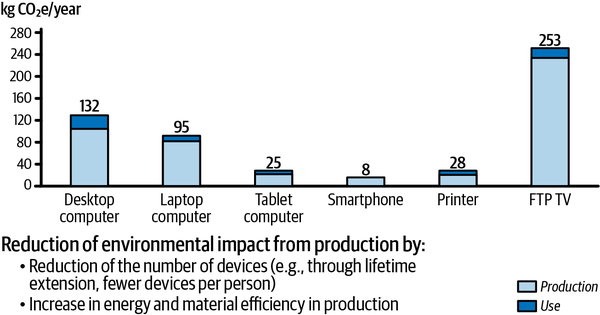

In Chapter 6, “Hardware Efficiency”, we observe that for software running on user devices rather than servers, the carbon emitted during the production of those devices massively outstrips what’s emitted as a result of their use (see Figure 1-2).

Figure 1-2. Direct effects of CO2 emissions per ICT end-user device (based on University of Zurich data)

Note

No, none of us knows what an FTP TV is either. We’re guessing it’s a smart TV. This device’s greenhouse gas emissions seem more problematic than we would have imagined.

Therefore, in the future, user devices in a carbon-zero world will need to last a lot longer. This will be driven in part by physical design and manufacture but also by avoiding software-induced obsolescence caused by operating systems and applications that stop providing security patches or depend on new hardware or features.

As time goes on, Moore’s law (which posits that the number of transistors on a microchip doubles every two years) and other forms of progress mean that devices are always getting new features, which developers want to exploit in their new app releases. Mobile phones, for example, have gotten faster, evolved to have dedicated GPU and machine learning chips, and acquired more memory. Apps take advantage of this progress, and that is fine. However, it is vital that those apps also continue to work on older phones without the new features so they don’t contribute to avoidable, software-driven obsolescence.

So that users aren’t encouraged to throw away working technology, it’s imperative that developers create new software that is backward compatible with existing devices. Phone OSes do provide some information and tooling to help with this, but it usually requires action from developers.

At the moment, the large company that is best at keeping software from killing devices could be Apple. iOS 15 supports phones that are up to six years old. However, all providers need to improve. Device life expectancies must be much longer than even six years. Fairphone, a more niche phone vendor, already provides OS security patches for eight years and has set its sights on ten, which demonstrates that it can be done.

All current phones are beaten on longevity by most game consoles. For example, the Xbox One was designed to last 10 years, and that commitment appears to be holding up. The business model for game consoles does not include as much planned disposability for the product as the business model for phones. This demonstrates that devices can last longer if manufacturers choose. We believe that at least 10 years should be the life expectancy of all new devices from now on.

Chapter 7: Networking

In Chapter 7, “Networking”, we talk about the impact of networking and the internet on carbon emissions and discuss how products like videoconferencing services, which have to handle fluctuating bandwidth, provide useful real-world examples of demand shifting and demand shaping.

Networking tools and equipment like fiber-optic cables, routers, and switches have always had minimizing watts per transmitted bit as a fundamental target. Compared to the rest of the industry, networking is thus already quite optimized for energy use, and it accounts for only a small chunk of the electricity bill and carbon emissions of a modern data center.

However, there is still a load of room for improvement in the way most applications use those networks. For them, watts/bit was unlikely to have been a design goal.

There is a great deal the budding field of green software can learn from telecoms.

Chapter 8: Greener Machine Learning, AI, and LLMs

In Chapter 8, “Greener Machine Learning, AI, and LLMs”, we tackle the new world of AI and machine learning (ML), which is generating a huge surge in CPU- and GPU-intensive work and sparking a massive expansion in data center capacity. As a result, we need strategies for green AI.

We discuss techniques such as training ML models faster and more efficiently by shrinking the model size, using federated learning, pruning, compression, distillation, and quantization.
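Quantization is the easiest of these techniques to show in a few lines. The sketch below applies symmetric, per-tensor linear quantization to a handful of float weights, storing them as integers in the int8 range plus one shared scale factor. It is a toy in pure Python for illustration only; ML frameworks provide their own quantization tooling, and the weights here are made up.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale

def dequantize(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from their integer codes."""
    return [q * scale for q in quantized]

weights = [0.503, -1.27, 0.0, 0.999]  # hypothetical layer weights
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
print(codes)
print([round(w, 3) for w in restored])
```

Stored as int8, each weight takes one byte instead of the four bytes a float32 needs, at the cost of a rounding error of at most half the scale factor per weight.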

ML also benefits from fast progress in dedicated hardware and chips, and we should try to use the hardware best suited for the training job in hand.

Most importantly, ML model training is a great example of a job that is not latency sensitive. It does not need to run on high–carbon intensity electricity.

Chapter 9: Measurement

According to Chris Adams of the Green Web Foundation, “The problem hasn’t only been developers not wanting to be carbon efficient—it’s been them bumping up against a lack of data, particularly from the big cloud providers, to see what is actually effective. So, the modeling often ends up being based on assumptions.”3

In the short term, making a best guess is better than nothing. Generic moves such as shifting as much as possible into multitenant environments and making time-critical code less CPU intensive are effective. Longer term, however, developers need the right observability and monitoring tools to iterate on energy use.

Chapter 10: Monitoring

It is still very early days for emissions monitoring from software systems, but more tools will be coming along, and when they do, it is vital we learn from all the progress the tech industry has made in effective system monitoring over the past decade and apply it to being green.

In Chapter 10, “Monitoring”, we discuss site reliability engineering (SRE) and how its practices might be applied to budgeting your carbon emissions.
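To make the SRE analogy concrete: an error budget becomes a carbon budget, and the familiar burn-rate idea tells you whether you are emitting faster than planned. This is a minimal sketch; the `CarbonBudget` class, the 300 kg monthly budget, and the 1.0 alerting threshold are hypothetical, and the emissions figure would come from whatever measurement tooling you have.

```python
from dataclasses import dataclass

@dataclass
class CarbonBudget:
    budget_kg: float   # emissions allowed over the whole period
    period_days: int

    def burn_rate(self, emitted_kg: float, elapsed_days: float) -> float:
        """Ratio of actual to on-plan emissions so far; above 1.0 means over budget pace."""
        if not 0 < elapsed_days <= self.period_days:
            raise ValueError("elapsed_days must fall within the budget period")
        on_plan_kg = self.budget_kg * elapsed_days / self.period_days
        return emitted_kg / on_plan_kg

    def remaining_kg(self, emitted_kg: float) -> float:
        return self.budget_kg - emitted_kg

budget = CarbonBudget(budget_kg=300.0, period_days=30)
rate = budget.burn_rate(emitted_kg=150.0, elapsed_days=10)
if rate > 1.0:
    print(f"Burning carbon budget {rate:.1f}x too fast; {budget.remaining_kg(150.0):.0f} kg left")
```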

Chapter 11: Co-Benefits

In Chapter 11, “Co-Benefits”, we talk about the knock-on benefits of adopting a green software approach, which include cost savings, increased security, and better resilience.

While we wait for better reporting tools, cost is a useful proxy measurement of carbon emissions. There is thus overlap between carbon tracking and the new practice of cloud financial operations, or FinOps, which is a way for teams to manage their hosting costs where everyone (via cross-functional teams in IT, finance, product, etc.) takes ownership of their expenditure, supported by a central best-practices group.

Nevertheless, there remains a significant benefit in using carbon footprint tools over FinOps ones to measure carbon costs. At some point—hopefully ASAP—those tools will take into account the carbon load of the electricity actually used to power your servers. At the moment, you often pay the same to host in regions where the electricity is low carbon like France (nuclear) or Scandinavia (hydro, wind) as you do in regions with high-carbon power like Germany. However, your carbon emissions will be lower in the former locations and higher in the latter. A carbon footprint tool will reflect that. A FinOps one will not.
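The difference between the two kinds of tool comes down to one multiplication: operational emissions are the energy used times the carbon intensity of the grid supplying it, while the bill depends on energy and price. In the sketch below, the energy use, the price, and both intensities are illustrative placeholders, not measured values for France, Germany, or any real cloud region.

```python
def emissions_kg(energy_kwh: float, intensity_g_per_kwh: float) -> float:
    """Operational emissions: energy times grid carbon intensity, converted from grams to kg."""
    return energy_kwh * intensity_g_per_kwh / 1000

def cost(energy_kwh: float, price_per_kwh: float) -> float:
    """What a cost-only (FinOps) view sees."""
    return energy_kwh * price_per_kwh

ENERGY_KWH = 2_000.0   # same workload in both regions (hypothetical)
PRICE_PER_KWH = 0.20   # same price in both regions (hypothetical)
REGIONS = {"low-carbon region": 50.0, "high-carbon region": 400.0}  # gCO2e/kWh, illustrative

for region, intensity in REGIONS.items():
    print(f"{region}: cost {cost(ENERGY_KWH, PRICE_PER_KWH):.2f}, "
          f"emissions {emissions_kg(ENERGY_KWH, intensity):.0f} kg CO2e")
```

A cost view sees two identical bills; a carbon view sees an eightfold difference.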

Chapter 12: The Green Software Maturity Matrix

In Chapter 12, “The Green Software Maturity Matrix”, we discuss the Green Software Maturity Matrix (GSMM) project from the Green Software Foundation. Most of us need to climb from level 1 on the matrix (barely started on efficient and demand-shapeable and shiftable systems) to level 5 (systems that can run 24/7 on carbon-free electricity).

The GSMM asserts that we should start with operational efficiency improvements and save code efficiency until the end, when we’ll hopefully be able to buy it off the shelf. In fact, the GSMM is remarkably aligned with our own suggestions.

Chapter 13: Where Do We Go from Here?

In the last chapter, we will set you a challenge. We want you to halve your hosting (and thus carbon) bills within the next 6–12 months, and we’ll give you some suggestions on how you might go about it. It is a nontrivial goal, but it is achievable and a necessary first step in moving up the Green Software Maturity Matrix.

Finally, we will tell you what the three of us learned about green software from writing this book: it is not a niche. It is what all software is going to have to be from now on.

Green software must therefore fulfill all our needs. It has to be productive for developers, and resilient and reliable and secure and performant and scalable and cheap. At the start of this chapter, we said green software was software that was carbon efficient and aware, but that is only part of the story. Green software has to meet all our other needs as well as being carbon aware and efficient. But this is doable. It is already happening.

The story of green software is not all gloom followed by doom. In our judgment, going green is the most interesting and challenging thing going on in tech right now. It will shape everything, and it involves everyone. It is important and it is solvable.

So, good luck and have fun changing the world.
