Chapter 4. Operational Efficiency

Resistance is futile!

The Borg

In reality, resistance isn’t futile. It’s much worse than that.

The Battle Against the Machines

One day, superconducting servers running in supercool(ed) data centers will eliminate resistance, and our DCs will operate on a fraction of the electricity they do now. Perhaps our future artificial general intelligence (AGI) overlords are already working on that. Unfortunately, however, we puny humans can’t wait.

Today, as we power machines in data centers, they heat up. That energy—too often generated at significant climate cost—is then lost forever. Battling resistance is what folks are doing when they work to improve power usage effectiveness (PUE) in DCs (as we discussed in Chapter 2). It is also the motive behind the concept of operational efficiency, which is what we are going to talk about in this chapter.

Note

Those superconducting DCs might be in space because that could solve the cold issue (superconductors need to be very, very cold—space cold, not pop-on-a-vest cold). Off-planet superconducting DCs are a century off, though. Too late to solve our immediate problems.

For the past three decades, we have fought the waste of electricity in DCs using developments in CPU design and other improvements in hardware efficiency. These have allowed developers to achieve the same functional output with progressively fewer machines and less power. Those Moore’s law upgrades, however, are no longer enough for us to rely on. Moore’s law is slowing down. It might even stop (although it probably won’t).

Fortunately, however, hardware efficiency is not the only weapon we have to battle resistance. Operational efficiency is the way green DevOps or SRE folk like Sarah reduce the waste of electricity in their data centers. But what is operational efficiency?

Tip

Note also that the heat released due to electrical resistance from every electrical device in the world makes no significant contribution to global warming. It’s our sun combined with the physical properties of greenhouse gases doing the actual heating in climate change. It’s also the sun that ultimately provides the heat delivered by heat pumps, which is how they achieve >100% efficiency. Electricity merely helps heat pumps get their hands on that solar energy.

In the far future, if we achieve almost unlimited power creation from fusion or space-based solar arrays, we might have to worry about direct warming. However, that’s a problem for another century. It will be an excellent one to get to. It’ll mean humanity survived this round.

Hot Stuff

Operational efficiency is about achieving the same functional result for the same application or service, including performance and resilience, using fewer hardware resources like servers, disks, and CPUs. That means less electricity is required and gets dissipated as heat, less cooling is needed to handle that heat, and less carbon is emitted as part of the creation and disposal of the equipment.

Operational efficiency may not be the most glamorous option. Neither might it have the biggest theoretical potential for reducing energy waste. However, in this chapter we’re going to try to convince you it is a practical and achievable step that almost everyone can take to build greener software. Not only that, but we’ll argue that in many respects, it kicks the butt of the alternatives.

Techniques

As we discussed in the introduction, AWS reckons that good operational efficiency can cut carbon emissions from systems fivefold to tenfold. That’s nothing to sniff at.

“Hang on! Didn’t you say that code efficiency might cut them a hundredfold? That’s 10 times better!”

Indeed. However, the trouble with code efficiency is it can run smack up against something most businesses care a great deal about: developer productivity. And they are correct to care about that.

We agree that operational efficiency is less effective than code efficiency, but it has a significant advantage for most enterprises. For comparatively low effort and using off-the-shelf tools, you can get big improvements in it. It’s much lower-hanging fruit, and it’s where most of us need to start.

Code efficiency is amazing, but its downside is that it is also highly effortful and too often highly customized (hopefully, that is something that’s being addressed, but we’re not there yet). You don’t want to do it unless you are going to have a lot of users. Even then, you need to experiment first to understand your requirements.

Note

You might notice that 10x operational improvements multiplied by 100x from code efficiency get us the 1000x we actually want. Within five years we’ll need both through commodity tools and services, i.e., green platforms.

In contrast, better operational efficiency is already available from standardized libraries and commodity services. So in this chapter we can include widely applicable examples of good practice, which we couldn’t give you in the previous one.

But before we start doing that, let’s step back. When we talk about modern operational efficiency, what high-level concepts are we leaning on?

We reckon it boils down to a single fundamental notion: machine utilization.

Machine Utilization

Machine utilization, server density, or bin packing. There are loads of names for the idea, and we’ve probably missed some, but the motive behind them all is the same. Machine utilization is about cramming work onto a single machine or a cluster in such a way as to maximize the use of physical resources like CPU, memory, network bandwidth, disk I/O, and power.

Great machine utilization is at least as fundamental to being green as code efficiency.

For example, let’s say you rewrite your application in C and cut its CPU requirements by 99%. Hurray! That was painful, and it took months. Hypothetically, you now run it on exactly the same server you did before. Unfortunately, all that rewriting effort wouldn’t have saved you that much electricity. As we will discuss in Chapter 6, a partially used machine consumes a large proportion of the electricity of a fully utilized one, and the embodied carbon hit from the hardware is the same.
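To see why, here is a rough sketch using a common simplification: a linear server power model, where a machine draws a fixed idle power plus a share of its dynamic range proportional to CPU utilization. The 100 W idle and 200 W maximum figures are hypothetical. Real servers vary, and Chapter 6 covers the subject properly.

```python
def server_power_watts(utilization: float, idle_watts: float, max_watts: float) -> float:
    """Linear power model: idle draw plus a proportional share of the dynamic range.

    utilization is a fraction between 0.0 and 1.0.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return idle_watts + (max_watts - idle_watts) * utilization


# Hypothetical server: 100 W when idle, 200 W when flat out.
IDLE_W, MAX_W = 100.0, 200.0

before = server_power_watts(0.30, IDLE_W, MAX_W)        # app uses 30% CPU
after = server_power_watts(0.30 * 0.01, IDLE_W, MAX_W)  # same app after a 99% CPU cut

print(f"before: {before:.1f} W, after: {after:.1f} W")
print(f"still drawing {after / before:.0%} of the original power")
```

Under these assumptions, the rewritten app still draws roughly three-quarters of its original power, because the idle floor dominates.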

In short, if you don’t shrink your machine (so-called rightsizing) at the same time you shrink your application, then most of your code optimization efforts will have been wasted. The trouble is, rightsizing can be tricky.

Rightsizing

Operationally, one of the cheapest green actions you can take is not overprovisioning your systems. That means downsizing machines that are larger than necessary. As we have already said (but it bears repeating), higher machine utilization means electricity gets used more efficiently and the embodied carbon overhead is reduced. Rightsizing techniques can be applied both on prem and in the cloud.

Unfortunately, there are problems with making sure your applications are running on machines that are neither too big nor too small (let’s call it the DevOps Goldilocks Zone): overprovisioning is often done for excellent reasons.

Overprovisioning is a common and successful risk management technique. It’s often difficult to predict what the behavior of a new service or the demands placed on it will be. Therefore, a perfectly sensible approach is to stick it on a cluster of servers that are way bigger than you reckon it needs. That should at least ensure it doesn’t run up against resource limitations. It also reduces the chance of hard-to-debug race conditions. Yes, it’ll cost a bit more money, but your service is less likely to fall over, and for most businesses, that trade-off is a no-brainer. We all intend to come back later and resize our VMs, but we seldom do because of the second issue with rightsizing: you never have time to do it.
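The resizing calculation itself does not have to be a chore. As a minimal sketch, assume you have measured a service’s peak CPU and memory over a representative period. You can then pick the smallest instance that covers those peaks plus a safety margin. The instance catalog and the 30% headroom below are hypothetical placeholders, not any provider’s real instance types.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class InstanceType:
    name: str
    vcpus: float
    memory_gib: float


# Hypothetical catalog, ordered from smallest to largest.
CATALOG = [
    InstanceType("small", 2, 4),
    InstanceType("medium", 4, 8),
    InstanceType("large", 8, 16),
    InstanceType("xlarge", 16, 32),
]


def rightsize(peak_vcpus: float, peak_memory_gib: float, headroom: float = 0.3) -> InstanceType:
    """Return the smallest catalog entry that fits the observed peaks plus headroom."""
    need_cpu = peak_vcpus * (1 + headroom)
    need_mem = peak_memory_gib * (1 + headroom)
    for candidate in CATALOG:
        if candidate.vcpus >= need_cpu and candidate.memory_gib >= need_mem:
            return candidate
    raise ValueError("no catalog entry is large enough; consider scaling out instead")


# A service provisioned on an xlarge "just in case" that peaked at 2.5 vCPUs and 5 GiB.
print(rightsize(2.5, 5.0).name)
```

Here the service would drop from an xlarge to a medium. The headroom parameter is where you encode how much risk you are willing to take.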

The obvious solution is autoscaling. Unfortunately, as we are about to see, that isn’t perfect either.

Autoscaling

Autoscaling is a technique often used in the cloud, but you can also do it on prem. The idea behind it is to automatically adjust the resources allocated to a system based on current demand. All the cloud providers have autoscaling services, and it is also available as part of Kubernetes. In theory, it’s amazing.

The trouble is, in practice, autoscaling can hit issues similar to manual overprovisioning. Scaling up to the maximum is fine, but scaling down again is a lot riskier and scarier, so sometimes it isn’t configured to happen automatically. You can scale down again manually, but who has the time to do anything manual? That was why you were using autoscaling in the first place. As a result, autoscaling doesn’t always solve your underutilization problem.
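One way autoscalers make scale-down less scary is to wait before acting on it. The Kubernetes Horizontal Pod Autoscaler documents a target-tracking rule, desiredReplicas = ceil(currentReplicas × currentMetric / targetMetric). It also skips small changes within a tolerance and applies a stabilization window before scaling down. The sketch below is a simplified model of that behavior, not the controller’s code. The window is measured in decisions rather than seconds, and the numbers are hypothetical.

```python
import math
from collections import deque


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     tolerance: float = 0.1) -> int:
    """Target-tracking rule in the style of the Kubernetes HPA formula."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:   # close enough to target: don't churn
        return current_replicas
    return max(1, math.ceil(current_replicas * ratio))


class StabilizedScaler:
    """Scale up immediately, but scale down only once the recent window agrees."""

    def __init__(self, window: int = 5):
        self.recent = deque(maxlen=window)  # the last N recommendations

    def decide(self, current_replicas: int, current_metric: float, target_metric: float) -> int:
        rec = desired_replicas(current_replicas, current_metric, target_metric)
        self.recent.append(rec)
        if rec >= current_replicas:
            return rec
        # Scale down no further than the highest recent recommendation allows.
        return min(current_replicas, max(self.recent))


scaler = StabilizedScaler(window=3)
replicas = 10
history = []
for cpu_percent in [60, 60, 30, 30, 30]:   # the target is 60% CPU per replica
    replicas = scaler.decide(replicas, cpu_percent, 60)
    history.append(replicas)
print(history)   # [10, 10, 10, 10, 5]
```

The scaler holds at 10 replicas until load has stayed low for the whole window, then halves. Tuning that window is how you trade a little efficiency for a lot of confidence.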

Fortunately, another potential solution is available on public clouds. Burstable instances offer a compromise between resilience and greenness. They are designed for workloads that don’t require consistently high CPU but occasionally need bursts of it to avoid that whole pesky falling-over thing.

Burstable instances come with a baseline level of CPU performance, but, when the workload demands, can “burst” to a higher level for a limited period. The amount of time the instance can sustain the burst is determined by the accumulated CPU credits it has. When the workload returns to normal, the instance goes back to its baseline performance level and starts accumulating CPU credits again.
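The credit mechanism is easier to reason about with a toy simulation. The sketch below models a single-vCPU burstable instance minute by minute. It borrows the convention that one credit buys one vCPU at 100% for one minute, and it assumes credits are earned at the baseline rate. It is a deliberately simplified model: real providers’ accounting has extra rules (launch credits, unlimited modes, and so on) that are not shown here, and the baseline and starting balance are hypothetical.

```python
def simulate_burst(demand, baseline=0.25, start_credits=2.0, max_credits=30.0):
    """Simplified credit model for a 1-vCPU burstable instance, one step per minute.

    demand: requested CPU utilization (0.0 to 1.0) for each minute.
    Returns (delivered utilization per minute, credit balance after each minute).
    """
    credits = start_credits
    delivered, balances = [], []
    for want in demand:
        credits += baseline                          # earn credits at the baseline rate
        # Using CPU costs credits. Because we just earned `baseline`, at least
        # baseline performance is always affordable, even with an empty balance.
        got = min(want, credits)
        credits = min(max_credits, credits - got)   # balances are capped
        delivered.append(got)
        balances.append(credits)
    return delivered, balances


demand = [1.0] * 5 + [0.0] * 2   # five minutes flat out, then idle
delivered, balances = simulate_burst(demand)
print(delivered)   # [1.0, 1.0, 0.75, 0.25, 0.25, 0.0, 0.0]
print(balances)    # [1.25, 0.5, 0.0, 0.0, 0.0, 0.25, 0.5]
```

Once the balance hits zero, delivered performance drops to the baseline. This is the "you can still fall over" risk listed below.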

There are multiple advantages to burstable instances:

-

They’re cheaper (read: more machine efficient for cloud providers) than types of instance that offer more consistent high CPU performance.

-

They are greener, allowing your systems to handle occasional spikes in demand without having to provision more resources in advance than you usually need. They also scale down automatically.

-

Best of all, they make managing server density your cloud provider’s problem rather than yours.

Of course, there are always negatives:

-

The amount of time your instance can burst to a higher performance level is limited by the CPU credits you have. You can still fall over if you run out.

-

If your workload demands consistent high CPU, it would be cheaper to just use a large instance type.

-

It isn’t as safe as choosing an oversized instance. The performance of burstable instances can be variable, making it difficult to predict the exact level you will get. If there is enough demand for them, hopefully that will improve over time as the hyperscalers keep investing to make them better.

-

Managing CPU credits adds complexity to your system. You need to keep track of accumulated credits and plan for bursts.

The upshot is that rightsizing is great, but there’s no trivial way to do it. Using energy efficiently by not overprovisioning requires up-front investment in time and new skills—even with autoscaling or burstable instances.

Again and again, Kermit the Frog has been proved right. Nothing is easy when it comes to being green or we’d already be doing it. However, as well as being more sustainable, avoiding overprovisioning can save you a load of cash, so it’s worth looking into. Perhaps handling the difficulties of rightsizing is a reason to kick off an infrastructure-as-code or GitOps project…

Infrastructure as code

Infrastructure as code (IaC) is the principle that you define, configure, and deploy infrastructure using code rather than doing it manually. The idea is to give you better automation and repeatability plus version control. Using domain-specific languages and config files, you describe the way you want your servers, networks, and storage to be. This code-based representation then becomes the single version of truth for your infrastructure.

GitOps is a version of IaC that uses Git as its version control system. Any changes, including provisioning ones like autoscaling, are managed through Git, and your current infrastructure setup is continuously reconciled with the desired state as defined in your repository. The aim is to provide an audit trail of any infrastructure changes, allowing you to track, review, and roll back.
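At its heart, GitOps reconciliation is a diff between declared and actual state. The toy sketch below represents the desired state read from a repository as a plain dictionary of replica counts. Real tools such as FluxCD work on full manifests and talk to the cluster API, none of which is modeled here. Deleting undeclared services assumes pruning is switched on. That is also how a GitOps setup can stop zombie workloads from lingering.

```python
def reconcile(desired: dict[str, int], actual: dict[str, int]) -> list[str]:
    """Compare desired replica counts (as declared in Git) with what is running.

    Returns the actions a reconciler would take to converge on the desired state.
    """
    actions = []
    for service, replicas in sorted(desired.items()):
        running = actual.get(service, 0)
        if running != replicas:
            actions.append(f"scale {service}: {running} -> {replicas}")
    # Assumes pruning: anything not declared in the repo should not exist.
    for service in sorted(actual.keys() - desired.keys()):
        actions.append(f"delete {service}")
    return actions


desired_state = {"api": 2, "worker": 1}                     # from the repo
running_state = {"api": 6, "worker": 1, "old-report-job": 1}  # from the cluster
for action in reconcile(desired_state, running_state):
    print(action)
```

In this sketch, a rightsizing decision is just a commit that changes a number, which is why automating it through Git makes it more likely to happen.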

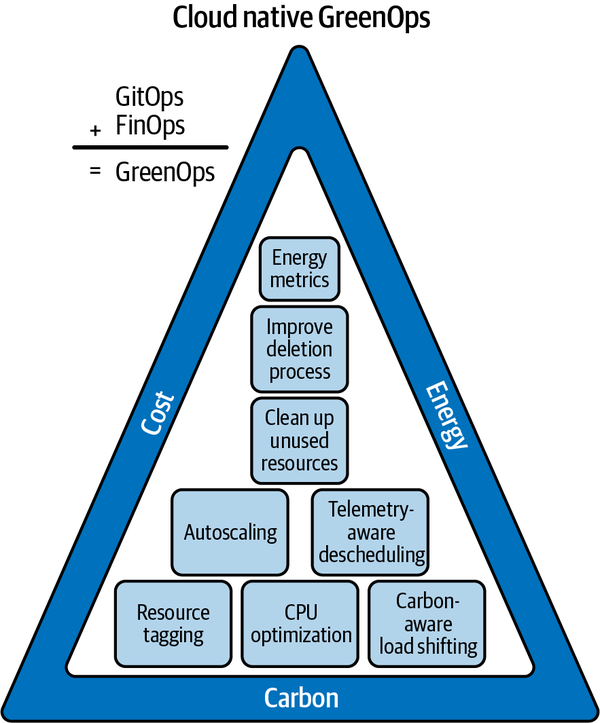

The good news is that the IaC and GitOps communities have started to think about green operations, and so-called GreenOps is already a target of the Cloud Native Computing Foundation’s (CNCF) Environmental Sustainability Group. The foundation ties the concept to cost-cutting techniques (a.k.a. FinOps, which we’ll talk more about in Chapter 11, on the co-benefits of green systems), and the foundation is right (see Figure 4-1). Operationally, greener is cheaper.

Figure 4-1. CNCF definition of GreenOps as GitOps + FinOps

We think the implication here is that you start at the top of the triangle and work down, which seems sensible because the stuff at the bottom is certainly trickier.

Anything that automates rightsizing and autoscaling tasks makes them more likely to happen, and that suggests IaC and GitOps should be a good thing for green. That there is a CNCF IaC community pushing GreenOps is also an excellent sign.

At the time of writing, the authors spoke to Alexis Richardson, CEO at Weaveworks, and some of the wider team. Weaveworks coined the term GitOps in 2017 and set out its main principles, together with FluxCD, a Kubernetes-friendly implementation. The company sees automated greenhouse gas (GHG) emission tracking as the next major challenge for GreenOps. We agree, and it is a problem we’ll discuss in Chapter 10.

Cluster scheduling

Standard operational techniques like rightsizing and autoscaling are all very well, but if you really want to be clever about machine utilization, you should also be looking at the more radical concept of cluster scheduling.

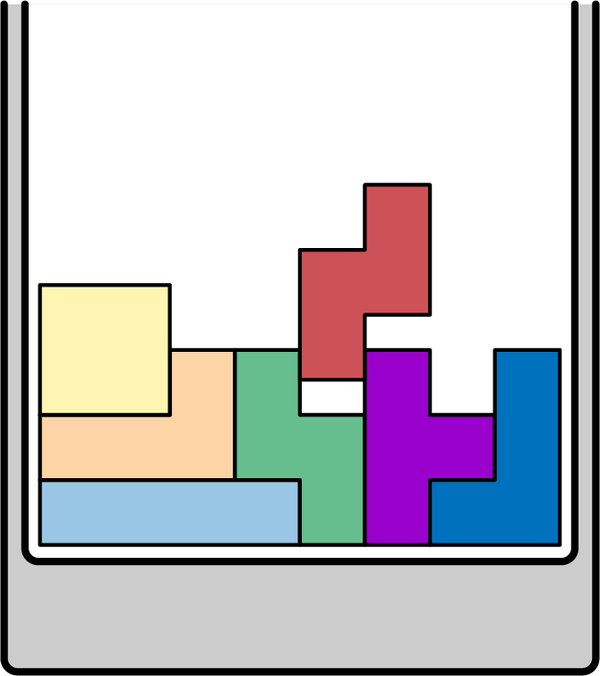

The idea behind cluster scheduling is that differently shaped workloads can be programmatically packed onto servers like pieces in a game of DevOps Tetris (see Figure 4-2). The goal is to execute the same quantity of work on the smallest possible cluster of machines. It is, perhaps, the ultimate in automated operational efficiency, and it is a major change from the way we used to provision systems. Traditionally, each application had its own physical machine or VM. With cluster scheduling, those machines are shared between applications.

Figure 4-2. DevOps Tetris

For example, imagine you have an application with a high need for I/O and a low need for a CPU. A cluster scheduler might locate your job on the same server as an application that is processor intensive but doesn’t use much I/O. The scheduler’s aim is always to make the most efficient use of the local resources while guaranteeing your jobs are completed within the required time frame and to the target quality and availability.
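The packing idea can be illustrated with a classic bin-packing heuristic, first-fit decreasing, applied to two resource dimensions. This is not how the Kubernetes scheduler actually works; it uses its own filtering and scoring stages. It is just a small sketch of the Tetris idea. The job names and sizes are hypothetical and are expressed as percentages of one node’s CPU and I/O capacity.

```python
from typing import NamedTuple


class Job(NamedTuple):
    name: str
    cpu: int   # percent of one node's CPU
    io: int    # percent of one node's I/O bandwidth


def pack(jobs, cpu_capacity=100, io_capacity=100):
    """First-fit decreasing: place the biggest jobs first, each on the first node with room."""
    nodes = []  # each node tracks the CPU and I/O it has used, plus its jobs
    for job in sorted(jobs, key=lambda j: max(j.cpu, j.io), reverse=True):
        for node in nodes:
            if node["cpu"] + job.cpu <= cpu_capacity and node["io"] + job.io <= io_capacity:
                break
        else:  # no existing node has room, so open a new one
            node = {"cpu": 0, "io": 0, "jobs": []}
            nodes.append(node)
        node["cpu"] += job.cpu
        node["io"] += job.io
        node["jobs"].append(job.name)
    return nodes


jobs = [
    Job("db-backup", cpu=10, io=80),      # I/O heavy
    Job("video-encode", cpu=80, io=10),   # CPU heavy
    Job("log-shipper", cpu=15, io=60),
    Job("ml-batch", cpu=70, io=20),
]
nodes = pack(jobs)
print(len(nodes), [n["jobs"] for n in nodes])
```

Four jobs that would each have had a dedicated machine fit on two nodes, because the I/O-heavy and CPU-heavy jobs complement each other.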

The good news is that there are many cluster scheduler tools and services out there, usually as part of orchestration platforms. The most popular is a component of the open source Kubernetes platform, which was designed with lessons learned from Google’s internal cluster scheduler, Borg, and is a much simplified take on it. As we mentioned in the introduction, Borg has been in use at Google for nearly two decades.

To try cluster scheduling, you could use the Kubernetes scheduler or another such as HashiCorp’s Nomad in your on-prem DC. Alternatively, you could use a managed Kubernetes cloud service like EKS, GKE, or AKS (from AWS, Google, and Azure, respectively) or a non-Kubernetes option like Amazon Elastic Container Service (ECS). Most cluster schedulers offer similar functionality, so the likelihood is you’ll use the one that comes with the operational platform you have selected. It is unlikely to be a differentiator that makes you choose one platform over another. However, the lack of such machine utilization functionality might indicate the platform you are on is not green enough.

Cluster scheduling sounds great, and it is: it can potentially deliver machine utilization of up to 80%. If these tools don’t save you money and carbon, you probably aren’t using them right. However, there is still a big problem.

Information underload

For these cluster schedulers to move jobs from machine to machine to achieve optimal packing, they require three things:

-

The jobs need to be encapsulated along with all their prerequisite libraries so that they can be shifted about for maximum packing without suddenly stopping working because they are missing a key dependency.

-

The encapsulation tool must support fast instantiation (i.e., it must be possible to switch the encapsulated job off on one machine and quickly switch it on again on another). If that takes an hour (or even a few minutes), then cluster scheduling won’t work, because the service would be unavailable for too long.

-

The encapsulated jobs need to be labeled so the scheduler knows what to do with them (letting the scheduler know whether they have high availability requirements, for example).

The encapsulation and fast instantiation parts can be handled by wrapping jobs in containers, using tools such as Docker or containerd, and that technology is now widely available. Hurray!

Note

Internally, many of the AWS services use lightweight VMs as the wrapper around jobs, rather than containers. That’s fine. The concept remains the same.

However, all this clever tech still runs up against the need for information. When a scheduler understands the workloads it is scheduling, it can use resources more effectively. If it’s in the dark, it can’t do a good job.

For Kubernetes, the scheduler can act based on the constraints specified in the workload’s pod definition, particularly the CPU and memory requests (the minimum resources reserved for a container, which the scheduler uses to decide where it fits) and limits (maxima). That means you need to specify them, and the trouble is, that can be tricky.
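For reference, here is roughly what those constraints look like. The sketch builds a minimal Pod manifest as a Python dictionary and prints it as JSON, which Kubernetes accepts alongside YAML. The pod name, image, and resource values are hypothetical placeholders. Choosing good values is the hard part Fairbanks describes next.

```python
import json


def pod_manifest(name: str, image: str, cpu_request: str, mem_request: str,
                 cpu_limit: str, mem_limit: str) -> dict:
    """Build a minimal Pod manifest with resource requests and limits."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": name,
                "image": image,
                "resources": {
                    # Requests: what the scheduler reserves when choosing a node.
                    "requests": {"cpu": cpu_request, "memory": mem_request},
                    # Limits: the ceiling enforced at runtime on the node.
                    "limits": {"cpu": cpu_limit, "memory": mem_limit},
                },
            }],
        },
    }


manifest = pod_manifest("report-generator", "example.com/report-generator:1.0",
                        "250m", "256Mi", "500m", "512Mi")
print(json.dumps(manifest, indent=2))
```

Saved to a file, the output could be applied with `kubectl apply -f`. Requests set too high waste node capacity. Requests set too low risk the pod being packed somewhere it will struggle.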

According to longtime GreenOps practitioner Ross Fairbanks, “The problem with both autoscaling and constraint definition is that setting these constraints is hard.” Fortunately, there are now some tools to make it easier. Fairbanks reckons, “The Kubernetes Vertical Pod Autoscaler can be of help. It has a recommender mode so you can get used to using it, as well as an automated mode. It is a good place to start if you are using Kubernetes and want to improve machine utilization.”1
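The core idea behind recommendation tools like this can be sketched in a few lines: base the request on a high percentile of observed usage plus a safety margin, rather than on the worst spike ever seen. This is only loosely in the spirit of the Vertical Pod Autoscaler’s recommender, whose real algorithm is more sophisticated. The 90th percentile, 15% margin, and usage samples here are assumptions for illustration.

```python
import math


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, with pct between 0 and 100."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def recommend_cpu_millicores(usage_millicores: list[float], pct: float = 90,
                             margin: float = 0.15) -> int:
    """Suggest a CPU request: a high percentile of observed usage plus a safety margin."""
    return math.ceil(percentile(usage_millicores, pct) * (1 + margin))


# Hypothetical CPU usage samples in millicores, including one brief spike.
samples = [120, 130, 110, 150, 140, 125, 135, 400, 145, 130]
print(recommend_cpu_millicores(samples))
```

The recommendation lands at 173 millicores, not the 460 you would get by sizing for the 400-millicore spike with the same margin. A higher limit can still give the occasional spike somewhere to go.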

What about the cloud?

If your systems are hosted in the cloud, then even if you are not running a container orchestrator like Kubernetes, you will usually be getting the benefit of some cluster scheduling because the cloud providers operate their own schedulers.

You can communicate the characteristics of your workload by picking the right cloud instance type, and the cloud’s schedulers will use your choice to optimize their machine utilization. That is why, from a green perspective, you must not overspecify your resource or availability requirements (e.g., by asking for a dedicated instance when a burstable one or even just a nondedicated one would suffice).

Again, this requires thought, planning, and observation. The public clouds are quite good at spotting when you have overprovisioned, and they sneakily use some of those idle resources for other users (a.k.a. oversubscription), but the most efficient way to use a platform is always as it was intended. If a burstable instance is what you need, the most efficient way to use the cloud is to choose one.

Mixed workloads

Cluster scheduling is at its most effective (you can get really dense packing) if it has a wide range of different, well-labeled tasks to schedule on a lot of big physical machines. Unfortunately, this means it is less effective—or even completely ineffective—for smaller setups like running Kubernetes on prem for a handful of nodes or for a couple of dedicated VMs in the cloud.

However, it can be great for hyperscalers. They have a wide range of jobs to juggle to achieve optimum packing, and in part, that explains the high server utilization rates they report. The utilization percentages that AWS throws about imply that AWS requires less than a quarter of the hardware you’d use on prem for the same work. The true numbers are hard to come by, but AWS’s figure is more than plausible (it’s likely an underestimate of AWS’s potential savings).
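The arithmetic behind claims like that is straightforward. The hardware you need scales with the work divided by the average utilization you can sustain. The utilization figures below are hypothetical round numbers chosen for illustration, not AWS’s published figures.

```python
import math


def servers_needed(busy_server_equivalents: int, utilization_pct: int) -> int:
    """Machines required if each runs at the given average utilization."""
    return math.ceil(busy_server_equivalents * 100 / utilization_pct)


WORK = 30  # hypothetical: the work would keep 30 servers 100% busy
on_prem = servers_needed(WORK, 15)  # assumed on-prem average utilization of 15%
cloud = servers_needed(WORK, 65)    # assumed hyperscaler average utilization of 65%
print(on_prem, cloud, f"{cloud / on_prem:.1%}")
```

Under these assumptions, the same work needs 47 machines instead of 200, or less than a quarter of the hardware.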

Those smaller numbers of servers mean a lot less electricity used and carbon embodied. As a result, the easiest sustainability step you can take is often to move your systems to the cloud and use their services well, including the full range of instance types. It’s only by using their optimized services and schedulers that you can get those numbers. Don’t lift and shift onto dedicated servers and expect much green value, even if you’re using Kubernetes like a pro.

As we’ve said before, scale and efficiency go hand in hand. Hyperscalers can invest the whopping engineering effort to be hyperefficient because it is their primary business. If your company is selling insurance, you will never have the financial incentive to build a hyperefficient on-prem server room, even if that were possible. In fact, you’d not be acting in your own best interest to do so because it wouldn’t be a differentiator.

Time shifting and spot instances

The schedulers mentioned previously get a whole extra dimension of flexibility if we add time into the mix. Architectures that recognize and can manage low-priority or delayable jobs are particularly operable at high machine utilization, and as we’ll cover in the next chapter, those architectures are vital for carbon awareness. According to green tech expert Paul Johnston, “Always on is unsustainable.”
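A minimal sketch of what "delayable" buys a scheduler: given the spare capacity in each upcoming hour, each deferrable job can be slotted into the first hour with room before its deadline, handling the most urgent jobs first. The capacity profile, job names, and deadlines are hypothetical. This toy model ignores carbon intensity, which we come to in the next chapter.

```python
def schedule_deferrable(spare_capacity: list[int],
                        jobs: list[tuple[str, int, int]]) -> dict[str, int]:
    """Place delayable jobs into hours with spare capacity before their deadlines.

    spare_capacity[h] is the number of free worker slots in hour h.
    jobs are (name, slots_needed, deadline_hour); earliest deadlines go first.
    Returns {job_name: hour}. Jobs that don't fit are omitted, to be retried later.
    """
    spare = list(spare_capacity)
    placement = {}
    for name, slots, deadline in sorted(jobs, key=lambda j: j[2]):
        for hour in range(min(deadline + 1, len(spare))):
            if spare[hour] >= slots:
                spare[hour] -= slots
                placement[name] = hour
                break
    return placement


# Hours 0 and 5 are busy peaks with no spare capacity.
spare = [0, 1, 4, 6, 5, 0]
jobs = [("nightly-report", 3, 4), ("ml-training", 5, 5), ("thumbnail-backfill", 2, 2)]
print(schedule_deferrable(spare, jobs))
```

All three jobs run in the quiet middle hours, so no extra capacity has to be provisioned for them at peak.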

Which brings us to an interesting twist on cluster scheduling: the cloud concept of spot instances (as they are known on AWS and Azure; they are called Preemptible Instances on the more literal GCP).

Spot instances are used by public cloud providers to get even better machine utilization by using up leftover spare capacity. You can put your job in a spot instance, and it may be completed or it may not. If you keep trying, it will probably be done at some point, with no guarantee of when. In other words, the jobs need to be very time shiftable. In return for this laissez-faire approach to scheduling, users get up to 90% off the standard price for hosting.
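"Keep trying" works best if an interruption doesn’t throw away completed work. A common pattern is to checkpoint progress to storage that outlives the instance and resume from the checkpoint on the next attempt. In the sketch below, a dictionary stands in for that durable storage, and preemptions are simulated at fixed points. Both are assumptions for illustration, not any provider’s API.

```python
def run_with_checkpoints(total_items: int, checkpoint: dict, interrupted_at: set[int]) -> bool:
    """Process items from the last checkpoint. Return False if preempted mid-run.

    checkpoint stands in for durable storage that survives the instance being
    reclaimed. interrupted_at holds simulated preemption points.
    """
    start = checkpoint.get("next_item", 0)
    for item in range(start, total_items):
        if item in interrupted_at:
            interrupted_at.discard(item)   # each simulated preemption happens once
            return False                   # instance reclaimed; progress is already saved
        # ... the real work for `item` would happen here ...
        checkpoint["next_item"] = item + 1  # record progress after each unit of work
    return True


def run_until_done(total_items: int, preemptions: set[int], max_attempts: int = 10) -> int:
    """Keep resubmitting the job, as you would on spot capacity. Returns attempts used."""
    checkpoint: dict = {}
    for attempt in range(1, max_attempts + 1):
        if run_with_checkpoints(total_items, checkpoint, preemptions):
            return attempt
    raise RuntimeError("job did not finish; try again later")


print(run_until_done(100, {30, 75}))
```

Despite two interruptions, the job finishes on its third attempt without redoing any items.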

A spot instance combines several of the smart scheduling concepts we have just discussed. It is a way of:

-

Wrapping your job in a VM

-

Labeling it as time insensitive

-

Letting your cloud provider schedule it when and where it likes

Potentially (i.e., depending on the factors that go into the cloud’s scheduling decisions), using spot instances could be one of the greenest ways to operate a system. We would love to see hyperscalers take the carbon intensity of the local grid into account when scheduling spot instance workloads, and we expect that to happen by 2025. Google is already talking about such moves.

Multitenancy

In a chapter on operational efficiency, it would be a travesty if we didn’t mention the concept of multitenancy.

Multitenancy is when a single instance of a server is shared between several users, and it is vital to truly high machine utilization. Fundamentally, the more diverse your users (a.k.a. tenants), the better your utilization will be.

Why is that true? Well, let’s consider the opposite. If all your tenants were ecommerce retailers, they would all want more resources on Black Friday and in the run-up to Christmas. They would also all want to process more requests in the evenings and at lunchtime (peak home shopping time). Correlated demand like that is bad for utilization.

You don’t want to have to provision enough machines to handle Christmas and then have them sitting idle the rest of the year. That’s very ungreen. It would be more machine efficient if the retailer could share its hardware resources with someone who had plenty of work to do that was less time sensitive than shopping (e.g., ML training). It would be even more optimal to share those resources with someone who experienced demand on different dates or at different times of the day. Having a wide mix of customers is another way that the public clouds get their high utilization numbers.
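The effect of diversity on capacity can be shown with a small calculation. Dedicated hardware must cover the sum of each tenant’s peak. Shared hardware only has to cover the peak of their combined demand. The demand profiles below are hypothetical, expressed in servers across six four-hour blocks of a day.

```python
def dedicated_capacity(*profiles: list[int]) -> int:
    """Each tenant provisions for its own peak."""
    return sum(max(p) for p in profiles)


def shared_capacity(*profiles: list[int]) -> int:
    """Tenants share machines, so capacity covers the peak of combined demand."""
    return max(sum(slot) for slot in zip(*profiles))


retail = [2, 3, 6, 5, 9, 4]   # busy at lunchtime and in the evening
office = [1, 2, 8, 9, 3, 1]   # busy during working hours
batch = [8, 8, 2, 1, 2, 7]    # e.g., ML training, happy to run overnight

print(dedicated_capacity(retail, office, batch), shared_capacity(retail, office, batch))
print(dedicated_capacity(retail, retail), shared_capacity(retail, retail))
```

The diverse mix needs 16 servers shared versus 26 dedicated. Two identical retailers gain nothing: 18 either way, because their demand is perfectly correlated.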

Serverless Services

Serverless services like AWS Lambda, Azure Functions, and Google Cloud Functions are multitenant. They also have encapsulated jobs, care about fast instantiation, and run jobs that are short and simple enough that a scheduler knows what to do with them (execute them as fast as possible and then forget about them). They also have enough scale that it should be worth public cloud providers’ time to put in the effort to hyperoptimize them.

Serverless services therefore have huge potential to be cheap and green. They are doing decently at it, but we believe they have room to get much better. The more folk who use them, the more efficient they are likely to get.

Hyperscalers and Profit

There is no magic secret to being green in tech. It is mostly about being a whole lot more efficient and far less wasteful, which happens to match the desires of anyone who wants to manage their hosting costs.

According to ex-Azure DevRel Adam Jackson, “The not-so-dirty secret of the public cloud providers is that the cheaper a service is, the higher the margins. The cloud providers want you to pick the cheapest option because that’s where they make the most money.”

Those services are cheap because they are efficient and run at high scale. As 18th-century economist Adam Smith pointed out, “It is not from the benevolence of the butcher, the brewer, or the baker that we expect our dinner, but from their regard to their own interest.” In the same vein, the hyperscale cloud providers make their systems efficient for their own benefit. However, in this case it is to our benefit, too, because although efficiency is not an exact proxy for greenness, it isn’t a bad one.

Reducing your hosting bills by using the cheapest, most efficient and commoditized services you can find is not just in your interest and the planet’s, but it is also in the interest of your host. They will make more money as a result, and that is a good thing. Making money isn’t wrong. Being energy inefficient in the middle of an energy-driven climate crisis is. It also highlights the reason why operational efficiency might be the winning form of efficiency: it can make a load of money for DC operators. It is aligned with their interests, and you should choose ones that have the awareness to see that and the capital to put behind it.

Note

The AWS Lambda serverless service is an excellent example of how the efficiency of a service gets improved when it becomes clear there is enough demand to make that worthwhile. When Lambda was first launched, it used a lot of resources. It definitely wasn’t green. However, as the latent demand became apparent, AWS invested in it and built the open source Firecracker platform, which uses lighter-weight VMs for job isolation and also improves instantiation times and scheduling. As long as untapped demand is there, this commoditization is likely to continue. That will make it cheaper and greener as well as more profitable for AWS.

SRE Practices

Site reliability engineering (SRE) is a concept that originally came from another efficiency-obsessed hyperscaler: Google. SREs are responsible for designing, building, and maintaining reliable and robust systems that can handle high traffic and still operate smoothly. The good news is that green operations are aligned with SRE principles, and if you have an SRE organization, being green should be easier.

SREs practice:

-

Monitoring (which should include carbon emissions; see Chapter 9 for our views on the subject of the measurement of carbon emissions and Chapter 10 for our views on how to use those measurements)

-

Continuous integration and delivery (which can help with delivering and testing carbon emission reductions in a faster and safer fashion)

-

Containerization and microservices (which are more automatable and mean that not all of your system has to run on demand, so it can be more carbon aware)

This is not a book about SRE best practices and principles, so we are not going to go into them in detail, although we discuss them more in Chapter 11. However, there are plenty of books available from O’Reilly that cover these excellently and in depth.

LightSwitchOps

Most of what we have talked about so far has been clever high-tech stuff. However, there are some simple operational efficiency ideas that anyone can implement, and one of the smartest we’ve heard is from Red Hat’s Holly Cummins. It’s called LightSwitchOps (see Figure 4-3).

Figure 4-3. LightSwitchOps as illustrated by Holly

Closing down zombie workloads (Cummins’s term for applications and services that don’t do anything anymore) should be a no-brainer for energy saving.

In a recent real-life example, a major VM vendor that relocated one of its DCs discovered two-thirds of its servers were running applications that were hardly used anymore. Effectively, these were zombie workloads.

According to Martin Lippert, Spring Tools Lead & Sustainability Ambassador at VMware, “In 2019, VMware consolidated a data center in Singapore. The team wanted to move the entire data center and therefore investigated what exactly needed a migration. The result was somewhat shocking: 66% of all the host machines were zombies.”3

This kind of waste provides huge potential for carbon saving. The sad reality is that a lot of your machines may also be running applications and services that no longer add value.

The trouble is, which ones are those exactly?

There are several ways to work out whether a service still matters to anyone. The most effective is something called a scream test. We’ll leave it as an exercise for the reader to deduce how that works. Another is for resources to have a fixed shelf life. For example, you could try only provisioning instances that shut themselves down after six months unless someone actively requests that they keep running.
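The shelf-life approach is easy to automate once resources carry a little metadata. The sketch below assumes each resource records its creation date and, optionally, a renewal date someone set to keep it alive. The field names and the roughly six-month shelf life are hypothetical, and a real version would read these from your provider’s tags or inventory.

```python
from datetime import date, timedelta

SHELF_LIFE = timedelta(days=182)  # roughly six months


def due_for_shutdown(resources: list[dict], today: date) -> list[str]:
    """Names of resources past their shelf life that nobody has asked to keep.

    Each resource is a dict with hypothetical keys: "name", "created" (a date),
    and optionally "keep_until" (a date someone set to renew it).
    """
    expired = []
    for r in resources:
        expires = max(r["created"] + SHELF_LIFE, r.get("keep_until", date.min))
        if today > expires:
            expired.append(r["name"])
    return expired


inventory = [
    {"name": "legacy-report-vm", "created": date(2023, 6, 1)},
    {"name": "billing-api", "created": date(2023, 1, 10), "keep_until": date(2025, 1, 1)},
    {"name": "new-test-env", "created": date(2024, 5, 1)},
]
print(due_for_shutdown(inventory, today=date(2024, 7, 1)))
```

Only the unrenewed legacy VM is flagged. Whether you then switch it off automatically depends on how confident you are that you can switch it back on.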

These are great ideas, but there is a reason folk don’t do either of these things. They worry that if they turn a machine off, it might not be so easy to turn it back on again, and that is where LightSwitchOps comes in.

For green operations, it is vital that you can turn off machines as confidently as you turn off the lights in your hall—i.e., safe in the knowledge that when you flick the switch to turn them back on, they will. Holly Cummins’s advice is to ensure you are in a position to turn anything off. If you aren’t, then if your server is not part of the army of the walking dead today, you can be certain that one day it will be.

GreenOps practitioner Ross Fairbanks suggests that a great place to get started with LightSwitchOps is to automatically turn off your test and development systems overnight and on the weekend.
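A minimal sketch of such a rule: a function that says whether a test or dev environment should be up at a given time. The weekday-only 08:00 to 19:00 window is an assumption you would adjust to your team’s hours. In practice, a scheduled job would call your provider’s stop and start operations based on the answer.

```python
from datetime import datetime, timedelta


def should_be_running(now: datetime, start_hour: int = 8, stop_hour: int = 19) -> bool:
    """Office-hours rule for test and dev systems: weekdays only, start_hour to stop_hour."""
    is_weekday = now.weekday() < 5   # Monday is 0, Friday is 4
    return is_weekday and start_hour <= now.hour < stop_hour


week_start = datetime(2024, 3, 4)   # a Monday
hours_on = sum(should_be_running(week_start + timedelta(hours=h)) for h in range(168))
print(hours_on, "of 168 hours")   # 55 of 168 hours
```

Under this schedule, the environment is on for 55 of the week’s 168 hours, so it is off roughly two-thirds of the time.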

Zombie apocalypse

In addition to saving carbon, there are security reasons for turning off those zombie servers. Ed Harrison, former head of security at Metaswitch Networks (now part of Microsoft), told us, “Some of the biggest cybersecurity incidents in recent times have stemmed from systems which no one knew about and should never have been switched on.” He went on, “Security teams are always trying to reduce the attack surface. The sustainability team will be their best friends if their focus is switching off systems which are no longer needed.”4

Location, Location, Location

There is one remaining incredibly important thing for us to talk about. It is a move that is potentially even easier than LightSwitchOps, and it might be the right place for you to start—particularly if you are moving to a new data center.

You need to pick the right host and region.

The reality is that in some regions, DCs are easier to power using low-carbon electricity than in others. For example, France has a huge nuclear fleet, and Scandinavia has wind and hydro. DCs in such areas are cleaner.

We say again, choose your regions wisely. If in doubt, ask your host about it.
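If you have carbon intensity figures for candidate regions, from your host or a grid data provider, the choice can be made mechanical. The sketch below picks the lowest-carbon region that still meets a latency requirement. The region names, carbon intensities, and latencies are made-up placeholders, not measurements.

```python
def greenest_region(regions: dict[str, dict], max_latency_ms: float) -> str:
    """Pick the lowest-carbon region that still meets the latency requirement."""
    candidates = {name: r for name, r in regions.items() if r["latency_ms"] <= max_latency_ms}
    if not candidates:
        raise ValueError("no region meets the latency requirement")
    return min(candidates, key=lambda name: candidates[name]["gco2_per_kwh"])


# Placeholder figures for illustration only.
regions = {
    "nuclear-heavy-region": {"gco2_per_kwh": 60, "latency_ms": 20},
    "hydro-wind-region": {"gco2_per_kwh": 30, "latency_ms": 45},
    "fossil-heavy-region": {"gco2_per_kwh": 600, "latency_ms": 10},
}
print(greenest_region(regions, max_latency_ms=50))
```

Tightening the latency budget changes the answer, which is why the decision is worth revisiting as your requirements evolve.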

Note

The global online business publication the Financial Times offers a good example of a change in location leading to greener infrastructure. The Financial Times’ engineering team spent the best part of a decade moving to predominantly sustainable EU regions in the cloud from on-premises data centers that had no sustainability targets.

Anne talked to the Financial Times in 2018, when the company was 75% of the way through the shift, about the effect it was having on its operational sustainability goals. At that point, ~67% of its infrastructure was on “carbon neutral” servers, and the company expected this to rise to nearly 90% once it completed its move to the cloud in 2020 (which it did).

The “carbon neutral” phrasing has since fallen out of favor across the industry, but the Financial Times now inherits AWS’s target of powering its operations with 100% renewable energy by 2025, which is great. The lesson here is that picking suppliers with solid sustainability targets they seem committed to (i.e., green platforms) takes that hard work off your plate; it’ll just happen under your feet.

Oh No! Resistance Fights Back!

Unfortunately, efficiency and resilience have always had an uneasy relationship. Efficiency adds complexity and thus fragility to a system, and that’s a problem.

Efficiency versus resilience

In most cases, you cannot make a service more efficient without also putting in work to make it more resilient, or it will fall over on you. Unfortunately, that puts efficiency in conflict yet again with developer productivity.

For example:

-

Cluster schedulers are complicated beasts that can be tricky to set up and use successfully.

-

There are a lot of failure modes to multitenancy: privacy and security become issues, and there is always the risk of a problem from another tenant on your machine spilling over to affect your own systems.

-

Even turning things off is not without risk. The scream test we talked about earlier does exactly what it says on the tin.

-

To compound that, overprovisioning is a tried-and-tested way to add robustness to a system cheaply in terms of developer time (at the cost of increased hosting bills, but most folk are happy to make that trade-off).

Cutting to the chase, efficiency is a challenge for resilience.

There are some counterarguments. A cluster scheduler is good for operational efficiency, but it has resilience benefits too. One of the primary reasons folk use a cluster scheduler is to automatically restart services in the face of node, hardware, or network failures. If a node goes down or becomes unavailable for any reason, a scheduler can automatically shift the affected workloads to other nodes in the cluster. You not only get efficient resource utilization but also higher availability, as long as it wasn’t the cluster scheduler that brought you down, of course.
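To make the scheduler counterargument concrete, here is a toy sketch of both halves: packing workloads tightly onto as few nodes as possible (efficiency), then moving the workloads off a failed node onto the survivors (resilience). It uses first-fit decreasing, a classic bin-packing heuristic, over a single resource dimension such as CPU cores. Real cluster schedulers such as Kubernetes weigh many more factors, and the function names here are illustrative assumptions.

```python
def place(workloads: dict[str, int], nodes: dict[str, int]) -> dict[str, str]:
    """First-fit decreasing: place the largest workloads first, each on the
    first node with enough free capacity. Returns workload -> node."""
    free = dict(nodes)
    placement: dict[str, str] = {}
    for name, demand in sorted(workloads.items(), key=lambda kv: kv[1], reverse=True):
        for node, capacity in free.items():
            if capacity >= demand:
                placement[name] = node
                free[node] = capacity - demand
                break
        else:
            raise RuntimeError(f"no capacity left for {name}")
    return placement


def handle_node_failure(failed: str, workloads: dict[str, int],
                        nodes: dict[str, int],
                        placement: dict[str, str]) -> dict[str, str]:
    """Move every workload off a failed node onto the surviving nodes."""
    # Remaining free capacity on each surviving node.
    free = {n: c for n, c in nodes.items() if n != failed}
    for w, n in placement.items():
        if n != failed:
            free[n] -= workloads[w]
    kept = {w: n for w, n in placement.items() if n != failed}
    displaced = {w: workloads[w] for w, n in placement.items() if n == failed}
    # Reuse the same packing logic, treating free capacity as node size.
    kept.update(place(displaced, free))
    return kept
```

Notice that tight packing is what leaves whole nodes idle enough to switch off, while the same logic, rerun on the survivors, is what restores service after a failure.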

However, the reality is that being more efficient can be a risky business, and handling more complex systems requires new skills. In the case of Microsoft improving the efficiency of Teams during the COVID pandemic, the company couldn’t just make its efficiency changes. It also had to up its testing game by adopting chaos-engineering techniques in production to flush out the bugs in its new system.

Like Microsoft, if you make any direct efficiency improvements yourself, you will probably have to do more testing and more fixing. In the Skyscanner example, using spot instances increased the resilience of its systems and also cut its hosting bills and boosted its greenness, but the company’s whole motivation for adopting spot instances was to force additional resilience testing on itself.
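Spot and preemptible instances can be reclaimed at short notice, so the main habit they force on you is making work resumable. A minimal sketch, assuming a batch job that processes items in order: progress is checkpointed after every item, so a replacement instance picks up where the reclaimed one stopped. `Interrupted`, `MemoryCheckpointStore`, and `run_job` are hypothetical stand-ins; in production the checkpoint must live off the instance (for example, in object storage or a database), or it disappears along with it.

```python
class Interrupted(Exception):
    """Stands in for the cloud provider reclaiming a spot/preemptible instance."""


class MemoryCheckpointStore:
    """Toy checkpoint store. A real one must be durable and off-instance."""

    def __init__(self) -> None:
        self._next_index = 0

    def load(self) -> int:
        return self._next_index

    def save(self, next_index: int) -> None:
        self._next_index = next_index


def run_job(items, store, handle, interrupt_after=None):
    """Process items from the last checkpoint, saving progress after each one.
    `interrupt_after` simulates preemption after that many items in this run."""
    start = store.load()
    for i in range(start, len(items)):
        if interrupt_after is not None and i - start == interrupt_after:
            raise Interrupted(f"reclaimed before item {i}")
        handle(items[i])
        store.save(i + 1)  # record progress only after the item is done
```

Because each item is checkpointed only after it completes, an interruption costs at most one item of rework; handlers should still be idempotent in case the crash lands between `handle` and `save`.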

Efficiency usually goes hand in hand with specialization, and it is most effective at high scale, but scale has dangers too. The European Union fears we are putting all our computational eggs in the baskets of just a few United States hyperscalers, which could lead to a fragile world. The EU has a point, and the Sustainable Digital Infrastructure Alliance (SDIA) was formed to try to combat that risk.

On the other hand, we know that the same concentration will result in fewer machines and less electricity used. It will be hard for the smaller providers that make up the SDIA to achieve the efficiencies of scale of the hyperscalers, even if they do align themselves on sensible open source hosting technology choices as the SDIA recommends.

We may not like the idea of the kinds of huge data centers that are currently being built by Amazon, Google, Microsoft, and Alibaba, but they will almost certainly be way more efficient than a thousand smaller DCs, even if those are warming up a few Instagrammable municipal pools or districts as the EU is currently demanding.

Note that we love the EU’s new mandates on emissions transparency. We are not scoffing at the EU, even if for one small reason or another none of us live in it anymore. Nonetheless, we would prefer to see DCs located near wind turbines or solar farms, where they could be using unexpected excess power rather than competing with homes for precious electricity in urban grid areas.

Green Operational Tools and Techniques

Stepping back, let’s review the key operational-efficiency steps you can take. Some are hard, but the good news is that many are straightforward, especially when compared to code efficiency. Remember, it’s all about machine utilization.

-

Turn stuff off if it is hardly used or while it’s not being used, like test systems on the weekend (Holly Cummins’s LightSwitchOps).

-

Don’t overprovision (use rightsizing, autoscaling, and burstable instances in the cloud). Remember to autoscale down as well as up, or you’ll stay sized for your first peak forever!

-

Cut your hosting bills as much as possible using, for example, AWS Cost Explorer or Azure’s cost analysis, or a nonhyperscaler service like CloudZero, ControlPlane, or Harness. A simple audit can also often identify zombie services. Cheaper is almost always greener.

-

Containerized microservice architectures that recognize low-priority and/or delayable tasks can be operated at higher machine utilization. Note, however, that going overboard on the number of microservices increases architectural complexity and can itself result in overprovisioning. You still need to follow microservices design best practices, such as those in Sam Newman’s Building Microservices (O’Reilly).

-

If you are in the cloud, dedicated instance types have no carbon awareness and low machine utilization. Choosing instance types that give the host more flexibility will increase utilization and cut carbon emissions and costs.

-

Embrace multitenancy, from shared VMs to managed container platforms.

-

Use efficient, high-scale, preoptimized cloud services and instance types (like burstable instances, managed databases, and serverless services). Or use equivalent open source products with a commitment to green or efficient practices, an energetic community to hold them to those commitments, and the scale to realistically deliver on them.

-

Remember that spot instances on AWS or Azure (preemptible instances on GCP) are great—cheap, efficient, green, and a platform that encourages your systems to be resilient.

-

None of this is easy, but the SRE principles can help: CI/CD, monitoring, and automation.
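The autoscaling advice in the list above can be sketched as target-tracking scaling, the approach the Kubernetes Horizontal Pod Autoscaler documents: desired replicas = ceil(current replicas × current utilization ÷ target utilization), clamped to a minimum and maximum. Because the ratio drops below 1 when load falls, the same rule scales down as readily as it scales up. The function name and defaults are our assumptions; the 10% tolerance mirrors the HPA’s documented default, but check your own platform’s settings.

```python
import math


def desired_replicas(current_replicas: int, current_util: float,
                     target_util: float, min_replicas: int = 1,
                     max_replicas: int = 20, tolerance: float = 0.1) -> int:
    """Target-tracking autoscaling in the style of the Kubernetes HPA.

    desired = ceil(current_replicas * current_util / target_util),
    clamped to [min_replicas, max_replicas]. Works in both directions.
    """
    ratio = current_util / target_util
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to target: avoid flapping
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 10 replicas running at 15% CPU against a 60% target scale down to 3, which is exactly the step that one-way autoscaling never takes.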

Unfortunately, none of this is work-free. Even running less or turning stuff off requires an investment of time and attention. However, the nice thing about going green is that it will at least save you money. So the first system to target from a greenness perspective should also be the easiest to convince your manager about: your most expensive one.
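A first-pass audit for your most expensive systems and likely zombies can be as simple as joining your billing export with utilization and traffic metrics. The sketch below ranks services by monthly cost and flags near-idle ones with no recent traffic. The `Service` fields, the 5% CPU threshold, and the zero-request rule are illustrative assumptions; tune them to your own data.

```python
from dataclasses import dataclass


@dataclass
class Service:
    name: str
    monthly_cost: float     # from your billing export
    avg_cpu_util: float     # 0.0-1.0, averaged over, e.g., 30 days
    requests_last_30d: int  # from logs or metrics


def audit(services: list[Service],
          zombie_util: float = 0.05) -> list[tuple[str, float, bool]]:
    """Rank services by cost (most expensive first) and flag likely zombies:
    near-idle CPU and no traffic at all in the period."""
    ranked = sorted(services, key=lambda s: s.monthly_cost, reverse=True)
    return [
        (s.name, s.monthly_cost,
         s.avg_cpu_util < zombie_util and s.requests_last_30d == 0)
        for s in ranked
    ]
```

Treat a flag as a prompt to investigate (or scream-test), not as permission to delete; the top of the ranking is where the money, and usually the carbon, is.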

Having said that, anything that isn’t work-free, even if it saves a ton of money, is going to be a tough sell. It will be easier to get the investment if you can align your move to green operations with faster delivery or with saving developer or ops time down the road, because those are goals businesses find attractive.

That means the most effective of the suggested steps are the last four: SRE principles, multitenancy, managed services and green open source products, and spot instances. Those are all designed to save dev and ops time in the long run, and they happen to be cheap and green because they are commoditized and they scale. Don’t fight the machine. Going green without destroying developer productivity is about choosing green platforms.

To survive the energy transition, we reckon everything is going to have to become a thousand times more carbon efficient through a combination of, initially, operational efficiency and demand shifting and, eventually, code efficiency, all achieved using green platforms. Ambitious as it sounds, that should be doable. It is about reclaiming the extra hardware capacity we have spent on developer productivity over the past 30 years, without giving up that productivity.
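The thousandfold target looks less daunting once you notice that independent improvements multiply rather than add. Writing each lever’s contribution as a reduction factor (the symbols are ours, and the even split in the example is purely illustrative, not an estimate from the text):

```latex
\[
\frac{C_{\text{before}}}{C_{\text{after}}}
  = f_{\text{ops}} \times f_{\text{shift}} \times f_{\text{code}},
\qquad \text{e.g.} \quad 10 \times 10 \times 10 = 1000
\]
```

This holds only to the extent the levers are independent: demand shifting lowers the carbon emitted per kWh, while operational and code efficiency lower the kWh needed, so their effects compound.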

It might take a decade, but it will happen. Your job is to make sure all your platform suppliers, whether public cloud, open source, or closed source, have a believable strategy for achieving this kind of greenness. This is the question you need to constantly ask yourself: “Is this a green platform?”
