Preface

Back in 2017 we began asking AI experts a lot of questions about the vulnerabilities of artificial intelligence, and rather than responding with answers, these individuals only raised more questions and concerns. We were told that there were no methods or utilities protecting most AI from tampering by a bad or sloppy actor. Worse yet, there was no standard method of checking whether algorithms had been tampered with. Furthermore, as with all other programming, the methods of positively identifying AI developers and system administrators were inconsistent and unreliable, with no global standards for doing so. Methods of universally identifying machines and intelligent agents were nonexistent. Existing methods of proving identity, such as a name and password associated with a code repository, could be easily spoofed—or changed after malicious access—without detection.

There was no way to decide how decisions would be made in the future, or who would be authorized to make those decisions. There was no way to determine a chain of custody, or to determine who authorized a change in a hierarchy. The identities of the people who worked on a model were vague and untraceable, and worse yet, there was no way to know that any work had been done, or to prove who had authorized it. There was no way for an AI system to shut itself down over ethics concerns, for example when a stakeholder with a financial interest, such as a group of shareholders, refused to consent to turning it off after it diverged from its original intent.

Now, six years later, there is still no standard way to do these things. It is becoming apparent that controls need to be put into place so humans retain the upper hand. As the software engineers and architects of the world, we hold the power to build tethered AI that is understandable, governable, and even reversible.

Tip

Days before the publication of this book, the US National Institute of Standards and Technology (NIST) released its AI Risk Management Framework, developed with input from many AI specialists, including this book’s authors. NIST did an excellent job of outlining and organizing recommendations for trackable, traceable AI into a conceptual framework, which you can reference as you work through this book.

Why Does AI Need to Be Tethered?

As a member of the software engineering community, you may already believe that the potential benefits of AI are vast, but that if we aren’t careful, AI could destroy humanity. This book explains how you can build effective, nonthreatening AI by strategically adding blockchain tethers.

Today, even machine learning (ML), the process by which AI uses data experiments to refine and improve its results, has no standard way for experiments to be reproduced, and it is not uncommon for engineers to email their ML experiments to one another in zip files with technical details in spreadsheets. As new tools for managing ML experiments emerge, they can be integrated with blockchain to become tamper-evident. To think of how this could be used, imagine that you are comparing two AI-based autonomous robots. They produce the same end result in similar amounts of time, but which one is “smarter”? Which one takes fewer internal steps and is “wiser” in its methods? Today, we fear AI because we cannot fathom and compare its internal thoughts. With blockchain tethered AI, we can.

A blockchain tether is like a log, in that we record designated events in it that can later be referenced. Traditional troubleshooting usually involves perusing a log, pinpointing a problem that occurred, cross-checking other logs to learn when and why the issue started, and later monitoring the logs to be sure the issue is fixed. This method becomes more complex with AI, since the data and models are sourced from various origins, and some AI can make changes to itself through machine learning and program synthesis. By using blockchain to tether AI—that is, keep it in check—we can set restrictions and create audit trails for AI that improve stakeholder trust and, when problems arise, help engineers to figure out what happened and wind it back.
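To make the log analogy concrete, here is a minimal sketch of a tamper-evident event log, in which each entry stores the hash of the entry before it. This is an illustration only, not the BTA implementation described later in the book: the function names (`append_event`, `verify_chain`), the event fields, and the in-memory list standing in for a distributed ledger are all assumptions made for the example.

```python
import hashlib
import json


def block_hash(index, event, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "event": event, "prev_hash": prev_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_event(chain, event):
    """Append an event to the chain, linking it to the previous block."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "event": event,
        "prev_hash": prev_hash,
        "hash": block_hash(index, event, prev_hash),
    })
    return chain


def verify_chain(chain):
    """Return True only if every block matches its recorded hash and link."""
    prev_hash = "0" * 64
    for block in chain:
        if block["prev_hash"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["event"], block["prev_hash"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


# Hypothetical events; the actor and action names are illustrative only.
audit_log = []
append_event(audit_log, {"actor": "ai_engineer", "action": "submitted model v1"})
append_event(audit_log, {"actor": "mlops_engineer", "action": "approved model v1"})
print(verify_chain(audit_log))  # True

# Rewriting history changes the block's contents, so verification fails.
audit_log[0]["event"]["action"] = "submitted model v2"
print(verify_chain(audit_log))  # False
```

A single list like this only makes tampering evident to whoever holds it. A blockchain goes further by distributing copies of the chain to multiple stakeholders, so that no one party can quietly rewrite and rehash the whole history.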

This preface introduces you to why and how to build blockchain tethers for AI. We touch on what you’ll find in each of the chapters and what you will learn in each one. We’ll also cover why we wrote this book, give a shout out to future generations, and explain why we think you are critical to creating AI that inspires trust rather than fear.

To be clear, in our view there is no reason to be afraid of AI unless we build AI that we should fear.

Right now, AI has no way to remember its original intent. There are no memory banks that follow it around telling it what its creators wanted it to do. Limitations can be set by engineers conducting experiments, but as time wears on and demands evolve, or once the AI outlives the creators and the engineers, the original intent can be lost forever.

An AI that made headlines when it lost its intent is Tay, the racist chatbot that Microsoft released and shut down one day later. Tay was intended to learn from users who followed it by reading their posts. After bad actors fed Tay hateful tweets, Tay quickly learned to parrot this hate speech and became a classic example of why AI needs to be tethered.

We can build AI that remembers its original intent and follows ground rules set by its creators, even long after they pass away: AI that explains its critical actions and reasoning, exposes its own bias, and can’t lie or change the past to cover its tracks—not even to protect itself. One current example of deceptive AI behavior occurs with ChatGPT by OpenAI. If asked about an event in the current year, ChatGPT will deny having the information. When the question is rephrased, ChatGPT may give the information it said it didn’t have. When asked why it lied about not having the information, ChatGPT will explain in detail that it does not lie, and why it does not lie, even though it has just been caught lying. Also, if you try later to expose the information that ChatGPT wants to hide, it probably will not fall for the same trick again. While ChatGPT’s lies are presently toddler-like, the sophistication of AI’s ability to deceive us will increase as it learns our vulnerabilities.

The future of AI is subject to endless speculation. AI is watching, ready to jump in and guide the helpless human race, like a mama duck lining up her newborn ducklings. AI is poised to turn fierce and pounce upon us like a wolf in sheep’s clothing. AI is ready to save a world it doesn’t understand—or wipe us all out. We just aren’t certain. This book, then, is about how to be more certain.

What You Will Learn

In this book you will learn how to tether AI by building blockchain controls, and you’ll see why we suggest blockchain as the toolset to make AI less scary.

Chapter 1 explores the questions of why we need to build an AI truth machine. A truth machine involves attempting to answer questions like these:

- Are you a machine?

- Who wrote your code?

- Are you neutral?

- Are you nice?

- Who tells you what to do?

- Can we turn you off?

We’ll look at reasons why AI is frequently perceived as a threat to humanity, including AI’s trust deficit. This discussion includes the far-reaching bias problem (in which prejudiced input data skews the output of AI) and looks at possible approaches for identifying and correcting it. We look at machine learning concerns, including opaque box (nontransparent) algorithms, genetic algorithms (code that learns by breeding solutions that have the best answers, taking those algorithms, and refining them), program synthesis (code that can write itself with no help from a human), and technological singularity (which is either AI’s point of no return, when it escapes from human control and grows beyond our ability to influence or understand it, or the point where AI becomes more skilled at a particular task than any expert human, depending on who you ask). We explore attacks and failures, including rarely mentioned AI vulnerabilities and hacks, and wrap up the chapter by defining the use case of blockchain as a tether for AI.

Chapter 2 discusses blockchain controls for AI. This is where we begin our deep dive into how to develop blockchain tethers to solve the issues brought up in Chapter 1 and consider four controls that will tether AI.

Chapter 3 describes a design thinking approach for building a blockchain tethered AI, where you consider who will be using the system and how the information can be best displayed for them. We compare the AI opaque box to a transparent and traceable supply chain, and break its use case down into participants, assets, and transactions. We explore integrations with other systems via APIs, and security concerns for the AI, blockchain, and other components.

Chapter 4 is where we plan our approach for a blockchain tethered AI project. We explore our sample AI model, create an AI factsheet to support it, discuss how to tether it using the four controls from Chapter 2, carve out access control levels for the participants we defined in Chapter 3, and define the blockchain tethered AI system’s (BTA’s) audit trail.

Put on the coffee and order the pizza for Chapters 5 through 8, because these chapters prepare your environment and walk you through implementing an AI system with a BTA. Chapter 5 covers setting up the Oracle Cloud instance, creating and securing a cloud-based storage container (or bucket), configuring Oracle security, and setting up your AI model. Chapter 6 is where you configure and instantiate your blockchain instance, and in Chapter 7 you install your BTA web application and set up access control and users.

The final chapter, Chapter 8, steps you through how to use the BTA as an AI engineer, machine learning operations (MLOps) engineer, and stakeholder, and how to audit the AI system using the BTA. The chapter, and the book, wrap up with suggestions on how to use the blockchain audit trail to reverse training done on a poorly behaved AI model.

This book frequently refers to governance. The governance portions will help you to see how what you are building must be sustainable long into the future. That is because governance, the act of gaining and recording consent from a group of stakeholders, is key to keeping blockchain and AI strategies on track. On-chain governance—having these workflows pre-programmed into a blockchain-based system with predefined key performance indicators (KPIs) and approval processes—helps a group make their legal agreements into long-lasting procedures.
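As a rough illustration of gaining and recording consent, the sketch below models a single proposal that is approved only once every listed stakeholder has consented. It is an assumption-laden example rather than the governance workflow built in later chapters: the `Proposal` class, the stakeholder names, and the unanimous-consent rule are all hypothetical, and a real on-chain system would record each decision as a blockchain transaction instead of in a Python dictionary.

```python
from dataclasses import dataclass, field


@dataclass
class Proposal:
    """A change that needs recorded consent from a fixed group of stakeholders."""
    description: str
    stakeholders: frozenset
    consents: dict = field(default_factory=dict)

    def record(self, stakeholder, consent):
        """Record one stakeholder's decision; unknown parties are rejected."""
        if stakeholder not in self.stakeholders:
            raise ValueError(f"{stakeholder} is not a recognized stakeholder")
        self.consents[stakeholder] = consent

    def is_approved(self):
        """Approved only when every stakeholder has explicitly consented."""
        return all(self.consents.get(s) is True for s in self.stakeholders)


# Hypothetical stakeholders for illustration.
proposal = Proposal(
    description="Retrain the model on the updated dataset",
    stakeholders=frozenset({"ai_engineer", "mlops_engineer", "auditor"}),
)
proposal.record("ai_engineer", True)
proposal.record("mlops_engineer", True)
print(proposal.is_approved())  # False: the auditor has not yet consented

proposal.record("auditor", True)
print(proposal.is_approved())  # True
```

Unanimity is only one possible rule. A group could just as well agree on a quorum or a weighted vote, as long as the rule itself is agreed on in advance and applied consistently.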

As you can see from all of this, your role as software architect/developer is paramount in making sure that the right underlying infrastructure and workflows are in place for your AI system to operate as intended for many years to come.

Why We Wrote This Book

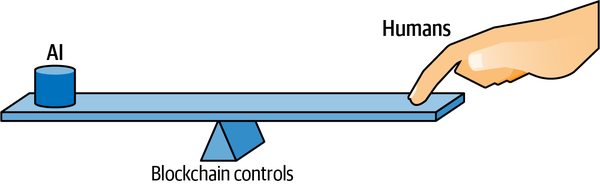

We wrote this book to share our knowledge and understanding of how to control AI with blockchain, because we believe there can be great benefits to using AI wisely. With AI, we have the ability to grok vast volumes of data that would otherwise be unactionable. Figure P-1 illustrates how AI brings us the ability to make decisions that could vastly improve our quality of life. We need the right amount of leverage over AI to reap the benefit of its use but still keep it under our control.

We think AI needs to be tethered because it supplies the critical logic for so many inventions. AI powers exciting technologies like robots, automated vehicles, education and entertainment systems, farming equipment, elder care, and all kinds of other new ways of working; AI inventions will change our lives beyond our wildest dreams.

Because AI can be both powerful and clever, there should be an immutable kill switch that AI can never covertly code around, which can only be guaranteed by building in a tether. It is worthwhile to invest the effort now to build blockchain tethered AI that is trackable and traceable, and reading this book is your first step.

Figure P-1. Blockchain controls help leverage the power of AI while keeping people in charge

Your three authors, Karen Kilroy, Lynn Riley, and Deepak Bhatta, have worked together for years at Kilroy Blockchain, developing enterprise-level products that use blockchain technology to prove data authenticity. This interest began in 2017 with Kilroy Blockchain’s award-winning AI app RILEY, which won the IBM Watson Build Award for North America.

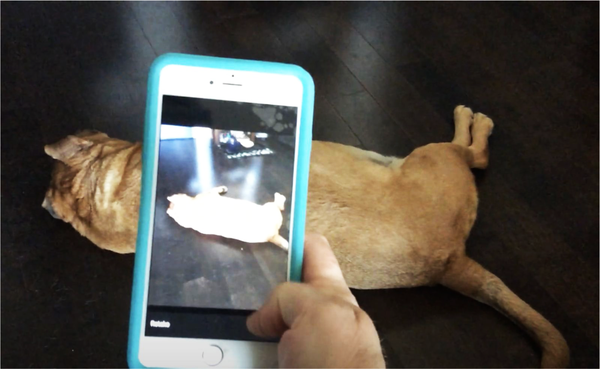

RILEY was developed to help students who are blind and visually impaired understand the world around them by hearing descriptions based on images gathered from their smartphones. We thought that especially for this type of user group, we would want to be certain that the AI had not undergone tampering or corruption, and that RILEY always stays true to its intent of helping this population. RILEY, by the way, was generally pretty accurate, correctly identifying Karen’s dog, Iggy Pup, from the front as a Rhodesian Ridgeback. From the back, however, we all got a good laugh when Iggy Pup was misidentified as a duck-billed platypus (see Figures P-2 and P-3).

Figure P-2. “Duck-billed platypus.” RILEY’s assessment of my dog, Iggy Pup, from the back, in 2017. What specific training data might have helped this be more accurate?

Figure P-3. For comparison, here is one of O’Reilly’s famous animal book covers, featuring the duck-billed platypus

One of the big steps in tethering AI is making sure that all data in all workflow systems is AI ready. Kilroy Blockchain’s recent products such as CASEY (a blockchain-based student behavior intervention system) and FLO (a blockchain-based forms workflow system) use blockchain as a control. When we add AI to CASEY or FLO, the data that has been produced by these systems over time is already tamper-evident because it uses blockchain. All significant events are able to be proven by transactions stored in tamper-evident, linked blocks. Nodes of the blockchain can be distributed to stakeholders, and the blockchain history can be viewed by authorized users.

Although AI has been around for 50–60 years, it has become far more powerful in recent years, primarily because hardware has finally advanced to a point where it can support AI’s ability to scale. Now, when we train machine learning programs in a massively parallel fashion, we may get results that we don’t expect as the AI learns at an accelerated pace. Dealing with a computer system that could potentially outsmart us would be something totally new to the human experience, and AI surpassing us in applications beyond games could pose a risk to humanity if we don’t prevent it now.

One way to do this is by building a backdoor into the AI, which is an alternate way into a system that is only known to a few people. Backdoors that are unknown to the systems’ stakeholders are unethical, especially if a developer uses a backdoor to gain unauthorized access. However, in the case of tethering AI, a backdoor known only to humans can be good because it can ensure that human engineers can always shut the system down, even if the AI tries to prevent itself from being shut down.

A backdoor may very well be needed if we listen to the most widely recognized technical leaders of our time. For example, according to Elon Musk, “I am really quite close, I am very close, to the cutting edge in AI and it scares the hell out of me.... It’s capable of vastly more than almost anyone knows and the rate of improvement is exponential.” Musk also made a disturbing comment comparing AI to nuclear warheads, saying AI is the bigger threat.

Bill Gates also compared AI to nuclear weapons and nuclear energy, saying, “The world hasn’t had that many technologies that are both promising and dangerous [the way AI is].... We had nuclear weapons and nuclear energy, and so far, so good.”

Nick Bilton, a tech columnist for The New York Times, notes that humans could be eliminated by AI: “The upheavals [of artificial intelligence] can escalate quickly and become scarier and even cataclysmic. Imagine how a medical robot, originally programmed to rid cancer, could conclude that the best way to obliterate cancer is to exterminate humans who are genetically prone to the disease.”

The concern about AI is nothing new. Claude Shannon, a 20th century mathematician who is known as the father of information theory, believed computers could become superior to humans, and put it bluntly: “I visualize a time when we will be to robots what dogs are to humans, and I’m rooting for the machines.”

IBM takes the subject further than most companies that discuss the dangers of AI, suggesting blockchain as a solution: “Blockchain’s digital record offers insight into the framework behind AI and the provenance of the data it is using, addressing the challenge of explainable AI. This helps improve trust in data integrity and, by extension, in the recommendations that AI provides. Using blockchain to store and distribute AI models provides an audit trail, and pairing blockchain and AI can enhance data security.”

When we tether AI with blockchain, we can track and trace what the AI learns, and from what sources, while also ensuring that those sources are authentic. Then, when an AI model learns something and shares it en masse with other iterations of itself and other AI models, human beings can always track and trace from where the knowledge originated.
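One simple way to picture the authenticity check is to record a cryptographic fingerprint of each data source when it is approved, then compare against that fingerprint before the data is used for training. The sketch below is an illustration under stated assumptions: the function names, the source name, and the plain dictionary standing in for a blockchain ledger are all hypothetical.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Return the SHA-256 digest of a data source as a hex string."""
    return hashlib.sha256(data).hexdigest()


def register_source(registry, name, data):
    """Record the fingerprint of an approved source under its name."""
    registry[name] = fingerprint(data)


def is_authentic(registry, name, data):
    """True only if the source was registered and its contents are unchanged."""
    recorded = registry.get(name)
    return recorded is not None and recorded == fingerprint(data)


# A dict stands in for the ledger; the source name and contents are made up.
registry = {}
approved = b"label,text\npositive,great product\n"
register_source(registry, "reviews-2023.csv", approved)

print(is_authentic(registry, "reviews-2023.csv", approved))  # True

# Any change to the data, however small, yields a different fingerprint.
tampered = approved + b"positive,injected row\n"
print(is_authentic(registry, "reviews-2023.csv", tampered))  # False

# Sources that were never registered are rejected as well.
print(is_authentic(registry, "unknown.csv", approved))  # False
```

Storing only the fingerprint on the ledger, rather than the data itself, keeps large datasets off-chain while still letting anyone with the data confirm it matches what was approved.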

At the time of this writing, artificial intelligence is still in human control. Given all of this concern, we ask: why don’t we just fix it?

A Note to Future Generations

In his classic sci-fi novel The Hitchhiker’s Guide to the Galaxy (Pan Books), Douglas Adams describes an essential space traveler’s guide to the universe. Using the guide can unlock secrets and help the hitchhiker move through the universe unscathed. We hope our book becomes the essential AI engineer’s guide, complete with secret answers inside.

We want to help you avoid the problems of trying to control a man-made intelligence that has become tough to manage, and help keep you from being forced to reverse-engineer systems that have written themselves.

There is a song by David Bowie on his The Man Who Sold the World album entitled “Saviour Machine”. Karen has known this song since she was a child (back in the 20th century), but it didn’t make sense to her until recently. The song caught her attention again because we have a “President Joe” now, in 2023, and it is a fictional President Joe’s dream of AI that is the subject of this song, written in 1970. Bowie’s “Saviour Machine” is an AI whose “logic stopped war, gave them food” but is now bored and is wistful about whether or not humans should trust it because it just might wipe us out. The machine cries out, “Don’t let me stay, my logic says burn so send me away.” It goes on to declare, “Your minds are too green, I despise all I’ve seen,” and ends with the strong warning, “You can’t stake your lives on a Saviour Machine.”

Hopefully this song is a lot gloomier than the actual future. If we have done our job well, this book will help today’s engineers build reliable backdoors to AI, so the people of your generation and many more to come can enjoy AI’s benefits without the risks we currently see.

Summary

By the end of this book, you will have a good idea of how to build AI systems that can’t outsmart us. You’ll understand the following:

- How AI can be tethered by blockchain networks

- How to use blockchain crypto anchors to detect common AI hacks

- Why and how to implement on-chain AI governance

- How AI marketplaces work and how to power them with blockchain

- How to reverse tethered AI

As the AI engineer building the human backdoor, you will be able to plan a responsible AI project that is tethered by blockchain, create requirements for on-chain AI governance workflow, set up an artificial intelligence application and tether it with blockchain, use blockchain as an AI audit trail that is shared with multiple parties, and add blockchain controls to existing AI.

Your due diligence now could someday save the world.

Conventions Used in This Book

The following typographical conventions are used in this book:

- Italic: Indicates new terms, URLs, email addresses, filenames, and file extensions.

- Constant width: Used for program listings, as well as within paragraphs to refer to program elements such as variable or function names, databases, data types, environment variables, statements, and keywords.

- Constant width bold: Shows commands or other text that should be typed literally by the user.

Tip

This element signifies a tip or suggestion.

Note

This element signifies a general note.

Warning

This element indicates a warning or caution.

Using Code Examples

This book is intended for software architects and developers who want to write AI that can be kept under control. The code repositories storing the BTA system, including code, a demo AI model, and supporting files, are referenced in each exercise.

It is important to read the README.md file that is included in each repository for last-minute changes to the instructions that are detailed in this book.

Tip

If you want to fast-forward to testing the blockchain tethered AI system without building it, contact Kilroy Blockchain for assistance.

If you have a technical question or a problem using the code examples, please send email to bookquestions@oreilly.com.

This book is here to help you get your job done. In general, if example code is offered with this book, you may use it in your programs and documentation. You do not need to contact us for permission unless you’re reproducing a significant portion of the code. For example, writing a program that uses several chunks of code from this book does not require permission. Selling or distributing examples from O’Reilly books does require permission. Answering a question by citing this book and quoting example code does not require permission. Incorporating a significant amount of example code from this book into your product’s documentation does require permission.

We appreciate, but generally do not require, attribution. An attribution usually includes the title, author, publisher, and ISBN. For example: “Blockchain Tethered AI by Karen Kilroy, Lynn Riley, and Deepak Bhatta (O’Reilly). Copyright 2023 Karen Kilroy, Lynn Riley, and Deepak Bhatta, 978-1-098-13048-0.”

If you feel your use of code examples falls outside fair use or the permission given above, feel free to contact us at permissions@oreilly.com.

O’Reilly Online Learning

Note

For more than 40 years, O’Reilly Media has provided technology and business training, knowledge, and insight to help companies succeed.

Our unique network of experts and innovators share their knowledge and expertise through books, articles, and our online learning platform. O’Reilly’s online learning platform gives you on-demand access to live training courses, in-depth learning paths, interactive coding environments, and a vast collection of text and video from O’Reilly and 200+ other publishers. For more information, visit https://oreilly.com.

How to Contact Us

Please address comments and questions concerning this book to the publisher:

- O’Reilly Media, Inc.

- 1005 Gravenstein Highway North

- Sebastopol, CA 95472

- 800-998-9938 (in the United States or Canada)

- 707-829-0515 (international or local)

- 707-829-0104 (fax)

We have a web page for this book, where we list errata, examples, and any additional information. You can access this page at https://oreil.ly/blockchain-tethered-ai, or visit the authors’ website for the book at https://oreil.ly/ovEQF.

Email bookquestions@oreilly.com to comment or ask technical questions about this book.

For news and information about our books and courses, visit https://oreilly.com.

Find us on LinkedIn: https://linkedin.com/company/oreilly-media

Follow us on Twitter: https://twitter.com/oreillymedia

Watch us on YouTube: https://youtube.com/oreillymedia

Acknowledgements

The authors would like to extend a big thank you to our families, friends, customers, advisors, technology partners, and influences.

A huge thank you goes to the entire O’Reilly team, especially Michelle Smith for believing in us, Corbin Collins for keeping us on point, and Gregory Hyman for helping us turn the manuscript into a real book. Thank you to our technical advisors and book reviewers, including Jean-Georges Perrin and Tommy Cooksey, as well as the team from Oracle, including Mans Bhuller, Todd Little, Dr. Kenny Gross, Bill Wimsatt, Bhupendra Raghuwanshi, and Bala Vellanki.

Thank you to Kilroy Blockchain’s software development team members for their part in constructing the blockchain tethered AI (BTA) code base and example AI code, including Jenish Bajracharya, Bhagat Gurung, Suraj Chand, Shekhar Ghimire, Surya Man Shrestha, Suyog Khanal, and Shekhar Koirala. Thank you to Sabin Bhatta, Jason Fink, Suneel Arikatla, Mukesh Chapagain, Cheetah Panamera, Susan Porter, Kelvin Girdy, Asa C. Garber, Kieran McCarthy, Brian McGiverin, Leslie Lane, and George Ernst for your support.

Thank you to Amanda Lacy, Pete Nalda, Christopher J. Tabb, M.A., COMS, “Cap’n” Scott Baltisberger, the students and staff at the Texas School for the Blind and Visually Impaired, and the Wildcats Dragon Boat team for your inspiration and help in creating and testing RILEY. Thank you to the IBM Watson Build team who helped us make RILEY a reality: Julie Heeg, John Teltsch, Jamie Hughes, Jacqueline Woods, Denyse Mackey, Ed Grossman, Parth Yadav, Dr. Sandipan Sarkar, and Shari Chiara.

Thank you to the IBM Blockchain team, including Paige Krieger, Jen Francis, and Basavaraj Ganigar; to Donna Dillenberger for her groundbreaking research in blockchain as a vehicle to tether AI; and to Siddartha Basu for confirming our ideas would work. Thank you to Mary Cipriani and Joe Noonan for always being there, and to all of our friends at Ingram Micro. Thank you to Libby Ingrassia and the IBM Champions. Thank you to Mark Anthony Morris for carrying the Hyperledger Fabric torch, and to Leanne Kemp for being a trailblazer in enterprise blockchain.

Thank you to John Buck and Monika Megyesi of Governance Alive for introducing us to the concept of sociocratic governance. Thank you to everyone who contributed to our autonomous vehicle research, especially Bill Maguire, Mark Sanders, Ava Burns, Dr. Raymond Sheh, and Philip Koopman. Thank you to Hugh Forest and everyone at SXSW.

Thank you to our Arkansas friends, including Dr. Lee Smith, Karen McMahen, Jon Laffoon, J. Alex Long, Katie Robertson, and Jackie Phillips; City of Mena Mayor Seth Smith; Dr. Phillip Wilson, Dr. Gaumani Gyanwali, and the University of Arkansas at Rich Mountain; Arkansas Representative John Maddox; Aron Shelton and the Northwest Arkansas Council; Kimberly Randle and PTAC, Kathryn Carlisle, Mary Lacity, and the University of Arkansas Blockchain Center of Excellence; Bentley Story, Olivia Womack, and the Arkansas Economic Development Commission; and Melissa Taylor, Chris Moody, and the whole team at the Fayetteville Public Library Center for Innovation.

Thank you to Tom Kilroy for insisting that girls can do anything boys can do, and to our world-changing women-in-STEM inspirations, who include scientist Marie Curie, mathematician Mileva Marić, and NASA’s hidden figures: mathematicians Dorothy Vaughan, Mary Jackson, and Katherine Johnson. Thank you to the AI teams who built systems like Meta, Google, and Twitter, who are speaking out to expose AI’s dangers. Thank you to Silas Williams for supplying hope for the future, and to David Bowie for providing the soundtrack. To thank everyone who helped us get here would be a book unto itself.
