Chapter 4. Planning Your BTA

After reading Chapters 1 through 3, you are now prepared to start building your own blockchain tethered AI system (BTA). This chapter helps you scope the work ahead: setting up your Oracle Cloud instance and running your model (Chapter 5), instantiating your blockchain (Chapter 6), running your BTA (Chapter 7), and testing the completed BTA (Chapter 8).

The BTA is built by interweaving the MLOps process with blockchain in such a way that the MLOps system requires blockchain to function, which results in the AI being tethered. To do this, the BTA integrates blockchain with MLOps so that a model must be approved in order to proceed through the cycle of training, testing, review, and launch. Once a model is approved, it advances to the next step of the cycle. If it is declined, the model goes back to the previous steps for modifications and another round of approvals. All of the steps are recorded in the blockchain audit trail.

To plan for a system like this, you need to consider the architecture of the BTA, the characteristics of the model you are trying to tether, how accounts and users are created and how permissions are assigned, and the specifics of how and why to record certain points on blockchain.

BTA Architecture

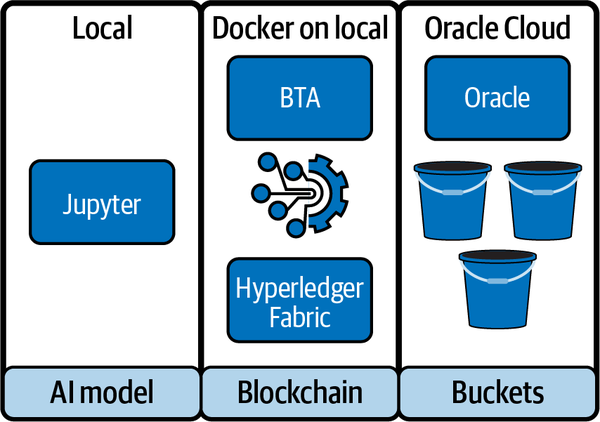

The BTA has three major layers in its architecture: the BTA web application, a blockchain network, and the buckets.

Alongside the BTA, you will use a Jupyter Notebook, which is a Python development environment that helps you to build and test models on your local system. The BTA architecture provides you with cloud-based buckets to store and share logs and artifacts that are generated when you run the code that is contained in your Jupyter Notebook. Figure 4-1 gives you a high-level overview of the architecture that this book’s exercises use.

Figure 4-1. High-level architecture used for this book’s exercises

These environments work together to allow AI and MLOps engineers to route and approve a new or modified model, or store objects like models and logs in buckets, while recording cryptographic hashes of the objects onto blockchain.

When the users are created in the BTA, the organization admin also issues them Oracle Cloud credentials. These credentials are stored in the BTA web app under each user’s configuration. The users log in to the BTA, and the Oracle Cloud and blockchain are seamlessly connected to the system with no further action from the users. Chapter 5 explains the Oracle Cloud integration and setup process in detail.

Sample Model

To test your BTA, you need a model to tether. This book’s accompanying code provides a sample Traffic Signs Detection model, which is a good model to test because it has features that allow you to try all four blockchain controls, as Chapter 2 describes.

The completed AI factsheet in the next section details the Traffic Signs Detection model. There could be many more facts on the factsheet, but this is enough information for testing purposes.

AI Factsheet: Traffic Signs Detection Model

Let’s define some of the terms we’ll be using:

- Purpose: Detect traffic signs based on input images.

- Domain: Transportation.

- Data set: The data set is divided into training data and test data. The data set is collected from the German Traffic Sign Recognition Benchmark (GTSRB). This data set includes 50K images and 43 traffic sign classes.

- Algorithm: Convolutional neural network (CNN).

- ML type: Supervised learning.

- Input: Images of traffic signs.

- Output: Identify input images and return text containing names of traffic signs and probability of successful identification.

- Performance metrics: Accuracy of the model based on the F1 score and confusion matrix.

- Bias: Since the project uses 43 classes, each class has 1K to 1.5K images, so data skew is minimized. No known bias.

- Contacts: MLOps and AI engineers, stakeholders, and organization admin are the major contacts of the project. (In this case, all of those contacts are you.)

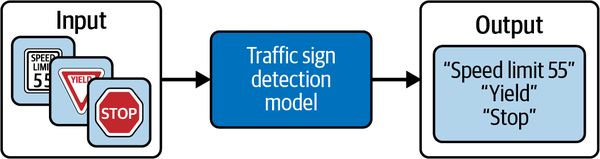

How the Model Works

To try the sample model, you can browse to its user interface using a web browser and upload an image of a traffic sign. If the model thinks it recognizes the sign, it will return the name of the sign. Otherwise, it will indicate that the sign is not recognized. This model is similar to visual recognition used in vehicles to recognize traffic signs. This is illustrated in Figure 4-2.

Figure 4-2. Sample inputs and outputs of the Traffic Signs Detection model

A simple visual recognition model like this is built by training the model with thousands of images that contain street signs, and thousands of images that contain items that look like street signs but aren’t. This has already been done in the example model that is included with this book.

If this were a production model, AI and MLOps engineers might continue to attempt to improve its accuracy. They may introduce new sets of training or testing data, or they might modify an algorithm to do something like consider the context of the traffic sign (Is it covered by a tree branch? Is it raining?). The AI and MLOps engineers routinely run controlled experiments with modified variables, then weigh the results of the experiments against the results of previous tests and look for measurable improvements.

The modifications to the Traffic Signs Detection model itself, which are explained in depth in Chapter 5, are made using a Jupyter Notebook that runs on your local computer. Now that you are somewhat familiar with the model, you can consider how to tether it with blockchain.

Tip

How to use the Jupyter Notebook to modify the Traffic Signs Detection model is explored in the exercises in Chapter 5.

Tethering the Model

The BTA keeps track of how various approvers impact a model, such as how a stakeholder affects the model’s purpose and intended domain; how an AI engineer collects and manages training data and develops models using different algorithms; how an MLOps engineer tracks and evaluates inputs and outputs and reviews performance metrics, bias, and optimal and performance conditions; and how AI and MLOps engineers create explanations. The BTA’s scope could be expanded to include additional user roles, like an auditor.

The AI engineers can log in to the BTA and record details about model training events and experiments. This triggers a workflow for the MLOps engineers who are involved in the approval and deployment of the model. In this workflow, an AI engineer can create a model in the BTA, record the results of certain experiments, and submit the model for review and approval. Then, the MLOps engineer can test the model, review the results of the AI engineer’s experiments, and give feedback. Once the MLOps engineer approves the results, the model is presented to the stakeholder for a final approval.

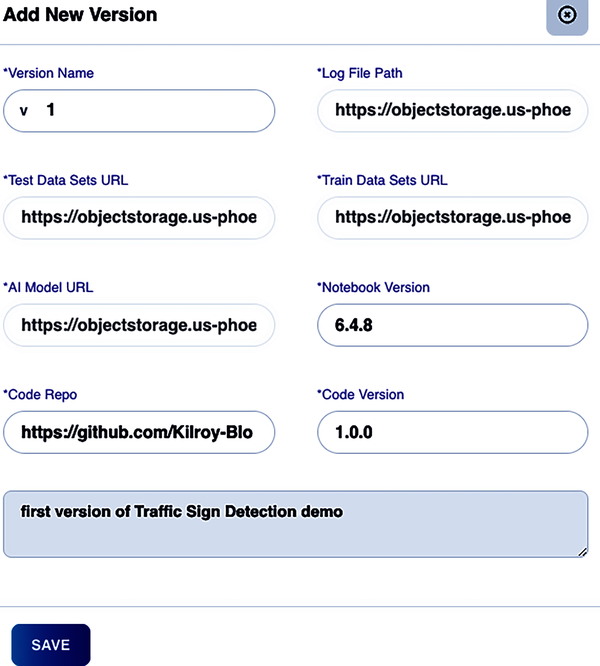

When the AI engineer submits a model for approval, the BTA prompts them for a unique version number. As you can see in Figure 4-3, the BTA collects the log file, test and training data set, and model URLs, the notebook version, and the code repository at the same time, all of which become tied to this version number.

Figure 4-3. AI engineer assigning a version number to a model in the BTA

When the Add New Version form is submitted, cryptographic hashes are created from this information and stored on blockchain, which creates a tamper-evident trail of the origin of the model.
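As a minimal sketch of how a form submission could be reduced to a single hash, the following example assumes the fields are serialized as canonical JSON before hashing. The field names, the placeholder URLs, and the `hash_version_record` helper are hypothetical; the BTA's actual serialization is not described in this chapter.

```python
import hashlib
import json


def hash_version_record(record: dict) -> str:
    """Return the SHA-256 hex digest of a record serialized as canonical JSON."""
    # Sorted keys and fixed separators keep the digest independent of key order.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


# Hypothetical form values; the URLs are placeholders, not real locations.
version_record = {
    "version": "v1.0",
    "log_file_url": "https://example.com/bucket/Log/Exp1.json",
    "model_url": "https://example.com/bucket/model",
    "code_repo_url": "https://example.com/repo",
}

original_digest = hash_version_record(version_record)
altered_digest = hash_version_record({**version_record, "version": "v1.1"})

# A change to any field produces a different digest.
print(original_digest != altered_digest)
```

Because even a one-character change alters the digest, storing only the digest on blockchain is enough to detect later edits to the submitted details.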

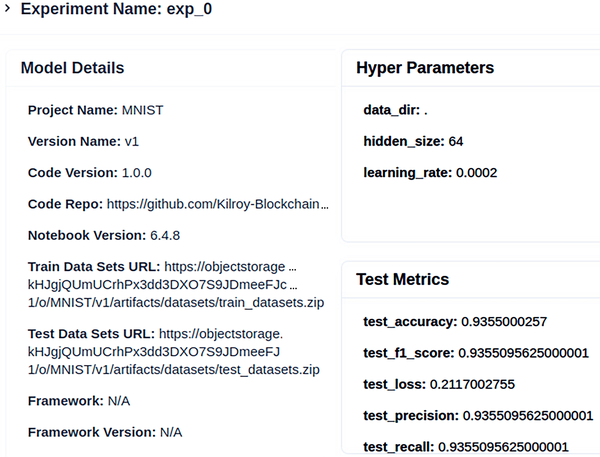

Next, the experiment is run and the detailed outcome is recorded by the AI engineer. When the model arrives at the next step—review by the MLOps engineer—they will receive an email notification. When the MLOps engineer opens the link, they will access the Version Details screen, which contains information they need to perform their own testing and form their own feedback about the model. The Model Details section, as shown in Figure 4-4, shows the MLOps engineer at a glance the basic information about the model, such as its code version, notebook version, and the URLs of the data sets used to train that version. Along with the details of the model are hyperparameters, which, as you may recall from Chapter 1, are the run variables that an AI engineer chooses before model training, and test metrics, or analytics, for the model for the most recent and past epochs. The MLOps engineer can review these details before deciding whether to approve or reject the model.

Figure 4-4. Model details and hyperparameters of trained model

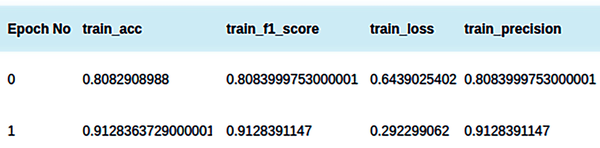

Next on the Version Details screen is a list of epochs that are associated with a particular version. The list shows metrics for each run through the data set that has been done in this version, as shown in Figure 4-5.

Figure 4-5. List of epochs of an experiment

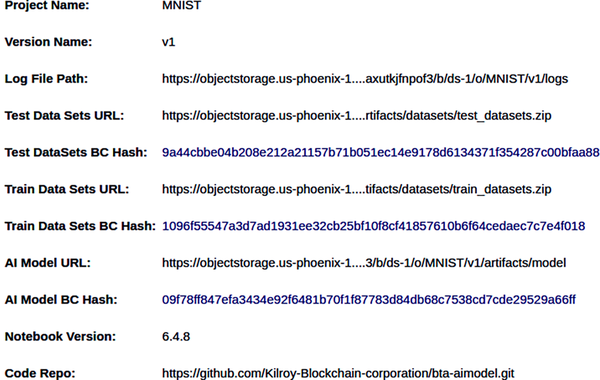

Further down on the Version Details screen, as shown in Figure 4-6, are details that help you to verify the results of the experiment and the steps taken to achieve the results. These details include the path to the log file, links to the test and training data along with blockchain hashes that can be used to verify that the data has not undergone tampering, the URL of the model, a hash that can be used to verify its code, and a link to the code repository.

Figure 4-6. Version details

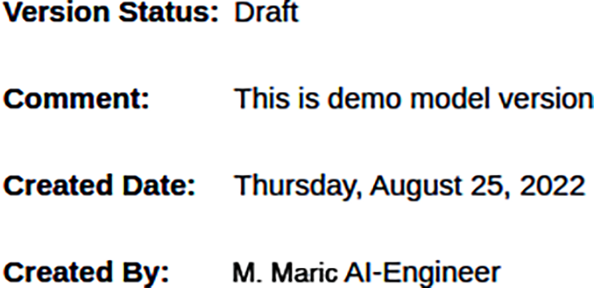

The status of the version and details about its origin are also displayed on the Version Details screen, as shown in Figure 4-7. This status indicates whether the version is still a draft or if it has been put into production, along with information about when it was created and the name of the AI engineer who created it.

Figure 4-7. Version status and origin details

As shown in Figure 4-8, the Version Details page displays a Log File BC Hash. This is the value stored on blockchain that can be used later to prove the log file has not undergone tampering.

Figure 4-8. Log file hash that will only compute to the same value if the log has not changed

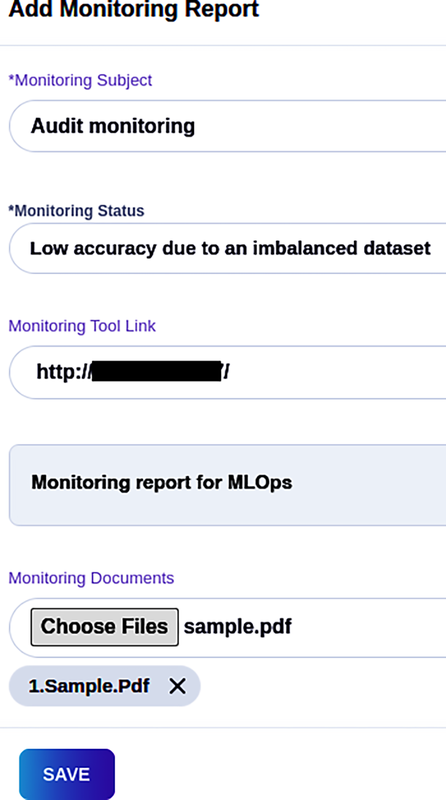

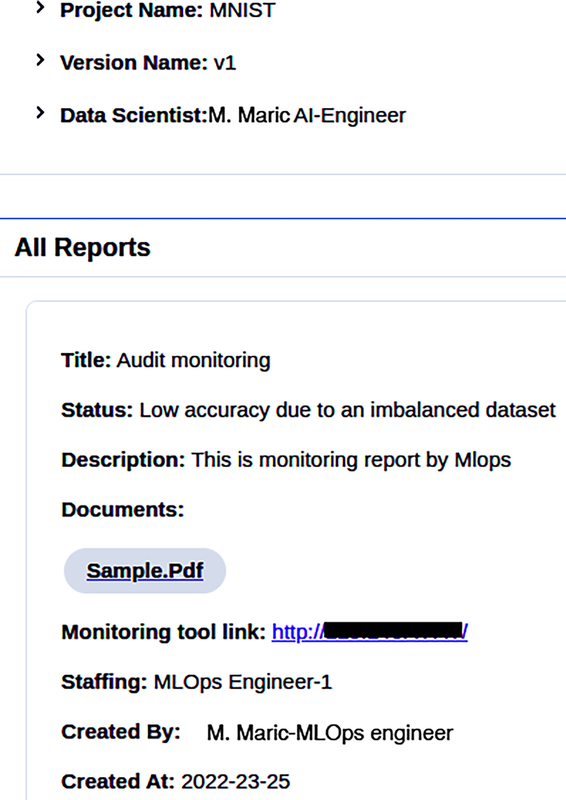

The MLOps engineer completes their review, as shown in Figure 4-9, using the Add Monitoring Report form.

Figure 4-9. Adding a new monitoring report in the BTA

The AI engineer can gather input from the MLOps engineer by reviewing the Monitoring Report, as shown in Figure 4-10.

Figure 4-10. BTA monitoring report interface

Tip

If this were a real scenario, you would want to also include peer reviews, which means that in addition to the MLOps engineer and the stakeholder, other AI engineers could add their reviews of a model.

The AI engineer reviews and implements the required changes and submits the model again for the review. This cycle continues until the stakeholder accepts the product or until some other limitation, like permitted time or budget, has been reached.

After the model is reviewed and approved, it is deployed onto a production server and closely monitored by the MLOps engineer and stakeholder in a continuous feedback, improvement, and maintenance loop.

The BTA’s records help users with an oversight role to be certain of the provenance of the model, including who has trained the model, what data sets are used, how the model got reviewed, the model’s accuracy submitted by the AI engineer compared to the model’s accuracy submitted by the MLOps engineer, and the feedback given by peers. The BTA can be used to produce a blockchain-based audit trail of all steps and all actions done during the development and launching of the model, which is helpful to stakeholders and auditors, and which Chapter 8 explores in detail.

Note

People may switch jobs, organizations may change policies; still, the current policies need to be evaluated in order to allow or disallow a model from passing into production. Building these requirements into a BTA will help to catch, or even prevent, mistakes that result from organizational changes.

Subscribing

The BTA is multitenant, which means that different organization accounts, with different users, can exist in one implementation without impacting one another. Brand new BTA accounts are created by adding a new subscription. Subscription requests are initiated by the new organization admin on the BTA signup page, as Chapter 7 explains.

When you create a new subscription in your test BTA, make sure you do it with the email address that you plan to use for the organization admin. For your test, use any organization name and any physical address that you like. After you submit your request for a new subscription, the super admin, or built-in user who oversees all subscriptions, will be able to approve it and allow your organization admin to use the BTA to create users, upload and approve models, check the audit trail, and delegate these functions and features to other users.

At the time of subscription approval, the super admin adds an Oracle bucket to the new subscription, and creates the required blockchain node, using the Oracle Cloud account for the organization. Chapter 7 explains the complete steps for adding and approving a subscription, and for integrating it with the Oracle Cloud. Now that the account is set up, the organization admin can start working on access control and create organization units, staffings, and users.

Controlling Access

Access control by way of user permissions is the cornerstone of a multiuser, multitenant software as a service like the BTA. When different users log in, they can see different features and perform different functions within the BTA. This is accomplished by creating organization units and staffings that define the level of access, and assigning them to users. Chapter 7 has more detail on how to set up access control and users. This section provides an overview to give you a high-level understanding of how access is managed.

Organization Units

Organization units are intended to be logical groupings of features that are assigned to people with similar roles. In your BTA, you have an organization unit called AI engineers, another called MLOps engineers, and another called stakeholders. Within these organization units, sets of features that fit the role are selected, making these features available to any user assigned to this organization unit.

Staffings

Staffings fall within organization units and are intended to restrict users’ create-read-write-update access within the features. For this BTA example, we only have one staffing for each organization unit. In a production scenario, you may have multiple staffings within each organization unit.

Tip

If you don’t see a feature in the staffing list, make sure it is enabled in the staffing’s organization unit.

Users

The BTA offers role-based access control (RBAC) implemented through organization units and staffings. This way, the depth of the information displayed to any individual user can be controlled by placing that user in an organization unit and staffing appropriate to their level of authorized access. It is critical to create traceable credentials and well-thought-out permission schemes to have a system that lets the right participants see the right information, and keeps the wrong participants out.
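The two-level scheme can be sketched as follows: an organization unit enables features, and a staffing grants actions within those features. This is an illustrative model only; the class names, feature names, and action names are hypothetical and are not taken from the BTA code.

```python
from dataclasses import dataclass, field


@dataclass
class OrganizationUnit:
    name: str
    features: set = field(default_factory=set)


@dataclass
class Staffing:
    name: str
    unit: OrganizationUnit
    # Maps a feature name to the actions allowed within it.
    permissions: dict = field(default_factory=dict)


def is_allowed(staffing: Staffing, feature: str, action: str) -> bool:
    """A feature must be enabled in the unit before a staffing can grant actions on it."""
    if feature not in staffing.unit.features:
        return False
    return action in staffing.permissions.get(feature, set())


# Hypothetical feature and action names.
ai_engineers = OrganizationUnit("AI engineers", {"model-version"})
ai_staffing = Staffing(
    "AI engineer staffing",
    ai_engineers,
    {"model-version": {"create", "read", "update"}, "model-review": {"read"}},
)

print(is_allowed(ai_staffing, "model-version", "create"))  # True
print(is_allowed(ai_staffing, "model-review", "read"))     # False: not enabled in the unit
```

Checking the organization unit first mirrors the rule that a feature only appears in a staffing when it is enabled in that staffing's organization unit.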

As you have read throughout this chapter, there are five types of users that you will use in your test BTA: super admin, organization admin, stakeholders, AI engineers, and MLOps engineers.

When a user is added, they get login credentials from the BTA application. These credentials include a username, a password, and a blockchain key. The key is generated at the blockchain end by hashing the user’s wallet info with SHA-256, a collision-resistant hash function. Collision resistant means that it is computationally infeasible to find two inputs that hash to the same output.

Tip

If this were a production project, some good questions to ask yourself when determining participants are: Who is on the team? How are they credentialed? What sort of organizations and roles should they be grouped into? What is the procedure when someone leaves or joins?

Super admin

The super admin is a special user that is automatically created when you instantiate your BTA. The super admin can inspect any subscriber’s authenticity and either verify or decline any organization’s subscription. The super admin is assigned their own blockchain node, where all approved or declined subscription requests are stored.

Organization admin

Once the subscription is approved by the super admin, the BTA allows the new organization admin to log in. An organization admin has permission to manage the users for their subscription by assigning the correct roles and permissions. The organization admin plays an important role in using the BTA by creating the project, organization units (departments), staffings, and users.

The organization admin creates all required organization units and permissions before creating the users. While different kinds of permissions for model creation, submission, review, deployment, and monitoring can be set in the BTA, these exercises suggest that you follow the steps outlined in Chapter 7 for setting the user permissions so you have a system that will work properly; then you can safely make changes to test new ideas.

Tip

It is important to understand that after creating all required permissions, the organization admin needs to create at least one AI engineer, MLOps engineer, and stakeholder to start a project. Since these users must approve or reject a model, a project cannot be created until at least one user of each type exists. To let a user work with the blockchain, the organization admin should assign a blockchain peer and channel name to each user.

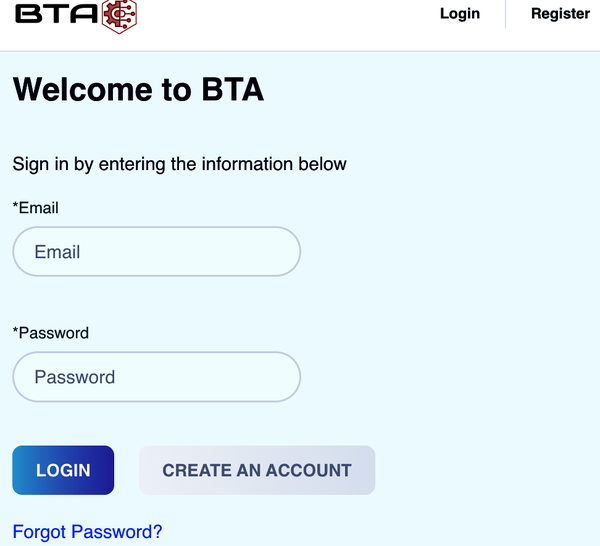

AI engineer

After the organization admin creates the AI engineer, the user receives an activation email. The email contains the username and password. It also contains the blockchain key created using the user’s blockchain wallet info like a public key. The SHA-256 algorithm is used to create the key. In the sample project in this chapter, the user needs to first enter their credentials on the login page, as shown in Figure 4-11.

Figure 4-11. Login page

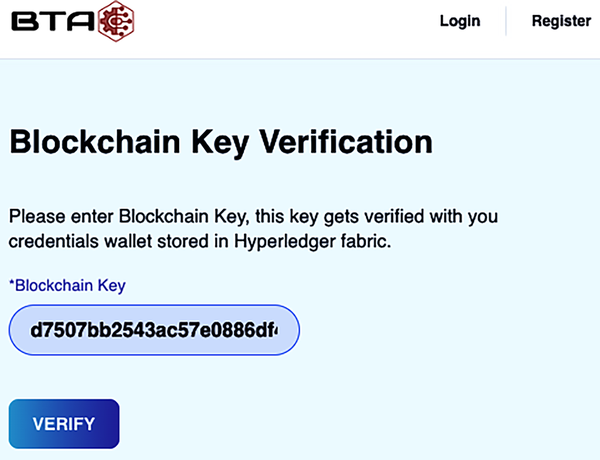

Next, the user is asked to supply their blockchain key to pass the second layer of security of the application, as illustrated in Figure 4-12.

This feature is built to address blockchain control 1 (pre-establish identity and workflow criteria for people and systems). The identity created in blockchain is used in the web application through the Blockchain Key Verification page.
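The second login step can be illustrated with a short sketch. It assumes the key is simply the SHA-256 hex digest of the wallet info, which is a simplification of what the text describes; the function names and the placeholder wallet string are hypothetical.

```python
import hashlib
import hmac


def derive_blockchain_key(wallet_info: str) -> str:
    """Hash the wallet info (for example, a public key) into a hex key."""
    return hashlib.sha256(wallet_info.encode("utf-8")).hexdigest()


def verify_blockchain_key(submitted_key: str, wallet_info: str) -> bool:
    """Second login step: compare the submitted key with the expected one."""
    expected = derive_blockchain_key(wallet_info)
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(submitted_key, expected)


wallet_info = "hypothetical-public-key-material"  # placeholder, not a real key
issued_key = derive_blockchain_key(wallet_info)

print(verify_blockchain_key(issued_key, wallet_info))  # True
print(verify_blockchain_key("0" * 64, wallet_info))    # False
```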

Tip

When the organization admin creates the account in Oracle Cloud, Oracle Cloud sends the user an email that includes their Oracle Cloud login credentials. These details have to be entered into the user’s configuration so they can be passed to the cloud by the BTA.

After the activation of the BTA application account and Oracle Cloud account, the user logs in to the BTA application where all AI projects assigned to them are listed. The engineer should be able to start model development using Oracle Cloud–based data science resources. After a model is created, the engineer can log in to the BTA web application, select a project assigned to them, and start creating a new version of the recently built model. In the sample project, the user can create a new version of the model but keep it as a draft. Draft status allows the user to come back and change the model info in the web application. Once the model is ready to submit, the user can submit it for review by the MLOps engineer. After submitting the model, the user cannot change anything in the submitted version.

Figure 4-12. Blockchain key verification page after passing first login

After creating a model, the logs and artifacts should be stored in the user’s bucket in the folder hierarchy shown in the following list:

- Project Name
  - Version Name (V1)
    - Artifacts
      - Train Data Set
      - Test Data Set
    - Log
      - Exp1.json
      - Exp(n).json
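The folder hierarchy above can be expressed as object paths. The sketch below assumes that the Log folder sits beside the Artifacts folder under each version and that experiment logs are numbered from 1; the `bucket_paths` helper is hypothetical, and "TSD" is used only as an example project name.

```python
from pathlib import PurePosixPath


def bucket_paths(project: str, version: str, experiments: int) -> dict:
    """Build object paths for one model version, following the hierarchy above."""
    root = PurePosixPath(project) / version
    return {
        "train": str(root / "Artifacts" / "Train Data Set"),
        "test": str(root / "Artifacts" / "Test Data Set"),
        "logs": [
            str(root / "Log" / f"Exp{n}.json") for n in range(1, experiments + 1)
        ],
    }


paths = bucket_paths("TSD", "V1", experiments=2)
print(paths["train"])  # TSD/V1/Artifacts/Train Data Set
print(paths["logs"])   # ['TSD/V1/Log/Exp1.json', 'TSD/V1/Log/Exp2.json']
```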

MLOps engineer

The MLOps engineer reviews and deploys the submitted models, and can see all projects in the BTA, including multiple versions of each project. The MLOps engineer downloads the model and runs it in their own data science environment, where test data and logs are stored in the user’s own bucket following the folder format discussed in the AI engineer’s role.

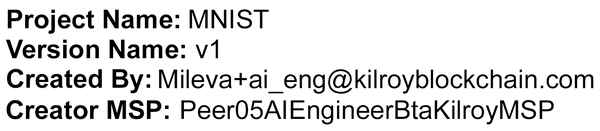

Before the review process starts, the MLOps engineer makes sure that the data set submitted by the AI engineer has not changed by comparing the current hashes of the logs and artifacts with the hashes recorded before the run. Figure 4-13 shows basic information about the project such as the name, version, engineer’s name, and the blockchain membership service provider, or MSP, which verifies the identity of the version’s creator.

Figure 4-13. Model details

The Verify BC Hash section of the Model Blockchain History screen in BTA compares blockchain hashes of the log file, test data set, training data set, and AI model against the hashes of current bucket data to prove that data tampering has not taken place, as shown in Figure 4-14.

Figure 4-14. The Verify BC Hash section of the Model Blockchain History screen in BTA

If the hash verification passes, it proves that artifacts and logs have not been tampered with, thus addressing blockchain control 2.
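The comparison behind this kind of check can be sketched in a few lines. The example recomputes SHA-256 digests for the current objects and compares them with the digests recorded at submission. The asset names and byte contents are stand-ins, and `verify_assets` is a hypothetical helper rather than the BTA's own function.

```python
import hashlib


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def verify_assets(recorded_hashes: dict, current_data: dict) -> dict:
    """Return {asset name: True if the current bytes still match the recorded hash}."""
    results = {}
    for name, recorded in recorded_hashes.items():
        data = current_data.get(name)
        # A missing object counts as a failed verification.
        results[name] = data is not None and sha256_hex(data) == recorded
    return results


# Stand-in contents for objects that would normally be read from the bucket.
submitted = {"log_file": b'{"accuracy": 0.95}', "test_dataset": b"test images"}
recorded_hashes = {name: sha256_hex(data) for name, data in submitted.items()}

# Simulate a log file edited after submission.
current = dict(submitted, log_file=b'{"accuracy": 0.99}')
report = verify_assets(recorded_hashes, current)
print(report)  # {'log_file': False, 'test_dataset': True}
```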

Tip

As with the AI engineer, the MLOps engineer should activate two accounts before starting. These are the Oracle account and the BTA application account. With successful account activation and login, the user gets access to the OCI Data Science resources, the BTA application, and the blockchain peer.

When the review process starts, the MLOps engineer can change the status of the model to “Reviewing” so that the other project participants know that the review process has begun. After reviewing the model, the MLOps engineer can change the status of the model to “Review Passed” or “Review Failed.” (A model gets “Review Passed” if the accuracy of the model reviewed by the MLOps engineer is greater than the AI engineer’s accuracy retrieved from the submitted log file.)
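The pass rule in the parenthetical above can be written as a small function. This sketch assumes the submitted log is JSON with an `accuracy` field, which is a hypothetical schema; the real log format may differ.

```python
import json


def review_outcome(submitted_log: str, reviewer_accuracy: float) -> str:
    """Apply the rule from the text: pass only if the reviewer's accuracy is greater."""
    # "accuracy" is a hypothetical key; the real log schema may differ.
    submitted_accuracy = json.loads(submitted_log)["accuracy"]
    if reviewer_accuracy > submitted_accuracy:
        return "Review Passed"
    return "Review Failed"


submitted_log = '{"experiment": "Exp1", "accuracy": 0.95}'
print(review_outcome(submitted_log, 0.96))  # Review Passed
print(review_outcome(submitted_log, 0.95))  # Review Failed (not strictly greater)
```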

Once the review passes, the MLOps engineer changes the model’s status to “Deployed” and releases a testing/deployed URL for the rest of the users. They can add ratings of the model and production URLs, review the version number, or add a log-and-test data set that is pulled by the script based on the version number. Because an MLOps engineer adds review details, the stakeholder or end user knows why the model was approved and deployed. This helps in governance and auditing of the AI model, which addresses blockchain control 3.

Additionally, a team review helps to make the model more authentic. Screenshots of a bug or issue with the model can be compiled into one document and uploaded to the BTA. The model’s status can be set to QA to indicate it is under review, which is noted on blockchain. This creates a tamper-evident audit trail of deployment issues. The MLOps engineer can then launch the model to production if reviews after deployment are positive. The engineer should then change the status of the model to “Production.” They can also update the status to “Monitoring,” which allows for feedback if bugs or other issues are found in the launched AI model.
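The statuses mentioned so far can be pictured as a simple state machine. The transition table below is an assumption inferred from the status names used in this chapter, not a specification of the BTA; in particular, sending a failed review back to “Pending” on resubmission is a guess.

```python
# Assumed transitions, inferred from the status names used in this chapter.
ALLOWED_TRANSITIONS = {
    "Draft": {"Pending"},
    "Pending": {"Reviewing"},
    "Reviewing": {"Review Passed", "Review Failed"},
    "Review Failed": {"Pending"},  # assumption: the amended model is resubmitted
    "Review Passed": {"Deployed"},
    "Deployed": {"QA", "Production"},
    "QA": {"QA", "Production"},
    "Production": {"Monitoring"},
    "Monitoring": {"Complete"},
    "Complete": set(),
}


def change_status(current: str, new: str) -> str:
    """Return the new status, or raise ValueError if the move is not allowed."""
    if new not in ALLOWED_TRANSITIONS.get(current, set()):
        raise ValueError(f"cannot move from {current!r} to {new!r}")
    return new


status = "Draft"
for step in ["Pending", "Reviewing", "Review Passed", "Deployed", "QA", "Production"]:
    status = change_status(status, step)
print(status)  # Production
```

Rejecting disallowed moves in one place keeps the recorded status history consistent with the workflow, whatever the exact transition table turns out to be.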

Stakeholder

Stakeholders pass through the registration and account activation process in a similar fashion to the AI and MLOps engineers, but with different staffing assignments. Stakeholder users get the staffing permissions that allow the user to review, complete, or cancel the AI model. The stakeholder gets access to the blockchain node, but it is not necessary to get access to the Oracle Cloud account as the user is not going to develop an AI model. The stakeholder user, as the decision maker, can either cancel or complete the project.

The stakeholder is able to review the deployed model and monitor the production-based model. The stakeholder is able to see who has submitted the model, the accuracy of the model, and compare the hashes of the logs and artifacts before and after they deploy to prove the provenance of the artifacts. All this information is pulled from the blockchain node along with the transaction ID and timestamp, so it addresses blockchain control 4.

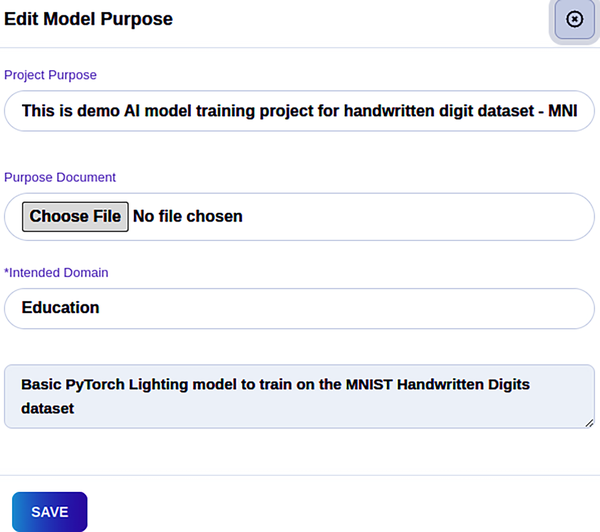

The stakeholder can add or update a purpose for the model, as shown in Figure 4-15, and review, monitor, audit, and approve or decline the model.

Figure 4-15. A stakeholder updates a purpose for a model in the BTA

Analyzing the Use Case

Chapter 1 discussed defining your blockchain use case. Figure 1-13 illustrates how to determine the participants, assets, and transactions for blockchain networks. Now you can apply this process to your BTA use case, and determine how to address both the business logic and the technical requirements of the BTA’s blockchain component.

Participants

A participant does some action that involves writing to the blockchain, while a user is anyone who logs in to the BTA web user interface. As such, an AI engineer is both a user of the BTA and a participant in the blockchain network because their approvals and comments need to leave a tamper-evident trail. An auditor user may not need to leave such a trail, because they are only reading records and auditing them. In this case, the auditor is a user of the BTA, but not a participant of the blockchain network.

Assets

Models, code repositories, logs, artifacts, and reports are the assets you will use when building the example BTA. Models are the AI models before and after training epochs and experiments. Repositories are where the code is stored. Logs are the temporary historical records that are produced during the epochs, similar to text logs found for any other computer system. Artifacts are defined as training and test data sets that have been used in previous training and testing. Reports are produced by the BTA or by supporting systems.

You might think that the BTA is supposed to log all data for each and every activity on blockchain. However, your BTA does not require that all data be logged on blockchain and it does not record each and every transaction. There is a good reason for not storing all assets like logs and artifacts into blockchain and into applications: the files themselves are too large and numerous, and storing the objects there isn’t a good use for blockchain. Also, because there might be millions of objects like these in an ML system, storing them on blockchain distributed nodes will ultimately slow down the blockchain network and affect the performance.

It is better to put objects into a cloud-based object store with a pointer to the object and a hash of the object stored on blockchain. To make sure that the data set or log produced is tamper evident (the info has not been changed from the date of submission), the BTA stores the hash of those files (log and artifacts) in blockchain using the SHA-256 hashing algorithm, as detailed in Chapter 2.
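A minimal sketch of the pointer-plus-hash idea follows. It hashes an object in chunks, so a large file never has to fit in memory, and builds the small record that would be kept on blockchain. The record layout, the helper names, and the placeholder URL are assumptions for illustration.

```python
import hashlib
import io


def hash_stream(stream, chunk_size: int = 1024 * 1024) -> str:
    """Compute a SHA-256 hex digest by reading a binary stream in chunks."""
    digest = hashlib.sha256()
    for chunk in iter(lambda: stream.read(chunk_size), b""):
        digest.update(chunk)
    return digest.hexdigest()


def make_onchain_record(object_url: str, stream) -> dict:
    """Build the small record kept on chain: a pointer plus the object's hash."""
    return {"url": object_url, "sha256": hash_stream(stream)}


# io.BytesIO stands in for a large file opened from the bucket.
payload = b"epoch,accuracy\n1,0.91\n2,0.95\n"
record = make_onchain_record(
    "https://example.com/bucket/TSD/V1/Log/Exp1.json",  # placeholder URL
    io.BytesIO(payload),
)
print(record["sha256"] == hashlib.sha256(payload).hexdigest())  # True
```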

At the time of model verification, the MLOps engineer makes sure the hash of the log or data set they get while running the model in their instance matches the hash of those files submitted by an AI engineer. If the hash is not the same, it indicates that the data set or log info has been changed outside of the accepted workflow. In that scenario, the MLOps engineer will not deploy the model. Instead, the model gets the status “Review Failed” and the AI engineer gets a chance to amend it based on this feedback.

When the MLOps engineer or stakeholder reviews the model, the channel (global-channel) receives the data and sends it to the stakeholder, MLOps engineer, and AI engineer’s peers. In this way, data gets shared among different peers. This architecture is designed for a project in which each participant belongs to a different organization, and it grows as new projects are added. This architecture is responsible for achieving all four blockchain controls mentioned in Chapter 2.

Transactions

As discussed in Chapter 1, a transaction is what occurs when a participant takes some significant action with an asset, like when an MLOps engineer approves a model. When other participants comment on the model, those are also transactions, and are recorded on blockchain.

Thinking through your transactions is usually how you find the information that belongs in the audit trail you will store on blockchain: the blockchain touchpoints. Think of them as important points within the lifecycle of your model, timestamped and recorded on the blockchain sequentially so they can be easily reviewed and understood.

Smart Contracts

As you learned in Chapter 1, the term smart contract is used to refer either to functions within the chaincode that drive the posting of blocks to the blockchain, or the business logic layer of the application that drives workflow. In your BTA, smart contracts are used to automate the acceptance or rejection of the results of a model training event, and to kick off rounds of discussion about why the model is being accepted or rejected and what to do about it.

The workflow smart contracts used in your BTA require only one participant to move something into a different status, such as QA. In a nontest scenario, this workflow can be as complex as the business requires; for example, it might require a round of voting or some other condition to be fulfilled (e.g., QA has to be performed for two weeks before the model can be put into production).
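The kind of condition described here can be sketched as a guard function. This is plain Python that illustrates the business rule only, not chaincode; the function name, the approval counter, and the default of one required approval are assumptions.

```python
from datetime import datetime, timedelta, timezone

MIN_QA_PERIOD = timedelta(weeks=2)  # taken from the two-week example in the text


def may_enter_production(qa_started: datetime, now: datetime,
                         approvals: int, required_approvals: int = 1) -> bool:
    """Allow promotion only with enough approvals and a long enough QA period."""
    enough_votes = approvals >= required_approvals
    enough_time = now - qa_started >= MIN_QA_PERIOD
    return enough_votes and enough_time


qa_started = datetime(2022, 6, 25, 13, 45, 24, tzinfo=timezone.utc)
print(may_enter_production(qa_started, qa_started + timedelta(days=7), approvals=1))   # False
print(may_enter_production(qa_started, qa_started + timedelta(days=14), approvals=1))  # True
```

Raising `required_approvals` turns the single-participant rule into a round of voting without changing the rest of the check.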

Audit Trail

Chapter 1 explained in detail how to determine and document blockchain touchpoints. This section guides you through how to design the audit trail for your BTA.

The purpose of adding blockchain to an AI supply chain is to create a way to trace and prove an asset’s provenance. Therefore, it is very important to make sure all of the critical events are recorded onto blockchain. The BTA project allows AI engineers to develop the AI model using whatever toolsets, frameworks, and methodologies they prefer. Their development activities are not logged into the blockchain because there would be tons of trial and error in the code, which may not make sense to record because it would make the blockchain too big and unwieldy.

For this reason, the sample BTA focuses on the participants, assets, and transactions surrounding the model submitted by the AI engineer to the MLOps engineer for review. Some project information from the AI factsheet, such as its purpose and key contacts, is part of the BTA-generated audit trail, as shown in Table 4-1. In this table, you can see a timestamp of when each transaction was posted to blockchain, a transaction ID, an email address associated with the participant that created the transaction, which Hyperledger Fabric MSP was used for identity and cryptography, a unique ID for the project, detail about the project, and its members.

| Timestamp | TxId | Added By | CreatorMSP | projectId | Detail | Members |
|---|---|---|---|---|---|---|
| 2022-07-10T16:44:24Z | 52628...6d2365 | OrgAdmin@... | PeerOrg1MainnetBtaKilroyMSP | 628b6c2...fa45625 | Project: TSD Domain: Transportation Traffic sign detection is the process... | aiengineer@..., mlopsengineer@..., stakeholder@... |
| 2022-04-11T16:44:24Z | 726b7...6d2369 | OrgAdmin@... | PeerOrg1MainnetBtaKilroyMSP | 428b6c...562569 | Project: HDR Domain: Education The handwritten digit recognition... | aiengineer@..., mlopsengineer@..., stakeholder@... |

The BTA also stores transactions that relate to when the model was tested, along with hashes of the log file, test data set, and training data set, as shown in Table 4-2. By adding version status, this becomes an easy-to-understand audit trail of the training cycle of the model.

| project id: | 428b6c252a41ae5fa4562569 |

| Timestamp | TxId | CreatorMSP | versionId | Version | logFileBCHash | testDatasetBCHash | trainDatasetBCHash | versionStatus |
|---|---|---|---|---|---|---|---|---|
| 2022-07-11T15:24:24Z | 933d0...c7573 | PeerMLOpsEngineerMainnetBtaKilroyMSP | 60e6...55298 | 1 | 68878...99ce91c6 | e5af4...385dd | 1dd49...9107d | Review Passed |
| 2022-07-11T14:24:24Z | d091a...9221 | PeerAIEngineerMainnetBtaKilroyMSP | 60e6f...55276 | 2 | bef57...892c4721 | 7d1a5...d4da9a | 4fb9...1f6323 | Pending |
| 2022-07-10T19:24:24Z | 75bc...52946 | PeerStakeHolderMainnetBtaKilroyMSP | 62ca8...7562 | 3 | 88d4...f031589 | ba78...0015ad | 36bb...4ca42c | Complete |
| 2022-07-08T19:24:24Z | 2e6b...9a4b | PeerMLOpsEngineerMainnetBtaKilroyMSP | 60e6f...5255 | 4 | ab07...9ju6a97 | 1b60e...ca6eef | 179e...98b72c | Review Failed |

The status of a trained model, its version details, and the corresponding URL are also recorded in your BTA. Table 4-3 shows how this might look to a participant using your BTA to audit the AI.

| Version ID: | 62ca8a8b3c1f1f70949d7562 |

| versionName: | v3.0 |

| logFileVersion: | v1.0 |

| logFileBCHash: | 88d42...31589 |

| testDatasetBCHash: | ba781...015ad |

| trainDatasetBCHash: | 36bbe...ca42c |

| Timestamp | TxId | CreatorMSP | DeployedURL | ModelReviewId | ProductionURL | versionStatus |
|---|---|---|---|---|---|---|
| 2022-07-10T19:24:24Z | 75bc7c8094...5c3d652946 | PeerStakeHolderMainnetBtaKilroyMSP | - | - | - | Complete |
| 2022-07-10T17:44:24Z | 6c4a63e39f...2b7463cdf8b | PeerMLOpsEngineerMainnetBtaKilroyMSP | - | - | - | Monitoring |
| 2022-06-15T13:45:24Z | 17fa18dcab...df34rsdf984 | PeerMLOpsEngineerMainnetBtaKilroyMSP | - | - | http://productionurl.com | Production |
| 2022-06-27T13:45:24Z | 27fa18dcab...76224d3b7 | PeerStakeHolderMainnetBtaKilroyMSP | - | - | - | QA |
| 2022-06-25T13:45:24Z | sdfsdf3dc...3234nisdfms | PeerMLOpsEngineerMainnetBtaKilroyMSP | - | - | - | QA |
| 2022-06-23T13:45:24Z | 34dfd32j...324234sdf32s | PeerMLOpsEngineerMainnetBtaKilroyMSP | - | - | - | Deployed |
| 2022-06-16T16:44:24Z | 476254942...9b55efe32 | PeerMLOpsEngineerMainnetBtaKilroyMSP | - | - | - | Review Passed |
| 2022-06-15T12:40:24Z | 6685c676bd...4ad39c17 | PeerMLOpsEngineerMainnetBtaKilroyMSP | - | - | - | Reviewing |
| 2022-06-10T11:45:24Z | da23812033...670c3146f45 | PeerAIEngineerMainnetBtaKilroyMSP | - | - | - | Pending |
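An auditor reading entries like these would typically want them in chronological order. The sketch below sorts a few rows by timestamp to reconstruct the status history; it uses four rows copied from the table above, and the dictionary layout and `status_history` helper are hypothetical.

```python
from datetime import datetime

TIMESTAMP_FORMAT = "%Y-%m-%dT%H:%M:%SZ"

# Four rows copied from the table above (transaction IDs omitted).
entries = [
    {"timestamp": "2022-06-23T13:45:24Z", "versionStatus": "Deployed"},
    {"timestamp": "2022-06-16T16:44:24Z", "versionStatus": "Review Passed"},
    {"timestamp": "2022-06-15T12:40:24Z", "versionStatus": "Reviewing"},
    {"timestamp": "2022-06-10T11:45:24Z", "versionStatus": "Pending"},
]


def status_history(rows: list) -> list:
    """Return the version statuses ordered from oldest to newest transaction."""
    ordered = sorted(
        rows, key=lambda row: datetime.strptime(row["timestamp"], TIMESTAMP_FORMAT)
    )
    return [row["versionStatus"] for row in ordered]


print(status_history(entries))
# ['Pending', 'Reviewing', 'Review Passed', 'Deployed']
```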

Part of auditing a blockchain is having the ability to check the hashes and request a change. This is addressed in Chapter 8, where you can also find more screenshots that show how the BTA is intended to look and work.

Summary

In Chapter 4 you learned about your AI model and what it does by reviewing its factsheet. You took a look at the various user personas in more depth, as well as how system access is controlled for each. Finally, you started looking at the BTA blockchain use case in more depth, analyzing the participants, assets, and transactions, and exploring the sort of audit trail that is possible with blockchain.

In Chapter 5 you will move on to setting up your Oracle Cloud instance and running your model.
