Chapter 1. Introduction to Serverless on AWS

The secret of getting ahead is getting started.

Mark Twain

Welcome to serverless! You are stepping into the vibrant ecosystem of a modern, exciting computing technology that has radically changed how we think of software, challenged preconceptions about how we build applications, and enabled cloud development to be more accessible for everyone. It’s an incredible technology that many people love, and we’re looking forward to teaching you all about it.

The software industry is constantly evolving, and the pace of evolution in software engineering is faster than in just about any other discipline. The speed of change disrupts many organizations and their IT departments. However, for those who are optimistic, change brings opportunities. When enterprises accept change and adjust their processes, they lead the way. In contrast, those who resist change get trampled by competition and consumer demands.

We’re always looking for ways to improve our lives. Human needs and technology go hand in hand. Our evolving day-to-day requirements demand ever-better technology, which in turn inspires innovation. When we realize the power of these innovations, we upgrade our needs, and the cycle continues. The industry has been advancing in this way for decades, not in giant leaps all the time but in small increments, as one improvement triggers the next.

To fully value serverless and the capabilities it offers, it is beneficial to understand its history. To stay focused on our subject without traveling back in time to the origins of bits and bytes, we’ll start with the cloud and how it became a force to reckon with.

Sit back, relax, and get ready to journey through the exciting world of serverless!

The Road to Serverless

During the early 2000s, I (Sheen) was involved in building distributed applications that mainly communicated via service buses and web services—a typical service-oriented architecture (SOA). It was during this time that I first came across the term “the cloud,” which was making a few headlines in the tech industry. A few years later, I received instructions from upper management to study this new technology and report on certain key features. The early cloud offering that I was asked to explore was none other than Amazon Web Services.

My quest to get closer to the cloud started there, but it took me another few years to fully appreciate and understand the ground-shifting effect it was having in the industry. With the butterfly effect in mind, it was fascinating to consider how past events had brought us to the present.

Note

The butterfly effect is a term used to refer to the concept that a small change in the state of a complex system can have nonlinear impacts on the state of that system at a later point. The most common example cited is that of a butterfly flapping its wings somewhere in the world acting as a trigger to cause a typhoon elsewhere.

From Mainframe Computing to the Modern Cloud

During the mid-1900s, mainframe computers became popular due to their vast computing power. Though massive, clunky, highly expensive, and laborious to maintain, they were the only resources available to run complex business and scientific tasks. Only a lucky few organizations and educational institutions could afford them, and they ran jobs in batch mode to make the best use of the costly systems. The concept of time-sharing was introduced to schedule and share the compute resources to run programs for multiple teams (see Figure 1-1). This distribution of the costs and resources made computing more affordable to different groups, in a way similar to the on-demand resource usage and pay-per-use computing models of the modern cloud.

![Mainframe computer time-sharing (source: adapted from an image in Guide to Operating Systems by Greg Tomsho [Cengage])](/api/v2/epubs/9781098141929/files/assets/sdoa_0101.png)

Figure 1-1. Mainframe computer time-sharing (source: adapted from an image in Guide to Operating Systems by Greg Tomsho [Cengage])

The emergence of networking

Early mainframes were independent and could not communicate with one another. The idea of an Intergalactic Computer Network or Galactic Network to interconnect remote computers and share data was introduced by computer scientist J.C.R. Licklider, fondly known as Lick, in the early 1960s. The Advanced Research Projects Agency (ARPA) of the United States Department of Defense pioneered the work, which was realized in the Advanced Research Projects Agency Network (ARPANET). This was one of the early network developments that used the TCP/IP protocol, one of the main building blocks of the internet. This progress in networking was a huge step forward.

The beginning of virtualization

The 1970s saw another core technology of the modern cloud taking shape. In 1972, IBM’s release of the Virtual Machine Operating System made it possible to host multiple operating environments within a single mainframe. Building on the early time-sharing and networking concepts, virtualization filled in the other main piece of the cloud puzzle. The speed of technology iterations of the 1990s brought those ideas to realization and took us closer to the modern cloud. Virtual private networks (VPNs) and virtual machines (VMs) soon became commodities.

The term cloud computing originated in the mid to late 1990s. Some attribute it to computer giant Compaq Corporation, which mentioned it in an internal report in 1996. Others credit Professor Ramnath Chellappa and his lecture at INFORMS 1997 on an “emerging paradigm for computing.” Regardless, with the speed at which technology was evolving, the computer industry was already on a trajectory for massive innovation and growth.

The first glimpse of Amazon Web Services

As virtualization technology matured, many organizations built capabilities to automatically or programmatically provision VMs for their employees and to run business applications for their customers. An ecommerce company that made good use of these capabilities to support its operations was Amazon.com.

In the early 2000s, engineers at Amazon were exploring how their infrastructure could efficiently scale up to meet the increasing customer demand. As part of that process, they decoupled common infrastructure from applications and abstracted it as a service so that multiple teams could use it. This was the start of the concept known today as infrastructure as a service (IaaS). In the summer of 2006, the company launched Amazon Elastic Compute Cloud (EC2) to offer virtual machines as a service in the cloud for everyone. That marked the humble beginning of today’s mammoth Amazon Web Services, popularly known as AWS!

Cloud deployment models

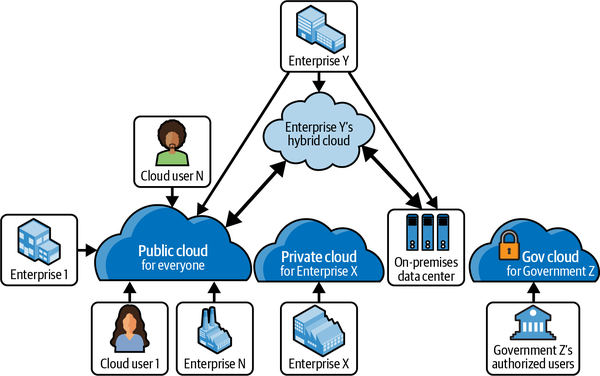

As cloud services gained momentum thanks to the efforts of companies like Amazon, Microsoft, Google, Alibaba, IBM, and others, they began to address the needs of different business segments. Different access models and usage patterns started to emerge (see Figure 1-2).

Figure 1-2. Figurative comparison of different cloud environments

These are the main variants today:

- Public cloud: The cloud service that the majority of us access for work and personal use is the public cloud, where the services are accessed over the public internet. Though cloud providers use shared resources in their data centers, each user’s activities are isolated with strict security boundaries. This is commonly known as a multitenant environment.

- Private cloud: In general, a private cloud is a corporate cloud where a single organization has access to the infrastructure and the services hosted there. It is a single-tenant environment. A variant of the private cloud is the government cloud (for example, AWS GovCloud), where the infrastructure and services are specifically for a particular government and its organizations. This is a highly secure and controlled environment operated by the respective country’s citizens.

- Hybrid cloud: A hybrid cloud uses both public and private cloud or on-premises infrastructure and services. Maintaining these environments requires clear boundaries on security and data sharing.

The Influence of Running Everything as a Service

The idea of offering something “as a service” is not new or specific to software. Public libraries are a great example of providing information and knowledge as a service: we borrow, read, and return books. Leasing physical computers for business is another example, which eliminates spending capital on purchasing and maintaining resources. Instead, we consume them as a service for an affordable price. This also allows us the flexibility to use the service only when needed—virtualization changes it from a physical commodity to a virtual one.

In technology, one opportunity leads to several opportunities, and one idea leads to many. From bare VMs, the possibilities spread to network infrastructure, databases, applications, artificial intelligence (AI), and even simple single-purpose functions. Within a short span, the idea of something as a service advanced to a point where we can now offer almost anything and everything as a service!

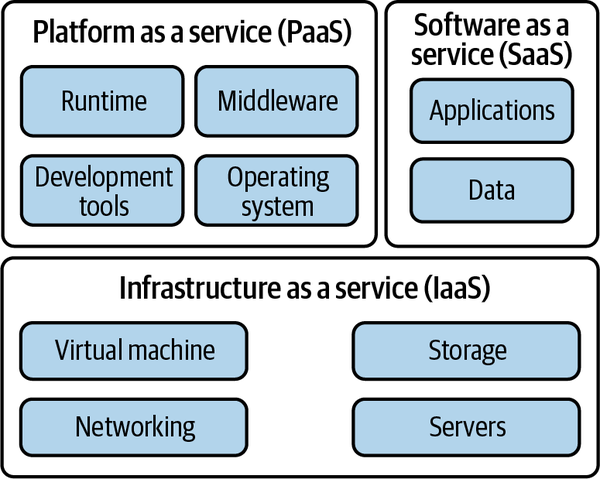

Infrastructure as a service (IaaS)

IaaS is one of the fundamental cloud services, along with platform as a service (PaaS), software as a service (SaaS), and function as a service (FaaS). It represents the bare bones of a cloud platform—the network, compute, and storage resources, commonly housed in a data center. A high-level understanding of IaaS is beneficial as it forms the basis for serverless.

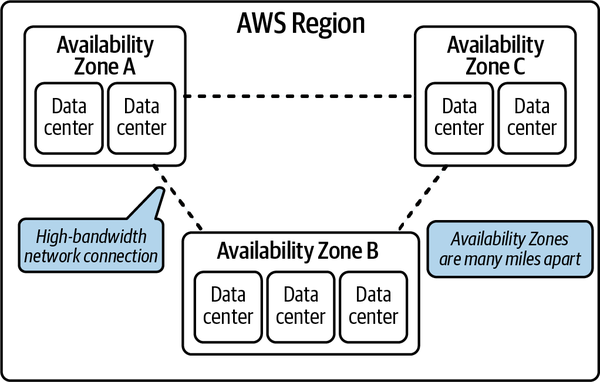

Figure 1-3 shows a bird’s-eye view of AWS’s data center layout in a given geographic area, known as a Region. To offer a resilient and highly available service, AWS has built redundancy into every Region via groups of data centers known as Availability Zones (AZs). The core IaaS offerings from AWS include Amazon EC2 and Amazon Virtual Private Cloud (VPC).

Figure 1-3. An AWS Region with its Availability Zones

Platform as a service (PaaS)

PaaS is a service abstraction layer on top of IaaS to offer an application development environment in the cloud. It provides the platform and tools needed to develop, run, and manage applications without provisioning the infrastructure, hardware, and necessary software, thereby reducing complexity and increasing development velocity. AWS Elastic Beanstalk is a popular PaaS available today.

Software as a service (SaaS)

SaaS is probably the most widely used service model and covers many of the applications we use daily, for tasks such as checking email, sharing and storing photos, streaming movies, and connecting with friends and family via conferencing services.

Besides the cloud providers, numerous independent software vendors (ISVs) utilize the cloud (IaaS and PaaS offerings) to bring their SaaS solutions to millions of users. This is a rapidly expanding market, thanks to the low costs and easy adoption of cloud and serverless computing. Figure 1-4 shows how these three layers of cloud infrastructure fit together.

Figure 1-4. The different layers of cloud infrastructure

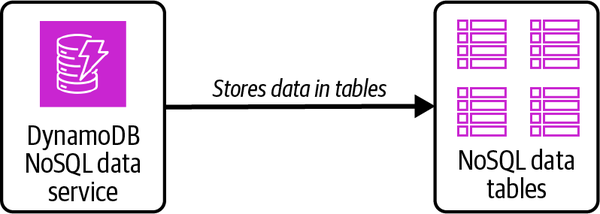

Database as a service (DBaaS)

DBaaS is a type of SaaS that covers various data storage options and operations. Along with the traditional SQL relational database management systems (RDBMSs), several other types of data stores are now available as managed services, including NoSQL, object storage, time series, graph, and search databases.

Amazon DynamoDB is one of the most popular NoSQL databases available as a service (see Figure 1-5). A DynamoDB table can store billions of item records and still provide CRUD (Create, Read, Update, and Delete) operations with single-digit or low-double-digit millisecond latency. You will see the use of DynamoDB in many serverless examples throughout this book.

Figure 1-5. Pictorial representation of Amazon DynamoDB and its data tables

Amazon Simple Storage Service (S3) is an object storage service capable of handling billions of objects and petabytes of data (see Figure 1-6). Similar to the concept of tables in RDBMSs and DynamoDB, S3 stores the data in buckets, with each bucket having its own specific characteristics.

The emergence of services such as DynamoDB and S3 is just one example of how storage needs have changed over time to cater to unstructured data, and of how the cloud enables enterprises to move away from the limited vertical scaling and mundane operation of traditional RDBMSs to focus on building cloud-scale solutions that bring value to the business.

Figure 1-6. Pictorial representation of Amazon S3 and its data buckets

Function as a service (FaaS)

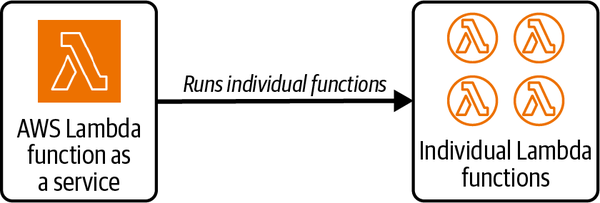

In simple terms, FaaS is a type of cloud computing service that lets you run your function code without having to provision any hardware yourself. FaaS provided a core piece of the cloud computing puzzle by bringing in the much-needed compute as a service, and it soon became the catalyst for the widespread adoption of serverless. AWS Lambda is the most used FaaS implementation available today (see Figure 1-7).

Figure 1-7. Pictorial representation of AWS Lambda service and functions

Managed Versus Fully Managed Services

Before exploring the characteristics of serverless in detail, it is essential to understand what is meant by the terms managed services and fully managed services. As a developer, you often consume APIs from different vendors to fulfill business tasks and abide by the API contracts. You do not get access to the implementation or the operation of the service, because the service provider manages them. In this case, you are consuming a managed service.

The distinction between managed and fully managed services is often blurred. Certain managed services require you to create and maintain the necessary infrastructure before consuming them. For example, Amazon Relational Database Service (RDS) is a managed service for RDBMSs such as MySQL, SQL Server, Oracle, and so on. However, its standard setup requires you to create a virtual network boundary, known as a virtual private cloud (VPC), with security groups, instance types, clusters, etc., before consuming the service. In contrast, DynamoDB is a NoSQL database service that you can set up and consume almost instantly. This is one way of distinguishing a managed service from a fully managed one. Fully managed services are sometimes referred to as serverless services.

Now that you have some background on the origins and evolution of the cloud and understand the simplicity of fully managed services, it’s time to take a closer look at serverless and see the amazing potential it has for you.

The Characteristics of Serverless Technology

Serverless is a technological concept that utilizes fully managed cloud services to carry out undifferentiated heavy lifting by abstracting away the infrastructure intricacies and server maintenance tasks.

John Chapin and Mike Roberts, the authors of Programming AWS Lambda (O’Reilly), distill this in simple terms:

Enterprises building and supporting serverless applications are not looking after that hardware or those processes associated with the applications. They are outsourcing this responsibility to someone else, i.e., cloud service providers such as AWS.

As Roberts notes in his article “Serverless Architectures”, the first uses of the term serverless seem to have occurred around 2012, sometimes in the context of service providers taking on the responsibility of managing servers, data stores, and other infrastructure resources (which in turn allowed developers to shift their focus toward tasks and process flows), and sometimes in the context of continuous integration and source control systems being hosted as a service rather than on a company’s on-premises servers. The term began to gain popularity following the launch of AWS Lambda in 2014 and Amazon API Gateway in 2015, with a focus on incorporating external services into the products built by development teams. Its use picked up steam as companies started to use serverless services to build new business capabilities, and it’s been trending ever since.

Note

During the early days, especially after the release of AWS Lambda (the FaaS offering from AWS), many people used the terms serverless and FaaS interchangeably, as if both represented the same concept. Today, FaaS is regarded as one of the many service types that form part of the serverless ecosystem.

Definitions of serverless often reflect the primary characteristics of a serverless application. Along with the definitions, these characteristics have been refined and redefined as serverless has evolved and gained wider industry adoption. Let’s take a look at some of the most important ones.

Pay-per-Use

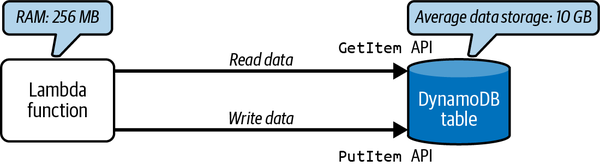

Pay-per-use is the main characteristic that everyone associates with serverless. It mainly originated from the early days of serverless, when it was equated with FaaS: you pay for each function invocation. That interpretation is valid for ephemeral services such as AWS Lambda; however, if your application handles data, you may have a business requirement to store the data for a longer period and for it to be accessible during that time. Fully managed services such as Amazon DynamoDB and Amazon S3 are examples of services used for long-term data storage. In such cases, there is a cost associated with the volume of data your applications store every month, often measured in gibibytes (GiB). Remember, this is still pay-per-use based on your data volume, and you are not charged for an entire disk drive or storage array.

Figure 1-8 shows a simple serverless application where a Lambda function operates on the data stored in a DynamoDB table. While you pay for the Lambda function based on the number of invocations and on how long each invocation runs at the configured memory size, for DynamoDB, in addition to the pay-per-use cost involved with its API invocations for reading and writing data, you also pay for the space consumed for storing the data. In Chapter 9, you will see all the cost elements related to AWS Lambda and DynamoDB in detail.

Figure 1-8. A simple serverless application, illustrating pay-per-use and data storage cost elements
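To make the two kinds of cost elements concrete, here is a minimal sketch of the arithmetic. The rates in `Rates` are illustrative placeholders and not actual AWS prices, and the names `function_cost` and `table_cost` are hypothetical helpers invented for this example. The sketch assumes compute is charged per invocation plus duration scaled by configured memory, and that the table is charged per request plus stored volume.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Illustrative placeholder rates for this sketch, not actual AWS prices."""
    per_million_invocations: float = 0.20     # assumed charge per 1M function invocations
    per_gb_second: float = 0.0000167          # assumed charge per GB-second of compute
    per_million_table_requests: float = 1.0   # assumed charge per 1M table reads/writes
    per_gib_month_stored: float = 0.25        # assumed charge per GiB stored for a month


def function_cost(invocations: int, avg_duration_ms: float, memory_mb: int, rates: Rates) -> float:
    """Per-invocation charge plus duration scaled by the configured memory size."""
    gb_seconds = invocations * (avg_duration_ms / 1000) * (memory_mb / 1024)
    return invocations / 1_000_000 * rates.per_million_invocations + gb_seconds * rates.per_gb_second


def table_cost(requests: int, stored_gib: float, rates: Rates) -> float:
    """Per-request charge plus a charge for the volume of data stored."""
    return requests / 1_000_000 * rates.per_million_table_requests + stored_gib * rates.per_gib_month_stored


rates = Rates()
# An idle month: no invocations and no table requests, but 10 GiB is still stored.
idle_month = function_cost(0, 120, 512, rates) + table_cost(0, 10, rates)
# A busy month: same stored volume, plus function and table traffic.
busy_month = function_cost(2_000_000, 120, 512, rates) + table_cost(4_000_000, 10, rates)
print(f"idle: {idle_month:.2f}, busy: {busy_month:.2f}")
```

In the idle month the function costs nothing, while the stored data still incurs a charge. That is the distinction between ephemeral compute and long-term storage described above.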

Autoscaling and Scale to Zero

One of the primary characteristics of a fully managed service is the ability to scale up and down based on demand, without manual intervention. The term scale to zero is unique to serverless. Take, for example, a Lambda function. AWS Lambda manages the infrastructure provisioning to run the function. When the function ends and is no longer in use, after a certain period of inactivity the service reclaims the resources used to run it, scaling the number of execution environments back to zero.

Conversely, when there is a high volume of requests for a Lambda function, AWS automatically scales up by provisioning the infrastructure to run as many concurrent instances of the execution environment as needed to meet the demand. This is often referred to as infinite scaling, though the total capacity is actually dependent on your account’s concurrency limit.

Tip

With AWS Lambda, you can opt to keep a certain number of function containers “warm” in a ready state by setting a function’s provisioned concurrency value.

Both scaling behaviors make serverless ideal for many types of applications.
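The scaling behavior can be pictured with a toy model. The following sketch is not how the Lambda service is implemented; it only captures the three ideas from this section under simplifying assumptions: environments follow demand, provisioned concurrency keeps a floor of warm environments, and the account concurrency limit caps the total. The function name `scale` and all the numbers are hypothetical.

```python
from typing import Tuple


def scale(demand: int, concurrency_limit: int, provisioned: int = 0) -> Tuple[int, int]:
    """Return (environments running, requests throttled) at one instant.

    Assumes provisioned <= concurrency_limit and one request per environment.
    """
    running = min(max(demand, provisioned), concurrency_limit)
    throttled = max(demand - concurrency_limit, 0)
    return running, throttled


print(scale(0, 1000))                 # no demand: scaled to zero
print(scale(250, 1000))               # demand below the limit: one environment per request
print(scale(1500, 1000))              # demand above the limit: capped, the excess is throttled
print(scale(0, 1000, provisioned=5))  # no demand, but five environments are kept warm
```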

High Availability

A highly available (HA) application avoids a single point of failure by adding redundancy. For a commercial application, the service level agreement (SLA) states the availability in terms of a percentage. In serverless, as we employ fully managed services, AWS takes care of the redundancy and data replication by distributing the compute and storage resources across multiple AZs, thus avoiding a single point of failure. Hence, adopting serverless provides high availability out of the box as standard.
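To see what an availability percentage means in practice, and why redundancy helps, consider this small worked calculation. It is a simplification: it assumes failures of the redundant copies are independent, which real systems only approximate, and the figures are examples rather than any provider’s published SLA.

```python
HOURS_PER_YEAR = 365 * 24


def allowed_downtime_hours(availability_percent: float) -> float:
    """Hours per year a service may be unavailable while still meeting the percentage."""
    return (1 - availability_percent / 100) * HOURS_PER_YEAR


def combined_availability(single: float, copies: int) -> float:
    """Availability of `copies` redundant units, assuming independent failures.

    The system is down only when every copy is down at the same time.
    """
    return 1 - (1 - single) ** copies


print(f"{allowed_downtime_hours(99.9):.2f} hours per year at 99.9%")
print(f"{combined_availability(0.99, 3):.6f} with three copies at 99% each")
```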

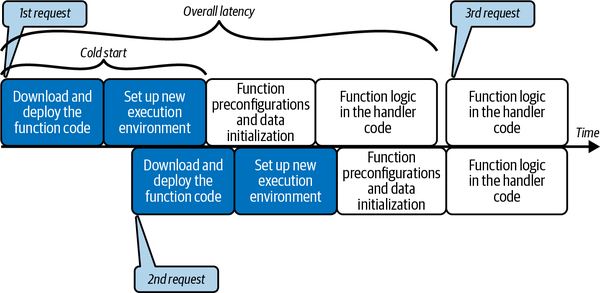

Cold Start

Cold start is commonly associated with FaaS. For example, as you saw earlier, when an AWS Lambda function is idle for a period of time its execution environment is shut down. If the function gets invoked again after being idle for a while, AWS provisions a new execution environment to run it. The latency in this initial setup is usually called the cold start time. Once the function execution is completed, the Lambda service retains the execution environment for a nondeterministic period. If the function is invoked again during this period, it does not incur the cold start latency. However, if there are additional simultaneous invocations, the Lambda service provisions a new execution environment for each concurrent invocation, resulting in a cold start.

Many factors contribute to this initial latency: the size of the function’s deployment package, the runtime environment of the programming language, the memory (RAM) allocated to the function, the number of preconfigurations (such as static data initializations), etc. As an engineer working in serverless, it is essential to understand cold start as it influences your architectural, developmental, and operational decisions (see Figure 1-9).

Figure 1-9. Function invocation latency for cold starts and warm executions—requests 1 and 2 incur a cold start, whereas a warm container handles request 3
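The pattern in Figure 1-9 can be reproduced with a small simulation. This is a sketch under stated assumptions, not a model of the real service: the class names are hypothetical, each environment handles one invocation at a time, and the idle timeout is a fixed number here even though the actual retention period is nondeterministic.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Environment:
    busy_until: float  # time at which the current invocation finishes


@dataclass
class FunctionPool:
    idle_timeout: float  # assumed fixed for this sketch
    environments: List[Environment] = field(default_factory=list)

    def invoke(self, start: float, duration: float) -> str:
        # Reclaim environments that have sat idle for longer than the timeout.
        self.environments = [
            env for env in self.environments
            if start - env.busy_until <= self.idle_timeout
        ]
        # Reuse an idle environment if one exists: a warm execution.
        for env in self.environments:
            if env.busy_until <= start:
                env.busy_until = start + duration
                return "warm"
        # Otherwise provision a new environment: a cold start.
        self.environments.append(Environment(busy_until=start + duration))
        return "cold"


pool = FunctionPool(idle_timeout=300.0)
results = [
    pool.invoke(start=0, duration=2),     # request 1: nothing exists yet
    pool.invoke(start=1, duration=2),     # request 2: arrives while request 1 is running
    pool.invoke(start=5, duration=2),     # request 3: an idle environment is available
    pool.invoke(start=1000, duration=2),  # request 4: all environments were reclaimed
]
print(results)  # ['cold', 'cold', 'warm', 'cold']
```

Requests 1 and 2 overlap, so each needs its own environment and both pay the cold start penalty, while request 3 reuses an environment that has finished its work.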

The Unique Benefits of Serverless

Along with the core characteristics of serverless that you saw in the previous section, understanding some of its unique benefits is essential to optimize the development process and enable teams to improve their velocity in delivering business outcomes. In this section, we’ll take a look at some of the key features serverless supports.

Individuality and Granularity of Resources

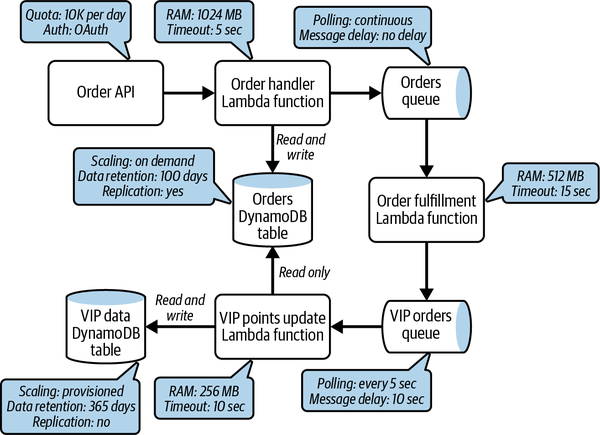

“One size fits all” does not apply to serverless. The ability to configure and operate serverless services at an individual level allows you to look at your serverless application not just as a whole but at the level of each resource, working with its specific characteristics. Unlike with traditional container applications, you no longer need to set a common set of operational characteristics at the container level.

Say your application has several Lambda functions. Some functions handle user requests from your website, while others perform batch jobs on the backend. You may provide more memory for the user-facing functions to enable them to respond quickly, and opt for a longer timeout for the backend batch job functions where performance is not critical. With Amazon Simple Queue Service (SQS) queues, for example, you configure how quickly you want to process the messages as they come into the queue, or you can decide whether to delay when a message becomes available for processing.

Note

Amazon SQS is a fully managed and highly scalable message queuing service used to send, receive, and store messages. It is one of AWS’s core services and helps build loosely coupled microservices. Each SQS queue has several attributes; you can adjust these values to configure the queue as needed.
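The following sketch shows what configuring resources individually might look like when written down as data. Every name and value is hypothetical and chosen only to show the contrast between resources; the two dataclasses are not part of any AWS SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FunctionSettings:
    memory_mb: int        # more memory for functions that must respond quickly
    timeout_seconds: int  # upper bound on how long one invocation may run


@dataclass(frozen=True)
class QueueSettings:
    delay_seconds: int      # how long a new message waits before it can be processed
    retention_seconds: int  # how long an unprocessed message is kept


# Hypothetical resources: each one carries its own settings.
functions = {
    "get-order-status": FunctionSettings(memory_mb=1024, timeout_seconds=5),
    "nightly-report": FunctionSettings(memory_mb=256, timeout_seconds=600),
}
queues = {
    "new-orders": QueueSettings(delay_seconds=0, retention_seconds=4 * 24 * 3600),
    "reminder-emails": QueueSettings(delay_seconds=300, retention_seconds=24 * 3600),
}

for name, settings in {**functions, **queues}.items():
    print(name, settings)
```

The user-facing function gets more memory and a short timeout, while the batch function gets less memory and a long timeout. Nothing forces the two to share a common configuration.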

When you build an application that uses several resources—Lambda functions, SQS queues, DynamoDB tables, S3 buckets, etc.—you have the flexibility to adjust each resource’s behavior as dictated by the business and operational requirements. This is depicted in Figure 1-10, which shows part of an order processing microservice.

The ability to operate at a granular level brings many benefits, as you will see throughout this book. Understanding the individuality of resources and developing a granular mindset while building serverless applications helps you design secure, cost-effective, and sustainable solutions that are easy to operate.

Figure 1-10. A section of an order processing microservice, illustrating how each serverless resource has different configurations

Ability to Optimize Services for Cost, Performance, and Sustainability

Cost and performance optimization techniques are common in software development. When you optimize to reduce cost, you target reducing the processing power, memory consumption, and storage allocation. With a traditional application, you exercise these changes at a high level, impacting all parts of the application. You cannot select a particular function or database table to apply the changes to. In such situations, if not adequately balanced, the changes you make could lead to performance degradations or higher costs elsewhere. Serverless offers a better optimization model.

Serverless enables deeper optimization

A serverless application is composed of several managed services, and it may contain multiple resources from the same managed service. For example, in Figure 1-10, you saw three Lambda functions from the AWS Lambda service. You are not forced to optimize all the functions in the same way. Instead, depending on your requirements, you can optimize one function for performance and another for cost, or give one function a longer timeout than the others. You can also allow for different concurrent execution needs.

Based on their characteristics, you can apply the same principle to other resources, such as queues, tables, APIs, etc. Imagine you have several microservices, each containing several serverless resources. For each microservice, you can optimize each individual resource at a granular level. This is optimization at its deepest and finest level in action!

Storage optimization

Modern cloud applications ingest huge volumes of data—operational data, metrics, logs, etc. Teams that own the data might want to optimize their storage (to minimize cost and, in some cases, improve performance) by isolating and keeping only business-critical data.

Managed data services provide built-in features to remove or transition unneeded data. For example, Amazon S3 supports per-bucket data retention policies to either delete data or transition it to a different storage class, and DynamoDB allows you to configure the Time to Live (TTL) value on every item in a table. The storage optimization options are not confined to the mainstream data stores; you can specify the message retention period for each SQS queue, Kinesis stream, API cache, etc.

Warning

DynamoDB manages the TTL configuration of the table items efficiently, regardless of how many items are in a table and how many of those items have a TTL timestamp set. However, in some cases, it can take up to 48 hours for an item to be deleted from the table. Consequently, this may not be an ideal solution if you require guaranteed item removal at the exact TTL time.
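A DynamoDB TTL value is a number holding a Unix epoch timestamp in seconds. The sketch below shows one way to compute it and, because deletion can lag behind the timestamp, to filter out expired items when reading. The attribute name `expires_at` and both helper functions are hypothetical choices for this example, and the items are plain dictionaries rather than calls to a real table.

```python
import time
from typing import Dict, List, Optional

TTL_ATTRIBUTE = "expires_at"  # hypothetical attribute name chosen for this sketch
SECONDS_PER_DAY = 24 * 60 * 60


def with_ttl(item: Dict, days_to_keep: int, now: Optional[int] = None) -> Dict:
    """Return a copy of the item with a TTL timestamp in epoch seconds."""
    now = int(time.time()) if now is None else now
    return {**item, TTL_ATTRIBUTE: now + days_to_keep * SECONDS_PER_DAY}


def unexpired(items: List[Dict], now: Optional[int] = None) -> List[Dict]:
    """Drop items whose TTL has passed but which may not have been deleted yet."""
    now = int(time.time()) if now is None else now
    return [
        item for item in items
        if TTL_ATTRIBUTE not in item or item[TTL_ATTRIBUTE] > now
    ]


order = with_ttl({"PK": "STATUS", "SK": "100-255-8730"}, days_to_keep=30)
print(order[TTL_ATTRIBUTE])
print(len(unexpired([order])))
```

Filtering on read is one option when you need expired items to disappear at the exact TTL time rather than whenever the background deletion happens.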

Support for Deeper Security and Data Privacy Measures

You now understand how the individuality and granularity of services in serverless enable you to fine-tune every part of an application for varying demands. The same characteristics allow you to apply protective measures at a deeper level as necessary across the ecosystem.

Permissions at a function level

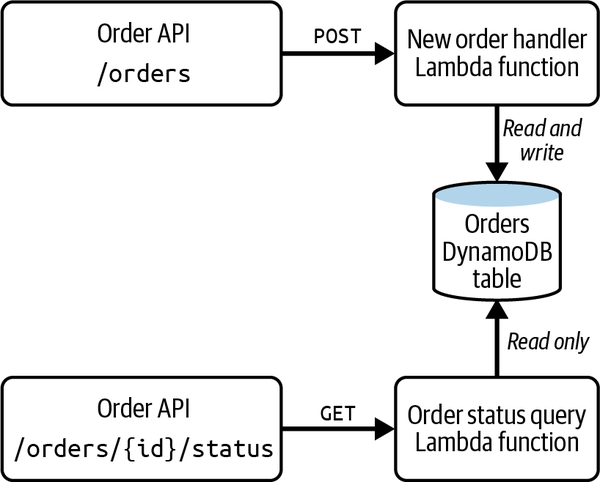

Figure 1-11 shows a simple serverless application that allows you to store orders and query the status of a given order via the POST /orders and GET /orders/{id}/status endpoints, respectively, which are handled by the corresponding Lambda functions. The function that queries the Orders table to find the status performs a read operation. Since this function does not change the data in the table, it requires just the dynamodb:Query privilege. This idea of providing the minimum permissions required to complete a task is known as the principle of least privilege.

Note

The principle of least privilege is a security best practice that grants only the permissions required to perform a task. As shown in Example 1-1, you define this as an IAM policy by limiting the permitted actions on specific resources. It is one of the most fundamental security principles in AWS and should be part of the security thinking of every engineer. You will learn more about this topic in Chapter 4.

Figure 1-11. Serverless application showing two functions with different access privileges to the same data table
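As an illustration of least privilege, a policy for the status-query function could grant a single read action on a single table. The sketch below builds such a policy document as a Python dictionary. It is an assumption about what a policy of this kind might contain, not a reproduction of any example in this book; the helper name, the `Sid`, and the Region and account ID in the ARN are placeholders made up for illustration.

```python
import json
from typing import Dict


def query_only_policy(table_arn: str) -> Dict:
    """Build a policy document that allows only dynamodb:Query on one table."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowOrderStatusQuery",  # hypothetical statement ID
                "Effect": "Allow",
                "Action": ["dynamodb:Query"],    # read only: no write actions granted
                "Resource": [table_arn],
            }
        ],
    }


# Placeholder ARN: the Region and account ID are invented for this sketch.
policy = query_only_policy("arn:aws:dynamodb:eu-west-1:123456789012:table/Orders")
print(json.dumps(policy, indent=2))
```

The function that stores orders would receive a different policy with the write action it needs, so neither function holds permissions it does not use.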

Granular permissions at the record level

The IAM policy in Example 1-1 showed how you configure access to read (query) data from the Orders table. Table 1-1 contains sample data of a few orders, where an order is split into three parts for better access and privacy: SOURCE, STATUS, and ADJUSTED.

| PK | SK | Status | Tracking | Total | Items |
|---|---|---|---|---|---|
| SOURCE | 100-255-8730 | Processing | 44tesYuwLo | 299.99 | { … } |
| STATUS | 100-255-8730 | Processing | 44tesYuwLo | | |
| SOURCE | 100-735-6729 | Delivered | G6txNMo26d | 185.79 | { … } |
| ADJUSTED | 100-735-6729 | Delivered | G6txNMo26d | 175.98 | { … } |
| STATUS | 100-735-6729 | Delivered | G6txNMo26d | | |

Per the principle of least privilege, the Lambda function that queries the status of an order should only be allowed to access that order’s STATUS record. Table 1-2 highlights the records that should be accessible to the function.

| PK | SK | Status | Tracking | Total | Items |
|---|---|---|---|---|---|
| SOURCE | 100-255-8730 | Processing | 44tesYuwLo | 299.99 | { … } |
| **STATUS** | **100-255-8730** | **Processing** | **44tesYuwLo** | | |
| SOURCE | 100-735-6729 | Delivered | G6txNMo26d | 185.79 | { … } |
| ADJUSTED | 100-735-6729 | Delivered | G6txNMo26d | 175.98 | { … } |
| **STATUS** | **100-735-6729** | **Delivered** | **G6txNMo26d** | | |

To achieve this, you can use an IAM policy with a dynamodb:LeadingKeys condition and the policy details listed in Example 1-2.

Example 1-2. Policy to restrict read access to a specific type of item

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AllowOrderStatus",
          "Effect": "Allow",
          "Action": [
            "dynamodb:GetItem",
            "dynamodb:Query"
          ],
          "Resource": [
            "arn:aws:dynamodb:…:table/Orders"
          ],
          "Condition": {
            "ForAllValues:StringEquals": {
              "dynamodb:LeadingKeys": [
                "STATUS"
              ]
            }
          }
        }
      ]
    }

The conditional policy shown here works at a record level. DynamoDB also supports attribute-level conditions to fetch the values from only the permitted attributes of a record, for applications that require even more granular access control.
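To build intuition for the record-level condition, the following sketch mimics its effect in plain Python. It is a deliberately simplified stand-in, not how IAM evaluates policies: it only checks that every partition key value a request touches is in the permitted set. The function name and the sample requests are hypothetical.

```python
from typing import Iterable, Set

# Partition key values the status-query function is permitted to touch.
ALLOWED_LEADING_KEYS: Set[str] = {"STATUS"}


def leading_keys_allowed(requested_keys: Iterable[str],
                         allowed: Set[str] = ALLOWED_LEADING_KEYS) -> bool:
    """Simplified stand-in for ForAllValues:StringEquals on dynamodb:LeadingKeys."""
    return all(key in allowed for key in requested_keys)


print(leading_keys_allowed(["STATUS"]))              # True: only STATUS records requested
print(leading_keys_allowed(["SOURCE"]))              # False: SOURCE records are off limits
print(leading_keys_allowed(["STATUS", "ADJUSTED"]))  # False: one disallowed key is enough
```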

Policies like this one are common in AWS and applicable to several common services you will use to build your applications. Awareness and understanding of where and when to use them will immensely benefit you as a serverless engineer.

Incremental and Iterative Development

Iterative development empowers teams to develop and deliver products in small increments but in quick succession. As Eric Ries says in his book The Startup Way (Penguin), you start simple and scale fast. Your product constantly evolves with new features that benefit your customers and add business value.

Event-driven architecture (EDA), which we’ll explore in detail in Chapter 3, is at the heart of serverless development. In serverless, you compose your applications with loosely coupled services that interact via events, messages, and APIs. EDA principles enable you to build modular and extensible serverless applications. When you avoid hard dependencies between your services, it becomes easier to extend your applications by adding new services that do not disrupt the functioning of the existing ones.
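The extensibility that loose coupling brings can be sketched with a tiny in-memory event bus. This is an illustration of the principle only and not a model of any AWS service; the `EventBus` class, the `OrderPlaced` event type, and the handlers are all hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, Dict, List

Handler = Callable[[Dict], None]


class EventBus:
    """Minimal publish/subscribe bus: publishers never reference subscribers directly."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, detail: Dict) -> int:
        """Deliver the event to every subscriber and return how many received it."""
        handlers = self._subscribers.get(event_type, [])
        for handler in handlers:
            handler(detail)
        return len(handlers)


bus = EventBus()
audit: List[str] = []

# The first iteration ships with a single consumer of order events.
bus.subscribe("OrderPlaced", lambda d: audit.append(f"payment:{d['order_id']}"))
first = bus.publish("OrderPlaced", {"order_id": "100-255-8730"})

# A later iteration adds a second consumer without touching the publisher or the first one.
bus.subscribe("OrderPlaced", lambda d: audit.append(f"email:{d['order_id']}"))
second = bus.publish("OrderPlaced", {"order_id": "100-255-8730"})

print(first, second, audit)
```

The publisher’s code is identical in both iterations, which is what makes incremental delivery of new capabilities low risk.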

Multiskilled, Diverse Engineering Teams

Adopting new technology brings changes as well as challenges in an organization. When teams move to a new language, database, SaaS platform, browser technology, or cloud provider, changes in that area often require changes in others. For example, adopting a new programming language may call for modifications to the development, build, and deployment processes. Similarly, moving your applications to the cloud can create demand for many new processes and skills.

Influence of DevOps culture

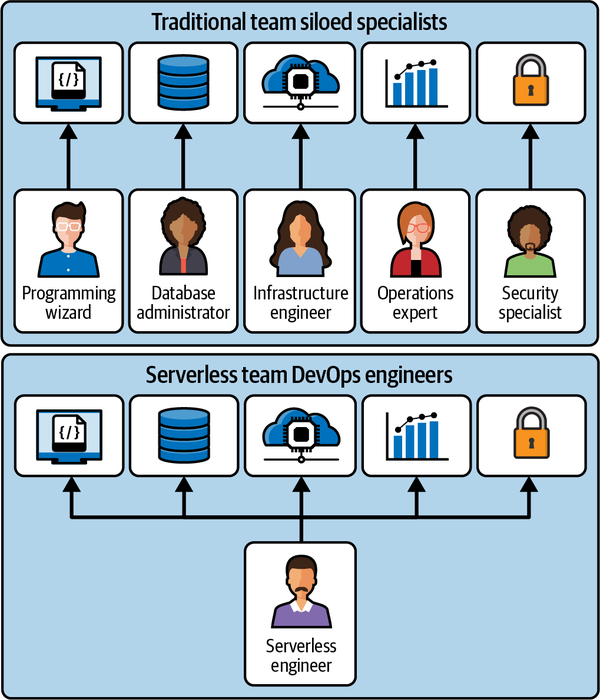

The DevOps approach removes the barriers between development and operations, making it faster to develop new products and easier to maintain them. Adopting a DevOps model draws software engineers who would otherwise focus solely on developing applications into operational tasks as well. You no longer work in a siloed software development cycle but are involved in its many phases, such as continuous integration and delivery (CI/CD), monitoring and observability, commissioning the cloud infrastructure, and securing applications, among other things.

Adopting a serverless model takes you many steps further. Though it frees you from managing servers, you are now programming the business logic, composing your application using managed services, knitting them together with infrastructure as code (IaC), and operating them in the cloud. Just knowing how to write software is not enough. You have to protect your application from malicious users, make it available 24/7 to customers worldwide, and observe its operational characteristics to improve it continually. Becoming a successful serverless engineer thus requires developing a whole new set of skills, and cultivating a DevOps mindset (see Figure 1-12).

Figure 1-12. Traditional siloed specialist engineers versus multiskilled serverless engineers

Your evolution as a serverless engineer

Consider the simple serverless application shown in Figure 1-8, where a Lambda function reads and writes to a DynamoDB table. Imagine that you are proficient in TypeScript and have chosen Node.js as your Lambda runtime environment. As you implement the function, it becomes your responsibility to code the interactions with DynamoDB. To be efficient, you learn NoSQL concepts and identify the partition key (PK) and sort key (SK) attributes as well as the data access patterns you need to write your queries. In addition, there may be data replication, TTL, caching, and other requirements. Security is also a concern, so you then learn about AWS IAM, how to create roles and policies, and, most importantly, the principle of least privilege. From being a programmer proficient in a particular language, your engineering role changes direction and broadens considerably. As you evolve into a serverless engineer, you pick up many new skills and responsibilities.
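As a rough sketch of two of these new responsibilities, the snippet below builds a composite PK/SK key and a least-privilege IAM policy document for a single table. The key format (`CUSTOMER#...`, `ORDER#...`), the table name `orders`, the Region, and the placeholder account ID are assumptions made for illustration; the actions you actually grant depend on what your function needs.

```typescript
// Hypothetical key design: all orders for a customer share one partition key
// and are distinguished (and sorted) by the sort key.
type OrderKey = { PK: string; SK: string };

function orderKey(customerId: string, orderId: string): OrderKey {
  return { PK: `CUSTOMER#${customerId}`, SK: `ORDER#${orderId}` };
}

// Shape of an IAM policy document, reduced to the fields used here.
type PolicyStatement = { Effect: "Allow" | "Deny"; Action: string[]; Resource: string[] };
type PolicyDocument = { Version: "2012-10-17"; Statement: PolicyStatement[] };

// Least privilege: grant only the read and write actions the function uses,
// and only on the one table it touches (no wildcards).
function leastPrivilegeTablePolicy(tableArn: string): PolicyDocument {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Action: ["dynamodb:GetItem", "dynamodb:PutItem", "dynamodb:Query"],
        Resource: [tableArn],
      },
    ],
  };
}

// Example values only: the account ID and table name are placeholders.
const key = orderKey("42", "2024-0001");
const policy = leastPrivilegeTablePolicy(
  "arn:aws:dynamodb:eu-west-1:123456789012:table/orders",
);
```

Listing explicit actions and a single table ARN keeps the function's permissions aligned with the access patterns it actually implements.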

As you saw in the previous section, your job requires the ability to build the deployment pipeline for your application, understand service metrics, and proactively act on production incidents. You’re now a multiskilled engineer, and when most engineers in a team are multiskilled, it becomes a diverse engineering team capable of efficient end-to-end serverless delivery. For organizations where upskilling of engineers is required, Chapter 2 discusses in detail the ways to grow serverless talent.

The Parts of a Serverless Application and Its Ecosystem

An ecosystem is a geographic area where plants, animals, and other organisms, as well as weather and landscape, work together to form a bubble of life.

In nature, an ecosystem contains both living and nonliving parts, also known as factors. Every factor in an ecosystem depends on every other factor, either directly or indirectly. The Earth’s surface is a series of connected ecosystems.

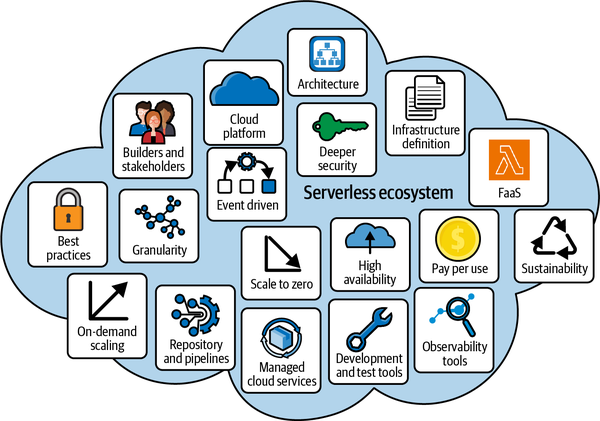

The ecosystem analogy here is intentional. Serverless is too often imagined as an architecture diagram or a blueprint, but it is much more than FaaS and a simple framework. It has both technical and nontechnical elements associated with it. Serverless is a technology ecosystem!

As you learned earlier in this chapter, managed services form the bulk of a serverless application. However, they alone cannot bring an application alive—many other factors are involved. Figure 1-13 depicts some of the core elements that make up the serverless ecosystem.

Figure 1-13. Parts of the serverless technology ecosystem

They include:

- The cloud platform

This is the enabler of the serverless ecosystem—AWS in our case. The cloud hosting environment provides the required compute, storage, and network resources.

- Managed cloud services

Managed services are the basic building blocks of serverless. You compose your applications by consuming services for computation, event transportation, messaging, data storage, and various other activities.

- Architecture

This is the blueprint that depicts the purpose and behavior of your serverless application. Defining and agreeing on an architecture is one of the most important activities in serverless development.

- Infrastructure definition

Infrastructure definition—also known as infrastructure as code (IaC) and expressed as a descriptive script—is like the circuit diagram of your application. It weaves everything together with the appropriate characteristics, dependencies, permissions, and access controls. IaC, when actioned on the cloud, holds the power to bring your serverless application alive or tear it down.

- Development and test tools

The runtime environment of your FaaS dictates the programming language, libraries, plug-ins, testing frameworks, and several other developer aids. These tools may vary from one ecosystem to another, depending on the product domain and the preferences of the engineering teams.

- Repository and pipelines

The repository is a versioned store for all your artifacts, and the pipelines perform the actions that take your serverless application from a developer environment all the way to its target customers, passing through various checkpoints along the way. The infrastructure definition plays a pivotal role in this process.

- Observability tools

Observability tools and techniques act as a mirror to reflect the operational state of your application, offering deeper insights into how it performs against its intended purpose. A non-observable system cannot be sustained.

- Best practices

To safeguard your serverless application against security threats and scaling demands and ensure it is both observable and resilient in the face of unexpected disruptions, you need well-architected principles and best practices acting as guardrails. The AWS Well-Architected Framework is an essential best practices guide; we’ll look at it later in this chapter.

- Builders and stakeholders

The people who come up with the requirements for an application and the ones who design, build, and operate it in the cloud are also part of the ecosystem. In addition to all the tools and techniques, the role of humans in a serverless ecosystem is vital—they’re the ones responsible for making the right decisions and performing the necessary actions, similar to the role we all play in our environmental ecosystem!
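To illustrate the "circuit diagram" idea behind the infrastructure definition, here is a minimal sketch of a declarative template expressed as a TypeScript object and serialized to JSON. It follows the general shape of an AWS CloudFormation template, but it is an illustration under assumptions rather than a complete deployable definition: the logical names `OrdersTable` and `OrdersTableArn` and the PK/SK attribute names are hypothetical.

```typescript
// A reduced type for a declarative template: named resources plus outputs.
type Template = {
  AWSTemplateFormatVersion: "2010-09-09";
  Resources: Record<string, { Type: string; Properties: Record<string, unknown> }>;
  Outputs: Record<string, { Value: unknown }>;
};

// Illustrative only: the logical names and key attributes are hypothetical.
const template: Template = {
  AWSTemplateFormatVersion: "2010-09-09",
  Resources: {
    OrdersTable: {
      Type: "AWS::DynamoDB::Table",
      Properties: {
        // On-demand capacity, so no throughput settings are declared.
        BillingMode: "PAY_PER_REQUEST",
        AttributeDefinitions: [
          { AttributeName: "PK", AttributeType: "S" },
          { AttributeName: "SK", AttributeType: "S" },
        ],
        KeySchema: [
          { AttributeName: "PK", KeyType: "HASH" },
          { AttributeName: "SK", KeyType: "RANGE" },
        ],
      },
    },
  },
  Outputs: {
    // A reference from one part of the template to another:
    // this is how dependencies are declared rather than coded.
    OrdersTableArn: { Value: { "Fn::GetAtt": ["OrdersTable", "Arn"] } },
  },
};

// The serialized form is what an IaC tool would consume.
const templateJson: string = JSON.stringify(template, null, 2);
```

The template describes what should exist, not the steps to create it. That is why the same definition can be used both to bring an application alive and to tear it down.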

Why Is AWS a Great Platform for Serverless?

As mentioned earlier in this chapter, although the term serverless first appeared in the industry around 2012, it gained traction after the release of AWS Lambda in 2014. While the large numbers of people who jumped on the Lambda bandwagon elevated serverless to new heights, AWS already had a couple of fully managed serverless services serving customers at this point. Amazon SQS was released almost 10 years before AWS Lambda. Amazon S3, the much-loved and widely used object store in the cloud, was launched in 2006, well before the cloud reached every corner of the IT industry.

This early leap into the cloud with a futuristic vision, offering container services and fully managed serverless services, enabled Amazon to roll out new products faster than any other provider. Recognizing the potential, many early adopters swiftly realized their business ideas and launched their applications on AWS. Even though the cloud market is growing rapidly, AWS remains the top cloud services provider globally.

The Popularity of Serverless Services from AWS

Working closely with customers and monitoring industry trends has allowed AWS to quickly iterate ideas and launch several important serverless services in areas such as APIs, functions, data stores, data streaming, AI, machine learning, event transportation, workflow and orchestration, and more.

AWS is a comprehensive cloud platform offering over 200 services to build and operate both serverless and non-serverless workloads. Table 1-3 lists some of the most commonly used managed serverless services. You will see many of these services featured in our discussions throughout this book.

| Category | Service |
|---|---|
| Analytics | Amazon Kinesis, Amazon Athena, AWS Glue, Amazon QuickSight |
| App building | AWS Amplify |
| API building | Amazon API Gateway, AWS AppSync |
| Application integration | Amazon EventBridge, AWS Step Functions, Amazon Simple Notification Service (SNS), Amazon Simple Queue Service (SQS), Amazon API Gateway, AWS AppSync |
| Compute | AWS Lambda |
| Content delivery | Amazon CloudFront |
| Database | Amazon DynamoDB, Amazon Aurora Serverless |
| Developer tools | AWS CloudFormation, AWS Cloud Development Kit (CDK), AWS Serverless Application Model (SAM), AWS CodeBuild, AWS CodeCommit, AWS CodeDeploy, AWS CodePipeline |
| Emails | Amazon Simple Email Service (SES) |
| Event-driven architecture | Amazon EventBridge, Amazon Simple Notification Service (SNS), Amazon Simple Queue Service (SQS), AWS Lambda |
| Governance | AWS Well-Architected Tool, AWS Trusted Advisor, AWS Systems Manager, AWS Organizations |
| High volume event streaming | Amazon Kinesis Data Streams, Amazon Kinesis Data Firehose |
| Identity, authentication, and security | AWS Identity and Access Management (IAM), Amazon Cognito, AWS Secrets Manager, AWS WAF, Amazon Macie |
| Machine learning | Amazon SageMaker, Amazon Translate, Amazon Comprehend, Amazon DevOps Guru |
| Networking | Amazon Route 53 |
| Object store | Amazon Simple Storage Service (S3) |
| Observability | Amazon CloudWatch, AWS X-Ray, AWS CloudTrail |
| Orchestration | AWS Step Functions |
| Security | AWS Identity and Access Management (IAM), Amazon Cognito, AWS Secrets Manager, AWS Systems Manager Parameter Store |

The AWS Well-Architected Framework

The AWS Well-Architected Framework is a collection of architectural best practices for designing, building, and operating secure, scalable, highly available, resilient, and cost-effective applications in the cloud. It consists of six pillars covering fundamental areas of a modern cloud system:

- Operational Excellence

The Operational Excellence pillar provides design principles and best practices to devise organizational objectives to identify, prepare, operate, observe, and improve operating workloads in the cloud. Failure anticipation and mitigation plans, evolving applications in small but frequent increments, and continuous evaluation and improvements of the operational procedures are some of the core principles of this pillar.

- Security

The Security pillar focuses on identity and access management, protecting applications at all layers, ensuring data privacy and control as well as traceability and auditing of all actions, and preparing for and responding to security events. It instills security thinking at all stages of development and is the responsibility of everyone involved.

- Reliability

An application deployed and operated in the cloud should be able to scale and function consistently as demand changes. The principles and practices of the Reliability pillar include designing applications to work with service quotas and limits, preventing and mitigating failures, and identifying and recovering from failures, among other guidance.

- Performance Efficiency

The Performance Efficiency pillar is about selecting and using the right technology and resources to build and operate an efficient system. Monitoring and data metrics play an important role here in constantly reviewing and making trade-offs to maintain efficiency at all times.

- Cost Optimization

The Cost Optimization pillar guides organizations to operate business applications in the cloud in a way that delivers value and keeps costs low. The best practices focus on financial management, creating cloud cost awareness, using cost-effective resources and technologies such as serverless, and continuously analyzing and optimizing based on business demand.

- Sustainability

The Sustainability pillar is the latest addition to the AWS Well-Architected Framework. It focuses on contributing to a sustainable environment by reducing energy consumption; architecting and operating applications that reduce the use of compute power, storage space, and network round trips; using on-demand resources such as serverless services; and optimizing to the required level without overprovisioning.

AWS Technical Support Plans

Depending on the scale of your cloud operation and the company’s size, Amazon offers four technical support plans to suit your needs:

- Developer

This is the entry-level support model, suitable for experimentation, building prototypes, or testing simple applications at the start of your serverless journey.

- Business

As you move from the experimentation stage toward production deployments and operating business applications serving customers, this is the recommended support level. As well as other support features, it adds response time guarantees for production systems that are impaired or go down (<4 hours and <1 hour, respectively).

- Enterprise on-ramp

The main difference between this one and the Enterprise plan is the response time guarantee when business-critical applications go down (<30 minutes, versus <15 minutes with the higher-level plan). The lower-level plans do not offer this guarantee.

- Enterprise

If you’re part of a big organization with several teams developing and operating high-profile, mission-critical workloads, the Enterprise support plan will give you the most immediate care. In the event of an incident with your mission-critical applications, you get support within 15 minutes. This plan also comes with several additional benefits, including:

- A dedicated Technical Account Manager (TAM) who acts as the first point of contact between your organization and AWS
- Regular (typically monthly) meeting cadence with your TAM
- Advice from AWS experts, such as solution architects specializing in your business domain, when building an application
- Evaluation of your existing systems and recommendations based on AWS Well-Architected Framework best practices
- Training and workshops to improve your internal AWS skills and development best practices
- News about new product launches and feature releases
- Opportunities to beta-test new products before they become generally available
- Invitations to immersion days and face-to-face meetings with AWS product teams related to the technologies you work with

Note

The number one guiding principle at Amazon is customer obsession: “Leaders start with the customer and work backwards. They work vigorously to earn and keep customer trust. Although leaders pay attention to competitors, they obsess over customers.”

AWS Developer Community Support

The AWS developer community is an incredibly active and supportive technical forum. There are several avenues you can pursue to become part of this community, and doing so is highly recommended, both for your growth as a serverless engineer and to ensure the successful adoption of serverless within your enterprise. They include:

- Engage with AWS Developer Advocates (DAs).

AWS’s DAs connect the developer community with the different product teams at AWS. You can follow serverless developments on social media. You will find the serverless specialist DAs helping the community by answering questions and contributing technical content via blogs, live streams and videos, GitHub code shares, conferences, meetups, etc.

- Reach out to AWS Heroes and Community Builders.

The AWS Heroes program recognizes AWS experts whose contributions make a real impact in the tech community. Outside AWS, these experts share their knowledge and serverless adoption stories from the trenches.

At the time of writing this book, Sheen Brisals has been recognized as an AWS Serverless Hero.

The AWS Community Builders program is a worldwide initiative to bring together AWS enthusiasts and rising experts who are passionate about sharing knowledge and connecting with AWS product teams and DAs, Heroes, and industry experts. Identifying the builders who contribute to the serverless space and learning from their experiences will deepen your knowledge.

At the time of writing this book, Luke Hedger has been recognized as an AWS Community Builder.

- Join an AWS Cloud Club.

There’s a thriving student-led community, with AWS Cloud Clubs in multiple regions worldwide. Students can join their local club to network, learn about AWS, and build their careers. Cloud Club Captains organize events to learn together, connect with other clubs, and earn AWS credits and certification vouchers, among other opportunities.

- Sign up with AWS Activate or AWS Educate.

AWS has popular programs to help bring your ideas to realization. AWS Activate is for anyone with great ideas and ambitions or start-ups less than 10 years old. AWS offers the necessary tools, resources, credits, and advice to boost success at every stage of the journey.

AWS Educate is a learning resource for students and professionals. It offers AWS credits that can be used for courses and projects, access to free training, and networking opportunities.

- Attend AWS conferences.

An essential part of an enterprise’s serverless adoption journey is continuously evaluating its development processes, domains, team organization, engineering culture, best practices, etc. Conferences and collaborative events are the perfect platforms to find solutions for your concerns, validate your ideas, and identify corrective actions before it is too late.

The type and scale of conferences vary. For example, AWS re:Invent is an annual week-long conference that brings tens of thousands of tech and business enthusiasts together with hundreds of sessions spread over five days, whereas AWS Summits and AWS Community Summits are local events—mostly one-day—conducted around the world to bring the community and technology experts closer. Most of the content gets shared online as well. AWS Events and Webinars is a good place to identify events that might suit your purposes.

Summary

As you start your serverless journey, either as an independent learner or as part of an enterprise team, it is valuable to have an understanding of how the cloud evolved and how it facilitated the development of serverless technology. This chapter provided that foundation, and outlined the significant potential of serverless and the benefits it can provide to an organization.

Every technology requires a solid platform to thrive and a passionate community to support it. While by no means the only option out there, Amazon Web Services (AWS) is a fantastic platform with numerous service offerings and dedicated developer support programs. As highlighted in this chapter, to be successful in your serverless adoption, it is paramount that you share your experiences and learn from proven industry experts.

In the next chapter, we will look at serverless adoption in an enterprise. We will examine some of the fundamental things an organization needs to evaluate and do to onboard serverless and make it a successful venture for its engineers, users, and business.

Interview with an Industry Expert

Danilo Poccia, Chief Evangelist (EMEA), Amazon Web Services

Danilo works with start-ups and companies of all sizes to support their innovation. In his role as Chief Evangelist (EMEA) at Amazon Web Services, he leverages his experience to help people bring their ideas to life, focusing on serverless architectures and event-driven programming and the technical and business impact of machine learning and edge computing. He is the author of AWS Lambda in Action: Event-Driven Serverless Applications (Manning) and speaks at conferences worldwide.

Q: Why does the software industry need serverless technology?

When you build an application, sooner or later it can become complex to deploy, test, or add a new feature without breaking the existing functionality. In the last 20 years, complexity has become a science that has found similarities across many different fields, such as mathematics, physics, and social sciences. Complexity theory finds that when there are strong dependencies between components, even simple components, you might experience the emergence of “complex” and difficult-to-predict behaviors. For example, the behavior of an ant is simple, but together, ants can discover food hidden in remote locations and bring it back to their nest. The emergence of unexpected behaviors applies very well to software development.

When you have a large codebase, it requires a lot of effort to reduce side effects so that you don’t break something else when you add a new feature. In my experience, monolithic applications with a large codebase only work when there is a small core team of developers that have worked for years on the same application and have accumulated a large amount of experience in the business domain. Microservices help, but you still need business domain knowledge to find where to split the application. That’s what domain-driven design (DDD) calls the “bounded context.” And that’s why microservices are more successful if they are implemented as a migration from an existing application than when you’re starting from scratch. If the boundaries are well chosen and defined, you limit the internal dependencies to reduce the overall code complexity and the emergence of unexpected issues.

But still, each microservice brings its nonfunctional requirements in terms of security, reliability, scalability, observability, performance, and so on. Serverless architecture helps implement these nonfunctional requirements in a much easier way. It can reduce the overall code size by using services to implement functionalities that are not unique to your implementation. It lets you focus on the features you want to build rather than on managing the infrastructure required to run the application. In this way, moving from idea to production is much faster, and the advantage of serverless technologies is not only for the technical teams but also for the business teams that are able to deliver more and faster to their customers.

Q: As the author of the first book on AWS Lambda, what has changed in serverless since your early experience?

When I wrote the book in 2016, there was very little tooling around AWS Lambda. In some examples of my book, I call Lambda functions directly from the browser using the AWS SDK for JavaScript. In a way, I don’t dislike that. It makes you focus more on the business functions you need to implement. Also, even if many customers were interested in AWS Lambda at the time, there were very few examples of serverless workloads in production.

The idea for the book started from a workshop I created where I wanted to show how to make building an application easier. For example, I start with data. Is data structured? Put it in a fully managed database like Amazon DynamoDB. Is it unstructured data? Put it in an Amazon S3 bucket. Where is your business logic? Split it into small functions and run them on AWS Lambda. Can those functions run on a client or in a web browser? Put them there, and don’t run that code in the backend. Maybe the result is what we now call “serviceful” architecture.

Today, we have many tools that support building serverless event-driven applications to manage events (like Amazon EventBridge) or to coordinate the execution of your business logic (like AWS Step Functions). With better tooling, customers are now building more advanced applications. It’s not just about serverless functions like in 2016. Today, you need to consider how to use the tools together to build applications faster and have less code to maintain.

Q: In your role as a technology evangelist, you work with several teams. How does serverless as a technology enable teams of different sizes to innovate and build solutions faster?

Small teams work better. Serverless empowers small teams to do more because it lets them remove the parts that can be implemented off the shelf by an existing service. It naturally leads them to adopt a microservice architecture and use distributed systems. It can be hard at first if they don’t have any experience in these fields, but it’s rewarding when they learn and see the results.

If you need to make a big change in the way you build applications, for example, adopting serverless or microservices, put yourself in a place where you can make mistakes. It’s by making mistakes that we learn. As you move to production, collecting metrics on code complexity in your deployment pipeline helps keep the codebase under control. To move that a step further, I find the idea of a “fitness function” (as described in the book Building Evolutionary Architectures by Rebecca Parsons, Neal Ford, and Patrick Kua [O’Reilly]) extremely interesting, especially if you define “guardrails” about what the fitness of your application should be.

Automation helps at any scale but is incredibly effective for small teams. The AWS Well-Architected Framework also provides a way to measure the quality of your implementation and provides a Serverless Lens with specific guidance.

Q: As the leading cloud platform for developing serverless applications, what measures does AWS take to think ahead and bring new services to its consumers?

I started at AWS more than 10 years ago. Things were different at the time. Just starting an EC2 instance in a couple of minutes was considered impressive. But even if some time has passed, at AWS, we always use the same approach to build new services and features: we listen to our customers. Our roadmap is 90 to 95% based on what our customers tell us. For the rest, we try to add new ideas from the experience we have accumulated over the years in Amazon and AWS.

We want to build tools that can help solve customers’ problems, not theoretical issues. We build them in a way so that customers can choose which tools to use. We want to iterate quickly on new ideas and get as much feedback as possible when we do it.

Q: What advice would you give to the readers starting their personal or organizational serverless adoption journey?

Learn the mental model. Don’t focus on the implementation details. Understand the pros and cons of using microservices and distributed architectures. Time becomes important because there is latency and concurrency to be considered. Design your systems for asynchronous communication and eventual consistency.

Faults are a natural part of any architecture. Think of your strategy to recover and manage faults. Can you record and repeat what your application is doing? Can events help you do that? Also, two core requirements that are more important now than before are observability and sustainability. They are more related than one might think at first.

Offload the parts that are not unique to your application to services and SaaS offerings that can implement those functionalities for you. Focus on what you want to build. It’s there where you can make a difference.

1 A module is an independent and self-contained unit of software.
