Chapter 4. Model Training Patterns

Machine learning models are usually trained iteratively, and this iterative process is informally called the training loop. In this chapter, we discuss what the typical training loop looks like, and catalog a number of situations in which you might want to do something different.

Typical Training Loop

Machine learning models can be trained using different types of optimization. Decision trees are often built node by node based on an information gain measure. In genetic algorithms, the model parameters are represented as genes, and the optimization method involves techniques that are based on evolutionary theory. However, the most common approach to determining the parameters of machine learning models is gradient descent.

Stochastic Gradient Descent

On large datasets, gradient descent is applied to mini-batches of the input data to train everything from linear models and boosted trees to deep neural networks (DNNs) and support vector machines (SVMs). This is called stochastic gradient descent (SGD), and extensions of SGD (such as Adam and Adagrad) are the de facto optimizers used in modern-day machine learning frameworks.

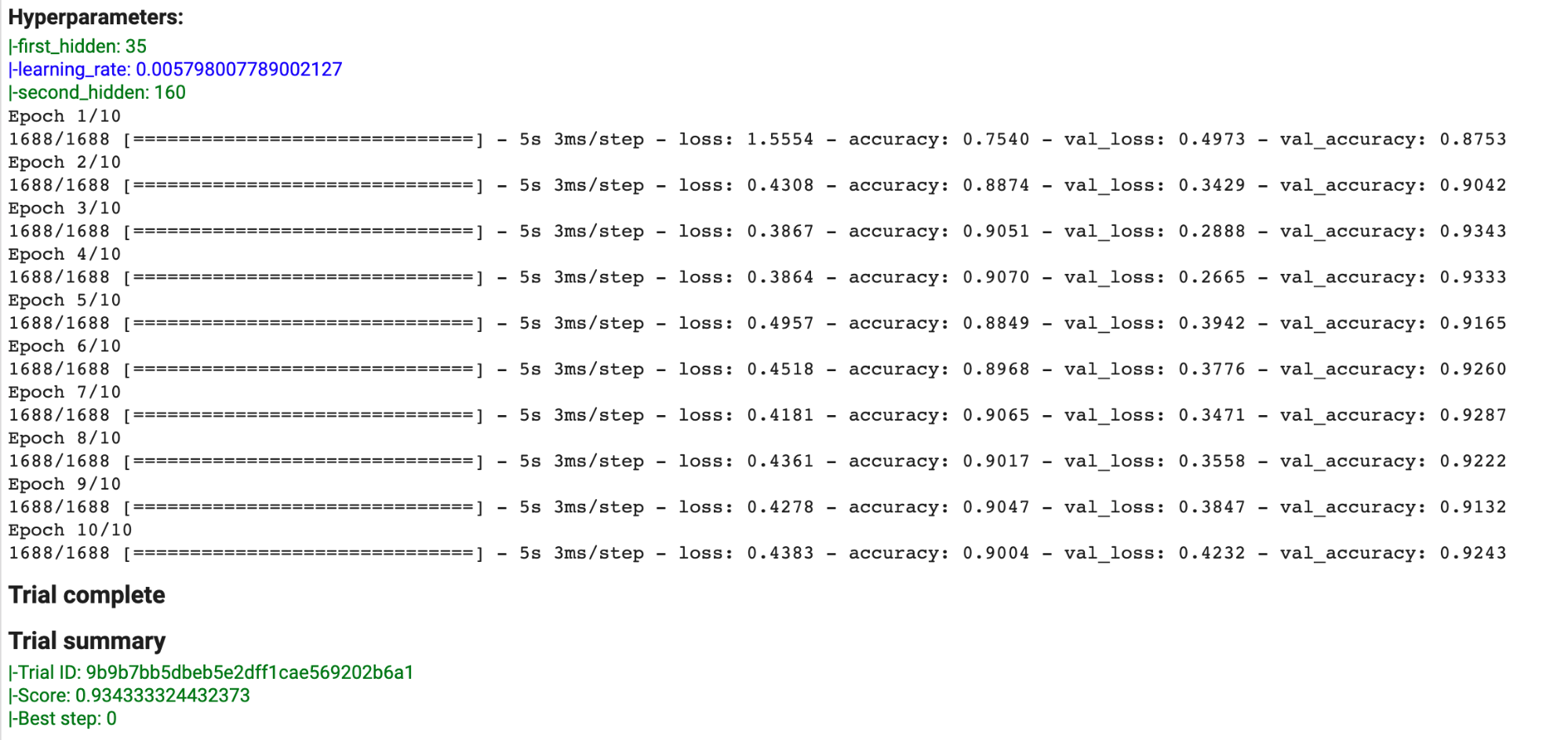

Because SGD requires training to take place iteratively on small batches of the training dataset, training a machine learning model happens in a loop. SGD finds a minimum, but is not a closed-form solution, and so we have to detect whether the model convergence has happened. Because of this, the error (called the loss) on the training dataset has to be monitored. Overfitting can happen if the model complexity is higher than can be afforded by the size and coverage of the dataset. Unfortunately, you cannot know whether the model complexity is too high for a particular dataset until you actually train that model on that dataset. Therefore, evaluation needs to be done within the training loop, and error metrics on a withheld split of the training data, called the validation dataset, have to be monitored as well. Because the training and validation datasets have been used in the training loop, it is necessary to withhold yet another split of the training dataset, called the testing dataset, to report the actual error metrics that could be expected on new and unseen data. This evaluation is carried out at the end.

Keras Training Loop

The typical training loop in Keras looks like this:

model=keras.Model(...)model.compile(optimizer=keras.optimizers.Adam(),loss=keras.losses.categorical_crossentropy(),metrics=['accuracy'])history=model.fit(x_train,y_train,batch_size=64,epochs=3,validation_data=(x_val,y_val))results=model.evaluate(x_test,y_test,batch_size=128))model.save(...)

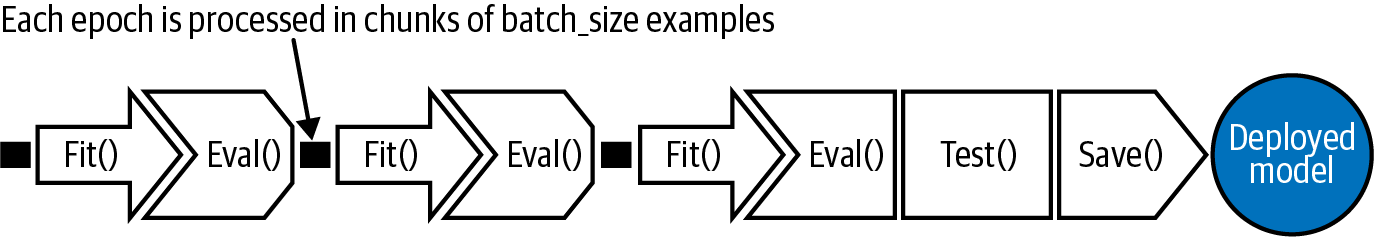

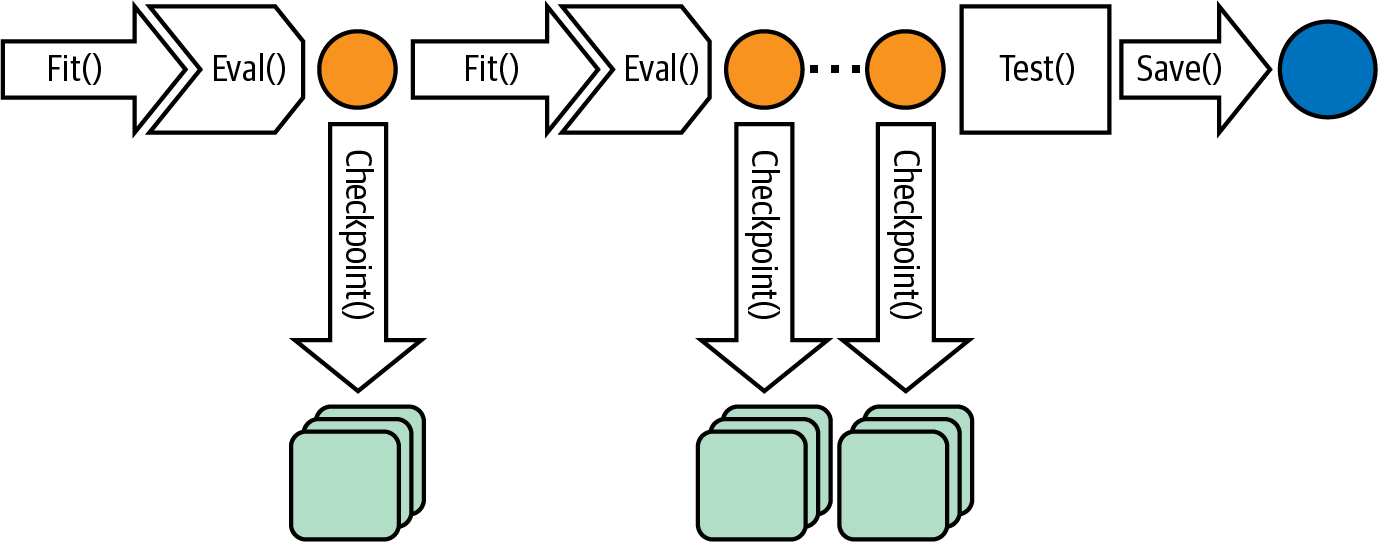

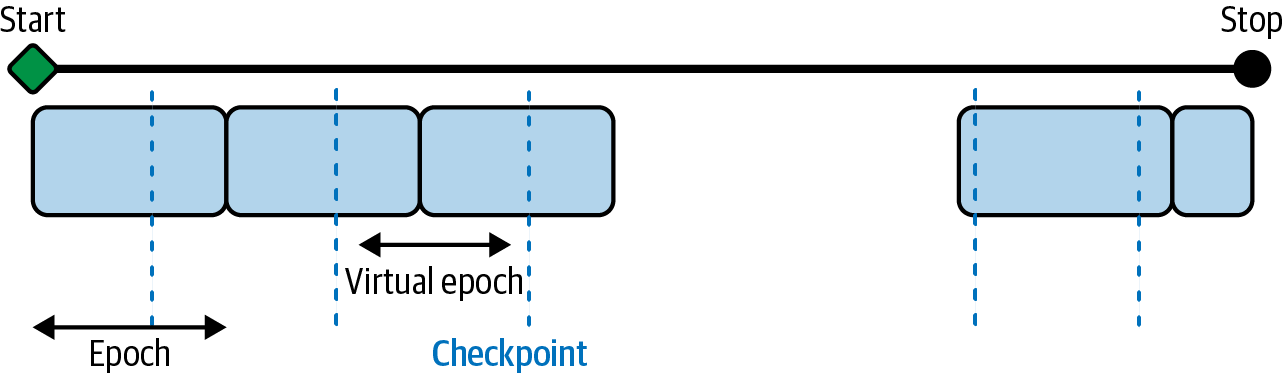

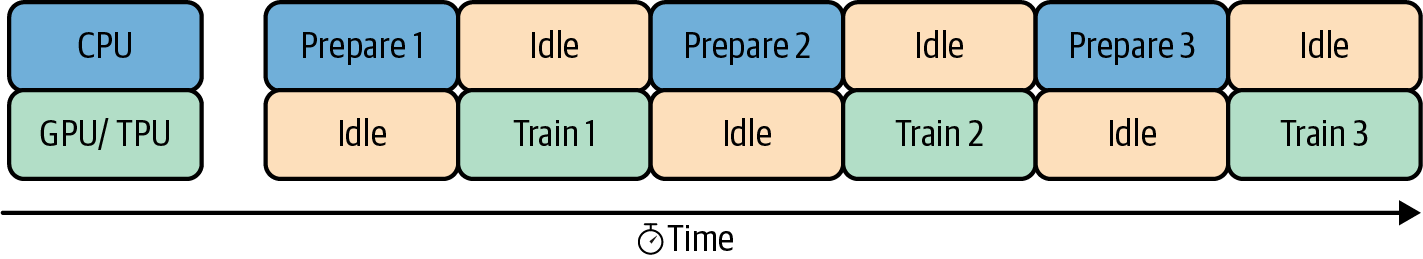

Here, the model uses the Adam optimizer to carry out SGD on the cross entropy over the training dataset and reports out the final accuracy obtained on the testing dataset. The model fitting loops over the training dataset three times (each traversal over the training dataset is termed an epoch) with the model seeing batches consisting of 64 training examples at a time. At the end of every epoch, the error metrics are calculated on the validation dataset and added to the history. At the end of the fitting loop, the model is evaluated on the testing dataset, saved, and potentially deployed for serving, as shown in Figure 4-1.

Figure 4-1. A typical training loop consisting of three epochs. Each epoch is processed in chunks of batch_size examples. At the end of the third epoch, the model is evaluated on the testing dataset, and saved for potential deployment as a web service.

Instead of using the prebuilt fit() function, we could also write a custom training loop that iterates over the batches explicitly, but we will not need to do this for any of the design patterns discussed in this chapter.

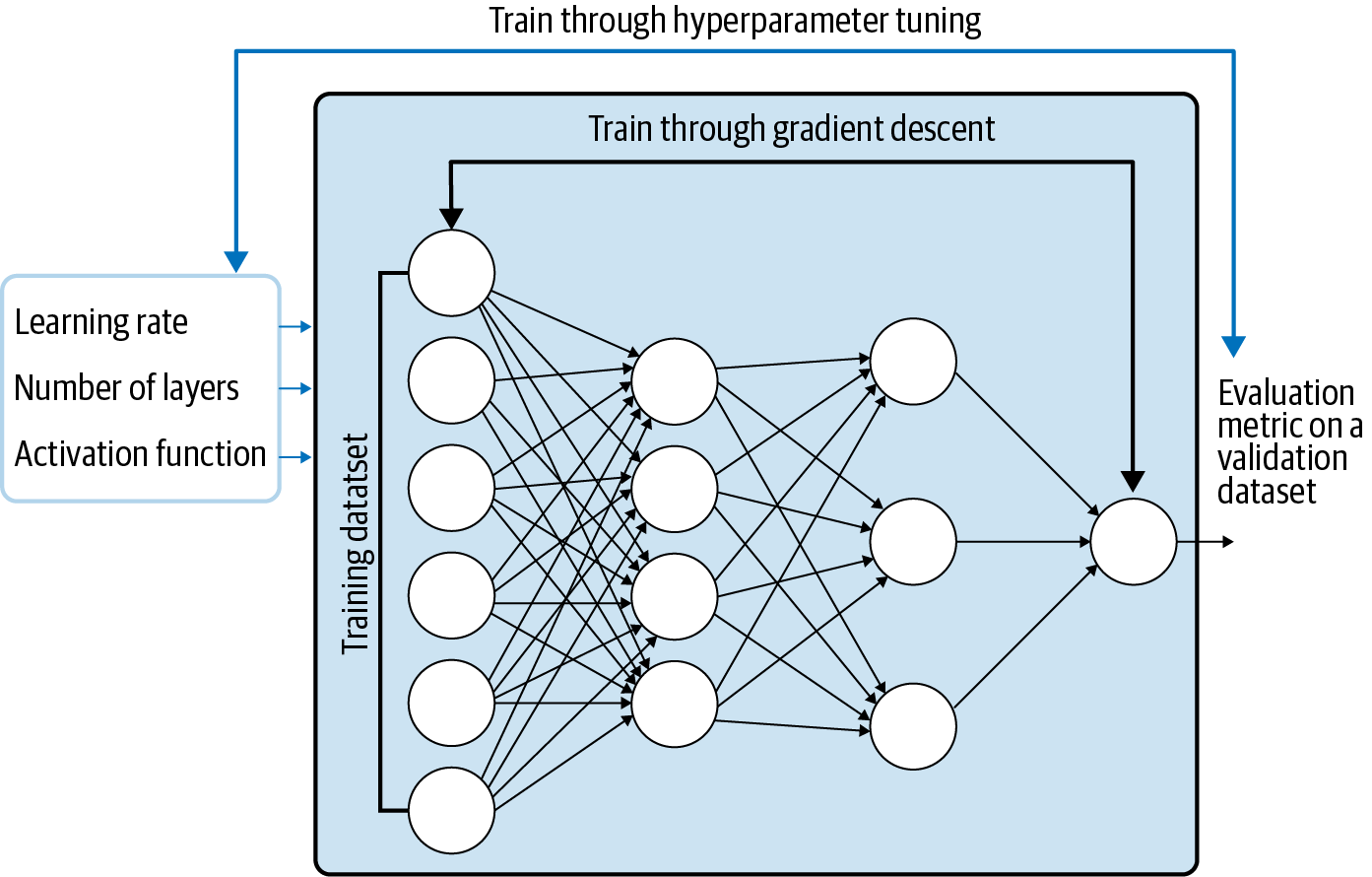

Training Design Patterns

The design patterns covered in this chapter all have to do with modifying the typical training loop in some way. In Useful Overfitting, we forgo the use of a validation or testing dataset because we want to intentionally overfit on the training dataset. In Checkpoints, we store the full state of the model periodically, so that we have access to partially trained models. When we use checkpoints, we usually also use virtual epochs, wherein we decide to carry out the inner loop of the fit() function, not on the full training dataset but on a fixed number of training examples. In Transfer Learning, we take part of a previously trained model, freeze the weights, and incorporate these nontrainable layers into a new model that solves the same problem, but on a smaller dataset. In Distribution Strategy, the training loop is carried out at scale over multiple workers, often with caching, hardware acceleration, and parallelization. Finally, in Hyperparameter Tuning, the training loop is itself inserted into an optimization method to find the optimal set of model hyperparameters.

Design Pattern 11: Useful Overfitting

Useful Overfitting is a design pattern where we forgo the use of generalization mechanisms because we want to intentionally overfit on the training dataset. In situations where overfitting can be beneficial, this design pattern recommends that we carry out machine learning without regularization, dropout, or a validation dataset for early stopping.

Problem

The goal of a machine learning model is to generalize and make reliable predictions on new, unseen data. If your model overfits the training data (for example, it continues to decrease the training error beyond the point at which validation error starts to increase), then its ability to generalize suffers and so do your future predictions. Introductory machine learning textbooks advise avoiding overfitting by using early stopping and regularization techniques.

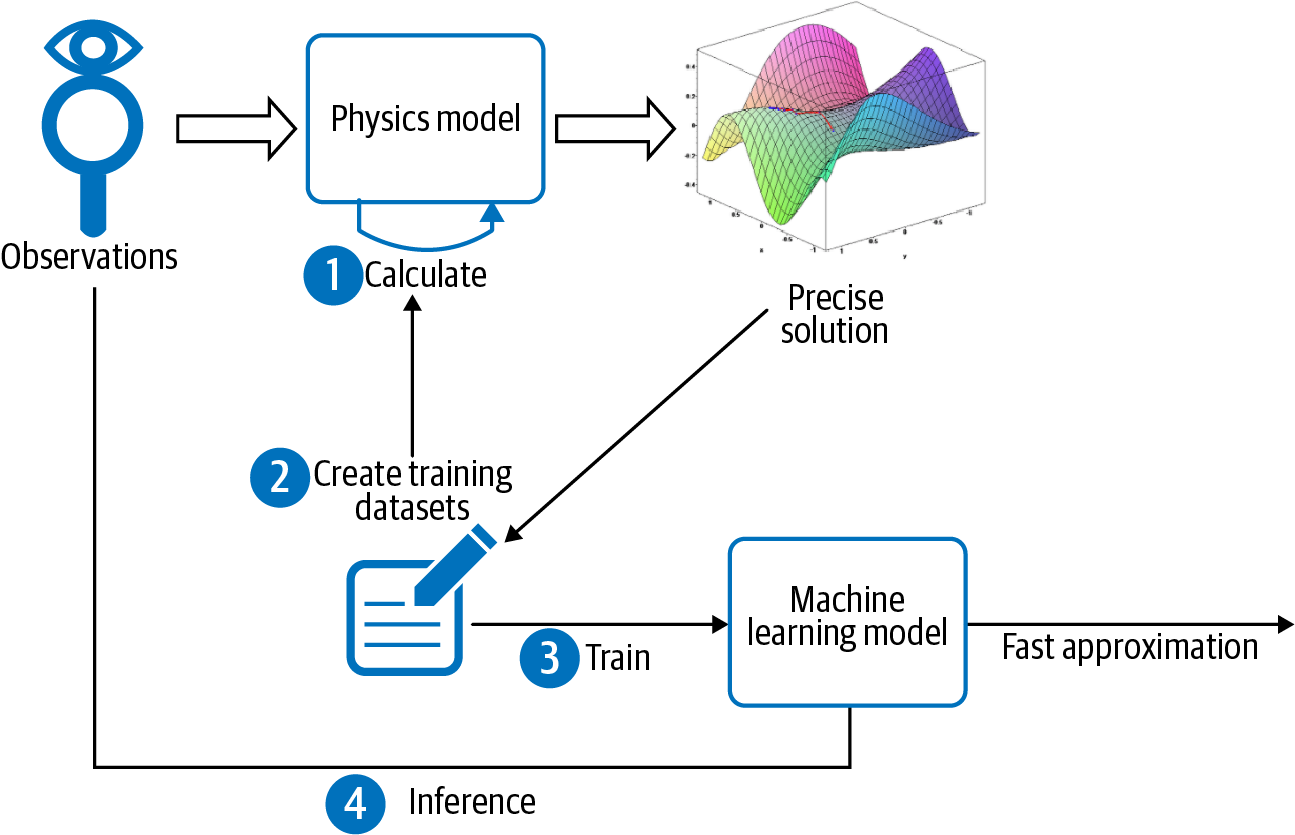

Consider, however, a situation of simulating the behavior of physical or dynamical systems like those found in climate science, computational biology, or computational finance. In such systems, the time dependence of observations can be described by a mathematical function or set of partial differential equations (PDEs). Although the equations that govern many of these systems can be formally expressed, they don’t have a closed-form solution. Instead, classical numerical methods have been developed to approximate solutions to these systems. Unfortunately, for many real-world applications, these methods can be too slow to be used in practice.

Consider the situation shown in Figure 4-2. Observations collected from the physical environment are used as inputs (or initial starting conditions) for a physics-based model that carries out iterative, numerical calculations to calculate the precise state of the system. Suppose all the observations have a finite number of possibilities (for example, temperature will be between 60°C and 80°C in increments of 0.01°C). It is then possible to create a training dataset for the machine learning system consisting of the complete input space and calculate the labels using the physical model.

Figure 4-2. One situation when it is acceptable to overfit is when the entire domain space of observations can be tabulated and a physical model capable of computing the precise solution is available.

The ML model needs to learn this precisely calculated and nonoverlapping lookup table of inputs to outputs. Splitting such a dataset into a training dataset and an evaluation dataset is counterproductive because we would then be expecting the model to learn parts of the input space it will not have seen in the training dataset.

Solution

In this scenario, there is no “unseen” data that needs to be generalized to, since all possible inputs have been tabulated. When building a machine learning model to learn such a physics model or dynamical system, there is no such thing as overfitting. The basic machine learning training paradigm is slightly different. Here, there is some physical phenomenon that you are trying to learn that is governed by an underlying PDE or system of PDEs. Machine learning merely provides a data-driven approach to approximate the precise solution, and concepts like overfitting must be reevaluated.

For example, a ray-tracing approach is used to simulate the satellite imagery that would result from the output of numerical weather prediction models. This involves calculating how much of a solar ray gets absorbed by the predicted hydrometeors (rain, snow, hail, ice pellets, and so on) at each atmospheric level. There is a finite number of possible hydrometeor types and a finite number of heights that the numerical model predicts. So the ray-tracing model has to apply optical equations to a large but finite set of inputs.

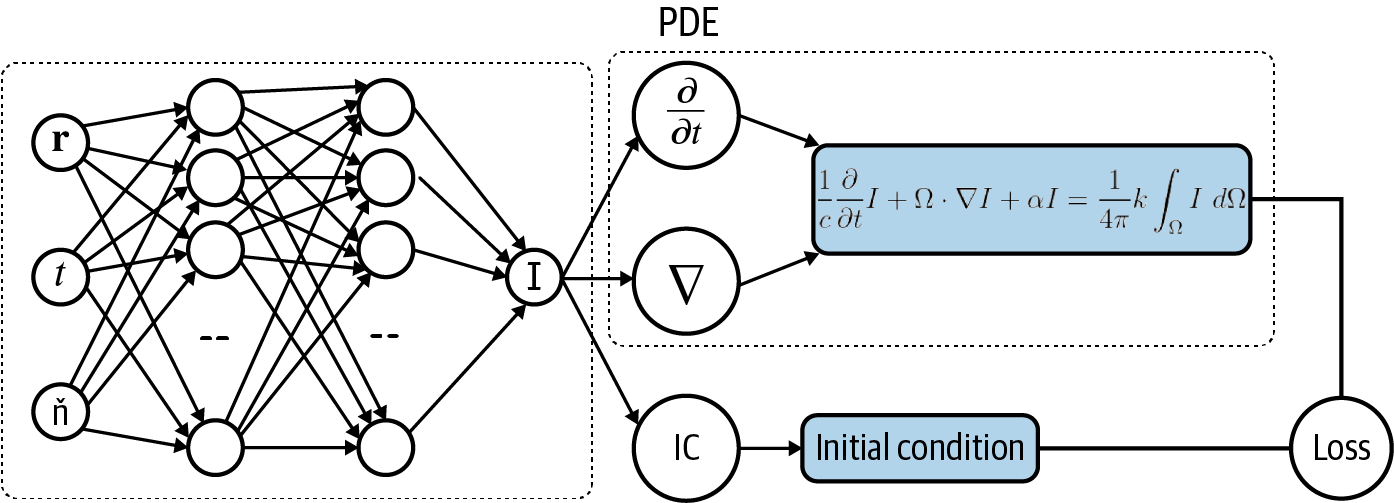

The equations of radiative transfer govern the complex dynamical system of how electromagnetic radiation propagates in the atmosphere, and forward radiative transfer models are an effective means of inferring the future state of satellite images. However, classical numerical methods to compute the solutions to these equations can take tremendous computational effort and are too slow to use in practice.

Enter machine learning. It is possible to use machine learning to build a model that approximates solutions to the forward radiative transfer model (see Figure 4-3). This ML approximation can be made close enough to the solution of the model that was originally achieved by using more classical methods. The advantage is that inference using the learned ML approximation (which needs to just calculate a closed formula) takes only a fraction of the time required to carry out ray tracing (which would require numerical methods). At the same time, the training dataset is too large (multiple terabytes) and too unwieldy to use as a lookup table in production.

Figure 4-3. Architecture for using a neural network to model the solution of a partial differential equation to solve for I(r,t,n).

There is an important difference between training an ML model to approximate the solution to a dynamical system like this and training an ML model to predict baby weight based on natality data collected over the years. Namely, the dynamical system is a set of equations governed by the laws of electromagnetic radiation—there is no unobserved variable, no noise, and no statistical variability. For a given set of inputs, there is only one precisely calculable output. There is no overlap between different examples in the training dataset. For this reason, we can toss out concerns about generalization. We want our ML model to fit the training data as perfectly as possible, to “overfit.”

This is counter to the typical approach of training an ML model where considerations of bias, variance, and generalization error play an important role. Traditional training says that it is possible for a model to learn the training data “too well,” and that training your model so that the train loss function is equal to zero is more of a red flag than cause for celebration. Overfitting of the training dataset in this way causes the model to give misguided predictions on new, unseen data points. The difference here is that we know in advance there won’t be unseen data, thus the model is approximating a solution to a PDE over the full input spectrum. If your neural network is able to learn a set of parameters where the loss function is zero, then that parameter set determines the actual solution of the PDE in question.

Why It Works

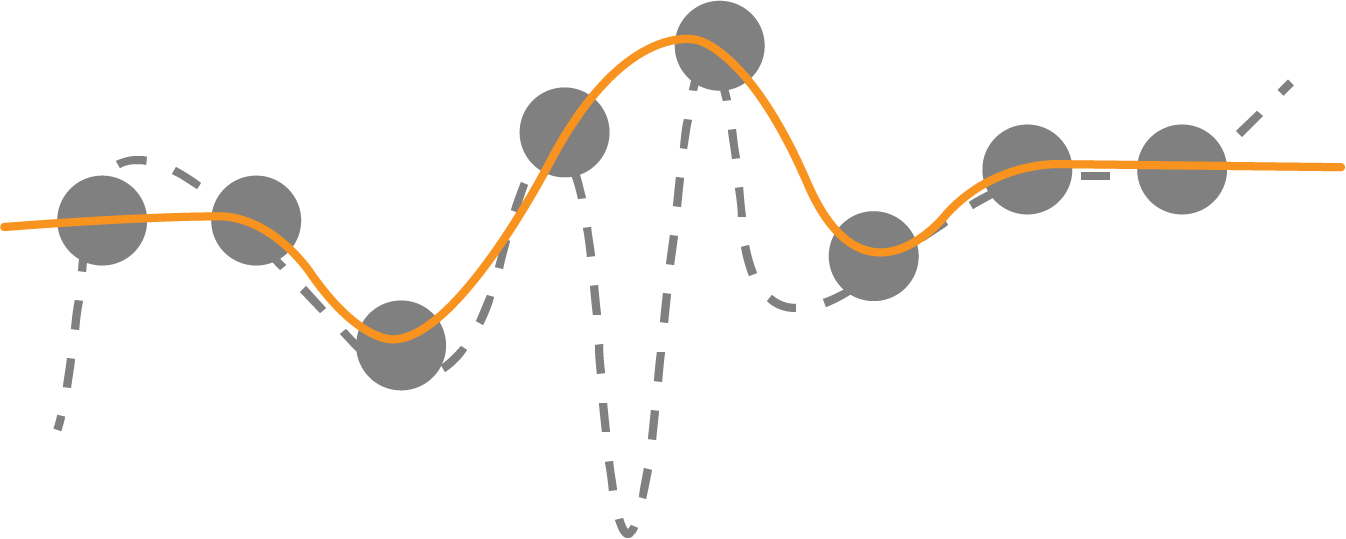

If all possible inputs can be tabulated, then as shown by the dotted curve in Figure 4-4, an overfit model will still make the same predictions as the “true” model if all possible input points are trained for. So overfitting is not a concern. We have to take care that inferences are made on rounded-off values of the inputs, with the rounding determined by the resolution with which the input space was gridded.

Figure 4-4. Overfitting is not a concern if all possible input points are trained for because predictions are the same with both curves.

Is it possible to find a model function that gets arbitrarily close to the true labels? One bit of intuition as to why this works comes from the Uniform Approximation Theorem of deep learning, which, loosely put, states that any function (and its derivatives) can be approximated by a neural network with at least one hidden layer and any “squashing” activation function, like sigmoid. This means that no matter what function we are given, so long as it’s relatively well behaved, there exists a neural network with just one hidden layer that approximates that function as closely as we want.1

Deep learning approaches to solving differential equations or complex dynamical systems aim to represent a function defined implicitly by a differential equation, or system of equations, using a neural network.

Overfitting is useful when the following two conditions are met:

-

There is no noise, so the labels are accurate for all instances.

-

You have the complete dataset at your disposal (you have all the examples there are). In this case, overfitting becomes interpolating the dataset.

Trade-Offs and Alternatives

We introduced overfitting as being useful when the set of inputs can be exhaustively listed and the accurate label for each set of inputs can be calculated. If the full input space can be tabulated, overfitting is not a concern because there is no unseen data. However, the Useful Overfitting design pattern is useful beyond this narrow use case. In many real-world situations, even if one or more of these conditions has to be relaxed, the concept that overfitting can be useful remains valid.

Interpolation and chaos theory

The machine learning model essentially functions as an approximation to a lookup table of inputs to outputs. If the lookup table is small, just use it as a lookup table! There is no need to approximate it by a machine learning model. An ML approximation is useful in situations where the lookup table will be too large to effectively use. It is when the lookup table is too unwieldy that it becomes better to treat it as the training dataset for a machine learning model that approximates the lookup table.

Note that we assumed that the observations would have a finite number of possibilities. For example, we posited that temperature would be measured in 0.01°C increments and lie between 60°C and 80°C. This will be the case if the observations are made by digital instruments. If this is not the case, the ML model is needed to interpolate between entries in the lookup table.

Machine learning models interpolate by weighting unseen values by the distance of these unseen values from training examples. Such interpolation works only if the underlying system is not chaotic. In chaotic systems, even if the system is deterministic, small differences in initial conditions can lead to dramatically different outcomes. Nevertheless, in practice, each specific chaotic phenomenon has a specific resolution threshold beyond which it is possible for models to forecast it over short time periods. Therefore, provided the lookup table is fine-grained enough and the limits of resolvability are understood, useful approximations can result.

Monte Carlo methods

In reality, tabulating all possible inputs might not be possible, and you might take a Monte Carlo approach of sampling the input space to create the set of inputs, especially where not all possible combinations of inputs are physically possible.

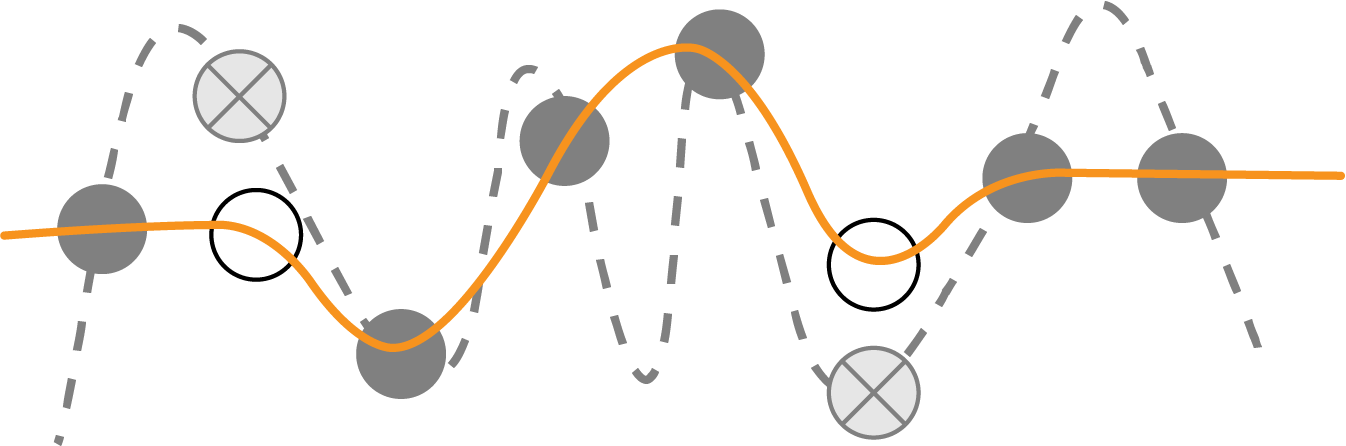

In such cases, overfitting is technically possible (see Figure 4-5, where the unfilled circles are approximated by wrong estimates shown by crossed circles).

Figure 4-5. If the input space is sampled, not tabulated, then you need to take care to limit model complexity.

However, even here, you can see that the ML model will be interpolating between known answers. The calculation is always deterministic, and it is only the input points that are subject to random selection. Therefore, these known answers do not contain noise, and because there are no unobserved variables, errors at unsampled points will be strictly bounded by the model complexity. Here, the overfitting danger comes from model complexity and not from fitting to noise. Overfitting is not as much of a concern when the size of the dataset is larger than the number of free parameters. Therefore, using a combination of low-complexity models and mild regularization provides a practical way to avoid unacceptable overfitting in the case of Monte Carlo selection of the input space.

Data-driven discretizations

Although deriving a closed-form solution is possible for some PDEs, determining solutions using numerical methods is more common. Numerical methods of PDEs are already a deep field of research, and there are many books, courses, and journals devoted to the subject. One common approach is to use finite difference methods, similar to Runge-Kutta methods, for solving ordinary differential equations. This is typically done by discretizing the differential operator of the PDE and finding a solution to the discrete problem on a spatio-temporal grid of the original domain. However, when the dimension of the problem becomes large, this mesh-based approach fails dramatically due to the curse of dimensionality because the mesh spacing of the grid must be small enough to capture the smallest feature size of the solution. So, to achieve 10× higher resolution of an image requires 10,000× more compute power, because the mesh grid must be scaled in four dimensions accounting for space and time.

However, it is possible to use machine learning (rather than Monte Carlo methods) to select the sampling points to create data-driven discretizations of PDEs. In the paper "Learning data-driven discretizations for PDEs,” Bar-Sinai et al. demonstrate the effectiveness of this approach. The authors use a low-resolution grid of fixed points to approximate a solution via a piecewise polynomial interpolation using standard finite-difference methods as well as one obtained from a neural network. The solution obtained from the neural network vastly outperforms the numeric simulation in minimizing the absolute error, in some places achieving a 102 order of magnitude improvement. While increasing the resolution requires substantially more compute power using finite-difference methods, the neural network is able to maintain high performance with only marginal additional cost. Techniques like the Deep Galerkin Method can then use deep learning to provide a mesh-free approximation of the solution to the given PDE. In this way, solving the PDE is reduced to a chained optimization problem (see “Design Pattern 8: Cascade ”).

Unbounded domains

The Monte Carlo and data-driven discretization methods both assume that sampling the entire input space, even if imperfectly, is possible. That’s why the ML model was treated as an interpolation between known points.

Generalization and the concern of overfitting become difficult to ignore whenever we are unable to sample points in the full domain of the function—for example, for functions with unbounded domains or projections along a time axis into the future. In these settings, it is important to consider overfitting, underfitting, and generalization error. In fact, it’s been shown that although techniques like the Deep Galerkin Method do well on regions that are well sampled, a function that is learned this way does not generalize well on regions outside the domain that were not sampled in the training phase. This can be problematic for using ML to solve PDEs that are defined on unbounded domains, since it would be impossible to capture a representative sample for training.

Distilling knowledge of neural network

Another situation where overfitting is warranted is in distilling, or transferring knowledge, from a large machine learning model into a smaller one. Knowledge distillation is useful when the learning capacity of the large model is not fully utilized. If that is the case, the computational complexity of the large model may not be necessary. However, it is also the case that training smaller models is harder. While the smaller model has enough capacity to represent the knowledge, it may not have enough capacity to learn the knowledge efficiently.

The solution is to train the smaller model on a large amount of generated data that is labeled by the larger model. The smaller model learns the soft output of the larger model, instead of actual labels on real data. This is a simpler problem that can be learned by the smaller model. As with approximating a numerical function by a machine learning model, the aim is for the smaller model to faithfully represent the predictions of the larger machine learning model. This second training step can employ Useful Overfitting.

Overfitting a batch

In practice, training neural networks requires a lot of experimentation, and a practitioner must make many choices, from the size and architecture of the network to the choice of the learning rate, weight initializations, or other hyperparameters.

Overfitting on a small batch is a good sanity check both for the model code as well as the data input pipeline. Just because the model compiles and the code runs without errors doesn’t mean you’ve computed what you think you have or that the training objective is configured correctly. A complex enough model should be able to overfit on a small enough batch of data, assuming everything is set up correctly. So, if you’re not able to overfit a small batch with any model, it’s worth rechecking your model code, input pipeline, and loss function for any errors or simple bugs. Overfitting on a batch is a useful technique when training and troubleshooting neural networks.

Tip

Overfitting goes beyond just a batch. From a more holistic perspective, overfitting follows the general advice commonly given with regards to deep learning and regularization. The best fitting model is a large model that has been properly regularized. In short, if your deep neural network isn’t capable of overfitting your training dataset, you should be using a bigger one. Then, once you have a large model that overfits the training set, you can apply regularization to improve the validation accuracy, even though training accuracy may decrease.

You can test your Keras model code in this way using the tf.data.Dataset you’ve written for your input pipeline. For example, if your training data input pipeline is called trainds, we’ll use batch() to pull a single batch of data. You can find the full code for this example in the repository accompanying this book:

BATCH_SIZE=256single_batch=trainds.batch(BATCH_SIZE).take(1)

Then, when training the model, instead of calling the full trainds dataset inside the fit() method, use the single batch that we created:

model.fit(single_batch.repeat(),validation_data=evalds,…)

Note that we apply repeat() so that we won’t run out of data when training on that single batch. This ensures that we take the one batch over and over again while training. Everything else (the validation dataset, model code, engineered features, and so on) remains the same.

Tip

Rather than choose an arbitrary sample of the training dataset, we recommend that you overfit on a small dataset, each of whose examples has been carefully verified to have correct labels. Design your neural network architecture such that it is able to learn this batch of data precisely and get to zero loss. Then take the same network and train it on the full training dataset.

Design Pattern 12: Checkpoints

In Checkpoints, we store the full state of the model periodically so that we have partially trained models available. These partially trained models can serve as the final model (in the case of early stopping) or as the starting points for continued training (in the cases of machine failure and fine-tuning).

Problem

The more complex a model is (for example, the more layers and nodes a neural network has), the larger the dataset that is needed to train it effectively. This is because more complex models tend to have more tunable parameters. As model sizes increase, the time it takes to fit one batch of examples also increases. As the data size increases (and assuming batch sizes are fixed), the number of batches also increases. Therefore, in terms of computational complexity, this double whammy means that training will take a long time.

At the time of writing, training an English-to-German translation model on a state-of-the-art tensor processing unit (TPU) pod on a relatively small dataset takes about two hours. On real datasets of the sort used to train smart devices, the training can take several days.

When we have training that takes this long, the chances of machine failure are uncomfortably high. If there is a problem, we’d like to be able to resume from an intermediate point, instead of from the very beginning.

Solution

At the end of every epoch, we can save the model state. Then, if the training loop is interrupted for any reason, we can go back to the saved model state and restart. However, when doing this, we have to make sure to save the intermediate model state, not just the model. What does that mean?

Once training is complete, we save or export the model so that we can deploy it for inference. An exported model does not contain the entire model state, just the information necessary to create the prediction function. For a decision tree, for example, this would be the final rules for each intermediate node and the predicted value for each of the leaf nodes. For a linear model, this would be the final values of the weights and biases. For a fully connected neural network, we’d also need to add the activation functions and the weights of the hidden connections.

What data on model state do we need when restoring from a checkpoint that an exported model does not contain? An exported model does not contain which epoch and batch number the model is currently processing, which is obviously important in order to resume training. But there is more information that a model training loop can contain. In order to carry out gradient descent effectively, the optimizer might be changing the learning rate on a schedule. This learning rate state is not present in an exported model. Additionally, there might be stochastic behavior in the model, such as dropout. This is not captured in the exported model state either. Models like recurrent neural networks incorporate history of previous input values. In general, the full model state can be many times the size of the exported model.

Saving the full model state so that model training can resume from a point is called checkpointing, and the saved model files are called checkpoints. How often should we checkpoint? The model state changes after every batch because of gradient descent. So, technically, if we don’t want to lose any work, we should checkpoint after every batch. However, checkpoints are huge and this I/O would add considerable overhead. Instead, model frameworks typically provide the option to checkpoint at the end of every epoch. This is a reasonable tradeoff between never checkpointing and checkpointing after every batch.

To checkpoint a model in Keras, provide a callback to the fit() method:

checkpoint_path='{}/checkpoints/taxi'.format(OUTDIR)cp_callback=tf.keras.callbacks.ModelCheckpoint(checkpoint_path,save_weights_only=False,verbose=1)history=model.fit(x_train,y_train,batch_size=64,epochs=3,validation_data=(x_val,y_val),verbose=2,callbacks=[cp_callback])

With checkpointing added, the training looping becomes what is shown in Figure 4-6.

Figure 4-6. Checkpointing saves the full model state at the end of every epoch.

Why It Works

TensorFlow and Keras automatically resume training from a checkpoint if checkpoints are found in the output path. To start training from scratch, therefore, you have to start from a new output directory (or delete previous checkpoints from the output directory). This works because enterprise-grade machine learning frameworks honor the presence of checkpoint files.

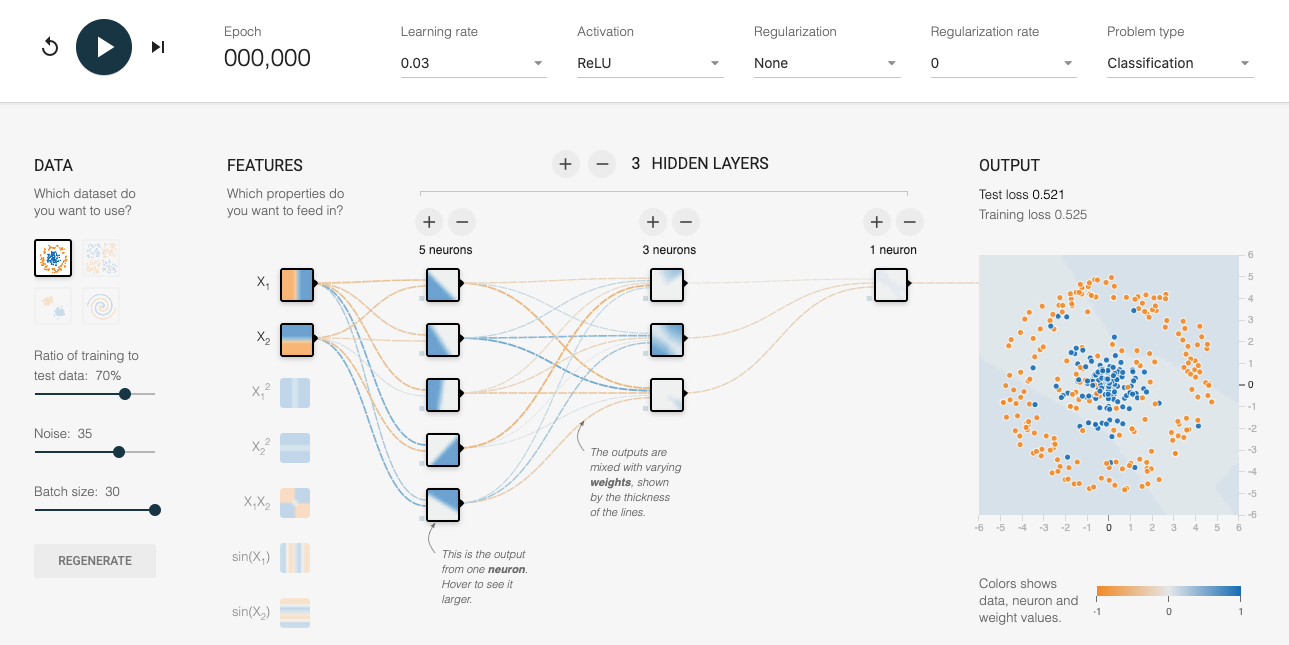

Even though checkpoints are designed primarily to support resilience, the availability of partially trained models opens up a number of other use cases. This is because the partially trained models are usually more generalizable than the models created in later iterations. A good intuition of why this occurs can be obtained from the TensorFlow playground, as shown in Figure 4-7.

Figure 4-7. Starting point of the spiral classification problem. You can get to this setup by opening up this link in a web browser.

In the playground, we are trying to build a classifier to distinguish between blue dots and orange dots (if you are reading this in the print book, please do follow along by navigating to the link in a web browser). The two input features are x1 and x2, which are the coordinates of the points. Based on these features, the model needs to output the probability that the point is blue. The model starts with random weights and the background of the dots shows the model prediction for each coordinate point. As you can see, because the weights are random, the probability tends to hover near the center value for all the pixels.

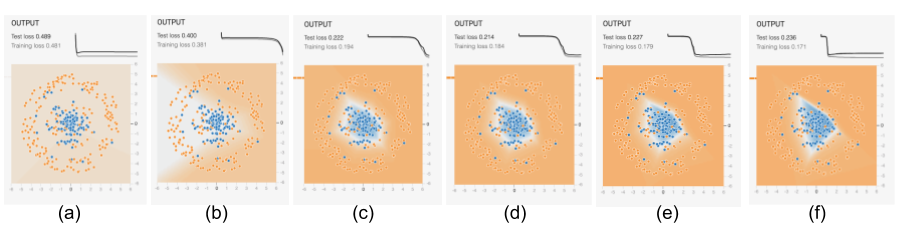

Starting the training by clicking on the arrow at the top left of the image, we see the model slowly start to learn with successive epochs, as shown in Figure 4-8.

Figure 4-8. What the model learns as training progresses. The graphs at the top are the training loss and validation error, while the images show how the model at that stage would predict the color of a point at each coordinate in the grid.

We see the first hint of learning in Figure 4-8(b), and see that the model has learned the high-level view of the data by Figure 4-8(c). From then on, the model is adjusting the boundaries to get more and more of the blue points into the center region while keeping the orange points out. This helps, but only up to point. By the time we get to Figure 4-8(e), the adjustment of weights is starting to reflect random perturbations in the training data, and these are counterproductive on the validation dataset.

We can therefore break the training into three phases. In the first phase, between stages (a) and (c), the model is learning high-level organization of the data. In the second phase, between stages and (c) and (e), the model is learning the details. By the time we get to the third phase, stage (f), the model is overfitting. A partially trained model from the end of phase 1 or from phase 2 has some advantages precisely because it has learned the high-level organization but is not caught up in the details.

Trade-Offs and Alternatives

Besides providing resilience, saving intermediate checkpoints also allows us to implement early stopping and fine-tuning capabilities.

Early stopping

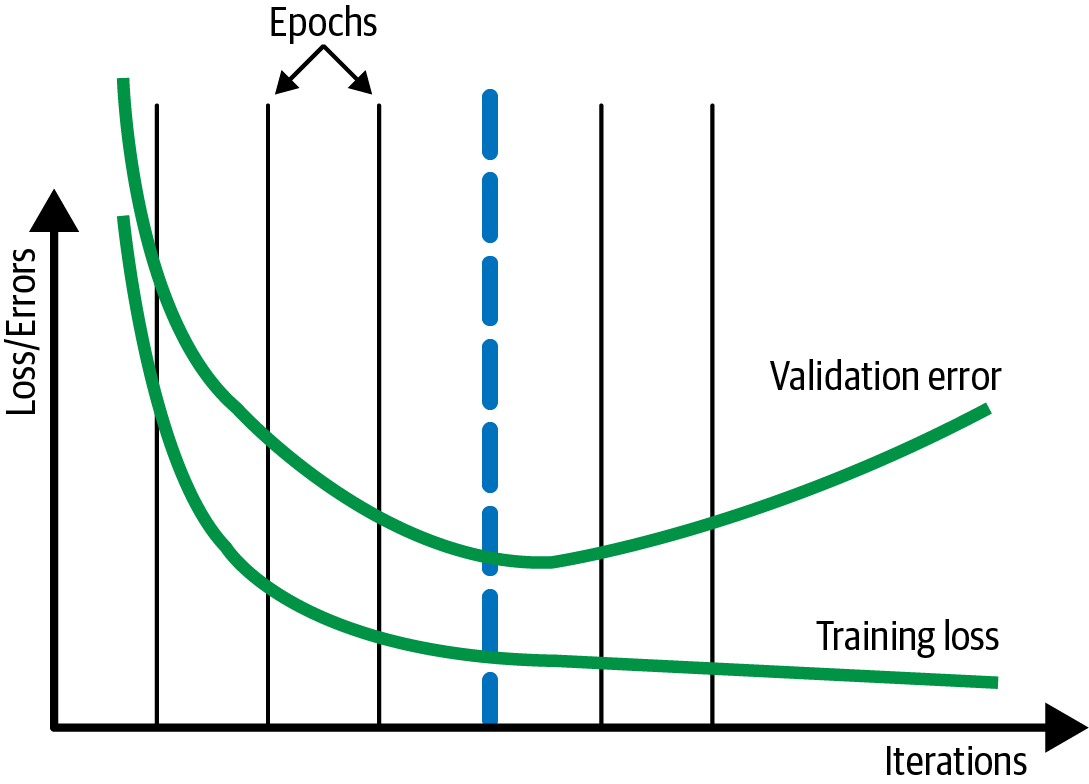

In general, the longer you train, the lower the loss on the training dataset. However, at some point, the error on the validation dataset might stop decreasing. If you are starting to overfit to the training dataset, the validation error might even start to increase, as shown in Figure 4-9.

Figure 4-9. Typically, the training loss continues to drop the longer you train, but once overfitting starts, the validation error on a withheld dataset starts to go up.

In such cases, it can be helpful to look at the validation error at the end of every epoch and stop the training process when the validation error is more than that of the previous epoch. In Figure 4-9, this will be at the end of the fourth epoch, shown by the thick dashed line. This is called early stopping.

Tip

Had we been checkpointing at the end of every batch, we might have been able to capture the true minimum, which might have been a bit before or after the epoch boundary. See the discussion on virtual epochs in this section for a more frequent way to checkpoint.

If we are checkpointing much more frequently, it can be helpful if early stopping isn’t overly sensitive to small perturbations in the validation error. Instead, we can apply early stopping only after the validation error doesn’t improve for more than N checkpoints.

Checkpoint selection

While early stopping can be implemented by stopping the training as soon as the validation error starts to increase, we recommend training longer and choosing the optimal run as a postprocessing step. The reason we suggest training well into phase 3 (see the preceding “Why It Works” section for an explanation of the three phases of the training loop) is that it is not uncommon for the validation error to increase for a bit and then start to drop again. This is usually because the training initially focuses on more common scenarios (phase 1), then starts to home in on the rarer situations (phase 2). Because rare situations may be imperfectly sampled between the training and validation datasets, occasional increases in the validation error during the training run are to be expected in phase 2. In addition, there are situations endemic to big models where deep double descent is expected, and so it is essential to train a bit longer just in case.

In our example, instead of exporting the model at the end of the training run, we will load up the fourth checkpoint and export our final model from there instead. This is called checkpoint selection, and in TensorFlow, it can be achieved using BestExporter.

Regularization

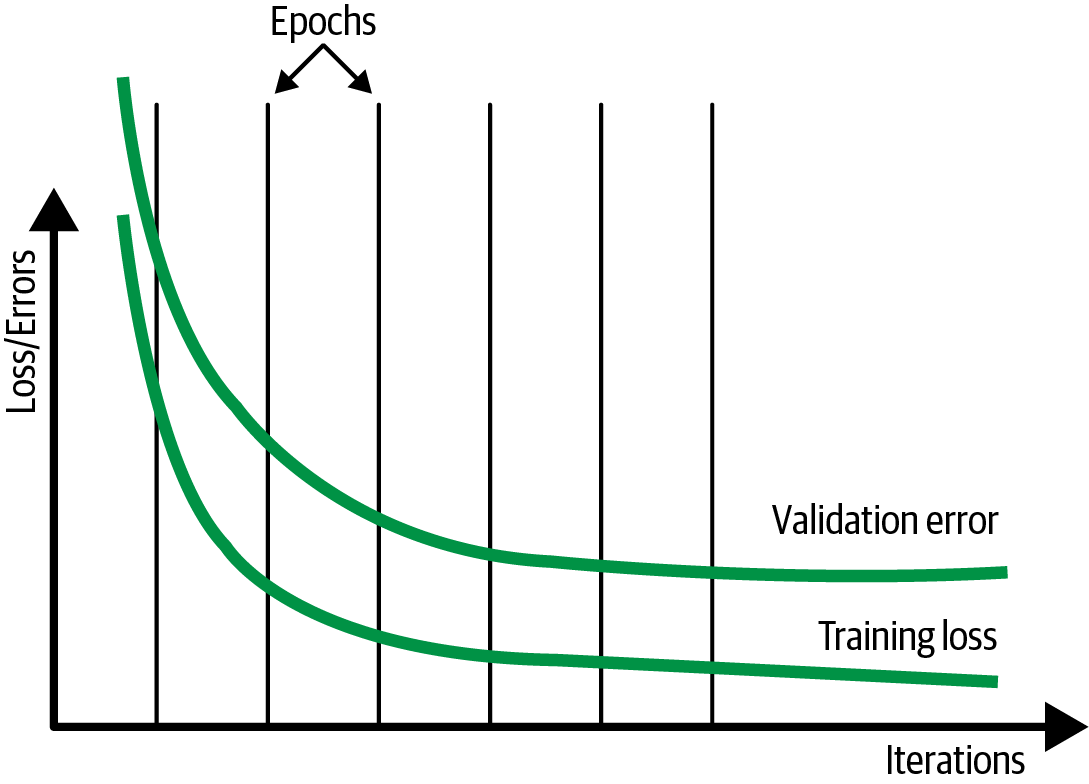

Instead of using early stopping or checkpoint selection, it can be helpful to try to add L2 regularization to your model so that the validation error does not increase and the model never gets into phase 3. Instead, both the training loss and the validation error should plateau, as shown in Figure 4-10. We term such a training loop (where both training and validation metrics reach a plateau) a well-behaved training loop.

Figure 4-10. In the ideal situation, validation error does not increase. Instead, both the training loss and validation error plateau.

If early stopping is not carried out, and only the training loss is used to decide convergence, then we can avoid having to set aside a separate testing dataset. Even if we are not doing early stopping, displaying the progress of the model training can be helpful, particularly if the model takes a long time to train. Although the performance and progress of the model training is normally monitored on the validation dataset during the training loop, it is for visualization purposes only. Since we don’t have to take any action based on metrics being displayed, we can carry out visualization on the test dataset.

The reason that using regularization might be better than early stopping is that regularization allows you to use the entire dataset to change the weights of the model, whereas early stopping requires you to waste 10% to 20% of your dataset purely to decide when to stop training. Other methods to limit overfitting (such as dropout and using models with lower complexity) are also good alternatives to early stopping. In addition, recent research indicates that double descent happens in a variety of machine learning problems, and therefore it is better to train longer rather than risk a suboptimal solution by stopping early.

Two splits

Isn’t the advice in the regularization section in conflict with the advice in the previous sections on early stopping or checkpoint selection? Not really.

We recommend that you split your data into two parts: a training dataset and an evaluation dataset. The evaluation dataset plays the part of the test dataset during experimentation (where there is no validation dataset) and plays the part of the validation dataset in production (where there is no test dataset).

The larger your training dataset, the more complex a model you can use, and the more accurate a model you can get. Using regularization rather than early stopping or checkpoint selection allows you to use a larger training dataset. In the experimentation phase (when you are exploring different model architectures, training techniques, and hyperparameters), we recommend that you turn off early stopping and train with larger models (see also “Design Pattern 11: Useful Overfitting”). This is to ensure that the model has enough capacity to learn the predictive patterns. During this process, monitor error convergence on the training split. At the end of experimentation, you can use the evaluation dataset to diagnose how well your model does on data it has not encountered during training.

When training the model to deploy in production, you will need to prepare to be able to do continuous evaluation and model retraining. Turn on early stopping or checkpoint selection and monitor the error metric on the evaluation dataset. Choose between early stopping and checkpoint selection depending on whether you need to control cost (in which case, you would choose early stopping) or want to prioritize model accuracy (in which case, you would choose checkpoint selection).

Fine-tuning

In a well-behaved training loop, gradient descent behaves such that you get to the neighborhood of the optimal error quickly on the basis of the majority of your data, then slowly converge toward the lowest error by optimizing on the corner cases.

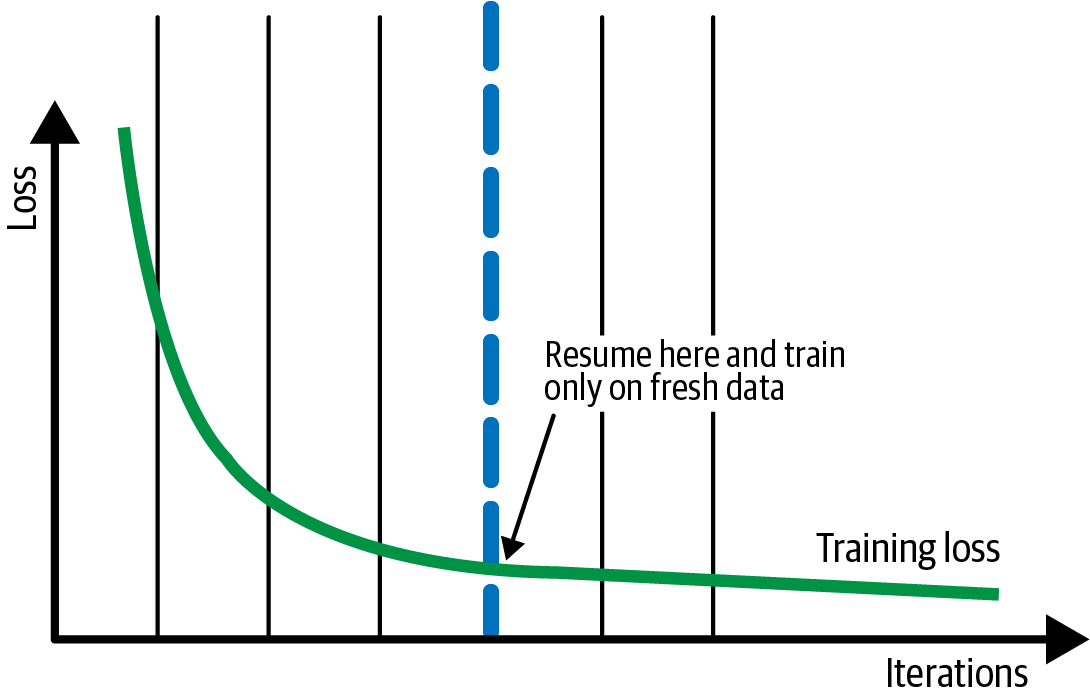

Now, imagine that you need to periodically retrain the model on fresh data. You typically want to emphasize the fresh data, not the corner cases from last month. You are often better off resuming your training, not from the last checkpoint, but the checkpoint marked by the blue line in Figure 4-11. This corresponds to the start of phase 2 in our discussion of the phases of model training described earlier in “Why It Works”. This helps ensure that you have a general method that you are able to then fine-tune for a few epochs on just the fresh data.

When you resume from the checkpoint marked by the thick dashed vertical line, you will be on the fourth epoch, and so the learning rate will be quite low. Therefore, the fresh data will not dramatically change the model. However, the model will behave optimally (in the context of the larger model) on the fresh data because you will have sharpened it on this smaller dataset. This is called fine-tuning. Fine-tuning is also discussed in “Design Pattern 13: Transfer Learning”.

Figure 4-11. Resume from a checkpoint from before the training loss starts to plateau. Train only on fresh data for subsequent iterations.

Warning

Fine-tuning only works as long as you are not changing the model architecture.

It is not necessary to always start from an earlier checkpoint. In some cases, the final checkpoint (that is used to serve the model) can be used as a warm start for another model training iteration. Still, starting from an earlier checkpoint tends to provide better generalization.

Redefining an epoch

Machine learning tutorials often have code like this:

model.fit(X_train,y_train,batch_size=100,epochs=15)

This code assumes that you have a dataset that fits in memory, and consequently that your model can iterate through 15 epochs without running the risk of machine failure. Both these assumptions are unreasonable—ML datasets range into terabytes, and when training can last hours, the chances of machine failure are high.

To make the preceding code more resilient, supply a TensorFlow dataset (not just a NumPy array) because the TensorFlow dataset is an out-of-memory dataset. It provides iteration capability and lazy loading. The code is now as follows:

cp_callback=tf.keras.callbacks.ModelCheckpoint(...)history=model.fit(trainds,validation_data=evalds,epochs=15,batch_size=128,callbacks=[cp_callback])

However, using epochs on large datasets remains a bad idea. Epochs may be easy to understand, but the use of epochs leads to bad effects in real-world ML models. To see why, imagine that you have a training dataset with one million examples. It can be tempting to simply go through this dataset 15 times (for example) by setting the number of epochs to 15. There are several problems with this:

-

The number of epochs is an integer, but the difference in training time between processing the dataset 14.3 times and 15 times can be hours. If the model has converged after having seen 14.3 million examples, you might want to exit and not waste the computational resources necessary to process 0.7 million more examples.

-

You checkpoint once per epoch, and waiting one million examples between checkpoints might be way too long. For resilience, you might want to checkpoint more often.

-

Datasets grow over time. If you get 100,000 more examples and you train the model and get a higher error, is it because you need to do an early stop, or is the new data corrupt in some way? You can’t tell because the prior training was on 15 million examples and the new one is on 16.5 million examples.

-

In distributed, parameter-server training (see “Design Pattern 14: Distribution Strategy”) with data parallelism and proper shuffling, the concept of an epoch is not clear anymore. Because of potentially straggling workers, you can only instruct the system to train on some number of mini-batches.

Steps per epoch

Instead of training for 15 epochs, we might decide to train for 143,000 steps where the batch_size is 100:

NUM_STEPS=143000BATCH_SIZE=100NUM_CHECKPOINTS=15cp_callback=tf.keras.callbacks.ModelCheckpoint(...)history=model.fit(trainds,validation_data=evalds,epochs=NUM_CHECKPOINTS,steps_per_epoch=NUM_STEPS//NUM_CHECKPOINTS,batch_size=BATCH_SIZE,callbacks=[cp_callback])

Each step involves weight updates based on a single mini-batch of data, and this allows us to stop at 14.3 epochs. This gives us much more granularity, but we have to define an “epoch” as 1/15th of the total number of steps:

steps_per_epoch=NUM_STEPS//NUM_CHECKPOINTS,

This is so that we get the right number of checkpoints. It works as long as we make sure to repeat the trainds infinitely:

trainds=trainds.repeat()

The repeat() is needed because we no longer set num_epochs, so the number of epochs defaults to one. Without the repeat(), the model will exit once the training patterns are exhausted after reading the dataset once.

Retraining with more data

What happens when we get 100,000 more examples? Easy! We add it to our data warehouse but do not update the code. Our code will still want to process 143,000 steps, and it will get to process that much data, except that 10% of the examples it sees are newer. If the model converges, great. If it doesn’t, we know that these new data points are the issue because we are not training longer than we were before. By keeping the number of steps constant, we have been able to separate out the effects of new data from training on more data.

Once we have trained for 143,000 steps, we restart the training and run it a bit longer (say, 10,000 steps), and as long as the model continues to converge, we keep training it longer. Then, we update the number 143,000 in the code above (in reality, it will be a parameter to the code) to reflect the new number of steps.

This all works fine, until you want to do hyperparameter tuning. When you do hyperparameter tuning, you will want to want to change the batch size. Unfortunately, if you change the batch size to 50, you will find yourself training for half the time because we are training for 143,000 steps, and each step is only half as long as before. Obviously, this is no good.

Virtual epochs

The answer is to keep the total number of training examples shown to the model (not number of steps; see Figure 4-12) constant:

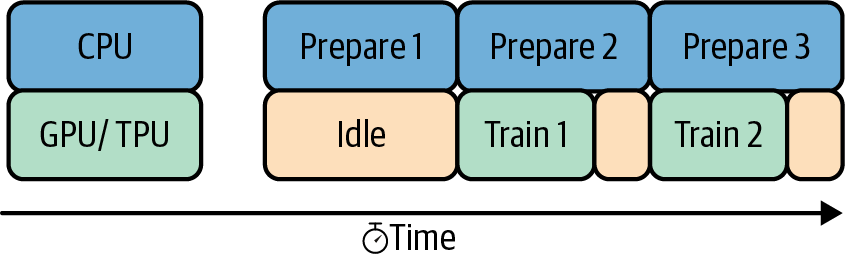

NUM_TRAINING_EXAMPLES=1000*1000STOP_POINT=14.3TOTAL_TRAINING_EXAMPLES=int(STOP_POINT*NUM_TRAINING_EXAMPLES)BATCH_SIZE=100NUM_CHECKPOINTS=15steps_per_epoch=(TOTAL_TRAINING_EXAMPLES//(BATCH_SIZE*NUM_CHECKPOINTS))cp_callback=tf.keras.callbacks.ModelCheckpoint(...)history=model.fit(trainds,validation_data=evalds,epochs=NUM_CHECKPOINTS,steps_per_epoch=steps_per_epoch,batch_size=BATCH_SIZE,callbacks=[cp_callback])

Figure 4-12. Defining a virtual epoch in terms of the desired number of steps between checkpoints.

When you get more data, first train it with the old settings, then increase the number of examples to reflect the new data, and finally change the STOP_POINT to reflect the number of times you have to traverse the data to attain convergence.

This is now safe even with hyperparameter tuning (discussed later in this chapter) and retains all the advantages of keeping the number of steps constant.

Design Pattern 13: Transfer Learning

In Transfer Learning, we take part of a previously trained model, freeze the weights, and incorporate these nontrainable layers into a new model that solves a similar problem, but on a smaller dataset.

Problem

Training custom ML models on unstructured data requires extremely large datasets, which are not always readily available. Consider the case of a model identifying whether an x-ray of an arm contains a broken bone. To achieve high accuracy, you’ll need hundreds of thousands of images, if not more. Before your model learns what a broken bone looks like, it needs to first learn to make sense of the pixels, edges, and shapes that are part of the images in your dataset. The same is true for models trained on text data. Let’s say we’re building a model that takes descriptions of patient symptoms and predicts the possible conditions associated with those symptoms. In addition to learning which words differentiate a cold from pneumonia, the model also needs to learn basic language semantics and how the sequence of words creates meaning. For example, the model would need to not only learn to detect the presence of the word fever, but that the sequence no fever carries a very different meaning than high fever.

To see just how much data is required to train high-accuracy models, we can look at ImageNet, a database of over 14 million labeled images. ImageNet is frequently used as a benchmark for evaluating machine learning frameworks on various hardware. As an example, the MLPerf benchmark suite uses ImageNet to compare the time it took for various ML frameworks running on different hardware to reach 75.9% classification accuracy. In the v0.7 MLPerf Training results, a TensorFlow model running on a Google TPU v3 took around 30 seconds to reach this target accuracy.2 With more training time, models can reach even higher accuracy on ImageNet. However, this is largely due to ImageNet’s size. Most organizations with specialized prediction problems don’t have nearly as much data available.

Because use cases like the image and text examples described above involve particularly specialized data domains, it’s also not possible to use a general-purpose model to successfully identify bone fractures or diagnose diseases. A model that is trained on ImageNet might be able to label an x-ray image as x-ray or medical imaging but is unlikely to be able to label it as a broken femur. Because such models are often trained on a wide variety of high-level label categories, we wouldn’t expect them to understand conditions present in the images that are specific to our dataset. To handle this, we need a solution that allows us to build a custom model using only the data we have available and with the labels that we care about.

Solution

With the Transfer Learning design pattern, we can take a model that has been trained on the same type of data for a similar task and apply it to a specialized task using our own custom data. By “same type of data,” we mean the same data modality—images, text, and so forth. Beyond just the broad category like images, it is also ideal to use a model that has been pre-trained on the same types of images. For example, use a model that has been pre-trained on photographs if you are going to use it for photograph classification and a model that has been pre-trained on remotely sensed imagery if you are going to use it to classify satellite images. By similar task, we’re referring to the problem being solved. To do transfer learning for image classification, for example, it is better to start with a model that has been trained for image classification, rather than object detection.

Continuing with the example, let’s say we’re building a binary classifier to determine whether an image of an x-ray contains a broken bone. We only have 200 images of each class: broken and not broken. This isn’t enough to train a high-quality model from scratch, but it is sufficient for transfer learning. To solve this with transfer learning, we’ll need to find a model that has already been trained on a large dataset to do image classification. We’ll then remove the last layer from that model, freeze the weights of that model, and continue training using our 400 x-ray images. We’d ideally find a model trained on a dataset with similar images to our x-rays, like images taken in a lab or another controlled condition. However, we can still utilize transfer learning if the datasets are different, so long as the prediction task is the same. In this case we’re doing image classification.

You can use transfer learning for many prediction tasks in addition to image classification, so long as there is an existing pre-trained model that matches the task you’d like to perform on your dataset. For example, transfer learning is also frequently applied in image object detection, image style transfer, image generation, text classification, machine translation, and more.

Note

Transfer learning works because it lets us stand on the shoulders of giants, utilizing models that have already been trained on extremely large, labeled datasets. We’re able to use transfer learning thanks to years of research and work others have put into creating these datasets for us, which has advanced the state-of-the-art in transfer learning. One example of such a dataset is the ImageNet project, started in 2006 by Fei-Fei Li and published in 2009. ImageNet3 has been essential to the development of transfer learning and paved the way for other large datasets like COCO and Open Images.

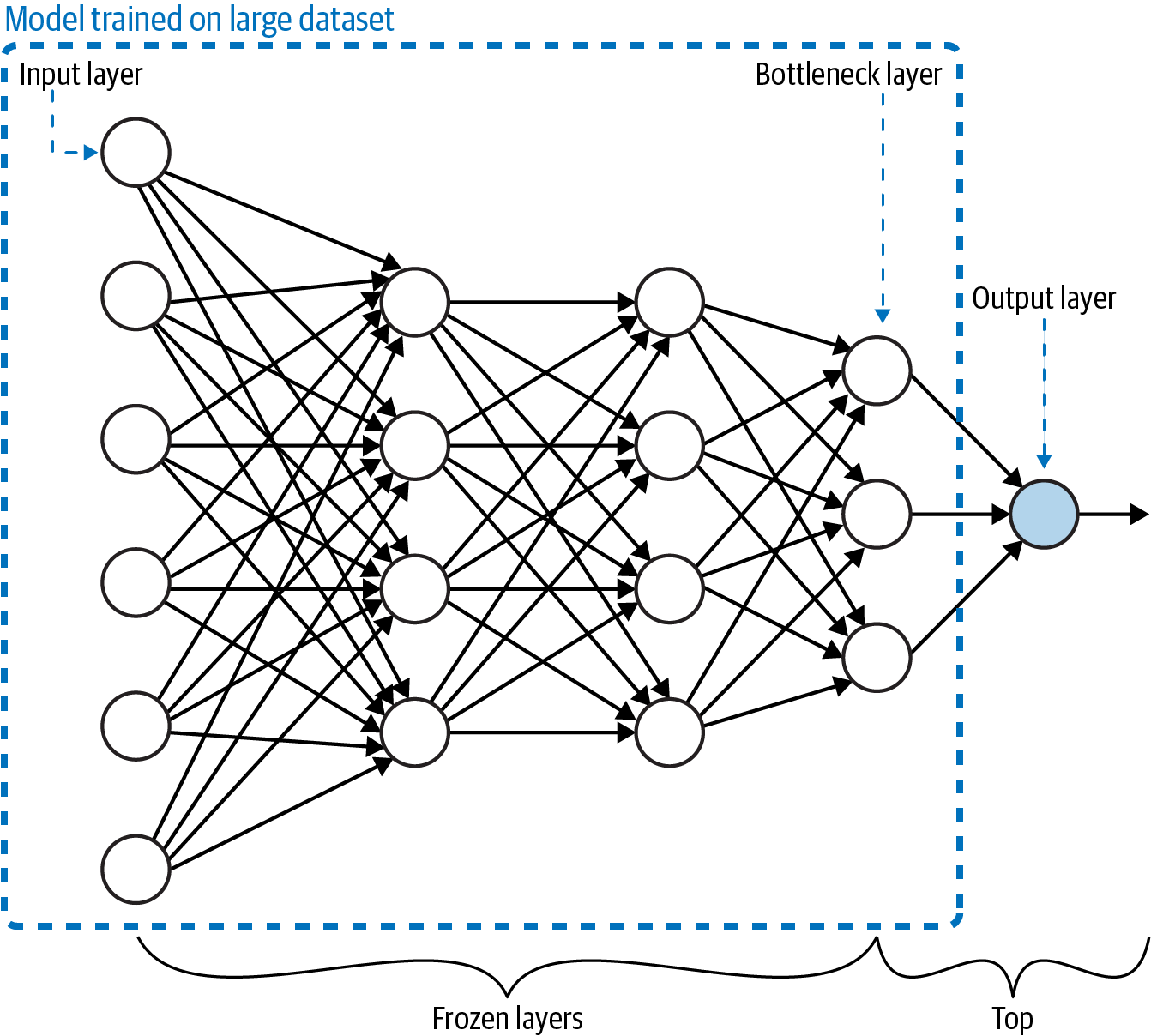

The idea behind transfer learning is that you can utilize the weights and layers from a model trained in the same domain as your prediction task. In most deep learning models, the final layer contains the classification label or output specific to your prediction task. With transfer learning, we remove this layer, freeze the model’s trained weights, and replace the final layer with the output for our specialized prediction task before continuing to train. We can see how this works in Figure 4-13.

Typically, the penultimate layer of the model (the layer before the model’s output layer) is chosen as the bottleneck layer. Next, we’ll explain the bottleneck layer, along with different ways to implement transfer learning in TensorFlow.

Figure 4-13. Transfer learning involves training a model on a large dataset. The “top” of the model (typically, just the output layer) is removed and the remaining layers have their weights frozen. The last layer of the remaining model is called the bottleneck layer.

Bottleneck layer

In relation to an entire model, the bottleneck layer represents the input (typically an image or text document) in the lowest-dimensionality space. More specifically, when we feed data into our model, the first layers see this data nearly in its original form. To see how this works, let’s continue with a medical imaging example, but this time we’ll build a model with a colorectal histology dataset to classify the histology images into one of eight categories.

To explore the model we are going to use for transfer learning, let’s load the VGG model architecture pre-trained on the ImageNet dataset:

vgg_model_withtop=tf.keras.applications.VGG19(include_top=True,weights='imagenet',)

Notice that we’ve set include_top=True, which means we’re loading the full VGG model, including the output layer. For ImageNet, the model classifies images into 1,000 different classes, so the output layer is a 1,000-element array. Let’s look at the output of model.summary() to understand which layer will be used as the bottleneck. For brevity, we’ve left out some of the middle layers here:

Model:"vgg19"_________________________________________________________________Layer(type)OutputShapeParam#=================================================================input_3(InputLayer)[(None,224,224,3)]0_________________________________________________________________block1_conv1(Conv2D)(None,224,224,64)1792...morelayershere..._________________________________________________________________block5_conv3(Conv2D)(None,14,14,512)2359808_________________________________________________________________block5_conv4(Conv2D)(None,14,14,512)2359808_________________________________________________________________block5_pool(MaxPooling2D)(None,7,7,512)0_________________________________________________________________flatten(Flatten)(None,25088)0_________________________________________________________________fc1(Dense)(None,4096)102764544_________________________________________________________________fc2(Dense)(None,4096)16781312_________________________________________________________________predictions(Dense)(None,1000)4097000=================================================================Totalparams:143,667,240Trainableparams:143,667,240Non-trainableparams:0_________________________________________________________________

As you can see, the VGG model accepts images as a 224×224×3-pixel array. This 128-element array is then passed through successive layers (each of which may change the dimensionality of the array) until it is flattened into a 25,088×1-dimensional array in the layer called flatten. Finally, it is fed into the output layer, which returns a 1,000-element array (for each class in ImageNet). In this example, we’ll choose the block5_pool layer as the bottleneck layer when we adapt this model to be trained on our medical histology images. The bottleneck layer produces a 7×7×512-dimensional array, which is a low-dimensional representation of the input image. It has retained enough of the information from the input image to be able to classify it. When we apply this model to our medical image classification task, we hope that the information distillation will be sufficient to successfully carry out classification on our dataset.

The histology dataset comes with images as (150,150,3) dimensional arrays. This 150×150×3 representation is the highest dimensionality. To use the VGG model with our image data, we can load it with the following:

vgg_model=tf.keras.applications.VGG19(include_top=False,weights='imagenet',input_shape=((150,150,3)))vgg_model.trainable=False

By setting include_top=False, we’re specifying that the last layer of VGG we want to load is the bottleneck layer. The input_shape we passed in matches the input shape of our histology images. A summary of the last few layers of this updated VGG model looks like the following:

block5_conv3 (Conv2D) (None, 9, 9, 512) 2359808_________________________________________________________________block5_conv4 (Conv2D) (None, 9, 9, 512) 2359808_________________________________________________________________block5_pool (MaxPooling2D) (None, 4, 4, 512) 0=================================================================Total params: 20,024,384Trainable params: 0Non-trainable params: 20,024,384_________________________________________________________________

The last layer is now our bottleneck layer. You may notice that the size of block5_pool is (4,4,512), whereas before, it was (7,7,512). This is because we instantiated VGG with an input_shape parameter to account for the size of the images in our dataset. It’s also worth noting that setting include_top=False is hardcoded to use block5_pool as the bottleneck layer, but if you want to customize this, you can load the full model and delete any additional layers you don’t want to use.

Before this model is ready to be trained, we’ll need to add a few layers on top, specific to our data and classification task. It’s also important to note that because we’ve set trainable=False, there are 0 trainable parameters in the current model.

Tip

As a general rule of thumb, the bottleneck layer is typically the last, lowest-dimensionality, flattened layer before a flattening operation.

Because they both represent features in reduced dimensionality, bottleneck layers are conceptually similar to embeddings. For example, in an autoencoder model with an encoder-decoder architecture, the bottleneck layer is an embedding. In this case, the bottleneck serves as the middle layer of the model, mapping the original input data to a lower-dimensionality representation, which the decoder (the second half of the network) uses to map the input back to its original, higher-dimensional representation. To see a diagram of the bottleneck layer in an autoencoder, refer to Figure 2-13 in Chapter 2.

An embedding layer is essentially a lookup table of weights, mapping a particular feature to some dimension in vector space. The main difference is that the weights in an embedding layer can be trained, whereas all the layers leading up to and including the bottleneck layer have their weights frozen. In other words, the entire network up to and including the bottleneck layer is nontrainable, and the weights in the layers after the bottleneck are the only trainable layers in the model.

Note

It’s also worth noting that pre-trained embeddings can be used in the Transfer Learning design pattern. When you build a model that includes an embedding layer, you can either utilize an existing (pre-trained) embedding lookup, or train your own embedding layer from scratch.

To summarize, transfer learning is a solution you can employ to solve a similar problem on a smaller dataset. Transfer learning always makes use of a bottleneck layer with nontrainable, frozen weights. Embeddings are a type of data representation. Ultimately, it comes down to purpose. If the purpose is to train a similar model, you would use transfer learning. Consequently, if the purpose is to represent an input image more concisely, you would use an embedding. The code might be exactly the same.

Implementing transfer learning

You can implement transfer learning in Keras using one of these two methods:

-

Loading a pre-trained model on your own, removing the layers after the bottleneck, and adding a new final layer with your own data and labels

-

Using a pre-trained TensorFlow Hub module as the foundation for your transfer learning task

Let’s start by looking at how to load and use a pre-trained model on your own. For this, we’ll build on the VGG model example we introduced earlier. Note that VGG is a model architecture, whereas ImageNet is the data it was trained on. Together, these make up the pre-trained model we’ll be using for transfer learning. Here, we’re using transfer learning to classify colorectal histology images. Whereas the original ImageNet dataset contains 1,000 labels, our resulting model will only return 8 possible classes that we’ll specify, as opposed to the thousands of labels present in ImageNet.

Note

Loading a pre-trained model and using it to get classifications on the original labels that model was trained on is not transfer learning. Transfer learning is going one step further, replacing the final layers of the model with your own prediction task.

The VGG model we’ve loaded will be our base model. We’ll need to add a few layers to flatten the output of our bottleneck layer and feed this flattened output into an 8-element softmax array:

global_avg_layer=tf.keras.layers.GlobalAveragePooling2D()feature_batch_avg=global_avg_layer(feature_batch)prediction_layer=tf.keras.layers.Dense(8,activation='softmax')prediction_batch=prediction_layer(feature_batch_avg)

Finally, we can use the Sequential, API to create our new transfer learning model as a stack of layers:

histology_model=keras.Sequential([vgg_model,global_avg_layer,prediction_layer])

Let’s take note of the output of model.summary() on our transfer learning model:

_________________________________________________________________Layer (type) Output Shape Param #=================================================================vgg19 (Model) (None, 4, 4, 512) 20024384_________________________________________________________________global_average_pooling2d (Gl (None, 512) 0_________________________________________________________________dense (Dense) (None, 8) 4104=================================================================Total params: 20,028,488Trainable params: 4,104Non-trainable params: 20,024,384_________________________________________________________________

The important piece here is that the only trainable parameters are the ones after our bottleneck layer. In this example, the bottleneck layer is the feature vectors from the VGG model. After compiling this model, we can train it using our dataset of histology images.

Pre-trained embeddings

While we can load a pre-trained model on our own, we can also implement transfer learning by making use of the many pre-trained models available in TF Hub, a library of pre-trained models (called modules). These modules span a variety of data domains and use cases, including classification, object detection, machine translation, and more. In TensorFlow, you can load these modules as a layer, then add your own classification layer on top.

To see how TF Hub works, let’s build a model that classifies movie reviews as either positive or negative. First, we’ll load a pre-trained embedding model trained on a large corpus of news articles. We can instantiate this model as a hub.KerasLayer:

hub_layer=hub.KerasLayer("https://tfhub.dev/google/tf2-preview/gnews-swivel-20dim/1",input_shape=[],dtype=tf.string,trainable=True)

We can stack additional layers on top of this to build our classifier:

model=keras.Sequential([hub_layer,keras.layers.Dense(32,activation='relu'),keras.layers.Dense(1,activation='sigmoid')])

We can now train this model, passing it our own text dataset as input. The resulting prediction will be a 1-element array indicating whether our model thinks the given text is positive or negative.

Why It Works

To understand why transfer learning works, let’s first look at an analogy. When children are learning their first language, they are exposed to many examples and corrected if they misidentify something. For example, the first time they learn to identify a cat, they’ll see their parents point to the cat and say the word cat, and this repetition strengthens pathways in their brain. Similarly, they are corrected when they say cat referring to an animal that is not a cat. When the child then learns how to identify a dog, they don’t need to start from scratch. They can use a similar recognition process to the one they used for the cat but apply it to a slightly different task. In this way, the child has built a foundation for learning. In addition to learning new things, they have also learned how to learn new things. Applying these learning methods to different domains is roughly how transfer learning works, too.

How does this play out in neural networks? In a typical convolutional neural network (CNN), the learning is hierarchical. The first layers learn to recognize edges and shapes present in an image. In the cat example, this might mean that the model can identify areas in an image where the edge of the cat’s body meets the background. The next layers in the model begin to understand groups of edges—perhaps that there are two edges that meet toward the top-left corner of the image. A CNN’s final layers can then piece together these groups of edges, developing an understanding of different features in the image. In the cat example, the model might be able to identify two triangular shapes toward the top of the image and two oval shapes below them. As humans, we know that these triangular shapes are ears and the oval shapes are eyes.

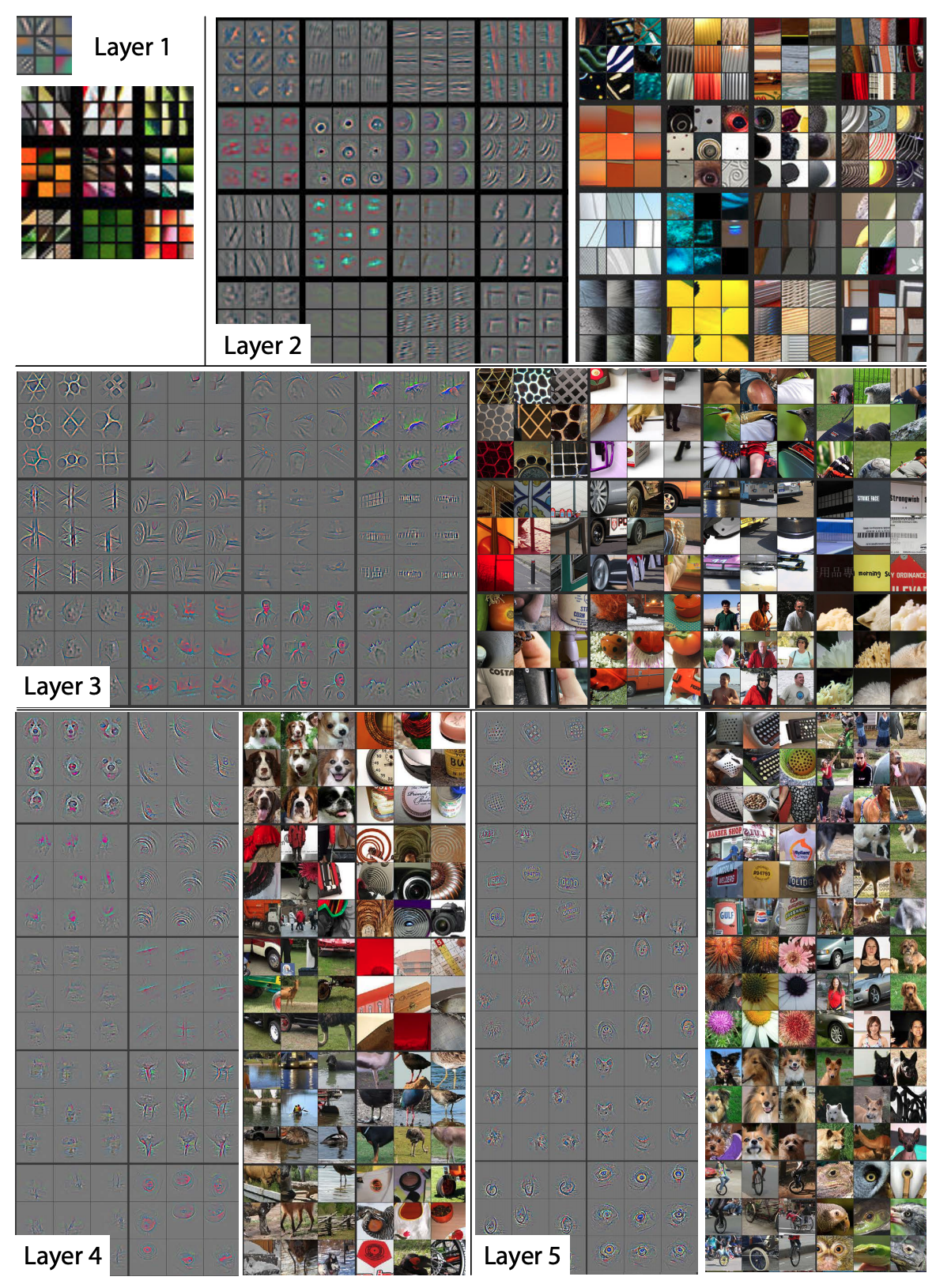

We can visualize this process in Figure 4-14, from research by Zeiler and Fergus on deconstructing CNNs to understand the different features that were activated throughout each layer of the model. For each layer in a five-layer CNN, this shows an image’s feature map for a given layer alongside the actual image. This lets us see how the model’s perception of an image progresses as it moves throughout the network. Layers 1 and 2 recognize only edges, layer 3 begins to recognize objects, and layers 4 and 5 can understand focal points within the entire image.

Remember, though, that to our model, these are simply groupings of pixel values. It doesn’t know that the triangular and oval shapes are ears and eyes—it only knows to associate specific groupings of features with the labels it has been trained on. In this way, the model’s process of learning what groupings of features make up a cat isn’t much different from learning the groups of features that are part of other objects, like a table, a mountain, or even a celebrity. To a model, these are all just different combinations of pixel values, edges, and shapes.

Trade-Offs and Alternatives

So far, we haven’t discussed methods of modifying the weights of our original model when implementing transfer learning. Here, we’ll examine two approaches for this: feature extraction and fine-tuning. We’ll also discuss why transfer learning is primarily focused on image and text models and look at the relationship between text sentence embeddings and transfer learning.

Fine-tuning versus feature extraction

Feature extraction describes an approach to transfer learning where you freeze the weights of all layers before the bottleneck layer and train the following layers on your own data and labels. Another option is instead fine-tuning the weights of the pre-trained model’s layers. With fine-tuning, you can either update the weights of each layer in the pre-trained model, or just a few of the layers right before the bottleneck. Training a transfer learning model using fine-tuning typically takes longer than feature extraction. You’ll notice in our text classification example above, we set trainable=True when initializing our TF Hub layer. This is an example of fine-tuning.

When fine-tuning, it’s common to leave the weights of the model’s initial layers frozen since these layers have been trained to recognize basic features that are often common across many types of images. To fine-tune a MobileNet model, for example, we’d set trainable=False only for a subset of layers in the model, rather than making every layer non-trainable. For example, to fine-tune after the 100th layer, we could run:

base_model=tf.keras.applications.MobileNetV2(input_shape=(160,160,3),include_top=False,weights='imagenet')forlayerinbase_model.layers[:100]:layer.trainable=False

One recommended approach to determining how many layers to freeze is known as progressive fine-tuning, and it involves iteratively unfreezing layers after every training run to find the ideal number of layers to fine-tune. This works best and is most efficient if you keep your learning rate low (0.001 is common) and the number of training iterations relatively small. To implement progressive fine-tuning, start by unfreezing only the last layer of your transferred model (the layer closest to the output) and calculate your model’s loss after training. Then, one by one, unfreeze more layers until you reach the Input layer or until the loss starts to plateau. Use this to inform the number of layers to fine-tune.

How should you determine whether to fine-tune or freeze all layers of your pre-trained model? Typically, when you’ve got a small dataset, it’s best to use the pre-trained model as a feature extractor rather than fine-tuning. If you’re retraining the weights of a model that was likely trained on thousands or millions of examples, fine-tuning can cause the updated model to overfit to your small dataset and lose the more general information learned from those millions of examples. Although it depends on your data and prediction task, when we say “small dataset” here, we’re referring to datasets with hundreds or a few thousand training examples.

Another factor to take into account when deciding whether to fine-tune is how similar your prediction task is to that of the original pre-trained model you’re using. When the prediction task is similar or a continuation of the previous training, as it was in our movie review sentiment analysis model, fine-tuning can produce higher-accuracy results. When the task is different or the datasets are significantly different, it’s best to freeze all the layers of the pre-trained model instead of fine-tuning them. Table 4-1 summarizes the key points.4

| Criterion | Feature extraction | Fine-tuning |

|---|---|---|

| How large is the dataset? | Small | Large |

| Is your prediction task the same as that of the pre-trained model? | Different tasks | Same task, or similar task with same class distribution of labels |

| Budget for training time and computational cost | Low | High |

In our text example, the pre-trained model was trained on a corpus of news text but our use case was sentiment analysis. Because these tasks are different, we should use the original model as a feature extractor rather than fine-tune it. An example of different prediction tasks in an image domain might be using our MobileNet model trained on ImageNet as a basis for doing transfer learning on a dataset of medical images. Although both tasks involve image classification, the nature of the images in each dataset are very different.

Focus on image and text models

You may have noticed that all of the examples in this section focused on image and text data. This is because transfer learning is primarily for cases where you can apply a similar task to the same data domain. Models trained with tabular data, however, cover a potentially infinite number of possible prediction tasks and data types. You could train a model on tabular data to predict how you should price tickets to your event, whether or not someone is likely to default on loan, your company’s revenue next quarter, the duration of a taxi trip, and so forth. The specific data for these tasks is also incredibly varied, with the ticket problem depending on information about artists and venues, the loan problem on personal income, and the taxi duration on urban traffic patterns. For these reasons, there are inherent challenges in transferring the learnings from one tabular model to another.

Although transfer learning is not yet as common on tabular data as it is for image and text domains, a new model architecture called TabNet presents novel research in this area. Most tabular models require significant feature engineering when compared with image and text models. TabNet employs a technique that first uses unsupervised learning to learn representations for tabular features, and then fine-tunes these learned representations to produce predictions. In this way, TabNet automates feature engineering for tabular models.

Embeddings of words versus sentences

In our discussion of text embeddings so far, we’ve referred mostly to word embeddings. Another type of text embedding is sentence embeddings. Where word embeddings represent individual words in a vector space, sentence embeddings represent entire sentences. Consequently, word embeddings are context agnostic. Let’s see how this plays out with the following sentence:

“I’ve left you fresh baked cookies on the left side of the kitchen counter.”

Notice that the word left appears twice in that sentence, first as a verb and then as an adjective. If we were to generate word embeddings for this sentence, we’d get a separate array for each word. With word embeddings, the array for both instances of the word left would be the same. Using sentence-level embeddings, however, we’d get a single vector to represent the entire sentence. There are several approaches for generating sentence embeddings—from averaging a sentence’s word embeddings to training a supervised learning model on a large corpus of text to generate the embeddings.

How does this relate to transfer learning? The latter method—training a supervised learning model to generate sentence-level embeddings—is actually a form of transfer learning. This is the approach used by Google’s Universal Sentence Encoder (available in TF Hub) and BERT. These methods differ from word embeddings in that they go beyond simply providing a weight lookup for individual words. Instead, they have been built by training a model on a large dataset of varied text to understand the meaning conveyed by sequences of words. In this way, they are designed to be transferred to different natural language tasks and can thus be used to build models that implement transfer learning.

Design Pattern 14: Distribution Strategy

In Distribution Strategy, the training loop is carried out at scale over multiple workers, often with caching, hardware acceleration, and parallelization.

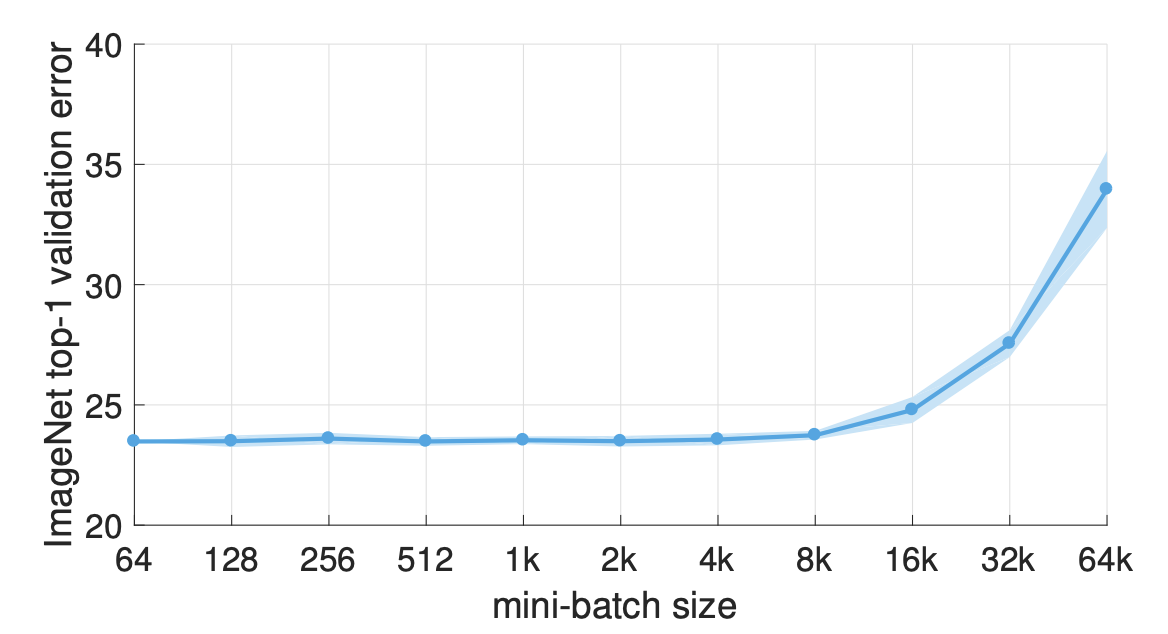

Problem

These days, it’s common for large neural networks to have millions of parameters and be trained on massive amounts of data. In fact, it’s been shown that increasing the scale of deep learning, with respect to the number of training examples, the number of model parameters, or both, drastically improves model performance. However, as the size of models and data increases, the computation and memory demands increase proportionally, making the time it takes to train these models one of the biggest problems of deep learning.

GPUs provide a substantial computational boost and bring the training time of modestly sized deep neural networks within reach. However, for very large models trained on massive amounts of data, individual GPUs aren’t enough to make the training time tractible. For example, at the time of writing, training ResNet-50 on the benchmark ImageNet dataset for 90 epochs on a single NVIDIA M40 GPU requires 1018 single precision operations and takes 14 days. As AI is being used more and more to solve problems within complex domains, and open source libraries like Tensorflow and PyTorch make building deep learning models more accessible, large neural networks comparable to ResNet-50 have become the norm.

This is a problem. If it takes two weeks to train your neural network, then you have to wait two weeks before you can iterate on new ideas or experiment with tweaking the settings. Furthermore, for some complex problems like medical imaging, autonomous driving, or language translation, it’s not always feasible to break the problem down into smaller components or work with only a subset of the data. It’s only with the full scale of the data that you can assess whether things work or not.

Training time translates quite literally to money. In the world of serverless machine learning, rather than buying your own expensive GPU, it is possible to submit training jobs via a cloud service where you are charged for training time. The cost of training a model, whether it is to pay for a GPU or to pay for a serverless training service, quickly adds up.

Is there a way to speed up the training of these large neural networks?

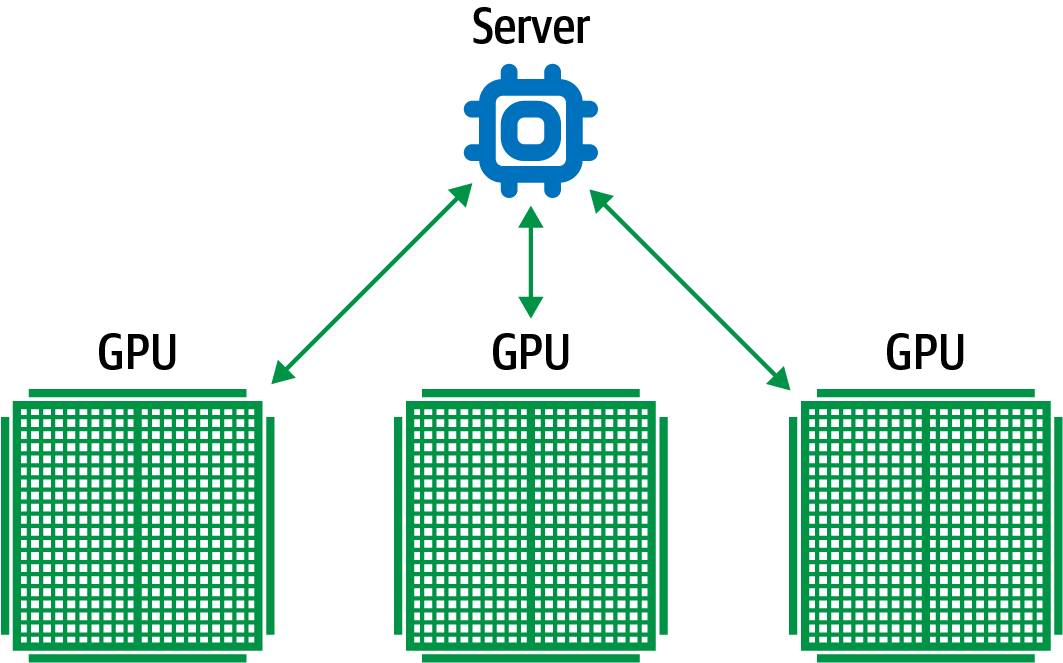

Solution

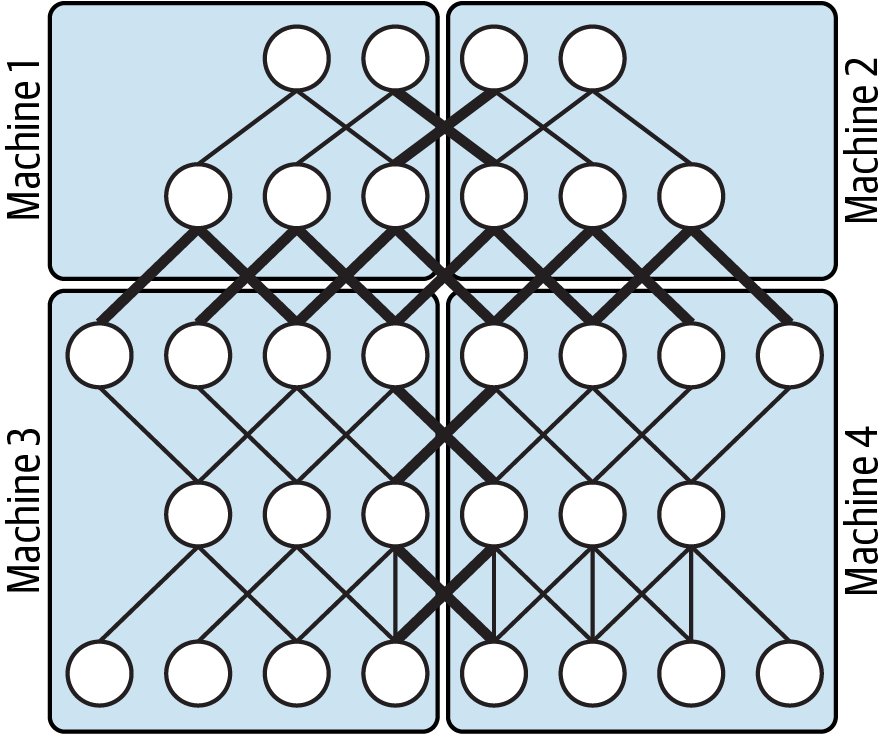

One way to accelerate training is through distribution strategies in the training loop. There are different distribution techniques, but the common idea is to split the effort of training the model across multiple machines. There are two ways this can be done: data parallelism and model parallelism. In data parallelism, computation is split across different machines and different workers train on different subsets of the training data. In model parallelism, the model is split and different workers carry out the computation for different parts of the model. In this section, we’ll focus on data parallelism and show implementations in TensorFlow using the tf.distribute.Strategy library. We’ll discuss model parallelism in “Trade-Offs and Alternatives”.

To implement data parallelism, there must be a method in place for different workers to compute gradients and share that information to make updates to the model parameters. This ensures that all workers are consistent and each gradient step works to train the model. Broadly speaking, data parallelism can be carried out either synchronously or asynchronously.

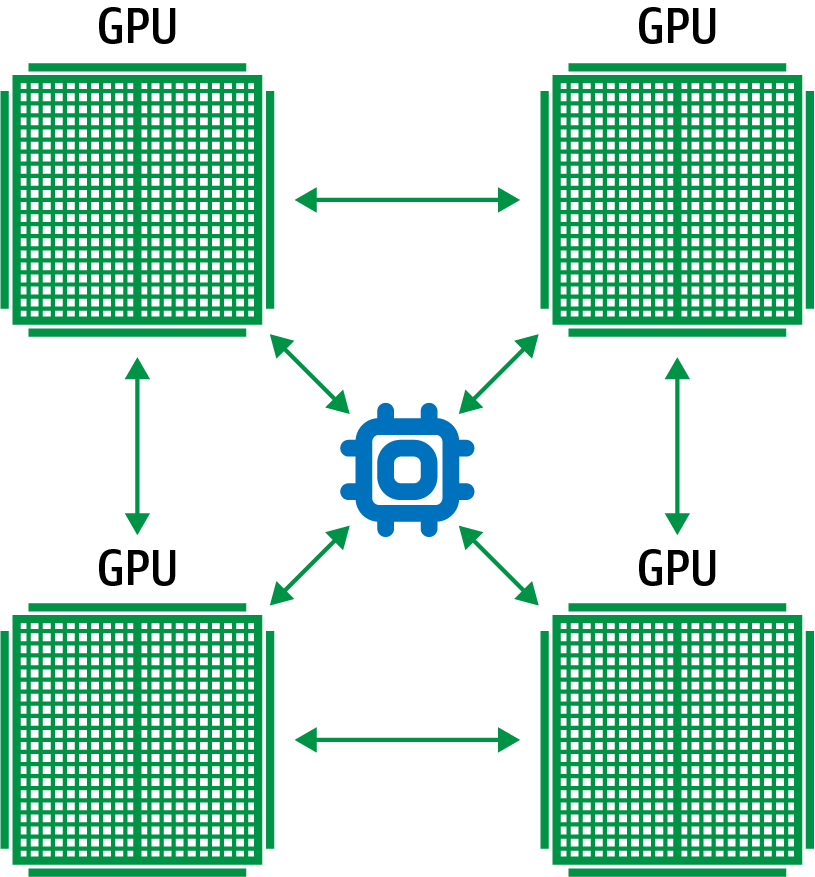

Synchronous training

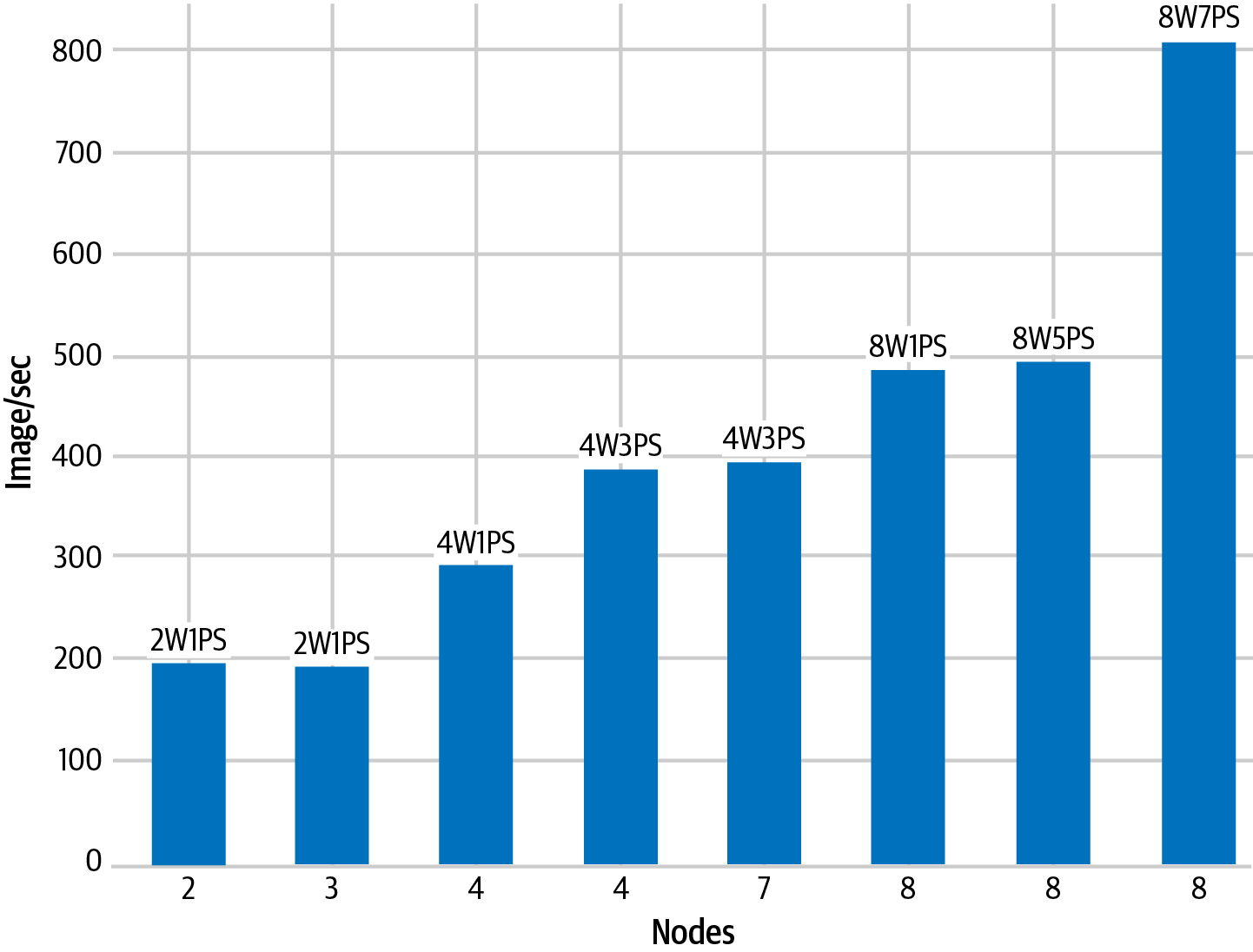

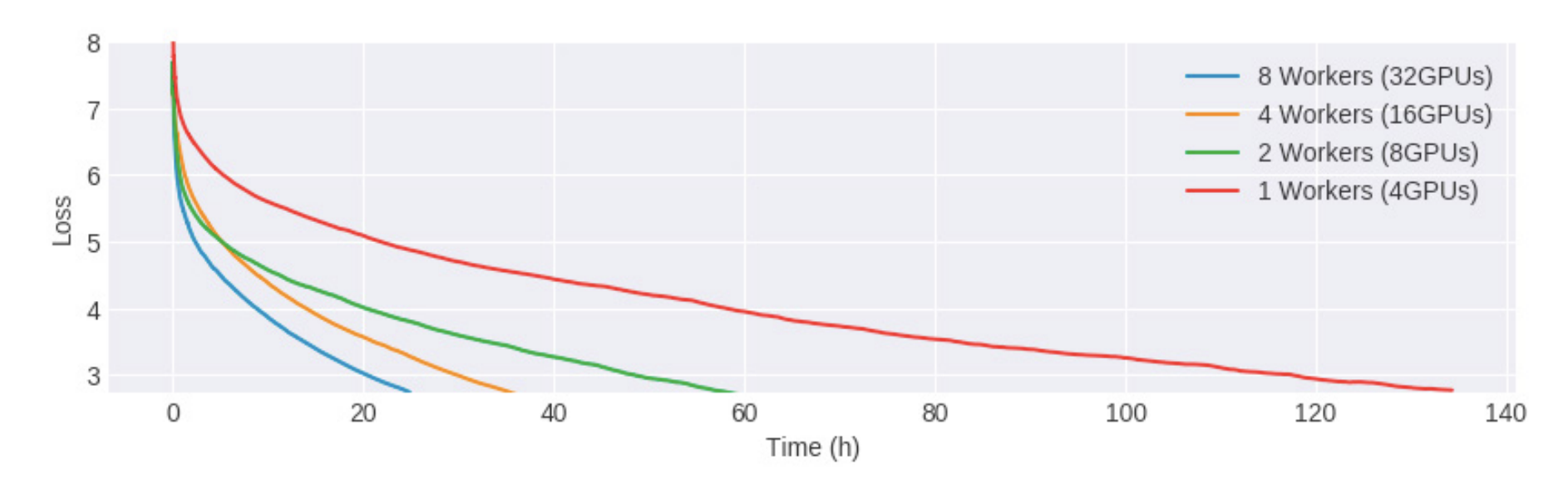

In synchronous training, the workers train on different slices of input data in parallel and the gradient values are aggregated at the end of each training step. This is performed via an all-reduce algorithm. This means that each worker, typically a GPU, has a copy of the model on device and, for a single stochastic gradient descent (SGD) step, a mini-batch of data is split among each of the separate workers. Each device performs a forward pass with their portion of the mini-batch and computes gradients for each parameter of the model. These locally computed gradients are then collected from each device and aggregated (for example, averaged) to produce a single gradient update for each parameter. A central server holds the most current copy of the model parameters and performs the gradient step according to the gradients received from the multiple workers. Once the model parameters are updated according to this aggregated gradient step, the new model is sent back to the workers along with another split of the next mini-batch, and the process repeats. Figure 4-15 shows a typical all-reduce architecture for synchronous data distribution.

As with any parallelism strategy, this introduces additional overhead to manage timing and communication between workers. Large models could cause I/O bottlenecks as data is passed from the CPU to the GPU during training, and slow networks could also cause delays.

In TensorFlow, tf.distribute.MirroredStrategy supports synchronous distributed training across multiple GPUs on the same machine. Each model parameter is mirrored across all workers and stored as a single conceptual variable called MirroredVariable. During the all-reduce step, all gradient tensors are made available on each device. This helps to significantly reduce the overhead of synchronization. There are also various other implementations for the all-reduce algorithm available, many of which use NVIDIA NCCL.

Figure 4-15. In synchronous training, each worker holds a copy of the model and computes gradients using a slice of the training data mini-batch.

To implement this mirrored strategy in Keras, you first create an instance of the mirrored distribution strategy, then move the creation and compiling of the model inside the scope of that instance. The following code shows how to use MirroredStrategy when training a three-layer neural network: