Chapter 1. Quick-Start Guide

In this chapter, you’ll plunge in and develop your first application from scratch. The goal is to write a HelloArrow program that draws an arrow and rotates it in response to an orientation change.

You’ll be using the OpenGL ES API to render the arrow, but OpenGL is only one of many graphics technologies supported on the iPhone. At first, it can be confusing which of these technologies is most appropriate for your requirements. It’s also not always obvious which technologies are iPhone-specific and which cross over into general Mac OS X development.

Apple neatly organizes all of the iPhone’s public APIs into four layers: Cocoa Touch, Media Services, Core Services, and Core OS. Mac OS X is a bit more sprawling, but it too can be roughly organized into four layers, as shown in Figure 1-1.

At the very bottom layer, Mac OS X and the iPhone share their kernel architecture and core operating system; these shared components are collectively known as Darwin.

Despite the similarities between the two platforms, they diverge quite a bit in their handling of OpenGL. Figure 1-1 includes some OpenGL-related classes, shown in bold. The NSOpenGLView class in Mac OS X does not exist on the iPhone, and the iPhone’s EAGLContext and CAEAGLLayer classes are absent on Mac OS X. The OpenGL API itself is also quite different in the two platforms, because Mac OS X supports full-blown OpenGL while the iPhone relies on the more svelte OpenGL ES.

The iPhone graphics technologies include the following:

- Quartz 2D rendering engine

Vector-based graphics library that supports alpha blending, layers, and anti-aliasing. This is also available on Mac OS X. Applications that leverage Quartz technology must reference a framework (Apple’s term for a bundle of resources and libraries) known as Quartz Core.

- Core Graphics

Vanilla C interface to Quartz. This is also available on Mac OS X.

- UIKit

Native windowing framework for iPhone. Among other things, UIKit wraps Quartz primitives into Objective-C classes. This has a Mac OS X counterpart called AppKit, which is a component of Cocoa.

- Cocoa Touch

Conceptual layer in the iPhone programming stack that contains UIKit along with a few other frameworks.

- Core Animation

- OpenGL ES

Low-level hardware-accelerated C API for rendering 2D or 3D graphics.

- EAGL

Tiny glue API between OpenGL ES and UIKit. Some EAGL classes (such as CAEAGLLayer) are defined in the Quartz Core framework, while others (such as EAGLContext) are defined in the OpenGL ES framework.

This book chiefly deals with OpenGL ES, the only technology in the previous list that isn’t Apple-specific. The OpenGL ES specification is controlled by a consortium of companies called the Khronos Group. Different implementations of OpenGL ES all support the same core API, making it easy to write portable code. Vendors can pick and choose from a formally defined set of extensions to the API, and the iPhone supports a rich set of these extensions. We’ll cover many of these extensions throughout this book.

Transitioning to Apple Technology

Yes, you do need a Mac to develop applications for the iPhone App Store! Developers with a PC background should quell their fear; my own experience was that the PC-to-Apple transition was quite painless, aside from some initial frustration with a different keyboard.

Xcode serves as Apple’s preferred development environment for Mac OS X. If you are new to Xcode, it might initially strike you as resembling an email client more than an IDE. This layout is actually quite intuitive; after learning the keyboard shortcuts, I found Xcode to be a productive environment. It’s also fun to work with. For example, after typing in a closing delimiter such as ), the corresponding ( momentarily glows and seems to push itself out from the screen. This effect is pleasant and subtle; the only thing missing is a kitten-purr sound effect. Maybe Apple will add that to the next version of Xcode.

Objective-C

Now we come to the elephant in the room. At some point, you’ve probably heard that Objective-C is a requirement for iPhone development. You can actually use pure C or C++ for much of your application logic, if it does not make extensive use of UIKit. This is especially true for OpenGL development because it is a C API. Most of this book uses C++; Objective-C is used only for the bridge code between the iPhone operating system and OpenGL ES.

The origin of Apple’s usage of Objective-C lies with NeXT, which was another Steve Jobs company whose technology was ahead of its time in many ways, perhaps too far ahead. NeXT failed to survive on its own, and Apple purchased it in 1997. To this day, you can still find the NS prefix in many of Apple’s APIs, including those for the iPhone.

Some would say that Objective-C is not as complex or feature-rich as C++, which isn’t necessarily a bad thing. In many cases, Objective-C is the right tool for the right job. It’s a fairly simple superset of C, making it quite easy to learn.

However, for 3D graphics, I find that certain C++ features are indispensable. Operator overloading makes it possible to perform vector math in a syntactically natural way. Templates allow the reuse of vector and matrix types using a variety of underlying numerical representations. Most importantly, C++ is widely used on many platforms, and in many ways, it’s the lingua franca of game developers.
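To make the point about operator overloading and templates concrete, here is a minimal sketch of a 2D vector type. The names Vector2, vec2, ivec2, and Midpoint are illustrative assumptions rather than types quoted from the sample code.

```cpp
#include <cassert>

// Minimal 2D vector, templated on its component type.
// (Hypothetical sketch; names are assumptions for illustration.)
template <typename T>
struct Vector2 {
    T x;
    T y;

    Vector2(T x_, T y_) : x(x_), y(y_) {}

    // Component-wise addition and subtraction.
    Vector2 operator+(const Vector2& v) const { return Vector2(x + v.x, y + v.y); }
    Vector2 operator-(const Vector2& v) const { return Vector2(x - v.x, y - v.y); }

    // Uniform scaling by a scalar.
    Vector2 operator*(T s) const { return Vector2(x * s, y * s); }

    // Uniform division; dividing by zero is treated as a programming error.
    Vector2 operator/(T s) const
    {
        assert(s != T(0));
        return Vector2(x / s, y / s);
    }

    // Dot product of two vectors.
    T Dot(const Vector2& v) const { return x * v.x + y * v.y; }
};

// The same template serves several numerical representations.
typedef Vector2<float> vec2;
typedef Vector2<int> ivec2;

// With overloaded operators, the code reads like the math it implements.
inline vec2 Midpoint(const vec2& a, const vec2& b)
{
    return (a + b) / 2.0f;
}
```

The expression (a + b) / 2.0f would otherwise require nested function calls; the template parameter lets the same source produce floating-point and integer variants.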

A Brief History of OpenGL ES

In 1982, a Stanford University professor named Jim Clark started one of the world’s first computer graphics companies: Silicon Graphics Computer Systems, later known as SGI. SGI engineers needed a standard way of specifying common 3D transformations and operations, so they designed a proprietary API called IrisGL. In the early 1990s, SGI reworked IrisGL and released it to the public as an industry standard, and OpenGL was born.

Over the years, graphics technology advanced even more rapidly than Moore’s law could have predicted.[1] OpenGL went through many revisions while largely preserving backward compatibility. Many developers believed that the API became bloated. When the mobile phone revolution took off, the need for a trimmed-down version of OpenGL became more apparent than ever. The Khronos Group announced OpenGL for Embedded Systems (OpenGL ES) at the annual SIGGRAPH conference in 2003.

OpenGL ES rapidly gained popularity and today is used on many platforms besides the iPhone, including Android, Symbian, and PlayStation 3.

All Apple devices support at least version 1.1 of the OpenGL ES API, which added several powerful features to the core specification, including vertex buffer objects and mandatory multitexture support, both of which you’ll learn about in this book.

In March 2007, the Khronos Group released the OpenGL ES 2.0 specification, which entailed a major break in backward compatibility by ripping out many of the fixed-function features and replacing them with a shading language. This new model for controlling graphics simplified the API and shifted greater control into the hands of developers. Many developers (including myself) find the ES 2.0 programming model to be more elegant than the ES 1.1 model. But in the end, the two APIs simply represent two different approaches to the same problem. With ES 2.0, an application developer needs to do much more work just to write a simple Hello World application. The 1.x flavor of OpenGL ES will probably continue to be used for some time, because of its low implementation burden.

Choosing the Appropriate Version of OpenGL ES

Apple’s newer handheld devices, such as the iPhone 3GS and iPad, have graphics hardware that supports both ES 1.1 and 2.0; these devices are said to have a programmable graphics pipeline because the graphics processor executes instructions rather than performing fixed mathematical operations. Older devices like the first-generation iPod touch, iPhone, and iPhone 3G are said to have a fixed-function graphics pipeline because they support only ES 1.1.

Before writing your first line of code, be sure to have a good handle on your graphics requirements. It’s tempting to use the latest and greatest API, but keep in mind that there are many 1.1 devices out there, so this could open up a much broader market for your application. It can also be less work to write an ES 1.1 application, if your graphical requirements are fairly modest.

Of course, many advanced effects are possible only in ES 2.0—and, as I mentioned, I believe it to be a more elegant programming model.

To summarize, you can choose from among four possibilities for your application:

Choose wisely! We’ll be using the third choice for many of the samples in this book, including the HelloArrow sample presented in this chapter.

Getting Started

Assuming you already have a Mac, the first step is to head over to Apple’s iPhone developer site and download the SDK. With only the free SDK in hand, you have the tools at your disposal to develop complex applications and even test them on the iPhone Simulator.

The iPhone Simulator cannot emulate certain features such as the accelerometer, nor does it perfectly reflect the iPhone’s implementation of OpenGL ES. For example, a physical iPhone cannot render anti-aliased lines using OpenGL’s smooth lines feature, but the simulator can. Conversely, there may be extensions that a physical iPhone supports that the simulator does not. (Incidentally, we’ll discuss how to work around the anti-aliasing limitation later in this book.)

Having said all that, you do not need to own an iPhone to use this book. I’ve ensured that every code sample either runs against the simulator or at least fails gracefully in the rare case where it leverages a feature not supported on the simulator.

If you do own an iPhone and are willing to cough up a reasonable fee ($100 at the time of this writing), you can join Apple’s iPhone Developer Program to enable deployment to a physical iPhone. When I did this, it was not a painful process, and Apple granted me approval almost immediately. If the approval process takes longer in your case, I suggest forging ahead with the simulator while you wait. I actually use the simulator for most of my day-to-day development anyway, since it provides a much faster debug-build-run turnaround than deploying to my device.

The remainder of this chapter is written in a tutorial style. Be aware that some of the steps may vary slightly from what’s written, depending on the versions of the tools that you’re using. These minor deviations most likely pertain to specific actions within the Xcode UI; for example, a menu might be renamed or shifted in future versions. However, the actual sample code is relatively future-proof.

Installing the iPhone SDK

You can download the iPhone SDK from here:

http://developer.apple.com/iphone/

It’s packaged as a .dmg file, Apple’s standard disk image format. After you download it, it should automatically open in a Finder window—if it doesn’t, you can find its disk icon on the desktop and open it from there. The contents of the disk image usually consist of an “about” PDF, a Packages subfolder, and an installation package, whose icon resembles a cardboard box. Open the installer and follow the steps. When confirming the subset of components to install, simply accept the defaults. When you’re done, you can “eject” the disk image to remove it from the desktop.

As an Apple developer, you’ll find that Xcode becomes your home base. I recommend dragging it to your Dock at the bottom of the screen. You’ll find Xcode in /Developer/Applications/Xcode.

Tip

If you’re coming from a PC background, Mac’s windowing system may seem difficult to organize at first. I highly recommend the Exposé and Spaces desktop managers that are built into Mac OS X. Exposé lets you switch between windows using an intuitive “spread-out” view. Spaces can be used to organize your windows into multiple virtual desktops. I’ve used several virtual desktop managers on Windows, and in my opinion Spaces beats them all hands down.

Building the OpenGL Template Application with Xcode

When running Xcode for the first time, it presents you with a Welcome to Xcode dialog. Click the Create a new Xcode project button. (If you have the welcome dialog turned off, go to File→New Project.) Now you’ll be presented with the dialog shown in Figure 1-2, consisting of a collection of templates. The template we’re interested in is OpenGL ES Application, under the iPhone OS heading. It’s nothing fancy, but it is a fully functional OpenGL application and serves as a decent starting point.

In the next dialog, choose a goofy name for your application, and then you’ll finally see Xcode’s main window. Build and run the application by selecting Build and Run from the Build menu or by pressing ⌘-Return. When the build finishes, you should see the iPhone Simulator pop up with a moving square in the middle, as in Figure 1-3. When you’re done gawking at this amazing application, press ⌘-Q to quit.

Deploying to Your Real iPhone

This is not required for development, but if you want to deploy your application to a physical device, you should sign up for Apple’s iPhone Developer Program. This enables you to provision your iPhone for developer builds, in addition to the usual software you get from the App Store. Provisioning is a somewhat laborious process, but thankfully it needs to be done only once per device. Apple has now made it reasonably straightforward by providing a Provisioning Assistant applet that walks you through the process. You’ll find this applet after logging into the iPhone Dev Center and entering the iPhone Developer Program Portal.

When your iPhone has been provisioned properly, you should be able to see it in Xcode’s Organizer window (Control-⌘-O). Open up the Provisioning Profiles tree node in the left pane, and make sure your device is listed.

Now you can go back to Xcode’s main window, open the Overview combo box in the upper-left corner, and choose the latest SDK that has the Device prefix. The next time you build and run (⌘-Return), the moving square should appear on your iPhone.

HelloArrow with Fixed Function

In the previous section, you learned your way around the development environment with Apple’s boilerplate OpenGL application, but to get a good understanding of the fundamentals, you need to start from scratch. This section of the book builds a simple application from the ground up using OpenGL ES 1.1. The 1.x track of OpenGL ES is sometimes called fixed-function to distinguish it from the OpenGL ES 2.0 track, which relies on shaders. We’ll learn how to modify the sample to use shaders later in the chapter.

Let’s come up with a variation of the classic Hello World in a way that fits well with the theme of this book. As you’ll learn later, most of what gets rendered in OpenGL can be reduced to triangles. We can use two overlapping triangles to draw a simple arrow shape, as shown in Figure 1-4. Any resemblance to the Star Trek logo is purely coincidental.
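As a rough illustration of the two-triangle idea, the arrow could be described with six vertices: three for a large colored triangle and three for a smaller triangle that shares its base and is drawn in the background color, carving out the notch. The Vertex layout, the coordinates, the colors, and the GetArrowVertex helper below are all assumptions made for this sketch.

```cpp
#include <cassert>
#include <cstddef>

// One vertex: a 2D position and an RGBA color (hypothetical layout).
struct Vertex {
    float Position[2];
    float Color[4];
};

// Two overlapping triangles. The first three vertices form the large
// yellow triangle; the last three form a smaller gray triangle on the
// same base, which hides the bottom of the first and leaves an arrow.
// Coordinates and colors are illustrative assumptions.
const Vertex ArrowVertices[] = {
    {{-0.5f, -0.866f}, {1.0f, 1.0f, 0.5f, 1.0f}},
    {{ 0.5f, -0.866f}, {1.0f, 1.0f, 0.5f, 1.0f}},
    {{ 0.0f,  1.0f  }, {1.0f, 1.0f, 0.5f, 1.0f}},
    {{-0.5f, -0.866f}, {0.5f, 0.5f, 0.5f, 1.0f}},
    {{ 0.5f, -0.866f}, {0.5f, 0.5f, 0.5f, 1.0f}},
    {{ 0.0f, -0.4f  }, {0.5f, 0.5f, 0.5f, 1.0f}},
};

const std::size_t ArrowVertexCount =
    sizeof(ArrowVertices) / sizeof(ArrowVertices[0]);

// Every three consecutive vertices describe one triangle.
const std::size_t ArrowTriangleCount = ArrowVertexCount / 3;

// Bounds-checked access to a vertex of the arrow.
inline const Vertex& GetArrowVertex(std::size_t index)
{
    assert(index < ArrowVertexCount);
    return ArrowVertices[index];
}
```

The trick only works if the second triangle matches the background color, so a real program would need to keep the two in sync.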

To add an interesting twist, the program will make the arrow stay upright when the user changes the orientation of his iPhone.
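One way to picture the "stay upright" behavior is as a mapping from device orientation to a counter-rotation angle. The enum below is a hypothetical stand-in for whatever orientation values the operating system reports, and the angle assigned to each landscape orientation depends on the rotation convention you choose, so treat the specific numbers as assumptions.

```cpp
#include <cassert>

// Hypothetical stand-in for the orientations reported by the device.
enum DeviceOrientation {
    DeviceOrientationPortrait,
    DeviceOrientationPortraitUpsideDown,
    DeviceOrientationLandscapeLeft,
    DeviceOrientationLandscapeRight
};

// Returns the angle, in degrees, by which the arrow is rotated so that it
// counteracts the rotation of the device. Which landscape orientation maps
// to 90 and which to 270 is an assumption of this sketch.
inline float RotationAngleFor(DeviceOrientation orientation)
{
    switch (orientation) {
        case DeviceOrientationPortrait:           return 0.0f;
        case DeviceOrientationLandscapeRight:     return 90.0f;
        case DeviceOrientationPortraitUpsideDown: return 180.0f;
        case DeviceOrientationLandscapeLeft:      return 270.0f;
    }
    assert(false && "unknown orientation");
    return 0.0f;
}
```

Keeping this mapping in plain C++ means the Objective-C glue only has to translate the system's orientation value into the enum and pass it along.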

Layering Your 3D Application

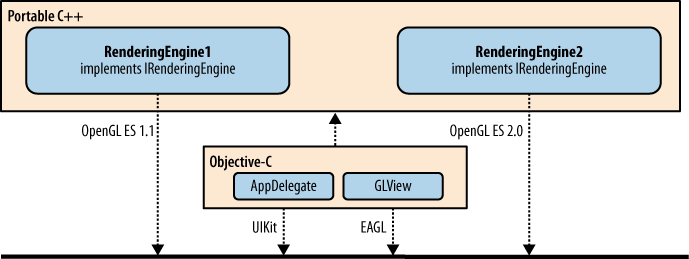

If you love Objective-C, then by all means, use it everywhere you can. This book supports cross-platform code reuse, so we leverage Objective-C only when necessary. Figure 1-5 depicts a couple ways of organizing your application code such that the guts of the program are written in C++ (or vanilla C), while the iPhone-specific glue is written in Objective-C. The variation on the right separates the application engine (also known as game logic) from the rendering engine. Some of the more complex samples in this book take this approach.

The key to either approach depicted in Figure 1-6 is designing a robust interface to the rendering engine and ensuring that any platform can use it. The sample code in this book uses the name IRenderingEngine for this interface, but you can call it what you want.

The IRenderingEngine interface also allows you to build multiple rendering engines into your application, as shown in Figure 1-7. This facilitates the “Use ES 2.0 if supported, otherwise fall back” scenario mentioned in Choosing the Appropriate Version of OpenGL ES. We’ll take this approach for HelloArrow.
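The fallback scenario can be sketched in portable C++ as an abstract interface with two implementations and a small factory. Only the names IRenderingEngine, RenderingEngine1, and RenderingEngine2 come from the text; the methods, the CreateRenderer function, and its supportsES2 flag are assumptions, and the engine bodies are stubs that make no OpenGL calls.

```cpp
#include <cassert>
#include <string>

// Abstract interface that the platform-specific glue talks to.
// The method set here is hypothetical.
struct IRenderingEngine {
    virtual ~IRenderingEngine() {}
    virtual void Initialize(int width, int height) = 0;
    virtual void Render() const = 0;
    virtual std::string Name() const = 0;
};

// Stub for the fixed-function (ES 1.1) engine.
class RenderingEngine1 : public IRenderingEngine {
public:
    RenderingEngine1() : m_width(0), m_height(0) {}
    void Initialize(int width, int height)
    {
        assert(width > 0 && height > 0);
        m_width = width;
        m_height = height;
    }
    void Render() const { /* ES 1.1 drawing calls would go here */ }
    std::string Name() const { return "ES 1.1"; }
private:
    int m_width;
    int m_height;
};

// Stub for the shader-based (ES 2.0) engine.
class RenderingEngine2 : public IRenderingEngine {
public:
    RenderingEngine2() : m_width(0), m_height(0) {}
    void Initialize(int width, int height)
    {
        assert(width > 0 && height > 0);
        m_width = width;
        m_height = height;
    }
    void Render() const { /* ES 2.0 drawing calls would go here */ }
    std::string Name() const { return "ES 2.0"; }
private:
    int m_width;
    int m_height;
};

// Prefer ES 2.0 when the device supports it; otherwise fall back to ES 1.1.
// The caller owns the returned object and must delete it.
inline IRenderingEngine* CreateRenderer(bool supportsES2)
{
    if (supportsES2)
        return new RenderingEngine2();
    return new RenderingEngine1();
}
```

On the device, the supportsES2 flag would presumably be derived by the Objective-C glue, for instance by checking whether an ES 2.0 context can be created. Everything past the factory call depends only on the interface.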

You’ll learn more about the pieces in Figure 1-7 as we walk through the code to HelloArrow. To summarize, you’ll be writing three classes:

- RenderingEngine1 and RenderingEngine2 (portable C++)

These classes are where most of the work takes place; all calls to OpenGL ES are made from here. RenderingEngine1 uses ES 1.1, while RenderingEngine2 uses ES 2.0.

- HelloArrowAppDelegate (Objective-C)

Small Objective-C class that derives from NSObject and adopts the UIApplicationDelegate protocol. (“Adopting a protocol” in Objective-C is somewhat analogous to “implementing an interface” in languages such as Java or C#.) This does not use OpenGL or EAGL; it simply initializes the GLView object and releases memory when the application closes.

- GLView (Objective-C)

Derives from the standard UIView class and uses EAGL to instance a valid rendering surface for OpenGL.

Starting from Scratch

Launch Xcode and start with the simplest project template by going to File→New Project and selecting Window-Based Application from the list of iPhone OS application templates. Name it HelloArrow.

Xcode comes bundled with an application called Interface Builder, which is Apple’s interactive designer for building interfaces with UIKit (and AppKit on Mac OS X). I don’t attempt to cover UIKit because most 3D applications do not make extensive use of it. For best performance, Apple advises against mixing UIKit with OpenGL.

Note

For simple 3D applications that aren’t too demanding, it probably won’t hurt you to add some UIKit controls to your OpenGL view. We cover this briefly in Mixing OpenGL ES and UIKit.

Linking in the OpenGL and Quartz Libraries

In the world of Apple programming, you can think of a framework as being similar to a library, but technically it’s a bundle of resources. A bundle is a special type of folder that acts like a single file, and it’s quite common in Mac OS X. For example, applications are usually deployed as bundles—open the action menu on nearly any icon in your Applications folder, and you’ll see an option for Show Package Contents, which allows you to get past the façade.

You need to add some framework references to your Xcode project. Pull down the action menu for the Frameworks folder. This can be done by selecting the folder and clicking the Action icon or by right-clicking or Control-clicking the folder. Next choose Add→Existing Frameworks. Select OpenGLES.framework, and click the Add button. You may see a dialog after this; if so, simply accept its defaults. Now, repeat this procedure with QuartzCore.framework.

Note

Why do we need Quartz if we’re writing an OpenGL ES application? The answer is that Quartz owns the layer object that gets presented to the screen, even though we’re rendering with OpenGL. The layer object is an instance of CAEAGLLayer, which is a subclass of CALayer; these classes are defined in the Quartz Core framework.

Subclassing UIView

The abstract UIView class controls a rectangular area of the screen, handles user events, and sometimes serves as a container for child views. Almost all standard controls such as buttons, sliders, and text fields are descendants of UIView. We tend to avoid using these controls in this book; for most of the sample code, the UI requirements are so modest that OpenGL itself can be used to render simple buttons and various widgets.

For our HelloArrow sample, we do need to define a single UIView subclass, since all rendering on the iPhone must take place within a view. Select the Classes folder in Xcode, click the Action icon in the toolbar, and select Add→New file. Under the Cocoa Touch Class category, select the Objective-C class template, and choose UIView in the Subclass of menu. In the next dialog, name it GLView.mm, and leave the box checked to ensure that the corresponding header gets generated. The .mm extension indicates that this file can support C++ in addition to Objective-C. Open GLView.h. You should see something like this:

    #import <UIKit/UIKit.h>

    @interface GLView : UIView {
    }
    @end

For C/C++ veterans, this syntax can be a little jarring; just wait until you see the syntax for methods! Fear not, it’s easy to become accustomed to.

#import is almost the same thing as #include but automatically ensures that the header file does not get expanded twice within the same source file. This is similar to the #pragma once feature found in many C/C++ compilers.

Keywords specific to Objective-C stand out because of the @ prefix. The @interface keyword marks the beginning of a class declaration; the @end keyword marks the end of a class declaration. A single source file may contain several class declarations and therefore can have several @interface blocks.

As you probably already guessed, the previous code snippet simply declares an empty class called GLView that derives from UIView. What’s less obvious is that data fields will go inside the curly braces, while method declarations will go between the ending curly brace and the @end, like this:

    #import <UIKit/UIKit.h>

    @interface GLView : UIView {
        // Protected fields go here...
    }

    // Public methods go here...

    @end

By default, data fields have protected accessibility, but you can make them private using the @private keyword. Let’s march onward and fill in the pieces shown in bold in Example 1-1. We’re also adding some new #imports for OpenGL-related stuff.

The m_context field is a pointer to the EAGL object that manages our OpenGL context. EAGL is a small Apple-specific API that links the iPhone operating system with OpenGL.

Note

Every time you modify API state through an OpenGL function call, you do so within a context. For a given thread running on your system, only one context can be current at any time. With the iPhone, you’ll rarely need more than one context for your application. Because of the limited resources on mobile devices, I don’t recommend using multiple contexts.
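The "one current context per thread" rule can be modeled with a thread-local pointer. The following is a toy model only: Context, SetCurrentContext, CurrentContext, and ClearColor are hypothetical names, not EAGL or OpenGL functions. It shows why OpenGL-style calls take no context argument, since state lands in whichever context is current on the calling thread.

```cpp
#include <cassert>

// Toy stand-in for a rendering context; NOT the real EAGLContext.
struct Context {
    float clearColor[4];
    Context() : clearColor() {}   // zero-initialize the color
};

// Each thread has its own notion of the current context.
thread_local Context* g_currentContext = nullptr;

// Makes the given context current on the calling thread.
// Passing nullptr leaves the thread with no current context.
inline void SetCurrentContext(Context* context)
{
    g_currentContext = context;
}

// Returns the calling thread's current context, or nullptr if none.
inline Context* CurrentContext()
{
    return g_currentContext;
}

// Modeled loosely on glClearColor: there is no context parameter, so the
// state change applies to whichever context is current on this thread.
inline void ClearColor(float r, float g, float b, float a)
{
    assert(g_currentContext != nullptr);
    g_currentContext->clearColor[0] = r;
    g_currentContext->clearColor[1] = g;
    g_currentContext->clearColor[2] = b;
    g_currentContext->clearColor[3] = a;
}
```

Switching the current context between two calls sends each state change to a different object, which is one reason juggling several contexts invites subtle bugs.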

If you have a C/C++ background, the drawView method declaration in Example 1-1 may look odd. It’s less jarring if you’re familiar with UML syntax, but UML uses - and + to denote private and public methods, respectively; with Objective-C, - and + denote instance methods and class methods. (Class methods in Objective-C are somewhat similar to C++ static methods, but in Objective-C, the class itself is a proper object.)

Take a look at the top of the GLView.mm file that Xcode generated. Everything between @implementation and @end is the definition of the GLView class. Xcode created three methods for you: initWithFrame, drawRect (which may be commented out), and dealloc. Note that these methods do not have declarations in the header file that Xcode generated. In this respect, an Objective-C method is similar to a plain old function in C; it needs a forward declaration only if it gets called before it’s defined. I usually declare all methods in the header file anyway to be consistent with C++ class declarations.

Take a closer look at the first method in the file:

    - (id) initWithFrame: (CGRect) frame
    {
        if (self = [super initWithFrame:frame]) {
            // Initialize code...
        }
        return self;
    }

This is an Objective-C initializer method, which is somewhat analogous to a C++ constructor. The return type and argument types are enclosed in parentheses, similar to C-style casting syntax. The conditional in the if statement accomplishes several things at once: it calls the base implementation of initWithFrame, assigns the object’s pointer to self, and checks the result for success.

In Objective-C parlance, you don’t call methods on objects; you send messages to objects. The square bracket syntax denotes a message. Rather than a comma-separated list of values, arguments are denoted with a whitespace-separated list of name-value pairs. The idea is that messages can vaguely resemble English sentences. For example, consider this statement, which adds an element to an NSMutableDictionary:

[myDictionary setValue: 30 forKey: @"age"];

If you read the argument list aloud, you get an English sentence! Well, sort of.

That’s enough of an Objective-C lesson for now. Let’s get back to the HelloArrow application. In GLView.mm, provide the implementation to the layerClass method by adding the following snippet after the @implementation line:

    + (Class) layerClass
    {
        return [CAEAGLLayer class];
    }

This simply overrides the default implementation of layerClass to return an OpenGL-friendly layer type. The class method is similar to the typeof operator found in other languages; it returns an object that represents the type itself, rather than an instance of the type.

Note

The + prefix means that this is an override of a class method rather than an instance method. This type of override is a feature of Objective-C rarely found in other languages.

Now, go back to initWithFrame, and replace the contents of the if block with some EAGL initialization code, as shown in Example 1-2.

    - (id) initWithFrame: (CGRect) frame
    {
        if (self = [super initWithFrame:frame]) {
            CAEAGLLayer* eaglLayer = (CAEAGLLayer*) super.layer;
            eaglLayer.opaque = YES;

            m_context = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES1];

            if (!m_context || ![EAGLContext setCurrentContext:m_context]) {
                [self release];
                return nil;
            }

            // Initialize code...
        }
        return self;
    }

Retrieve the layer property from the base class (UIView), and downcast it from CALayer into a CAEAGLLayer. This is safe because of the override to the layerClass method.

Set the opaque property on the layer to indicate that you do not need Quartz to handle transparency. This is a performance benefit that Apple recommends in all OpenGL programs. Don’t worry, you can easily use OpenGL to handle alpha blending.

Create an EAGLContext object, and tell it which version of OpenGL you need, which is ES 1.1.

Tell the EAGLContext to make itself current, which means any subsequent OpenGL calls in this thread will be tied to it.

If context creation fails or if setCurrentContext fails, then poop out and return nil.

Next, continue filling in the initialization code with some OpenGL setup. Replace the “Initialize code” comment in Example 1-2 with Example 1-3.

    GLuint framebuffer, renderbuffer;
    glGenFramebuffersOES(1, &framebuffer);
    glGenRenderbuffersOES(1, &renderbuffer);

    glBindFramebufferOES(GL_FRAMEBUFFER_OES, framebuffer);
    glBindRenderbufferOES(GL_RENDERBUFFER_OES, renderbuffer);

    [m_context
        renderbufferStorage:GL_RENDERBUFFER_OES
        fromDrawable: eaglLayer];

    glFramebufferRenderbufferOES(
        GL_FRAMEBUFFER_OES, GL_COLOR_ATTACHMENT0_OES,
        GL_RENDERBUFFER_OES, renderbuffer);

    glViewport(0, 0, CGRectGetWidth(frame), CGRectGetHeight(frame));

    [self drawView];

Example 1-3 starts off by generating two OpenGL identifiers, one for a renderbuffer and one for a framebuffer. Briefly, a renderbuffer is a 2D surface filled with some type of data (in this case, color), and a framebuffer is a bundle of renderbuffers. You’ll learn more about framebuffer objects (FBOs) in later chapters.

Note

The use of FBOs is an advanced feature that is not part of the core OpenGL ES 1.1 API, but it is specified in an OpenGL extension that all iPhones support. In OpenGL ES 2.0, FBOs are included in the core API. It may seem odd to use this advanced feature in the simple HelloArrow program, but all OpenGL iPhone applications need to leverage FBOs to draw anything to the screen.

The renderbuffer and framebuffer are both of type GLuint, which is the type that OpenGL uses to represent various objects that it manages. You could just as easily use unsigned int in lieu of GLuint, but I recommend using the GL-prefixed types for objects that get passed to the API. If nothing else, the GL-prefixed types make it easier for humans to identify which pieces of your code interact with OpenGL.

After generating identifiers for the framebuffer and renderbuffer, Example 1-3 then binds these objects to the pipeline. When an object is bound, it can be modified or consumed by subsequent OpenGL operations. After binding the renderbuffer, storage is allocated by sending the renderbufferStorage message to the EAGLContext object.

Note

For an off-screen surface, you would use the OpenGL command glRenderbufferStorage to perform allocation, but in this case you’re associating the renderbuffer with an EAGL layer. You’ll learn more about off-screen surfaces later in this book.

Next, the glFramebufferRenderbufferOES command is used to attach the renderbuffer object to the framebuffer object.

After this, the glViewport command is issued. You can think of this as setting up a coordinate system. In Chapter 2 you’ll learn more precisely what’s going on here.
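As a preview of what the viewport does, the sketch below applies the standard OpenGL viewport transform, which maps normalized device coordinates in the range [-1, +1] onto the rectangle passed to glViewport. The Viewport and WindowPoint structs and the NdcToWindow function are hypothetical helpers written for this illustration; OpenGL performs this mapping internally.

```cpp
#include <cassert>

// Mirrors the four arguments of glViewport(x, y, width, height).
struct Viewport {
    int x;
    int y;
    int width;
    int height;
};

// A position in window coordinates (origin at the lower-left corner).
struct WindowPoint {
    float x;
    float y;
};

// Maps normalized device coordinates to window coordinates:
//   xw = (xd + 1) * width  / 2 + x
//   yw = (yd + 1) * height / 2 + y
inline WindowPoint NdcToWindow(const Viewport& vp, float ndcX, float ndcY)
{
    assert(vp.width >= 0 && vp.height >= 0);  // negative sizes are invalid
    WindowPoint p;
    p.x = (ndcX + 1.0f) * (vp.width * 0.5f) + vp.x;
    p.y = (ndcY + 1.0f) * (vp.height * 0.5f) + vp.y;
    return p;
}
```

With a 320×480 viewport anchored at the origin, the center of normalized space (0, 0) lands at the middle of the view, and the corners (-1, -1) and (+1, +1) land on the lower-left and upper-right corners.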

The final call in Example 1-3 is to the drawView method. Go ahead and create the drawView implementation:

    - (void) drawView
    {
        glClearColor(0.5f, 0.5f, 0.5f, 1);
        glClear(GL_COLOR_BUFFER_BIT);

        [m_context presentRenderbuffer:GL_RENDERBUFFER_OES];
    }

This uses OpenGL’s “clear” mechanism to fill the buffer with a solid color. First the color is set to gray using four values (red, green, blue, alpha). Then, the clear operation is issued. Finally, the EAGLContext object is told to present the renderbuffer to the screen. Rather than drawing directly to the screen, most OpenGL programs render to a buffer that is then presented to the screen in an atomic operation, just like we’re doing here.

You can remove the drawRect stub that Xcode provided for you. The drawRect method is typically used for a “paint refresh” in more traditional UIKit-based applications; in 3D applications, you’ll want finer control over when rendering occurs.

At this point, you almost have a fully functioning OpenGL ES program, but there’s one more loose end to tie up. You need to clean up when the GLView object is destroyed. Replace the definition of dealloc with the following:

    - (void) dealloc
    {
        if ([EAGLContext currentContext] == m_context)
            [EAGLContext setCurrentContext:nil];

        [m_context release];
        [super dealloc];
    }

You can now build and run the program, but you won’t even see the gray background color just yet. This brings us to the next step: hooking up the application delegate.

Hooking Up the Application Delegate

The application delegate template (HelloArrowAppDelegate.h) that Xcode provided contains nothing more than an instance of UIWindow. Let’s add a pointer to an instance of the GLView class along with a couple method declarations (new/changed lines are shown in bold):

    #import <UIKit/UIKit.h>
    #import "GLView.h"

    @interface HelloArrowAppDelegate : NSObject <UIApplicationDelegate> {
        UIWindow* m_window;
        GLView* m_view;
    }

    @property (nonatomic, retain) IBOutlet UIWindow *m_window;

    @end

If you performed the instructions in Optional: Creating a Clean Slate, you won’t see the @property line, which is fine. Interface Builder leverages Objective-C’s property mechanism to establish connections between objects, but we’re not using Interface Builder or properties in this book. In brief, the @property keyword declares a property; the @synthesize keyword defines accessor methods.

Note that the Xcode template already had a window member, but I renamed it to m_window. This is in keeping with the coding conventions that we use throughout this book.

Note

I recommend using Xcode’s Refactor feature to rename this variable because it will also rename the corresponding property (if it exists). Simply right-click the window variable and choose Refactor. If you did not make the changes shown in Optional: Creating a Clean Slate, you must use Refactor so that the xib file knows the window is now represented by m_window.

Now open the corresponding

HelloArrowAppDelegate.m file.

Xcode already provided skeleton implementations for

applicationDidFinishLaunching and

dealloc as part of the Window-Based

Application template that we selected to create our

project.

Note

Since you need this file to handle both Objective-C and C++, you must rename the extension to .mm. Right-click the file to bring up the action menu, and then select Rename.

Flesh out the file as shown in Example 1-4.

#import "HelloArrowAppDelegate.h"

#import <UIKit/UIKit.h>

#import "GLView.h"

@implementation HelloArrowAppDelegate

- (BOOL) application: (UIApplication*) application

didFinishLaunchingWithOptions: (NSDictionary*) launchOptions

{

CGRect screenBounds = [[UIScreen mainScreen] bounds];

m_window = [[UIWindow alloc] initWithFrame: screenBounds];

m_view = [[GLView alloc] initWithFrame: screenBounds];

[m_window addSubview: m_view];

[m_window makeKeyAndVisible];

return YES;

}

- (void) dealloc

{

[m_view release];

[m_window release];

[super dealloc];

}

@end

Example 1-4 uses the alloc-init pattern to construct the window and view objects, passing in the bounding rectangle for the entire screen.

If you haven’t removed the Interface Builder bits as described in Optional: Creating a Clean Slate, you’ll need to make a couple changes to the previous code listing:

- Add a new line after @implementation:

@synthesize m_window;

As mentioned previously, the @synthesize keyword defines a set of property accessors, and Interface Builder uses properties to hook things up.

- Remove the line that constructs m_window. Interface Builder has a special way of constructing the window behind the scenes. (Leave in the calls to makeKeyAndVisible and release.)

Compile and build, and you should now see a solid gray screen. Hooray!

Setting Up the Icons and Launch Image

To set a custom launch icon for your application, create a 57×57 PNG file (72×72 for the iPad), and add it to your Xcode project in the Resources folder. If you refer to a PNG file that is not in the same location as your project folder, Xcode will copy it for you; be sure to check the box labeled “Copy items into destination group’s folder (if needed)” before you click Add. Then, open the HelloArrow-Info.plist file (also in the Resources folder), find the Icon file property, and enter the name of your PNG file.

The iPhone will automatically give your icon rounded corners and a shiny overlay. If you want to turn this feature off, find the HelloArrow-Info.plist file in your Xcode project, select the last row, click the + button, and choose Icon already includes gloss and bevel effects from the menu. Don’t do this unless you’re really sure of yourself; Apple wants users to have a consistent look in SpringBoard (the built-in program used to launch apps).

In addition to the 57×57 launch icon, Apple recommends that you also provide a 29×29 miniature icon for the Spotlight search and Settings screen. The procedure is similar except that the filename must be Icon-Small.png, and there’s no need to modify the .plist file.

For the splash screen, the procedure is similar to the small icon, except that the filename must be Default.png and there’s no need to modify the .plist file. The iPhone fills the entire screen with your image, so the ideal size is 320×480, unless you want to see an ugly stretchy effect. Apple’s guidelines say that this image isn’t a splash screen at all but a “launch image” whose purpose is to create a swift and seamless startup experience. Rather than showing a creative logo, Apple wants your launch image to mimic the starting screen of your running application. Of course, many applications ignore this rule!

Dealing with the Status Bar

Even though your application fills the

renderbuffer with gray, the iPhone’s status bar still appears at the top

of the screen. One way of dealing with this would be adding the

following line to

didFinishLaunchingWithOptions:

[application setStatusBarHidden: YES withAnimation: UIStatusBarAnimationNone];

The problem with this approach is that the status bar does not hide until after the splash screen animation. For HelloArrow, let’s remove the pesky status bar from the very beginning. Find the HelloArrow-Info.plist file in your Xcode project, and add a new property by selecting the last row, clicking the + button, choosing “Status bar is initially hidden” from the menu, and checking the box.

Of course, for some applications, you’ll want to keep the status bar visible—after all, the user might want to keep an eye on battery life and connectivity status! If your application has a black background, you can add a Status bar style property and select the black style. For nonblack backgrounds, the semitransparent style often works well.

Defining and Consuming the Rendering Engine Interface

At this point, you have a walking skeleton for HelloArrow, but you still don’t have the rendering layer depicted in Figure 1-7. Add a file to your Xcode project to define the C++ interface. Right-click the Classes folder, and choose Add→New file, select C and C++, and choose Header File. Call it IRenderingEngine.hpp. The .hpp extension signals that this is a pure C++ file; no Objective-C syntax is allowed.[2] Replace the contents of this file with Example 1-5.

// Physical orientation of a handheld device, equivalent to UIDeviceOrientation.

enum DeviceOrientation {

DeviceOrientationUnknown,

DeviceOrientationPortrait,

DeviceOrientationPortraitUpsideDown,

DeviceOrientationLandscapeLeft,

DeviceOrientationLandscapeRight,

DeviceOrientationFaceUp,

DeviceOrientationFaceDown,

};

// Creates an instance of the renderer and sets up various OpenGL state.

struct IRenderingEngine* CreateRenderer1();

// Interface to the OpenGL ES renderer; consumed by GLView.

struct IRenderingEngine {

virtual void Initialize(int width, int height) = 0;

virtual void Render() const = 0;

virtual void UpdateAnimation(float timeStep) = 0;

virtual void OnRotate(DeviceOrientation newOrientation) = 0;

virtual ~IRenderingEngine() {}

};

It seems redundant to include an enumeration

for device orientation when one already exists in an iPhone header

(namely, UIDevice.h), but this makes the IRenderingEngine interface portable to

other environments.
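One practical payoff of this portability is that the interface can be exercised without any iPhone headers at all, for example in a desktop test build. The following is only a sketch under that assumption: RecordingEngine is a hypothetical stand-in (it is not part of HelloArrow) that implements IRenderingEngine and simply records what it was asked to do. The enumeration and interface are repeated from Example 1-5 so that the snippet compiles by itself.

```cpp
#include <string>
#include <vector>

// Repeated from Example 1-5 so this sketch compiles on its own.
enum DeviceOrientation {
    DeviceOrientationUnknown,
    DeviceOrientationPortrait,
    DeviceOrientationPortraitUpsideDown,
    DeviceOrientationLandscapeLeft,
    DeviceOrientationLandscapeRight,
    DeviceOrientationFaceUp,
    DeviceOrientationFaceDown,
};

struct IRenderingEngine {
    virtual void Initialize(int width, int height) = 0;
    virtual void Render() const = 0;
    virtual void UpdateAnimation(float timeStep) = 0;
    virtual void OnRotate(DeviceOrientation newOrientation) = 0;
    virtual ~IRenderingEngine() {}
};

// Hypothetical stand-in (not part of HelloArrow): records calls instead of drawing.
class RecordingEngine : public IRenderingEngine {
public:
    RecordingEngine() : m_lastOrientation(DeviceOrientationUnknown) {}

    void Initialize(int, int) { m_calls.push_back("Initialize"); }
    void Render() const { m_calls.push_back("Render"); }
    void UpdateAnimation(float) { m_calls.push_back("UpdateAnimation"); }
    void OnRotate(DeviceOrientation newOrientation)
    {
        m_lastOrientation = newOrientation;
        m_calls.push_back("OnRotate");
    }

    const std::vector<std::string>& Calls() const { return m_calls; }
    DeviceOrientation LastOrientation() const { return m_lastOrientation; }

private:
    // Render() is const in the interface, so the call log has to be mutable.
    mutable std::vector<std::string> m_calls;
    DeviceOrientation m_lastOrientation;
};
```

Note that the enumerators are declared in the same order as Apple’s UIDeviceOrientation values (0 through 6). Any code that converts between the two types with a plain cast depends on that ordering staying intact.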

Since the view class consumes the rendering

engine interface, you need to add an IRenderingEngine

pointer to the GLView class declaration,

along with some fields and methods to help with rotation and animation.

Example 1-6 shows the complete class declaration. New

fields and methods are shown in bold. Note that we removed the two

OpenGL ES 1.1 #imports; these OpenGL calls are moving

to the RenderingEngine1 class. The EAGL header is not

part of the OpenGL standard, but it’s required to create the OpenGL ES

context.

#import "IRenderingEngine.hpp"
#import <OpenGLES/EAGL.h>
#import <QuartzCore/QuartzCore.h>

@interface GLView : UIView {
@private
    EAGLContext* m_context;
    IRenderingEngine* m_renderingEngine;
    float m_timestamp;
}

- (void) drawView: (CADisplayLink*) displayLink;
- (void) didRotate: (NSNotification*) notification;

@end

Example 1-7 is the full

listing for the class implementation. Calls to the rendering engine are

highlighted in bold. Note that GLView no longer

contains any OpenGL calls; we’re delegating all OpenGL work to the

rendering engine.

#import <OpenGLES/EAGLDrawable.h>

#import "GLView.h"

#import "mach/mach_time.h"

#import <OpenGLES/ES2/gl.h> // <-- for GL_RENDERBUFFER only

@implementation GLView

+ (Class) layerClass

{

return [CAEAGLLayer class];

}

- (id) initWithFrame: (CGRect) frame

{

if (self = [super initWithFrame:frame]) {

CAEAGLLayer* eaglLayer = (CAEAGLLayer*) super.layer;

eaglLayer.opaque = YES;

m_context = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES1];

if (!m_context || ![EAGLContext setCurrentContext:m_context]) {

[self release];

return nil;

}

m_renderingEngine = CreateRenderer1();

[m_context

renderbufferStorage:GL_RENDERBUFFER

fromDrawable: eaglLayer];

m_renderingEngine->Initialize(CGRectGetWidth(frame), CGRectGetHeight(frame));

[self drawView: nil];

m_timestamp = CACurrentMediaTime();

CADisplayLink* displayLink;

displayLink = [CADisplayLink displayLinkWithTarget:self

selector:@selector(drawView:)];

[displayLink addToRunLoop:[NSRunLoop currentRunLoop]

forMode:NSDefaultRunLoopMode];

[[UIDevice currentDevice] beginGeneratingDeviceOrientationNotifications];

[[NSNotificationCenter defaultCenter]

addObserver:self

selector:@selector(didRotate:)

name:UIDeviceOrientationDidChangeNotification

object:nil];

}

return self;

}

- (void) didRotate: (NSNotification*) notification

{

UIDeviceOrientation orientation = [[UIDevice currentDevice] orientation];

m_renderingEngine->OnRotate((DeviceOrientation) orientation);

[self drawView: nil];

}

- (void) drawView: (CADisplayLink*) displayLink

{

if (displayLink != nil) {

float elapsedSeconds = displayLink.timestamp - m_timestamp;

m_timestamp = displayLink.timestamp;

m_renderingEngine->UpdateAnimation(elapsedSeconds);

}

m_renderingEngine->Render();

[m_context presentRenderbuffer:GL_RENDERBUFFER];

}

@end

This completes the Objective-C portion of the project, but it won’t build yet because you still need to implement the rendering engine. There’s no need to dissect all the code in Example 1-7, but a brief summary follows:

- The initWithFrame method calls the factory method to instantiate the C++ renderer. It also sets up two event handlers. One is for the “display link,” which fires every time the screen refreshes. The other event handler responds to orientation changes.

- The didRotate event handler casts the iPhone-specific UIDeviceOrientation to our portable DeviceOrientation type and then passes it on to the rendering engine.

- The drawView method, called in response to a display link event, computes the elapsed time since it was last called and passes that value into the renderer’s UpdateAnimation method. This allows the renderer to update any animations or physics that it might be controlling.

- The drawView method also issues the Render command and presents the renderbuffer to the screen.

Note

At the time of writing, Apple recommends

CADisplayLink for triggering OpenGL rendering. An

alternative strategy is leveraging the NSTimer

class. CADisplayLink became available with iPhone

OS 3.1, so if you need to support older versions of the iPhone OS,

take a look at NSTimer in the

documentation.

Implementing the Rendering Engine

In this section, you’ll create an

implementation class for the IRenderingEngine

interface. Right-click the Classes folder, choose

Add→New file, click the C and

C++ category, and select the C++ File template. Call it

RenderingEngine1.cpp, and deselect the “Also create

RenderingEngine1.h” option, since you’ll declare the class directly

within the .cpp file. Enter the class declaration

and factory method shown in Example 1-8.

#include <OpenGLES/ES1/gl.h>

#include <OpenGLES/ES1/glext.h>

#include "IRenderingEngine.hpp"

class RenderingEngine1 : public IRenderingEngine {

public:

RenderingEngine1();

void Initialize(int width, int height);

void Render() const;

void UpdateAnimation(float timeStep) {}

void OnRotate(DeviceOrientation newOrientation) {}

private:

GLuint m_framebuffer;

GLuint m_renderbuffer;

};

IRenderingEngine* CreateRenderer1()

{

return new RenderingEngine1();

}

For now, UpdateAnimation

and OnRotate are implemented with stubs; you’ll add

support for the rotation feature after we get up and running.

Example 1-9 shows more of the code from RenderingEngine1.cpp with the OpenGL initialization code.

struct Vertex {

float Position[2];

float Color[4];

};

// Define the positions and colors of two triangles.

const Vertex Vertices[] = {

{{-0.5, -0.866}, {1, 1, 0.5f, 1}},

{{0.5, -0.866}, {1, 1, 0.5f, 1}},

{{0, 1}, {1, 1, 0.5f, 1}},

{{-0.5, -0.866}, {0.5f, 0.5f, 0.5f}},

{{0.5, -0.866}, {0.5f, 0.5f, 0.5f}},

{{0, -0.4f}, {0.5f, 0.5f, 0.5f}},

};

RenderingEngine1::RenderingEngine1()

{

glGenRenderbuffersOES(1, &m_renderbuffer);

glBindRenderbufferOES(GL_RENDERBUFFER_OES, m_renderbuffer);

}

void RenderingEngine1::Initialize(int width, int height)

{

// Create the framebuffer object and attach the color buffer.

glGenFramebuffersOES(1, &m_framebuffer);

glBindFramebufferOES(GL_FRAMEBUFFER_OES, m_framebuffer);

glFramebufferRenderbufferOES(GL_FRAMEBUFFER_OES,

GL_COLOR_ATTACHMENT0_OES,

GL_RENDERBUFFER_OES,

m_renderbuffer);

glViewport(0, 0, width, height);

glMatrixMode(GL_PROJECTION);

// Initialize the projection matrix.

const float maxX = 2;

const float maxY = 3;

glOrthof(-maxX, +maxX, -maxY, +maxY, -1, 1);

glMatrixMode(GL_MODELVIEW);

}

Example 1-9 first defines a POD type (plain old data) that represents the structure of each vertex that makes up the triangles. As you’ll learn in the chapters to come, a vertex in OpenGL can be associated with a variety of attributes. HelloArrow requires only two attributes: a 2D position and an RGBA color.

In more complex OpenGL applications, the vertex data is usually read from an external file or generated on the fly. In this case, the geometry is so simple that the vertex data is defined within the code itself. Two triangles are specified using six vertices. The first triangle is yellow, the second gray (see Figure 1-4, shown earlier).
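Because each vertex keeps its position and color side by side in one struct, the array is interleaved in memory. The sketch below repeats the vertex data and derives the three numbers that matter when describing such an array to OpenGL: the stride between vertices, the byte offset of the color within a vertex, and the vertex count. Stride, ColorOffset, and VertexCount are names made up for this illustration.

```cpp
#include <cstddef>

struct Vertex {
    float Position[2];
    float Color[4];
};

// Same geometry as Example 1-9: a yellow triangle and a gray triangle.
const Vertex Vertices[] = {
    {{-0.5f, -0.866f}, {1, 1, 0.5f, 1}},
    {{0.5f, -0.866f},  {1, 1, 0.5f, 1}},
    {{0, 1},           {1, 1, 0.5f, 1}},
    {{-0.5f, -0.866f}, {0.5f, 0.5f, 0.5f}},
    {{0.5f, -0.866f},  {0.5f, 0.5f, 0.5f}},
    {{0, -0.4f},       {0.5f, 0.5f, 0.5f}},
};

// Bytes from the start of one vertex to the start of the next.
const std::size_t Stride = sizeof(Vertex);

// Bytes from the start of a vertex to its first color component.
const std::size_t ColorOffset = offsetof(Vertex, Color);

// Number of vertices in the array, computed rather than hardcoded.
const std::size_t VertexCount = sizeof(Vertices) / sizeof(Vertex);
```

With 4-byte floats, this works out to a 24-byte stride, an 8-byte color offset, and 6 vertices. Computing the count from sizeof means you can add triangles to the array without touching the drawing code.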

Next, Example 1-9

divides up some framebuffer initialization work between the constructor

and the Initialize method. Between instancing the

rendering engine and calling Initialize, the caller

(GLView) is responsible for allocating the

renderbuffer’s storage. Allocation of the renderbuffer isn’t done with

the rendering engine because it requires Objective-C.

Last but not least,

Initialize sets up the viewport transform and

projection matrix. The projection matrix defines

the 3D volume that contains the visible portion of the scene. This will

be explained in detail in the next chapter.

To recap, here’s the startup sequence:

1. Generate an identifier for the renderbuffer, and bind it to the pipeline.

2. Allocate the renderbuffer’s storage by associating it with an EAGL layer. This has to be done in the Objective-C layer.

3. Create a framebuffer object, and attach the renderbuffer to it.

4. Set up the vertex transformation state with glViewport and glOrthof.

Example 1-10 contains

the implementation of the Render method.

void RenderingEngine1::Render() const
{
    glClearColor(0.5f, 0.5f, 0.5f, 1);
    glClear(GL_COLOR_BUFFER_BIT);

    glEnableClientState(GL_VERTEX_ARRAY);
    glEnableClientState(GL_COLOR_ARRAY);

    glVertexPointer(2, GL_FLOAT, sizeof(Vertex), &Vertices[0].Position[0]);
    glColorPointer(4, GL_FLOAT, sizeof(Vertex), &Vertices[0].Color[0]);

    GLsizei vertexCount = sizeof(Vertices) / sizeof(Vertex);
    glDrawArrays(GL_TRIANGLES, 0, vertexCount);

    glDisableClientState(GL_VERTEX_ARRAY);
    glDisableClientState(GL_COLOR_ARRAY);
}

We’ll examine much of this in the next chapter, but briefly here’s what’s going on:

- Enable the two vertex attributes (position and color).

- Tell OpenGL how to fetch the data for the position and color attributes. We’ll examine these in detail later in the book; for now, see Figure 1-8.

- Execute the draw command with glDrawArrays, specifying GL_TRIANGLES for the topology, 0 for the starting vertex, and vertexCount for the number of vertices. This function call marks the exact time that OpenGL fetches the data from the pointers specified in the preceding gl*Pointer calls; this is also when the triangles are actually rendered to the target surface.

- Disable the two vertex attributes; they need to be enabled only during the preceding draw command. It’s bad form to leave attributes enabled because subsequent draw commands might want to use a completely different set of vertex attributes. In this case, we could get by without disabling them because the program is so simple, but it’s a good habit to follow.

Congratulations, you created a complete OpenGL program from scratch! Figure 1-9 shows the result.

Handling Device Orientation

Earlier in the chapter, I promised you would

learn how to rotate the arrow in response to an orientation change.

Since you already created the listener in the UIView

class in Example 1-7, all that remains is handling it

in the rendering engine.

First add a new floating-point field to the

RenderingEngine1 class called m_currentAngle. This represents an angle

in degrees, not radians. Note the changes to UpdateAnimation and

OnRotate (they are no longer stubs and will be

defined shortly).

class RenderingEngine1 : public IRenderingEngine {

public:

RenderingEngine1();

void Initialize(int width, int height);

void Render() const;

void UpdateAnimation(float timeStep);

void OnRotate(DeviceOrientation newOrientation);

private:

float m_currentAngle;

GLuint m_framebuffer;

GLuint m_renderbuffer;

};

Now let’s implement the

OnRotate method as follows:

void RenderingEngine1::OnRotate(DeviceOrientation orientation)

{

float angle = 0;

switch (orientation) {

case DeviceOrientationLandscapeLeft:

angle = 270;

break;

case DeviceOrientationPortraitUpsideDown:

angle = 180;

break;

case DeviceOrientationLandscapeRight:

angle = 90;

break;

}

m_currentAngle = angle;

}

Note that orientations such as

Unknown, Portrait,

FaceUp, and FaceDown are not

included in the switch statement, so the angle

defaults to zero in those cases.
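To make the mapping easy to check away from the device, here is the same logic as a standalone function. This is just a sketch: AngleForOrientation is a hypothetical name (HelloArrow performs the mapping inside OnRotate), and the enumeration is repeated from Example 1-5 so the snippet is self-contained.

```cpp
// Repeated from Example 1-5 so this sketch compiles on its own.
enum DeviceOrientation {
    DeviceOrientationUnknown,
    DeviceOrientationPortrait,
    DeviceOrientationPortraitUpsideDown,
    DeviceOrientationLandscapeLeft,
    DeviceOrientationLandscapeRight,
    DeviceOrientationFaceUp,
    DeviceOrientationFaceDown,
};

// Hypothetical free-function version of the mapping inside OnRotate.
// Returns the arrow's rotation in degrees for the given orientation.
float AngleForOrientation(DeviceOrientation orientation)
{
    switch (orientation) {
        case DeviceOrientationLandscapeLeft:
            return 270;
        case DeviceOrientationPortraitUpsideDown:
            return 180;
        case DeviceOrientationLandscapeRight:
            return 90;
        default:
            return 0; // Unknown, Portrait, FaceUp, and FaceDown
    }
}
```

Spelling out the default case makes the fallback to zero explicit and keeps compilers from warning about enumerators that the switch doesn’t handle.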

Now you can rotate the arrow using a call to

glRotatef in the Render method, as

shown in Example 1-11. New code lines are shown

in bold. This also adds some calls to glPushMatrix

and glPopMatrix to prevent rotations from

accumulating. You’ll learn more about these commands (including

glRotatef) in the next chapter.

void RenderingEngine1::Render() const

{

glClearColor(0.5f, 0.5f, 0.5f, 1);

glClear(GL_COLOR_BUFFER_BIT);

glPushMatrix();

glRotatef(m_currentAngle, 0, 0, 1);

glEnableClientState(GL_VERTEX_ARRAY);

glEnableClientState(GL_COLOR_ARRAY);

glVertexPointer(2, GL_FLOAT, sizeof(Vertex), &Vertices[0].Position[0]);

glColorPointer(4, GL_FLOAT, sizeof(Vertex), &Vertices[0].Color[0]);

GLsizei vertexCount = sizeof(Vertices) / sizeof(Vertex);

glDrawArrays(GL_TRIANGLES, 0, vertexCount);

glDisableClientState(GL_VERTEX_ARRAY);

glDisableClientState(GL_COLOR_ARRAY);

glPopMatrix();

}

Animating the Rotation

You now have a HelloArrow program that rotates in response to an orientation change, but it’s lacking a bit of grace; most iPhone applications smoothly rotate the image, rather than suddenly jolting it by 90°.

It turns out that Apple provides

infrastructure for smooth rotation via the UIViewController class, but this is not

the recommended approach for OpenGL ES applications. There are several

reasons for this:

For best performance, Apple recommends avoiding interaction between Core Animation and OpenGL ES.

Ideally, the renderbuffer stays the same size and aspect ratio for the lifetime of the application. This helps performance and simplifies code.

In graphically intense applications, developers need to have complete control over animations and rendering.

To achieve the animation effect, Example 1-12 adds a new floating-point field to the

RenderingEngine1 class called

m_desiredAngle. This represents the destination value

of the current animation; if no animation is occurring, then

m_currentAngle and m_desiredAngle are equal.

Example 1-12 also

introduces a floating-point constant called

RevolutionsPerSecond to represent angular velocity,

and the private method RotationDirection, which I’ll

explain later.

#include <OpenGLES/ES1/gl.h>
#include <OpenGLES/ES1/glext.h>
#include "IRenderingEngine.hpp"

static const float RevolutionsPerSecond = 1;

class RenderingEngine1 : public IRenderingEngine {
public:
    RenderingEngine1();
    void Initialize(int width, int height);
    void Render() const;
    void UpdateAnimation(float timeStep);
    void OnRotate(DeviceOrientation newOrientation);
private:
    float RotationDirection() const;
    float m_desiredAngle;
    float m_currentAngle;
    GLuint m_framebuffer;
    GLuint m_renderbuffer;
};

...

void RenderingEngine1::Initialize(int width, int height)
{
    // Create the framebuffer object and attach the color buffer.
    glGenFramebuffersOES(1, &m_framebuffer);
    glBindFramebufferOES(GL_FRAMEBUFFER_OES, m_framebuffer);
    glFramebufferRenderbufferOES(GL_FRAMEBUFFER_OES,
                                 GL_COLOR_ATTACHMENT0_OES,
                                 GL_RENDERBUFFER_OES,
                                 m_renderbuffer);

    glViewport(0, 0, width, height);

    glMatrixMode(GL_PROJECTION);

    // Initialize the projection matrix.
    const float maxX = 2;
    const float maxY = 3;
    glOrthof(-maxX, +maxX, -maxY, +maxY, -1, 1);

    glMatrixMode(GL_MODELVIEW);

    // Initialize the rotation animation state.
    OnRotate(DeviceOrientationPortrait);
    m_currentAngle = m_desiredAngle;
}

Now you can modify

OnRotate so that it changes the desired angle rather

than the current angle:

void RenderingEngine1::OnRotate(DeviceOrientation orientation)

{

float angle = 0;

switch (orientation) {

...

}

m_desiredAngle = angle;

}

Before implementing

UpdateAnimation, think about how the application

decides whether to rotate the arrow clockwise or counterclockwise.

Simply checking whether the desired angle is greater than the current angle is incorrect; if the user turns the device from a 270° orientation to a 0° orientation, the angle should increase up to 360° (which wraps around to 0°), a 90° turn, rather than decrease all the way through 270°.

This is where the

RotationDirection method comes in. It returns –1, 0,

or +1, depending on which direction the arrow needs to spin. Assume that

m_currentAngle and m_desiredAngle are both normalized to

values between 0 (inclusive) and 360 (exclusive).

float RenderingEngine1::RotationDirection() const

{

float delta = m_desiredAngle - m_currentAngle;

if (delta == 0)

return 0;

bool counterclockwise = ((delta > 0 && delta <= 180) || (delta < -180));

return counterclockwise ? +1 : -1;

}

Now you’re ready to write the

UpdateAnimation method, which takes a time step in

seconds:

void RenderingEngine1::UpdateAnimation(float timeStep)

{

float direction = RotationDirection();

if (direction == 0)

return;

float degrees = timeStep * 360 * RevolutionsPerSecond;

m_currentAngle += degrees * direction;

// Ensure that the angle stays within [0, 360).

if (m_currentAngle >= 360)

m_currentAngle -= 360;

else if (m_currentAngle < 0)

m_currentAngle += 360;

// If the rotation direction changed, then we overshot the desired angle.

if (RotationDirection() != direction)

m_currentAngle = m_desiredAngle;

}

This is fairly straightforward, but that last conditional might look curious. Since this method incrementally adjusts the angle with floating-point numbers, it could easily overshoot the destination, especially for large time steps. Those last two lines correct this by simply snapping the angle to the desired position. You’re not trying to emulate a shaky compass here, even though doing so might be a compelling iPhone application!
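If you want to convince yourself that the wraparound and the snap behave as described, the animation logic can be lifted out of the renderer and stepped by hand. The following is a sketch for experimentation only: ArrowAnimation and StepsToSettle are hypothetical names, and the struct simply mirrors the two angle fields and the two methods shown above without touching OpenGL.

```cpp
// Hypothetical, OpenGL-free copy of the animation state (for experimentation only).
struct ArrowAnimation {
    float currentAngle;         // degrees, kept within [0, 360)
    float desiredAngle;         // degrees, kept within [0, 360)
    float revolutionsPerSecond; // angular velocity

    // Returns +1 for counterclockwise, -1 for clockwise, 0 when already there.
    float RotationDirection() const
    {
        float delta = desiredAngle - currentAngle;
        if (delta == 0)
            return 0;
        bool counterclockwise = ((delta > 0 && delta <= 180) || (delta < -180));
        return counterclockwise ? +1.0f : -1.0f;
    }

    // Advances the current angle toward the desired angle by one time step.
    void Update(float timeStep)
    {
        float direction = RotationDirection();
        if (direction == 0)
            return;

        currentAngle += timeStep * 360 * revolutionsPerSecond * direction;

        // Ensure that the angle stays within [0, 360).
        if (currentAngle >= 360)
            currentAngle -= 360;
        else if (currentAngle < 0)
            currentAngle += 360;

        // If the rotation direction changed, then we overshot the desired angle.
        if (RotationDirection() != direction)
            currentAngle = desiredAngle;
    }
};

// Steps the animation at a fixed rate until it settles; returns the update count.
int StepsToSettle(ArrowAnimation& animation, float timeStep, int maxSteps)
{
    int steps = 0;
    while (animation.currentAngle != animation.desiredAngle && steps < maxSteps) {
        animation.Update(timeStep);
        ++steps;
    }
    return steps;
}
```

Starting at 270° with a desired angle of 0°, RotationDirection reports +1, and at 60 updates per second the angle climbs by roughly 6° per step, wraps past 360°, and snaps to exactly 0°. Keep in mind that the overshoot check compares directions, so a time step large enough to carry the arrow most of the way around the circle in a single update can slip past it; at display-link rates this isn’t a concern.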

You now have a fully functional HelloArrow application. As with the other examples, you can find the complete code on this book’s website (see the preface for more information on code samples).

HelloArrow with Shaders

In this section you’ll create a new rendering engine that uses ES 2.0. This will show you the immense difference between ES 1.1 and 2.0. Personally I like the fact that Khronos decided against making ES 2.0 backward compatible with ES 1.1; the API is leaner and more elegant as a result.

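Thanks to the layered architecture of HelloArrow, it’s easy to add ES 2.0 support while retaining 1.1 functionality for older devices. You’ll be making these four changes:

1. Add new source files to the project for the vertex shader and fragment shader.

2. Update the framework references if needed.

3. Change the logic in GLView so that it tries to create an ES 2.0 context first and falls back to ES 1.1.

4. Create the new RenderingEngine2 class by modifying a copy of RenderingEngine1.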

These changes are described in detail in the following sections. Note that step 4 is somewhat artificial; in the real world, you’ll probably want to write your ES 2.0 backend from the ground up.

Shaders

By far the most significant new feature in ES

2.0 is the shading language. Shaders are

relatively small pieces of code that run on the graphics processor, and

they are divided into two categories: vertex shaders and fragment

shaders. Vertex shaders are used to transform the vertices that you

submit with glDrawArrays, while fragment shaders

compute the colors for every pixel in every triangle. Because of the

highly parallel nature of graphics processors, thousands of shader

instances execute simultaneously.

Shaders are written in a C-like language called OpenGL Shading Language (GLSL), but unlike C, you do not compile GLSL programs within Xcode. Shaders are compiled at runtime, on the iPhone itself. Your application submits shader source to the OpenGL API in the form of a C-style string, which OpenGL then compiles to machine code.

Note

Some implementations of OpenGL ES do allow you to compile your shaders offline; on these platforms your application submits binaries rather than strings at runtime. Currently, the iPhone supports shader compilation only at runtime. Its ARM processor compiles the shaders and sends the resulting machine code over to the graphics processor for execution. That little ARM does some heavy lifting!

The first step to upgrading HelloArrow is creating a new project folder for the shaders. Right-click the HelloArrow root node in the Groups & Files pane, and choose Add→New Group. Call the new group Shaders.

Next, right-click the Shaders folder, and choose Add→New file. Select the Empty File template in the Other category. Name it Simple.vert, and add /Shaders after HelloArrow in the location field. You can deselect the checkbox under Add To Targets, because there’s no need to deploy the file to the device. In the next dialog, allow it to create a new directory. Now repeat the procedure with a new file called Simple.frag.

Before showing you the code for these two

files, let me explain a little trick. Rather than reading in the shaders

with file I/O, you can simply embed them within your C/C++ code through

the use of #include. Multiline strings are usually

cumbersome in C/C++, but they can

be tamed with a sneaky little macro:

#define STRINGIFY(A) #A

Later in this section, we’ll place this macro

definition at the top of the rendering engine source code, right before

#including the shaders. The entire shader gets

wrapped into a single string—without the use of quotation marks on every

line!

Examples 1-13 and 1-14 show the contents

of the vertex shader and fragment shader. For brevity’s sake, we’ll

leave out the STRINGIFY accouterments in future

shader listings, but we’re including them here for clarity.

First the vertex shader declares a set of attributes, varyings, and uniforms. For now you can think of these as connection points between the vertex shader and the outside world. The vertex shader itself simply passes through a color and performs a standard transformation on the position. You’ll learn more about transformations in the next chapter. The fragment shader (Example 1-14) is even more trivial.

Again, the varying parameter is a connection point. The fragment shader itself does nothing but pass on the color that it’s given.

Frameworks

Next, make sure all the frameworks in HelloArrow are referencing a 3.1 (or greater) version of the SDK. To find out which version a particular framework is using, right-click it in Xcode’s Groups & Files pane, and select Get Info to look at the full path.

Note

There’s a trick to quickly change all your

SDK references by manually modifying the project file. First you need

to quit Xcode. Next, open Finder, right-click

HelloArrow.xcodeproj, and select Show

package contents. Inside, you’ll find a file called

project.pbxproj, which you can then open with

TextEdit. Find the two places that define SDKROOT,

and change them appropriately.

GLView

You might recall passing in a version constant when constructing the OpenGL context; this is another place that obviously needs to be changed. In the Classes folder, open GLView.mm, and change this snippet:

m_context = [[EAGLContext alloc] initWithAPI:kEAGLRenderingAPIOpenGLES1];

if (!m_context || ![EAGLContext setCurrentContext:m_context]) {

[self release];

return nil;

}

m_renderingEngine = CreateRenderer1();

to this:

EAGLRenderingAPI api = kEAGLRenderingAPIOpenGLES2;

m_context = [[EAGLContext alloc] initWithAPI:api];

if (!m_context || ForceES1) {

api = kEAGLRenderingAPIOpenGLES1;

[m_context release]; // Avoids leaking the ES 2.0 context when ForceES1 is set; a no-op for nil.

m_context = [[EAGLContext alloc] initWithAPI:api];

}

if (!m_context || ![EAGLContext setCurrentContext:m_context]) {

[self release];

return nil;

}

if (api == kEAGLRenderingAPIOpenGLES1) {

m_renderingEngine = CreateRenderer1();

} else {

m_renderingEngine = CreateRenderer2();

}

The previous code snippet creates a fallback

path to allow the application to work on older devices while leveraging

ES 2.0 on newer devices. For convenience, the ES 1.1 path is used even

on newer devices if the ForceES1 constant is enabled.

Add this to the top of GLView.mm:

const bool ForceES1 = false;
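The decision made by the fallback path boils down to a small truth table, which the following sketch captures in portable C++. RenderingApi and ChooseApi are hypothetical names for this illustration; in GLView.mm the same decision is made inline using the EAGL constants.

```cpp
// Hypothetical stand-ins for the two EAGL rendering API constants.
enum RenderingApi {
    RenderingApiES1,
    RenderingApiES2,
};

// es2ContextCreated stands for "creating an ES 2.0 context succeeded";
// forceES1 plays the role of the ForceES1 constant.
RenderingApi ChooseApi(bool es2ContextCreated, bool forceES1)
{
    if (!es2ContextCreated || forceES1)
        return RenderingApiES1;
    return RenderingApiES2;
}
```

ES 2.0 is chosen only when the context can be created and the override is off; every other combination lands on ES 1.1, which is what keeps the application running on older devices.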

There’s no need to make any changes to the

IRenderingEngine interface, but you do need to add a

declaration for the new CreateRenderer2 factory

method in IRenderingEngine.hpp:

...

// Create an instance of the renderer and set up various OpenGL state.

struct IRenderingEngine* CreateRenderer1();

struct IRenderingEngine* CreateRenderer2();

// Interface to the OpenGL ES renderer; consumed by GLView.

struct IRenderingEngine {

virtual void Initialize(int width, int height) = 0;

virtual void Render() const = 0;

virtual void UpdateAnimation(float timeStep) = 0;

virtual void OnRotate(DeviceOrientation newOrientation) = 0;

virtual ~IRenderingEngine() {}

};

RenderingEngine Implementation

You’re done with the requisite changes to the glue code; now it’s time for the meat. Use Finder to create a copy of RenderingEngine1.cpp (right-click or Control-click RenderingEngine1.cpp and choose Reveal in Finder), and name the new file RenderingEngine2.cpp. Add it to your Xcode project by right-clicking the Classes group and choosing Add→Existing Files. Next, revamp the top part of the file as shown in Example 1-15. New or modified lines are shown in bold.

#include <OpenGLES/ES2/gl.h>
#include <OpenGLES/ES2/glext.h>
#include <cmath>
#include <iostream>
#include "IRenderingEngine.hpp"

#define STRINGIFY(A) #A
#include "../Shaders/Simple.vert"
#include "../Shaders/Simple.frag"

static const float RevolutionsPerSecond = 1;

class RenderingEngine2 : public IRenderingEngine {
public:
    RenderingEngine2();
    void Initialize(int width, int height);
    void Render() const;
    void UpdateAnimation(float timeStep);
    void OnRotate(DeviceOrientation newOrientation);
private:
    float RotationDirection() const;
    GLuint BuildShader(const char* source, GLenum shaderType) const;
    GLuint BuildProgram(const char* vShader, const char* fShader) const;
    void ApplyOrtho(float maxX, float maxY) const;
    void ApplyRotation(float degrees) const;
    float m_desiredAngle;
    float m_currentAngle;
    GLuint m_simpleProgram;
    GLuint m_framebuffer;
    GLuint m_renderbuffer;
};

As you might expect, the implementation of

Render needs to be replaced. Flip back to Example 1-11 to compare it with Example 1-16.

void RenderingEngine2::Render() const

{

glClearColor(0.5f, 0.5f, 0.5f, 1);

glClear(GL_COLOR_BUFFER_BIT);

ApplyRotation(m_currentAngle);

GLuint positionSlot = glGetAttribLocation(m_simpleProgram, "Position");

GLuint colorSlot = glGetAttribLocation(m_simpleProgram, "SourceColor");

glEnableVertexAttribArray(positionSlot);

glEnableVertexAttribArray(colorSlot);

GLsizei stride = sizeof(Vertex);

const GLvoid* pCoords = &Vertices[0].Position[0];

const GLvoid* pColors = &Vertices[0].Color[0];

glVertexAttribPointer(positionSlot, 2, GL_FLOAT, GL_FALSE, stride, pCoords);

glVertexAttribPointer(colorSlot, 4, GL_FLOAT, GL_FALSE, stride, pColors);

GLsizei vertexCount = sizeof(Vertices) / sizeof(Vertex);

glDrawArrays(GL_TRIANGLES, 0, vertexCount);

glDisableVertexAttribArray(positionSlot);

glDisableVertexAttribArray(colorSlot);

}

As you can see, the 1.1 and 2.0 versions of Render are quite different, but at a high level they basically follow the same sequence of actions.
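The stride and pointer arguments passed to glVertexAttribPointer in Render depend on how the vertex data is laid out in memory. The sketch below works through that arithmetic without touching OpenGL. It assumes a Vertex structure with two position floats followed by four color floats, which is what the Position and Color accesses in Render imply; the DemoVertices data is a placeholder, not the real arrow geometry.

```cpp
#include <cstddef>

// Assumed layout: two floats of position followed by four floats of color.
struct Vertex {
    float Position[2];
    float Color[4];
};

// Placeholder data for illustration only.
const Vertex DemoVertices[] = {
    {{-0.5f, -0.866f}, {1, 1, 0.5f, 1}},
    {{ 0.5f, -0.866f}, {1, 1, 0.5f, 1}},
    {{ 0.0f,  1.0f  }, {1, 1, 0.5f, 1}},
};

// Distance in bytes from one vertex to the next: the stride argument.
const std::size_t Stride = sizeof(Vertex);

// Byte offset of the color data within each vertex.
const std::size_t ColorOffset = offsetof(Vertex, Color);

// Number of vertices, computed the same way Render does it.
const std::size_t VertexCount = sizeof(DemoVertices) / sizeof(Vertex);
```

Because positions and colors are interleaved in a single array, both attributes share the same stride and differ only in their starting address.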

Framebuffer objects have been promoted from a mere OpenGL extension to the core API. Luckily OpenGL has a very strict and consistent naming convention, so this change is fairly mechanical. Simply remove the OES suffix everywhere it appears. For function calls, the suffix is OES; for constants the suffix is _OES. The constructor is very easy to update:

RenderingEngine2::RenderingEngine2()

{

glGenRenderbuffers(1, &m_renderbuffer);

glBindRenderbuffer(GL_RENDERBUFFER, m_renderbuffer);

}
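To make the renaming rule concrete, here is a small sketch that applies it to identifier names as plain strings. StripOesSuffix is a hypothetical helper written only for this illustration; it is not part of OpenGL or of the HelloArrow project, and in practice you would simply do the rename with your editor's find-and-replace.

```cpp
#include <string>

// Hypothetical helper: returns true if name ends with suffix and is
// strictly longer than it.
static bool EndsWith(const std::string& name, const std::string& suffix)
{
    return name.size() > suffix.size() &&
           name.compare(name.size() - suffix.size(), suffix.size(), suffix) == 0;
}

// Hypothetical helper: maps an ES 1.1 extension name to its ES 2.0 core
// name by dropping a trailing "_OES" (constants) or "OES" (functions).
std::string StripOesSuffix(const std::string& name)
{
    const std::string constantSuffix = "_OES";
    const std::string functionSuffix = "OES";
    // Check the longer suffix first so that constants lose the underscore too.
    if (EndsWith(name, constantSuffix))
        return name.substr(0, name.size() - constantSuffix.size());
    if (EndsWith(name, functionSuffix))
        return name.substr(0, name.size() - functionSuffix.size());
    return name;
}
```

For example, glGenRenderbuffersOES maps to glGenRenderbuffers and GL_RENDERBUFFER_OES maps to GL_RENDERBUFFER, while names without the suffix, such as glViewport, pass through unchanged.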

The only remaining public method that needs to be updated is Initialize, shown in Example 1-17.

void RenderingEngine2::Initialize(int width, int height)

{

// Create the framebuffer object and attach the color buffer.

glGenFramebuffers(1, &m_framebuffer);

glBindFramebuffer(GL_FRAMEBUFFER, m_framebuffer);

glFramebufferRenderbuffer(GL_FRAMEBUFFER,

GL_COLOR_ATTACHMENT0,

GL_RENDERBUFFER,

m_renderbuffer);

glViewport(0, 0, width, height);

m_simpleProgram = BuildProgram(SimpleVertexShader, SimpleFragmentShader);

glUseProgram(m_simpleProgram);

// Initialize the projection matrix.

ApplyOrtho(2, 3);

// Initialize rotation animation state.

OnRotate(DeviceOrientationPortrait);

m_currentAngle = m_desiredAngle;

}

This calls the private method BuildProgram, which in turn makes two calls on the private method BuildShader. In OpenGL terminology, a program is a module composed of several shaders that get linked together. Example 1-18 shows the implementation of these two methods.

GLuint RenderingEngine2::BuildProgram(const char* vertexShaderSource,

const char* fragmentShaderSource) const

{

GLuint vertexShader = BuildShader(vertexShaderSource, GL_VERTEX_SHADER);

GLuint fragmentShader = BuildShader(fragmentShaderSource, GL_FRAGMENT_SHADER);

GLuint programHandle = glCreateProgram();

glAttachShader(programHandle, vertexShader);

glAttachShader(programHandle, fragmentShader);

glLinkProgram(programHandle);

GLint linkSuccess;

glGetProgramiv(programHandle, GL_LINK_STATUS, &linkSuccess);

if (linkSuccess == GL_FALSE) {

GLchar messages[256];

glGetProgramInfoLog(programHandle, sizeof(messages), 0, &messages[0]);

std::cout << messages;

exit(1);

}

return programHandle;

}

GLuint RenderingEngine2::BuildShader(const char* source, GLenum shaderType) const

{

GLuint shaderHandle = glCreateShader(shaderType);

glShaderSource(shaderHandle, 1, &source, 0);

glCompileShader(shaderHandle);

GLint compileSuccess;

glGetShaderiv(shaderHandle, GL_COMPILE_STATUS, &compileSuccess);

if (compileSuccess == GL_FALSE) {

GLchar messages[256];

glGetShaderInfoLog(shaderHandle, sizeof(messages), 0, &messages[0]);

std::cout << messages;

exit(1);

}

return shaderHandle;

}

You might be surprised to see some console I/O in Example 1-18. This dumps out the shader compiler output if an error occurs. Trust me, you’ll always want to gracefully handle these kinds of errors, no matter how simple you think your shaders are. The console output doesn’t show up on the iPhone screen, but it can be seen in Xcode’s Debugger Console window, which is shown via the Run→Console menu. See Figure 1-10 for an example of how a shader compilation error shows up in the console window.

Note that the log in Figure 1-10 shows the version of OpenGL ES being used. To include this information, go back to the GLView class, and add the lines in bold:

if (api == kEAGLRenderingAPIOpenGLES1) {

NSLog(@"Using OpenGL ES 1.1");

m_renderingEngine = CreateRenderer1();

} else {

NSLog(@"Using OpenGL ES 2.0");

m_renderingEngine = CreateRenderer2();

}

The preferred method of outputting diagnostic information in Objective-C is NSLog, which automatically prefixes your string with a timestamp and follows it with a carriage return. (Recall that Objective-C string objects are distinguished from C-style strings using the @ symbol.)

Return to RenderingEngine2.cpp. Two methods remain: ApplyOrtho and ApplyRotation. Since ES 2.0 does not provide glOrthof or glRotatef, you need to implement them manually, as seen in Example 1-19. (In the next chapter, we’ll create a simple math library to simplify code like this.) The calls to glUniformMatrix4fv provide values for the uniform variables that were declared in the shader source.

void RenderingEngine2::ApplyOrtho(float maxX, float maxY) const

{

float a = 1.0f / maxX;

float b = 1.0f / maxY;

float ortho[16] = {

a, 0, 0, 0,

0, b, 0, 0,

0, 0, -1, 0,

0, 0, 0, 1

};

GLint projectionUniform = glGetUniformLocation(m_simpleProgram, "Projection");

glUniformMatrix4fv(projectionUniform, 1, 0, &ortho[0]);

}

void RenderingEngine2::ApplyRotation(float degrees) const

{

float radians = degrees * 3.14159f / 180.0f;

float s = std::sin(radians);

float c = std::cos(radians);

float zRotation[16] = {

c, s, 0, 0,

-s, c, 0, 0,

0, 0, 1, 0,

0, 0, 0, 1

};

GLint modelviewUniform = glGetUniformLocation(m_simpleProgram, "Modelview");

glUniformMatrix4fv(modelviewUniform, 1, 0, &zRotation[0]);

}

Again, don’t be intimidated by the matrix math; I’ll explain it all in the next chapter.
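If you’d like to convince yourself that these matrices do what their names claim, you can try them on the CPU. The following is a minimal sketch rather than part of HelloArrow: BuildZRotation and BuildOrtho fill arrays with the same values as the listings above, and Transform is a hypothetical helper that applies a matrix to a 2D point. It assumes the column-major layout that glUniformMatrix4fv expects when its transpose argument is 0, and it assumes the shader multiplies the matrix by a column vector.

```cpp
#include <cmath>

struct Vec2 { float x, y; };

// Fills m with the same z-axis rotation matrix used by ApplyRotation.
void BuildZRotation(float degrees, float m[16])
{
    float radians = degrees * 3.14159f / 180.0f;
    float s = std::sin(radians);
    float c = std::cos(radians);
    const float r[16] = {
        c, s, 0, 0,
       -s, c, 0, 0,
        0, 0, 1, 0,
        0, 0, 0, 1
    };
    for (int i = 0; i < 16; ++i)
        m[i] = r[i];
}

// Fills m with the same orthographic projection used by ApplyOrtho.
void BuildOrtho(float maxX, float maxY, float m[16])
{
    float a = 1.0f / maxX;
    float b = 1.0f / maxY;
    const float o[16] = {
        a, 0,  0, 0,
        0, b,  0, 0,
        0, 0, -1, 0,
        0, 0,  0, 1
    };
    for (int i = 0; i < 16; ++i)
        m[i] = o[i];
}

// Hypothetical helper: multiplies a column-major 4x4 matrix by the column
// vector (p.x, p.y, 0, 1) and returns the resulting x and y.
Vec2 Transform(const float m[16], Vec2 p)
{
    Vec2 out;
    out.x = m[0] * p.x + m[4] * p.y + m[12];
    out.y = m[1] * p.x + m[5] * p.y + m[13];
    return out;
}
```

Under those assumptions, rotating the point (1, 0) by 90 degrees lands very close to (0, 1), which is a counterclockwise turn, and ApplyOrtho(2, 3) maps the corner (2, 3) to roughly (1, 1), the edge of the normalized viewing volume.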

Next, go through the file, and change any remaining occurrences of RenderingEngine1 to RenderingEngine2, including the factory method (and be sure to change the name of that method to CreateRenderer2). This completes all the modifications required to run against ES 2.0. Phew! It’s obvious by now that ES 2.0 is “closer to the metal” than ES 1.1.

Wrapping Up

This chapter has been a headfirst dive into the world of OpenGL ES development for the iPhone. We established some patterns and practices that we’ll use throughout this book, and we constructed our first application from the ground up, using both versions of OpenGL ES!

In the next chapter, we’ll go over some graphics fundamentals and explain the various concepts used in HelloArrow. If you’re already a computer graphics ninja, you’ll be able to skim through quickly.
