Chapter 4. Concurrency and Parallelism

Writing multithreaded programs is one of the most difficult tasks many programmers will face. They are difficult to reason about, and often exhibit nondeterministic behavior: a typical program that utilizes concurrency facilities will sometimes yield different results given the same inputs, a result of ill-defined execution order that can additionally produce race conditions and deadlocks. Some of these conditions are hard to detect, and none of them are easy to debug.

Most languages give us paltry few resources to cope with the cognitive burden of concurrency. Threads and locks, in all their forms, are often the only real tools at our disposal, and we are often victims of how difficult they are to use properly and efficiently. In which order should locks be acquired and released? Does a reader have to acquire a lock to read a value another thread might be writing to? How can multithreaded programs that rely upon locks be comprehensively tested? Complexity spirals out of control in a hurry; meanwhile, you are left debugging a race condition that only occurs in production or a deadlock that happens on this machine, but not that one.

Considering how low-level they are, the continued reliance upon threads, locks, and pale derivatives as the sole “user-facing” solution to the varied complexities of concurrency is a remarkable contrast to the never-ending stampede of activity seen over the years in developing more effective and less error-prone abstractions. Clojure’s response to this has many facets:

As we discussed in Chapter 2, minimize the amount of mutable state in your programs, with the help of immutable values and collections with reliable semantics and efficient operations.

When you do need to manage changing state over time and in conjunction with concurrent threads of execution, isolate that state and constrain the ways in which that state can be changed. This is the basis of Clojure’s reference types, which we’ll discuss shortly.

When you absolutely have no other choice—and are willing to shrug off the benefits of the semantic guarantees of Clojure’s reference types—make it straightforward to drop back to bare locks, threads, and the high-quality concurrency APIs provided by Java.

Clojure provides no silver bullet that makes concurrent programming instantly trivial, but it does provide some novel and now battle-tested tools to makes it far more tractable and reliable.

Shifting Computation Through Time and Space

Clojure provides a number of entities—delays, futures, and promises—that encapsulate discrete use cases for controlling when and how computations are performed. While only futures are solely concerned with concurrency, they are all often used to help implement specific concurrent semantics and mechanics.

Delays

A delay is a construct that suspends

some body of code, evaluating it only upon demand, when it is

dereferenced:

(def d (delay (println "Running...")

:done!))

;= #'user/d

(deref d)

; Running...

;= :done!Note

The deref abstraction is

defined by Clojure’s clojure.lang.IDeref interface; any type

that implements it acts as a container for a value. It may be

dereferenced, either via deref,

or the corresponding reader syntax, @.[124] Many Clojure entities are dereferenceable, including

delays, futures, promises, and all reference types, atoms, refs,

agents, and vars. We talk about them all in this chapter.

You can certainly accomplish the same sort of thing just by using functions:

(def a-fn (fn []

(println "Running...")

:done!))

;= #'user/a-fn

(a-fn)

; Running...

;= :done!However, delays provide a couple of compelling advantages.

Delays only evaluate their body of code

once, caching the return value. Thus,

subsequent accesses using deref

will return instantly, and not reevaluate that code:[125]

@d ;= :done!

A corollary to this is that multiple threads can safely attempt to dereference a delay for the first time; all of them will block until the delay’s code is evaluated (only once!), and a value is available.

When you may want to provide a value that contains some expensive-to-produce or optional data, you can use delays as useful (if crude) optimization mechanisms, where the end “user” of the value can opt into the costs associated with that data.

(defn get-document

[id]

; ... do some work to retrieve the identified document's metadata ...

{:url "http://www.mozilla.org/about/manifesto.en.html"

:title "The Mozilla Manifesto"

:mime "text/html"

:content (delay (slurp "http://www.mozilla.org/about/manifesto.en.html"))})  ;= #'user/get-document

(def d (get-document "some-id"))

;= #'user/d

d

;= {:url "http://www.mozilla.org/about/manifesto.en.html",

;= :title "The Mozilla Manifesto",

;= :mime "text/html",

;= :content #<Delay@2efb541d: :pending>}

;= #'user/get-document

(def d (get-document "some-id"))

;= #'user/d

d

;= {:url "http://www.mozilla.org/about/manifesto.en.html",

;= :title "The Mozilla Manifesto",

;= :mime "text/html",

;= :content #<Delay@2efb541d: :pending>}

Some parts of our program may be perfectly satisfied with the

metadata associated with a document and not require its content

at all, and so can avoid the costs associated with retrieving that

content. On the other hand, other parts of our application may

absolutely require the content, and still others might make use of

it if it is already available. This latter use

case is made possible with realized?, which polls a delay to see if its value has been

materialized yet:

(realized? (:content d)) ;= false @(:content d) ;= "<!DOCTYPE html><html>..." (realized? (:content d)) ;= true

Note

Note that realized? may

also be used with futures, promises, and lazy sequences.

realized? allows you to

immediately use data provided by a delay that has already been

dereferenced, but perhaps opt out of forcing the evaluation of a

delay if you know that doing so will be too expensive an operation

than you’re willing to allow at that point in time and can do

without its eventual value.

Futures

Before getting to more sophisticated topics like reference types, Clojure programmers often start off asking, “How do I start a new thread and run some code in it?” Now, you can use the JVM’s native threads if you have to (see Using Java’s Concurrency Primitives), but Clojure provides a kinder, gentler option in futures.

A Clojure future evaluates a body of code in another thread:[126]

(def long-calculation (future (apply + (range 1e8)))) ;= #'user/long-calculation

future returns immediately,

allowing the current thread of execution (such as your REPL) to

carry on. The result of evaluation will be retained by the future,

which you can obtain by dereferencing it:

@long-calculation ;= 4999999950000000

Just like a delay, dereferencing a future will block if the code it is evaluating has not completed yet; thus, this expression will block the REPL for five seconds before returning:

@(future (Thread/sleep 5000) :done!) ;= :done!

Also like delays, futures retain the value their body of code

evaluated to, so subsequent accesses via deref will return that value immediately.

Unlike delays, you can provide a timeout and a “timeout value”

when dereferencing a future, the latter being what deref will return if the specified timeout

is reached:[127]

(deref (future (Thread/sleep 5000) :done!)

1000

:impatient!)

;= :impatient!Futures are often used as a device to simplify the usage of

APIs that perform some concurrent aspect to their operation. For

example, say we knew that all users of the get-document function from Example 4-1 would need the :content value. Our first impulse might be

to synchronously retrieve the document’s :content within the scope of the get-document call, but this would make

every caller wait until that content is retrieved fully, even if the

caller doesn’t need the content immediately. Instead, we can use a

future for the value of :content;

this starts the retrieval of the content in another thread right

away, allowing the caller to get back to work without blocking on

that I/O. When the :content value

is later dereferenced for use, it is likely to block for less time

(if any), since the content retrieval had already been in

motion.

(defn get-document

[id]

; ... do some work to retrieve the identified document's metadata ...

{:url "http://www.mozilla.org/about/manifesto.en.html"

:title "The Mozilla Manifesto"

:mime "text/html"

:content (future (slurp "http://www.mozilla.org/about/manifesto.en.html"))})

The only change from Example 4-1 is replacing

delaywithfuture.

This requires no change on the part of clients (since they

continue to be interested only in dereferencing the value of

:content), but if callers are

likely to always require that data, this small change can prove to

be a significant improvement in throughput.

Futures carry a couple of advantages compared to starting up a native thread to run some code:

Clojure futures are evaluated within a thread pool that is shared with potentially blocking agent actions (which we discuss in Agents). This pooling of resources can make futures more efficient than creating native threads as needed.

Using

futureis much more concise than setting up and starting a native thread.Clojure futures (the value returned by

future) are instances ofjava.util.concurrent.Future, which can make it easier to interoperate with Java APIs that expect them.

Promises

Promises share many of the mechanics of delays and futures: a promise may be dereferenced with an optional timeout, dereferencing a promise will block until it has a value to provide, and a promise will only ever have one value. However, promises are distinct from delays and futures insofar as they are not created with any code or function that will eventually define its value:

(def p (promise)) ;= #'user/p

promise is initially a

barren container; at some later point in time, the promise may be

fulfilled by having a value delivered to it:

(realized? p) ;= false (deliver p 42) ;= #<core$promise$reify__1707@3f0ba812: 42> (realized? p) ;= true @p ;= 42

Thus, a promise is similar to a one-time, single-value pipe:

data is inserted at one end via deliver and retrieved at the other end by

deref. Such things are sometimes

called dataflow variables and are the building blocks of declarative

concurrency. This is a strategy where relationships between

concurrent processes are explicitly defined such that derivative

results are calculated on demand as soon as their inputs are

available, leading to deterministic behavior. A simple example would

involve three promises:

(def a (promise)) (def b (promise)) (def c (promise))

We can specify how these promises are related by creating a future that uses (yet to be delivered) values from some of the promises in order to calculate the value to be delivered to another:

(future (deliver c (+ @a @b)) (println "Delivery complete!"))

In this case, the value of c will not be delivered until both

a and b are available (i.e., realized?); until that time, the future

that will deliver the value to

c will block on dereferencing

a and b. Note that attempting to dereference

c (without a timeout) with the

promises in this state will block your REPL thread

indefinitely.

In most cases of dataflow programming, other threads will be

at work doing whatever computation that will eventually result in

the delivery of values to a and

b. We can short-circuit the

process by delivering values from the REPL;[128] as soon as both a

and b have values, the future

will unblock on dereferencing them and will be able to deliver the

final value to c:

(deliver a 15) ;= #<core$promise$reify__5727@56278e83: 15> (deliver b 16) ; Delivery complete! ;= #<core$promise$reify__5727@47ef7de4: 16> @c ;= 31

Promises don’t detect cyclic dependencies

This means that (deliver p

@p), where p is a

promise, will block indefinitely.

However, such blocked promises are not locked down, and the situation can be resolved:

(def a (promise)) (def b (promise)) (future (deliver a @b))(future (deliver b @a)) (realized? a)

;= false (realized? b) ;= false (deliver a 42)

;= #<core$promise$reify__5727@6156f1b0: 42> @a ;= 42 @b ;= 42

An immediately practical application of promises is in easily making callback-based APIs synchronous. Say you have a function that takes another function as a callback:

(defn call-service [arg1 arg2 callback-fn] ; ...perform service call, eventually invoking callback-fn with results... (future (callback-fn (+ arg1 arg2) (- arg1 arg2))))

Using this function’s results in a synchronous body of code

requires providing a callback, and then using any number of

different (relatively unpleasant) techniques to wait for the

callback to be invoked with the results. Alternatively, you can

write a simple wrapper on top of the asynchronous, callback-based

API that uses a promise’s blocking behavior on deref to enforce the synchronous semantics

for you. Assuming for the moment that all of the asynchronous

functions you’re interested in take the callback as their last

argument, this can be implemented as a general-purpose higher-order

function:

(defn sync-fn

[async-fn]

(fn [& args]

(let [result (promise)]

(apply async-fn (conj (vec args) #(deliver result %&)))

@result)))

((sync-fn call-service) 8 7)

;= (15 1)Parallelism on the Cheap

We’ll be examining all of Clojure’s flexible concurrency facilities in a bit, one of which—agents—can be used to orchestrate very efficient parallelization of workloads. However, sometimes you may find yourself wanting to parallelize some operation with as little ceremony as possible.

The flexibility of Clojure’s seq abstraction[129] makes implementing many routines in terms of processing sequences very easy. For example, say we had a function that uses a regular expression to find and return phone numbers found within other strings:

(defn phone-numbers

[string]

(re-seq #"(\d{3})[\.-]?(\d{3})[\.-]?(\d{4})" string))

;= #'user/phone-numbers

(phone-numbers " Sunil: 617.555.2937, Betty: 508.555.2218")

;= (["617.555.2937" "617" "555" "2937"] ["508.555.2218" "508" "555" "2218"])Simple enough, and applying it to any seq of strings is easy,

fast, and effective. These seqs could be loaded from disk using

slurp and file-seq, or be coming in as messages from a

message queue, or be the results obtained by retrieving large chunks

of text from a database. To keep things simple, we can dummy up a seq

of 100 strings, each about 1MB in size, suffixed with some phone

numbers:

(def files (repeat 100

(apply str

(concat (repeat 1000000 \space)

"Sunil: 617.555.2937, Betty: 508.555.2218"))))Let’s see how fast we can get all of the phone numbers from all of these “files”:

(time (dorun (map phone-numbers files))); "Elapsed time: 2460.848 msecs"

This is parallelizable though, and trivially so. There is a

cousin of map—pmap – that will parallelize the application of a function

across a sequence of values, returning a lazy seq of results just like

map:

(time (dorun (pmap phone-numbers files))); "Elapsed time: 1277.973 msecs"

Run on a dual-core machine, this roughly doubles the throughput

compared to the use of map in the

prior example; for this particular task and dataset, roughly a 4x

improvement could be expected on a four-core machine, and so on. Not

bad for a single-character change to a function name! While this might

look magical, it’s not; pmap is

simply using a number of futures—calibrated to suit the number of CPU

cores available—to spread the computation involved in evaluating

phone-numbers for each file across

each of those cores.

This works for many operations, but you still must use pmap judiciously. There

is a degree of overhead associated with

parallelizing operations like this. If the operation being

parallelized does not have a significant enough runtime, that overhead

will dominate the real work being performed; this can make a naive

application of pmap

slower than the equivalent use of map:

(def files (repeat 100000

(apply str

(concat (repeat 1000 \space)

"Sunil: 617.555.2937, Betty: 508.555.2218"))))

(time (dorun (map phone-numbers files)))

; "Elapsed time: 2649.807 msecs"

(time (dorun (pmap phone-numbers files)))

; "Elapsed time: 2772.794 msecs"The only change we’ve made here is to the data: each string is

now around 1K in size instead of 1MB in size. Even though the total

amount of work is the same (there are more “files”), the

parallelization overhead outstrips the gains we get from putting each

evaluation of phone-numbers onto a

different future/core. Because of this overhead, it is very common to

see speedups of something less than Nx (where N is the number of CPU cores available) when

using pmap. The lesson is clear:

use pmap when the operation you’re

performing is parallelizable in the first place, and is significant

enough for each value in the seq that its workload will eclipse the

process coordination inherent in its parallelization. Trying to force

pmap into service where it’s not

warranted can be disastrous.

There is often a workaround for such scenarios, however. You can

often efficiently parallelize a relatively trivial operation by

chunking your dataset so that each unit of parallelized work is

larger. In the above example, the unit of work is just 1K of text;

however, we can take steps to ensure that the unit of work is larger,

so that each value processed by pmap is a seq of 250 1K strings, thus

boosting the work done per future dispatch and cutting down on the

parallelization overhead:

(time (->> files

(partition-all 250)

(pmap (fn [chunk] (doall (map phone-numbers chunk))))  (apply concat)

dorun))

; "Elapsed time: 1465.138 msecs"

(apply concat)

dorun))

; "Elapsed time: 1465.138 msecs"

mapwill return a lazy seq, so we usedoallto force the realization of that lazy seq within the scope of the function provided topmap. Otherwise,phone-numberswould never be called at all in parallel, leaving the work of applying it to each string to whatever process might have consumed the lazy seq later.

By changing the chunk size of our workload, we’ve regained the benefits of parallelization even though our per-operation computation complexity dropped substantially when applied to many more smaller strings.

Two other parallelism constructs are built on top of pmap: pcalls and pvalues. The former evaluates any number of

no-arg functions provided as arguments, returning a lazy sequence of

their return values; the latter is a macro that does the same, but for

any number of expressions.

State and Identity

In Clojure, there is a clear distinction between state and identity. These concepts are almost universally conflated; we can see that conflation in its full glory here:

class Person {

public String name;

public int age;

public boolean wearsGlasses;

public Person (String name, int age, boolean wearsGlasses) {

this.name = name;

this.age = age;

this.wearsGlasses = wearsGlasses;

}

}

Person sarah = new Person("Sarah", 25, false);Nothing particularly odd, right? Just a Java class[130] with some fields, of which we can create instances. Actually, the problems here are legion.

We have established a reference to a Person, meant to represent "Sarah", who is apparently 25 years old.

Over time, Sarah has existed in many different states: Sarah as a

child, as a teenager, as an adult. At each point in time—say, last

Tuesday at 11:07 a.m.—Sarah has

precisely one state, and each state in time is

inviolate. It makes absolutely no sense to talk about changing one of

Sarah’s states. Her characteristics last Tuesday don’t change on

Wednesday; her state may change from one point in time to another, but

that doesn’t modify what she was previously.

Unfortunately, this Person

class and low-level references (really, just pointers) provided by

most languages are ill-suited to representing even this trivial—we

might say fundamental—concept. If Sarah is to turn 26 years old, our

only option is to clobber the particular state we have

available:[131]

sarah.age++;

Even worse, what happens when a particular change in Sarah’s state has to modify multiple attributes?

sarah.age++; sarah.wearsGlasses = true;

At any point in time between the execution of these two lines of code, Sarah’s age has been incremented, but she does not yet wear glasses. For some period of time (technically, an indeterminate period of time given the way modern processor architectures and language runtimes operate), Sarah may exist in an inconsistent state that is factually and perhaps semantically impossible, depending on our object model. This is the stuff that race conditions are made of, and a key motivator of deadlock-prone locking strategies.

Note that we can even change this sarah object to represent a completely

different person:

sarah.name = "John";

This is troublesome. The sarah object does not represent a single

state of Sarah, nor even the concept of Sarah as an identity. Rather,

it’s an unholy amalgam of the two. More generally, we cannot make any

reliable statements about prior states of a Person reference, particular instances of

Person are liable to change at any

time (of particular concern in programs with concurrent threads of

execution), and not only is it easy to put instances into inconsistent

states, it is the default.

The Clojure approach. What we really want to be able to say is that Sarah has an identity that represents her; not her at any particular point in time, but her as a logical entity throughout time. Further, we want to be able to say that that identity can have a particular state at any point in time, but that each state transition does not change history; thinking back to On the Importance of Values and the contrast between mutable objects and immutable values, this characterization of state would seem to carry many practical benefits as well as being semantically more sound. After all, in addition to wanting to ensure that a state of some identity is never internally inconsistent (something guaranteed by using immutable values), we may very well want to be able to easily and safely refer to Sarah as she was last Tuesday or last year.

Unlike most objects, Clojure data structures are immutable. This makes them ideal for representing state:

(def sarah {:name "Sarah" :age 25 :wears-glasses? false})

;= #'user/sarahThe map we store in the sarah

var is one state of Sarah at some point in time. Because the map is

immutable, we can be sure that any code that holds a reference to that

map will be able to safely use it for all time regardless of what

changes are made to other versions of it or to the state held by the

var. The var itself is one of Clojure’s reference

types, essentially a container with defined concurrency and

change semantics that can hold any value, and be used as a stable

identity. So, we can say that Sarah is represented by the sarah var, the state of which may change

over time according to the var’s semantics.

This is just a glimpse of how Clojure treats identity and state and how they relate over time as distinct concepts worthy of our attention.[132] The rest of this chapter will be devoted to exploring the mechanics of that treatment. In large part, this will consist of exploring Clojure’s four reference types, each of which implement different yet well-defined semantics for changing state over time. Along with Clojure’s emphasis on immutable values, these reference types and their semantics make it possible to design concurrent programs that take maximum advantage of the increasingly capable hardware we have available to us, while eliminating entire categories of bugs and failure conditions that would otherwise go with the territory of dealing with bare threads and locks.

Clojure Reference Types

Identities are represented in Clojure using four

reference types: vars, refs, agents, and atoms. All of these are very different in

certain ways, but let’s first talk about what they have in

common.

At their most fundamental level, references are just boxes that hold a value, where that value can be changed by certain functions (different for each reference type):

All references always contain some value

(even if that value is nil);

accessing one is always done using deref or @:

@(atom 12)

;= 12

@(agent {:c 42})

;= {:c 42}

(map deref [(agent {:c 42}) (atom 12) (ref "http://clojure.org") (var +)])

;= ({:c 42} 12 "http://clojure.org" #<core$_PLUS_ clojure.core$_PLUS_@65297549>)Dereferencing will return a snapshot of the

state of a reference when deref was

invoked. This doesn’t mean there’s copying of any sort when you obtain

a snapshot, simply that the returned state—assuming you’re using

immutable values for reference state, like Clojure’s collections—is

inviolate, but that the reference’s state at later points in time may

be different.

One critical guarantee of deref within the context of Clojure’s reference types is that

deref will never

block, regardless of the change semantics of the reference

type being dereferenced or the operations being applied to it in other

threads of execution. Similarly, dereferencing a reference type will

never interfere with other operations. This is in contrast with

delays, promises, and futures—which can block on deref if their value is not yet realized—and

most concurrency primitives in other languages, where readers are

often blocked by writers and vice versa.

“Setting” the value of a reference type is a more nuanced affair. Each reference type has its own semantics for managing change, and each type has its own family of functions for applying changes according to those semantics. Talking about those semantics and their corresponding functions will form the bulk of the rest of our discussion.

In addition to all being dereferenceable, all reference types:

May be decorated with metadata (see Metadata). Rather than using

with-metaorvary-meta, metadata on reference types may only be changed withalter-meta!, which modifies a reference’s metadata in-place.[133]Can notify functions you specify when the their state changes; these functions are called watches, which we discuss in Watches.

Can enforce constraints on the state they hold, potentially aborting change operations, using validator functions (see Validators).

Classifying Concurrent Operations

In thinking about Clojure’s reference types, we’ll repeatedly stumble across a couple of key concepts that can be used to characterize concurrent operations. Taken together, they can help us think clearly about how each type is best used.

Coordination. A coordinated operation is one where multiple actors must cooperate (or, at a minimum, be properly sequestered so as to not interfere with each other) in order to yield correct results. A classic example is any banking transaction: a process that aims to transfer monies from one account to another must ensure that the credited account not reflect an increased balance prior to the debited account reflecting a decreased balance, and that the transaction fail entirely if the latter has insufficient funds. Along the way, many other processes may provoke similar transactions involving the same accounts. Absent methods to coordinate the changes, some accounts could reflect incorrect balances for some periods, and transactions that should have failed (or should have succeeded) would succeed (or fail) improperly.

In contrast, an uncoordinated operation is one where multiple actors cannot impact each other negatively because their contexts are separated. For example, two different threads of execution can safely write to two different files on disk with no possibility of interfering with each other.

Synchronization. Synchronous operations are those where the caller’s thread of execution waits or blocks or sleeps until it may have exclusive access to a given context, whereas asynchronous operations are those that can be started or scheduled without blocking the initiating thread of execution.

Just these two concepts (or, four, if you count their duals) are sufficient to fully characterize many (if not most) concurrent operations you might encounter. Given that, it makes sense that Clojure’s reference types were designed to implement the semantics necessary to address permutations of these concepts, and that they can be conveniently classified according to the types of operations for which each is suited:[134]

When choosing which reference type(s) to use for a given problem, keep this classification in mind; if you can characterize a particular problem using it, then the most appropriate reference type will be obvious.

Note

You’ll notice that none of Clojure’s reference types are slated as implementing coordinated and asynchronous semantics. This combination of characteristics is more common in distributed systems, such as eventually consistent databases where changes are only guaranteed to be merged into a unified model over time. In contrast, Clojure is fundamentally interested in addressing in-process concurrency and parallelism.

Atoms

Atoms are the most basic reference type; they are identities that implement synchronous, uncoordinated, atomic compare-and-set modification. Thus, operations that modify the state of atoms block until the modification is complete, and each modification is isolated—on their own, there is no way to orchestrate the modification of two atoms.

Atoms are created using atom.

swap! is the most common modification operation used with

them, which sets the value of an atom to the result of applying some

function to the atom’s value and any additional arguments provided to

swap!:

(def sarah (atom {:name "Sarah" :age 25 :wears-glasses? false}))

;= #'user/sarah

(swap! sarah update-in [:age] + 3)  ;= {:age 28, :wears-glasses? false, :name "Sarah"}

;= {:age 28, :wears-glasses? false, :name "Sarah"}

Atoms are the minimum we need to do right by Sarah: every modification of an atom occurs atomically, so it’s safe to apply any function or composition of functions to an atom’s value. You can be sure that no other threads of execution will ever see an atom’s contents in an inconsistent or partially applied state:

(swap! sarah (comp #(update-in % [:age] inc)

#(assoc % :wears-glasses? true)))

;= {:age 29, :wears-glasses? true, :name "Sarah"}One thing you must keep in mind when using swap! is that, because atoms use

compare-and-set semantics, if the atom’s value changes before your

update function returns (as a

result of action by another thread of execution), swap! will retry, calling your update

function again with the atom’s newer value. swap! will continue to retry the

compare-and-set until it succeeds:

(def xs (atom #{1 2 3}))

;= #'user/xs

(wait-futures 1 (swap! xs (fn [v]

(Thread/sleep 250)

(println "trying 4")

(conj v 4)))

(swap! xs (fn [v]

(Thread/sleep 500)

(println "trying 5")

(conj v 5))))

;= nil

; trying 4

; trying 5  ; trying 5

@xs

;= #{1 2 3 4 5}

; trying 5

@xs

;= #{1 2 3 4 5}We can visualize the retry semantics of swap! like so:

If the value of atom a

changes between the time when function g is invoked and the time when it returns a

new value for a (a1 and a2, respectively),

swap! will discard that new value

and reevaluate the call with the latest available state of a. This will continue until the return value

of g can be set on a as the immediate successor of the state of

a with which it was invoked.

There is no way to constrain swap!’s retry semantics; given this, the

function you provide to swap!

must be pure, or things will surely go awry in

hard-to-predict ways.

Being a synchronous reference type, functions that change atom values do not return until they have completed:

(def x (atom 2000)) ;= #'user/x (swap! x #(Thread/sleep %));= nil

A “bare” compare-and-set!

operation is also provided for use with atoms, if you already think

you know what the value of the atom being modified is; it returns

true only if the atom’s value was

changed:

(compare-and-set! xs :wrong "new value") ;= false (compare-and-set! xs @xs "new value") ;= true @xs ;= "new value"

Warning

compare-and-set! does

not use value semantics; it requires that the

value in the atom be identical[135] to the expected value provided to it as its second

argument:

(def xs (atom #{1 2}))

;= #'user/xs

(compare-and-set! xs #{1 2} "new value")

;= falseFinally, there is a “nuclear option”: if you want to set the

state of an atom without regard for what it contains currently, there

is reset!:

(reset! xs :y) ;= :y @xs ;= :y

Now that we know about atoms, this is a good time to look at two facilities that all reference types support, since some later examples will use them.

Notifications and Constraints

We already learned about one common operation in Clojure Reference Types—dereferencing—which allows us to obtain the current value of a reference regardless of its particular type. There are certain other common things you’ll sometimes want to do with every type of reference that involve being able to monitor or validate state changes as they happen. All of Clojure’s reference types provide hooks for these, in the form of watches and validators.

Watches

Watches are functions that are called whenever the state of a reference has changed. If you are familiar with the “observer” design pattern, you will recognize the applicable use cases immediately, although watches are decidedly more general: a watch can be registered with any reference type, and all watches are functions—there are no special interfaces that must be implemented, and the notification machinery is provided for you.

All reference types start off with no watches, but they can be registered and removed at any time. A watch function must take four arguments: a key, the reference that’s changed (an atom, ref, agent, or var), the old state of the reference, and its new state:

(defn echo-watch[key identity old new] (println key old "=>" new)) ;= #'user/echo-watch (def sarah (atom {:name "Sarah" :age 25})) ;= #'user/sarah (add-watch sarah :echo echo-watch) ;= #<Atom@418bbf55: {:name "Sarah", :age 25}> (swap! sarah update-in [:age] inc) ; :echo {:name Sarah, :age 25} => {:name Sarah, :age 26} ;= {:name "Sarah", :age 26} (add-watch sarah :echo2 echo-watch)

;= #<Atom@418bbf55: {:name "Sarah", :age 26}> (swap! sarah update-in [:age] inc)

; :echo {:name Sarah, :age 26} => {:name Sarah, :age 27} ; :echo2 {:name Sarah, :age 26} => {:name Sarah, :age 27} ;= {:name "Sarah", :age 27}

Warning

Watch functions are called synchronously on the same thread that effected the reference’s state change in question. This means that, by the time your watch function has been called, the reference it is attached to could have been updated again from another thread of execution. Thus, you should rely only on the “old” and “new” values passed to the watch function, rather than dereferencing the host ref, agent, atom, or var.

The key you provide to add-watch can be used to remove the watch later on:

(remove-watch sarah :echo2)

;= #<Atom@418bbf55: {:name "Sarah", :age 27}>

(swap! sarah update-in [:age] inc)

; :echo {:name Sarah, :age 27} => {:name Sarah, :age 28}

;= {:name "Sarah", :age 28}Note that watches on a reference type are called whenever the reference’s state has been modified, but this does not guarantee that the state is different:

(reset! sarah @sarah)

; :echo {:name Sarah, :age 28} => {:name Sarah, :age 28}

;= {:name "Sarah", :age 28}Thus, it’s common for watch functions to check if the old and new states of the watched reference are equal before proceeding.

Generally speaking, watches are a great mechanism for triggering the propagation of local changes to other references or systems as appropriate. For example, they make it dead easy to keep a running log of a reference’s history:

(def history (atom ()))

(defn log->list

[dest-atom key source old new]

(when (not= old new)

(swap! dest-atom conj new)))

(def sarah (atom {:name "Sarah", :age 25}))

;= #'user/sarah

(add-watch sarah :record (partial log->list history))  ;= #<Atom@5143f787: {:age 25, :name "Sarah"}>

(swap! sarah update-in [:age] inc)

;= {:age 26, :name "Sarah"}

(swap! sarah update-in [:age] inc)

;= {:age 27, :name "Sarah"}

(swap! sarah identity)

;= #<Atom@5143f787: {:age 25, :name "Sarah"}>

(swap! sarah update-in [:age] inc)

;= {:age 26, :name "Sarah"}

(swap! sarah update-in [:age] inc)

;= {:age 27, :name "Sarah"}

(swap! sarah identity)  ;= {:age 27, :name "Sarah"}

(swap! sarah assoc :wears-glasses? true)

;= {:age 27, :wears-glasses? true, :name "Sarah"}

(swap! sarah update-in [:age] inc)

;= {:age 28, :wears-glasses? true, :name "Sarah"}

(pprint @history)

;= ;= nil

;= ; ({:age 28, :wears-glasses? true, :name "Sarah"}

;= ; {:age 27, :wears-glasses? true, :name "Sarah"}

;= ; {:age 27, :name "Sarah"}

;= ; {:age 26, :name "Sarah"})

;= {:age 27, :name "Sarah"}

(swap! sarah assoc :wears-glasses? true)

;= {:age 27, :wears-glasses? true, :name "Sarah"}

(swap! sarah update-in [:age] inc)

;= {:age 28, :wears-glasses? true, :name "Sarah"}

(pprint @history)

;= ;= nil

;= ; ({:age 28, :wears-glasses? true, :name "Sarah"}

;= ; {:age 27, :wears-glasses? true, :name "Sarah"}

;= ; {:age 27, :name "Sarah"}

;= ; {:age 26, :name "Sarah"})

We use

partialhere to bind in the atom to which the watch function will always log history.

Since

identityalways returns its sole argument unchanged, thisswap!will result in a state change in the reference, but the old and new states will be equal.log->listonly adds an entry if the new state is different, so this “repeated” state will not appear in the history.

Depending on how clever you get in your use of watches, you can also make the behavior of the watch function vary depending upon the key it’s registered under. A simple example would be a watch function that logged changes, not to an in-memory sink but to a database identified by its registered key:

(defn log->db

[db-id identity old new]

(when (not= old new)

(let [db-connection (get-connection db-id)]

...)))

(add-watch sarah "jdbc:postgresql://hostname/some_database" log->db)We’ll combine watches with refs and agents to great effect in Persisting reference states with an agent-based write-behind log.

Validators

Validators enable you to constrain a reference’s state however you like. A validator is a function of a single argument that is invoked just before any proposed new state is installed into a reference. If the validator returns logically false or throws an exception, then the state change is aborted with an exception.

A proposed change is the result of any change function you

attempt to apply to a reference. For example, the map of sarah that has had its :age slot incremented, but before swap! installs that updated state into the

reference. It is at this point that a validator function—if any has

been set on the affected reference—has a chance to veto it.

(def n (atom 1 :validator pos?)) ;= #'user/n (swap! n + 500) ;= 501 (swap! n - 1000) ;= #<IllegalStateException java.lang.IllegalStateException: Invalid reference state>

Because validator functions take a single argument, you can

readily use any applicable predicate you might have available

already, like pos?.

While all reference types may have a validator associated with

them, atoms, refs, and agents may be created

with them by providing the validator function as a :validator option to atom, ref, or agent. To add a validator to a var, or to

change the validator associated with an atom, ref, or agent, you can

use the set-validator!

function:

(def sarah (atom {:name "Sarah" :age 25}))

;= #'user/sarah

(set-validator! sarah :age)

;= nil

(swap! sarah dissoc :age)

;= #<IllegalStateException java.lang.IllegalStateException: Invalid reference state>You can make the message included in the thrown exception more

helpful by ensuring that the validator you use throws its own

exception, instead of simply returning false or nil upon a validation failure:[136]

(set-validator! sarah #(or (:age %)(throw (IllegalStateException. "People must have `:age`s!")))) ;= nil (swap! sarah dissoc :age) ;= #<IllegalStateException java.lang.IllegalStateException: People must have `:age`s!>

Remember that validators must return a logically true value, or the state change will be vetoed. In this case, if we implemented the validator using, for example,

#(when-not (:age %) (throw ...)), the validator would returnnilwhen the state did have an:ageslot, thus causing an unintentional validation failure.

While validators are very useful in general, they hold a special status with regard to refs, as we’ll learn about next and in particular in Enforcing local consistency by using validators.

Refs

Refs are Clojure’s coordinated reference type. Using them, you can ensure that multiple identities can participate in overlapping, concurrently applied operations with:

No possibility of the involved refs ever being in an observable inconsistent state

No possibility of race conditions among the involved refs

No manual use of locks, monitors, or other low-level synchronization primitives

No possibility of deadlocks

This is made possible by Clojure’s implementation of software transactional memory, which is used to manage all change applied to state held by refs.

Software Transactional Memory

In general terms, software transactional memory (STM) is any method of coordinating multiple concurrent modifications to a shared set of storage locations. Doing this in nearly any other language means you have to take on the management of locks yourself, accepting all that comes along with them. STM offers an alternative.

Just as garbage collection has largely displaced the need for manual memory management—eliminating a wide range of subtle and not-so-subtle bugs associated with it in the process—so has STM often been characterized as providing the same kind of systematic simplification of another error-prone programming practice, manual lock management. In both instances, using a proven, automated solution to address what is otherwise an error-prone manual activity both frees you from having to develop expertise in low-level details unrelated to your domain, and often produces end results with more desirable runtime characteristics than those attainable by experts in those low-level details.[137]

Clojure’s STM is implemented using techniques that have been relied upon by database management systems for decades.[138] As the name implies, each change to a set of refs has transactional semantics that you are sure to be familiar with from your usage of databases; each STM transaction ensures that changes to refs are made:

Atomically, so that all the changes associated with a transaction are applied, or none are.

Consistently, so that a transaction will fail if the changes to affected refs do not satisfy their respective constraints.

In isolation, so that an in-process transaction does not affect the states of involved refs as observed from within other transactions or other threads of execution in general.

Clojure’s STM therefore satisfies the A, C, and I properties of ACID (https://en.wikipedia.org/wiki/ACID), as you may understand it from the database world. The “D” property, durability, is not something that the STM is concerned with since it is purely an in-memory implementation.[139]

The Mechanics of Ref Change

With that background out of the way, let’s see what refs can do for us. Earlier in Classifying Concurrent Operations, we talked about banking transactions being an example of an operation that requires coordination among multiple identities and threads of execution. While this is true, banking is perhaps an overwrought example when it comes to demonstrating transactional semantics. It might be more enlightening (and entertaining!) to explore refs and Clojure’s STM as an ideal foundation for implementing a multiplayer game engine.

While some problems are rightfully described as “embarrassingly parallel” because of their potential to be parallelized given suitable facilities, we can say that multiplayer games are embarrassingly concurrent: the datasets involved are often massive, and it’s possible to have hundreds or thousands of independent players each provoking changes that must be applied in a coordinated, consistent fashion so as to ensure the game’s rules are reliably enforced.

Our “game”[140] will be in the fantasy/role-playing genre, the sort that contains classes like wizards and rangers and bards. Given that, we’ll represent each player’s character as a ref holding a map, which will contain all of the data relevant to the player’s character’s class and abilities. Regardless of their class, all characters will have a minimal set of attributes:

:name, the character’s name within the game.:health, a number indicating the character’s physical well-being. When:healthdrops to0, that character will be dead.:items, the set of equipment that a character is carrying.

Of course, specific character classes will have their own

attributes. character is a

function that implements all this, with default values for :items and :health:

(defn character

[name & {:as opts}]

(ref (merge {:name name :items #{} :health 500}

opts)))With this available, we can now define some actual characters that different players could control:[141]

(def smaug (character "Smaug" :health 500 :strength 400 :items (set (range 50))))(def bilbo (character "Bilbo" :health 100 :strength 100)) (def gandalf (character "Gandalf" :health 75 :mana 750))

In a game like this, if Bilbo and Gandalf were to defeat Smaug in a battle, they would be able to “loot” Smaug of the items he’s carrying. Without getting into gameplay details, all this means is that we want to take some item from Smaug and transfer it to another character. This transfer needs to occur so that the item being transferred is only in one place at a time from the perspective of any outside observers.

Enter Clojure’s STM and transactions. dosync establishes the scope of a transaction.[142] All modifications of refs must occur within

a transaction, the processing of which happens synchronously. That

is, the thread that initiates a transaction will “block” on that

transaction completing before proceeding in its execution.

Similar to atoms’ swap!, if

two transactions attempt to make a conflicting change to one or more

shared refs, one of them will retry. Whether two concurrently

applied transactions are in conflict depends entirely upon which

functions are used to modify refs shared between those transactions.

There are three such functions—alter, commute, and ref-set—each of which has different

semantics when it comes to producing (or avoiding) conflict.

With all that said, how do we implement looting of items among

characters in our game? The loot

function transfers one value from (:items

@from) to (:items @to)

transactionally, assuming each is a set,[143] and returns the new state of from:

Again, assuming Smaug is dead, we can cause Bilbo and Gandalf to loot his items:

(wait-futures 1

(while (loot smaug bilbo))

(while (loot smaug gandalf)))

;= nil

@smaug

;= {:name "Smaug", :items #{}, :health 500}

@bilbo

;= {:name "Bilbo", :items #{0 44 36 13 ... 16}, :health 500}

@gandalf

;= {:name "Gandalf", :items #{32 4 26 ... 15}, :health 500}Right, so Gandalf and Bilbo have now taken all of Smaug’s

items. The important point to notice is that the bilbo and gandalf characters divvied up Smaug’s loot

from different futures (therefore, threads), and that all the

looting occurred atomically: no items are unaccounted for, no item

references were duplicated, and at no point was an item owned by

multiple characters.

This was accomplished without the manual management of locks, and this process will scale to accommodate transactions involving far more refs and far more interleaving transactions applied by far more separate threads of execution.

Understanding alter

loot uses alter,

which is similar to swap!

insofar as it takes a ref, a function ƒ, and additional arguments

to that function. When alter

returns, the in-transaction value of the ref

in question will have been changed to the return of a call to ƒ,

with the ref’s value as the first argument, followed by all of the

additional arguments to alter.

The notion of an in-transaction value is an important one. All the functions that modify the state of a ref actually operate on a speculative timeline for the ref’s state, which starts for each ref when it is first modified. All later ref access and modification works on this separate timeline, which only exists and can only be accessed from within the transaction. When control flow is to exit a transaction, the STM attempts to commit it. In the optimistic case, this will result in the in-transaction, speculative states of each affected ref being installed as the refs’ new shared, non-transaction state, fully visible to the rest of the world. However, depending upon the semantics of the operation(s) used to establish those in-transaction values, any change made to the refs’ state outside of the transaction may conflict with the transaction’s modifications, resulting in the transaction being restarted from scratch.

Throughout this process, any thread of execution that is solely reading (i.e., dereferencing) refs involved in a transaction can do so without being blocked or waiting in any circumstance. Further, until a given transaction commits successfully, its changes will not affect the state of refs seen by readers outside of that transaction, including readers operating within the scope of entirely different transactions.

The unique semantic of alter is that, when the transaction is to be committed,

the value of the ref outside of the transaction must be the same

as it was prior to the first in-transaction application of

alter. Otherwise, the

transaction is restarted from the beginning with the new observed

values of the refs involved.

This dynamic can be visualized as the interaction between

two transactions, t1 and t2, which both

affect some shared ref a using

alter:

Even though t1 starts before

t2, its

attempt to commit changes to a

fails because t2 has already

modified it in the interim: the current state of a (a2) is different

than the state of a (a1) when it was

first modified within t1. This conflict

aborts the commit of any and all in-transaction modifications to

refs affected by t1 (e.g.,

x, y, z,

…). t1

then restarts, using up-to-date values for all of the refs it

touches.

Depicted and described this way, you can think of Clojure’s STM as a process that optimistically attempts to reorder concurrent change operations so they are applied serially. Unsurprisingly, the same semantics are found in the database world as well, often called serializable snapshot isolation (https://en.wikipedia.org/wiki/Serializability).

Minimizing transaction conflict with commute

Because it makes no assumptions about the

reorderability of the modifications made to affected refs,

alter is the safest mechanism

for effecting ref change. However, there are

situations where the modifications being made to refs can be

reordered safely; in such contexts, commute can be used in place of alter, potentially minimizing conflicts

and transaction retries and therefore maximizing total

throughput.

As its name hints, commute has to do with

commutative functions (https://en.wikipedia.org/wiki/Commutative_property)—those

whose arguments may be reordered without impacting results, such

as +, *, clojure.set/union…—but it doesn’t

mandate that the functions passed to it be commutative. What

really matters is that the function applications performed using

commute are reorderable without

violating program semantics. It follows that in such cases, it is

the final result of all commutable function applications that

matters, and not any intermediate results.

For example, although division is not commutative, it may be

often used with commute when

you are not concerned with intermediate results:

(= (/ (/ 120 3) 4) (/ (/ 120 4) 3)) ;= true

Thus, commute can be used

when the functional composition is commutative for the functions

involved:

(= ((comp #(/ % 3) #(/ % 4)) 120) ((comp #(/ % 4) #(/ % 3)) 120)) ;= true

Generally, commute should

only be used to apply changes to ref states where reordering of

that application is acceptable.

commute differs from

alter in two ways. First, the

value returned by alter on a

ref will be the committed value of this ref; in other words, the

in-transaction value is the eventual committed value. On the other

hand, the in-transaction value produced by commute is not guaranteed to be the

eventual committed value, because the commuted function will be applied again

at commit time with the latest value for the commuted ref.

Second, a change made to a ref by using commute will never

cause a conflict, and therefore never cause a transaction to

retry. This obviously has potentially significant performance and

throughput implications: transaction retries are fundamentally

rework and time that a thread is “blocked” waiting for a

transaction to complete successfully instead of moving on to its

next task.

We can demonstrate this very directly. Given some ref

x:

(def x (ref 0)) ;= #'user/x

We’ll beat on it with 10,000 transactions that do some small

amount of work (just obtaining the sum of some integers), and then

alter x’s value:

(time (wait-futures 5

(dotimes [_ 1000]

(dosync (alter x + (apply + (range 1000)))))

(dotimes [_ 1000]

(dosync (alter x - (apply + (range 1000)))))))

; "Elapsed time: 1466.621 msecs"At least some of the time taken to process these

transactions was spent in retries, thus forcing the resumming of

the integer sequence. However, the operations used with alter here (addition and subtraction)

can safely be used with commute:

(time (wait-futures 5

(dotimes [_ 1000]

(dosync (commute x + (apply + (range 1000)))))

(dotimes [_ 1000]

(dosync (commute x - (apply + (range 1000)))))))

; "Elapsed time: 818.41 msecs"Even though it applies the change function to the ref’s

value twice—once to set the in-transaction value (so x would have an updated value if we were

to refer to it again later in the transaction), and once again at

commit-time to make the “real” change to the (potentially

modified) value of x—our

cumulative runtime is cut nearly in half because commute will never retry.

commute is not magic

though: it needs to be used judiciously, or it can produce invalid

results. Let’s see what happens if we carelessly use commute instead of alter in the loot function from Example 4-2:

(defn flawed-loot

[from to]

(dosync

(when-let [item (first (:items @from))]

(commute to update-in [:items] conj item)

(commute from update-in [:items] disj item))))Let’s reset our characters and see what our new looting function does:

(def smaug (character "Smaug" :health 500 :strength 400 :items (set (range 50))))

(def bilbo (character "Bilbo" :health 100 :strength 100))

(def gandalf (character "Gandalf" :health 75 :mana 750))

(wait-futures 1

(while (flawed-loot smaug bilbo))

(while (flawed-loot smaug gandalf)))

;= nil

(map (comp count :items deref) [bilbo gandalf])

;= (5 48)

(filter (:items @bilbo) (:items @gandalf))

;= (18 32 1)Using the same checks from Example 4-3, we can see that

flawed-loot has produced some

problems: Bilbo has 5 items while Gandalf has 48 (with 18, 32, and 1 being the three duplicated items), a

situation that should never happen since Smaug started with 50.

What went wrong? In three instances, the same value was

pulled from Smaug’s set of :items and conjed into both Bilbo’s and Gandalf’s

:items. This is prevented in

the known-good implementation of loot because using alter properly guarantees that the

in-transaction and committed values will be identical.

In this peculiar case, we can safely

use commute to add the looted

item to the recipient’s set (since the order in which items are

added to the set is of no importance); it is the removal of the

looted item from its source that requires the use of alter:

(defn fixed-loot

[from to]

(dosync

(when-let [item (first (:items @from))]

(commute to update-in [:items] conj item)

(alter from update-in [:items] disj item))))

(def smaug (character "Smaug" :health 500 :strength 400 :items (set (range 50))))

(def bilbo (character "Bilbo" :health 100 :strength 100))

(def gandalf (character "Gandalf" :health 75 :mana 750))

(wait-futures 1

(while (fixed-loot smaug bilbo))

(while (fixed-loot smaug gandalf)))

;= nil

(map (comp count :items deref) [bilbo gandalf])

;= (24 26)

(filter (:items @bilbo) (:items @gandalf))

;= ()On the other hand, commute is perfect for other functions

in our game. For example, attack and heal functions are just going to be

incrementing and decrementing various character attributes, so

such changes can be made safely using commute:

(defn attack

[aggressor target]

(dosync

(let [damage (* (rand 0.1) (:strength @aggressor))]

(commute target update-in [:health] #(max 0 (- % damage))))))

(defn heal

[healer target]

(dosync

(let [aid (* (rand 0.1) (:mana @healer))]

(when (pos? aid)

(commute healer update-in [:mana] - (max 5 (/ aid 5)))

(commute target update-in [:health] + aid)))))With a couple of additional functions, we can simulate a player taking some action within our game:

Now we can have duels:

(wait-futures 1

(play bilbo attack smaug)

(play smaug attack bilbo))

;= nil

(map (comp :health deref) [smaug bilbo])  ;= (488.80755445030337 -12.0394908759935)

;= (488.80755445030337 -12.0394908759935)…or, “epic” battles:

(dosync(alter smaug assoc :health 500) (alter bilbo assoc :health 100)) (wait-futures 1 (play bilbo attack smaug) (play smaug attack bilbo) (play gandalf heal bilbo)) ;= nil (map (comp #(select-keys % [:name :health :mana]) deref) [smaug bilbo gandalf])

;= ({:health 0, :name "Smaug"} ;= {:health 853.6622368542827, :name "Bilbo"} ;= {:mana -2.575955687302212, :health 75, :name "Gandalf"})

Clobbering ref state with ref-set

ref-set will set the in-transaction state of a ref to the

given value:

(dosync (ref-set bilbo {:name "Bilbo"}))

;= {:name "Bilbo"}Just like alter, ref-set will provoke a retry of the

surrounding transaction if the affected ref’s state changes prior

to commit-time. Said differently, ref-set is semantically equivalent to using alter with a function that returns a

constant value:

(dosync (alter bilbo (constantly {:name "Bilbo"})))

; {:name "Bilbo"}Since this change is made without reference to the current

value of the ref, it is quite easy to change a ref’s value in a

way that is consistent with regard to the STM’s transactional

guarantees, but that violates application-level contracts. Thus,

ref-set is generally used only

to reinitialize refs’ state to starting values.

Enforcing local consistency by using validators

If you’ll notice, Bilbo has a

very high :health value at the end of Example 4-7. Indeed, there is no limit to how

high a character’s :health can

go, as a results of heals or other restorative actions.

These sorts of games generally do not allow a character’s health to exceed a particular level. However, from both a technical and management perspective—especially given a large team or codebase—it may be too onerous to guarantee that every function that might increase a character’s health would not produce a health “overage.” Such functions may, as part of their own semantics, attempt to avoid such an illegal condition, but we must be able to protect the integrity of our model separately. Maintaining local consistency like this—the C in “ACID”—in the face of concurrent changes is the job of validators.

We talked about validators already in Validators. Their use and semantics with refs is entirely the same as with other reference types, but their interaction with the STM is particularly convenient: if a validator function signals an invalid state, the exception that is thrown (just like any other exception thrown within a transaction) causes the current transaction itself to fail.

With this in mind, we should refactor our game’s

implementation details a bit. First, character should be changed so

that:

A common set of validators is added to every character.

Additional validators can be provided for each character, so as to enforce constraints related to a character’s class, level, or other status in the game:

(defn- enforce-max-health[name health] (fn [character-data] (or (<= (:health character-data) health) (throw (IllegalStateException. (str name " is already at max health!")))))) (defn character [name & {:as opts}] (let [cdata (merge {:name name :items #{} :health 500} opts) cdata (assoc cdata :max-health (:health cdata))

validators (list* (enforce-max-health name (:health cdata))

(:validator cdata))] (ref (dissoc cdata :validator) :validator #(every? (fn [v] (v %)) validators))))

enforce-max-healthreturns a function that accepts a character’s potential new state, throwing an exception if the new:healthattribute is above the character’s original health level.

We record the character’s initial

:healthas their:max-health, which will come in handy later.

In addition to always ensuring that a character’s maximum health is never exceeded, it is easy to allow individual characters to be created with their own additional set of validator functions…

…which can be easily rolled into the validation of their containing refs.

Now, no character can ever be healed past his original health level:

(def bilbo (character "Bilbo" :health 100 :strength 100))

;= #'user/bilbo

(heal gandalf bilbo)

;= #<IllegalStateException java.lang.IllegalStateException: Bilbo is already at max

health!>One limitation of validators is that they are strictly local; that is, their charter does not extend past ensuring that the next state held by a reference satisfies the constraints they check:

(dosync (alter bilbo assoc-in [:health] 95))

;= {:max-health 100, :strength 100, :name "Bilbo", :items #{}, :health 95, :xp 0}

(heal gandalf bilbo)

;= #<IllegalStateException java.lang.IllegalStateException: Bilbo is already at max

health!>Here, Bilbo’s :health is

set just short of his :max-health, so he really should be

heal-able. However, the implementation of heal does not yet take :max-health into account, and there is

no way for the relevant validator to “tweak” Bilbo’s new state to

suit its constraints—in this case, to make his :health the lesser of his :max-health or the sum of his current

:health and Gandalf’s heal amount. If validators

were allowed to make changes like this, then

it would be difficult to avoid introducing inconsistency into the

refs modified within a transaction. Validators exist solely to

maintain invariants within your model.

A tweak to heal is

warranted to ensure that “partial” heals are possible, up to a

character’s maximum health:

(defn heal

[healer target]

(dosync

(let [aid (min (* (rand 0.1) (:mana @healer))

(- (:max-health @target) (:health @target)))]

(when (pos? aid)

(commute healer update-in [:mana] - (max 5 (/ aid 5)))

(alter target update-in [:health] + aid)))))Now heal will improve a

character’s health up to his maximum health,

returning nil when the

character’s health is already at that level:

(dosync (alter bilbo assoc-in [:health] 95))

;= {:max-health 100, :strength 100, :name "Bilbo", :items #{}, :health 95}

(heal gandalf bilbo)

;= {:max-health 100, :strength 100, :name "Bilbo", :items #{}, :health 100}

(heal gandalf bilbo)

;= nilNote that our modification to target now potentially depends upon its

prior state, so we use alter

instead of commute. This isn’t

strictly required: perhaps you would be happy enough to have the

validator catch errant heals, which would happen only if some

other concurrently applied transaction also increased the health

of the target character. This points to a potential downside to

how we’ve modeled our characters, as all-encompassing bags of

state (maps in this case) held by a single ref: if concurrent

transactions modify unrelated parts of that state using alter, a transaction will retry

unnecessarily.[144]

The Sharp Corners of Software Transactional Memory

As we said at the beginning of this chapter, Clojure does not offer any silver bullet to solve the problem of concurrency. Its STM implementation may sometimes seem magical—and, compared to the typical alternatives involving manual lock management, it sorta is—but even the STM has its own sharp corners and rough edges of which you should be aware.

Side-effecting functions strictly verboten

The only operations that should ever be performed

within the scope of a transaction are things that are safe to

retry, which rules out many forms of I/O. For example, if you

attempt to write to a file or database inside a dosync block, you will quite possibly end up writing the

same data to the file or database multiple times.

Clojure can’t detect that you’re attempting to perform an

unsafe operation inside a transaction; it will happily and

silently retry those operations, perhaps with disastrous results.

For this reason, Clojure provides an io! macro, which will throw an error if

it is ever evaluated within a transaction. Thus, if you have a

function that may be used within a transaction, you can wrap the

side-effecting portion of its body in an io! form to help guard against

accidentally calling unsafe code:

(defn unsafe [] (io! (println "writing to database..."))) ;= #'user/unsafe (dosync (unsafe)) ;= #<IllegalStateException java.lang.IllegalStateException: I/O in transaction>

Warning

As a corollary, operations on atoms should generally be considered side-effecting,

insofar as swap!, et al., do

not participate in the STM’s transactional semantics. Thus, if a

transaction is retried three times, and it contains a swap! call, swap! will be invoked three times and

the affected atom will be modified three times…rarely what you

want, unless you’re using an atom to count transaction

retries.

Note also that the values held by refs must be immutable.[145] Clojure isn’t going to stop you from putting mutable objects into a ref, but things like retries and the usual foibles associated with mutability will likely result in undesirable effects:

(def x (ref (java.util.ArrayList.)))

;= #'user/x

(wait-futures 2 (dosync (dotimes [v 5]

(Thread/sleep (rand-int 50))  (alter x #(doto % (.add v))))))

;= nil

@x

;= #<ArrayList [0, 0, 1, 0, 2, 3, 4, 0, 1, 2, 3, 4]>

(alter x #(doto % (.add v))))))

;= nil

@x

;= #<ArrayList [0, 0, 1, 0, 2, 3, 4, 0, 1, 2, 3, 4]>

Minimize the scope of each transaction

Remember from the discussion around Figure 4-2 that the STM’s job is to ensure that all of the work encapsulated as transactions be applied to affected refs in a serial fashion, reordering that work and those ref state changes if necessary. This implies that, the shorter each transaction is, the easier it will be for the STM to schedule that transaction, thus leading to faster application and higher total throughput.

What happens if you have out-sized transactions, or

transactions with a mix of scopes and scales? In general, the

largest transactions will be delayed (along with whatever else the

thread waiting on that transaction would otherwise be doing).

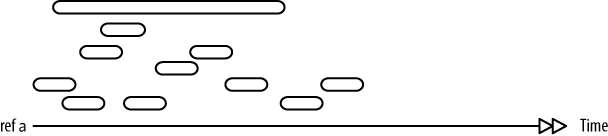

Consider a bunch of transactions, all affecting some ref a:

Assuming each of them is altering a, the execution of those transactions

will be retried until they can be applied serially. The

longest-running transaction will end up being retried repeatedly,

with the likely result that it will be delayed until a long enough

slot opens up in the contended ref’s timeline for it to

fit:

Note

Remember that commute (discussed in Minimizing transaction conflict with commute) does not provoke change

conflicts and retries. Therefore, if you can use it safely with

the change functions applicable to your state’s domain, you will

effectively sidestep any potential hazards associated with

long-running transactions.

Doing a lot of time-consuming computation can result in a long-running transaction, but so can retries prompted by contention over other refs. For example, the long-running transaction depicted above may be performing some complex computation, which may need to be restarted repeatedly due to contention over another ref. Thus, you should aim to minimize the scope of transactions in general as much as possible both in terms of the computational runtime involved and in the number of affected refs.

Live lock. You might wonder: what happens if, particularly in times of heavy load, a large transaction never gets a chance to commit due to ref contention? This is called live lock, the STM equivalent to a deadlock, where the thread(s) driving the transactions involved are blocked indefinitely attempting to commit their respective transactions. Without suitable fallbacks, and we’d be no better off than if we were manually managing locks and causing our own deadlocks!

Thankfully, Clojure’s STM does have a couple of fallbacks. The first is called barging, where an older transaction is allowed to proceed in certain circumstances, forcing newer transactions to retry. When barging fails to push through the older transaction in a reasonable amount of time, the STM will simply cause the offending transaction to fail:

(def x (ref 0)) ;= #'user/x (dosync @(future (dosync (ref-set x 0))) (ref-set x 1)) ;= #<RuntimeException java.lang.RuntimeException: ;= Transaction failed after reaching retry limit> @x ;= 0

The transaction running in the REPL thread above always starts a new future, itself running a transaction that modifies the state of the contended ref. Dereferencing that future ensures that the REPL thread’s transaction waits until the future’s transaction has completed, thus ensuring a retry—and therefore the spawning of a new future, and so on.

Clojure’s STM will permit a transaction to retry only so many times before throwing an exception. An error thrown with a stack trace you can examine is infinitely better than an actual deadlock (or live lock), where the only solution is to forcibly kill the application’s process with little to no information about the problem’s locale.

Readers may retry

In the case of reference types, deref is guaranteed to never block.

However, inside a transaction dereferencing a ref may trigger a transaction

retry!

This is because, if a new value is committed by another transaction since the beginning of the current transaction, the value of the ref as of the start of the transaction cannot be provided.[146] Helpfully, the STM notices this problem and maintains a bounded history of the states of refs involved in a transaction, where the size of the history is incremented by each retry. This increases the chance that—at some point—the transaction won’t have to retry anymore because, while the ref is concurrently updated, the desired value is still present in the history.

History length can be queried (and tuned) with

ref-history-count, ref-max-history, and ref-min-history. Minimum and maximum history sizes can also be

specified when a ref is created by using the named arguments

:min-history and :max-history:

(ref-max-history (ref "abc" :min-history 3 :max-history 30)) ;= 30

This allows you to potentially tune a ref to suit expected workloads.

Retries on deref

generally occur in the context of read-only transactions, which

attempt to snapshot a lot of very active refs. We can visualize

this behavior with a single ref and a slow reading

transaction:

(def a (ref 0)) (future (dotimes [_ 500] (dosync (Thread/sleep 200) (alter a inc)))) ;= #<core$future_call$reify__5684@10957096: :pending> @(future (dosync (Thread/sleep 1000) @a)) ;= 28(ref-history-count a) ;= 5

So, the a ref has grown

its history to accommodate the needs of the slow reading

transaction. What happens if the writes occur even faster?

(def a (ref 0)) (future (dotimes [_ 500] (dosync (Thread/sleep 20) (alter a inc)))) ;= #<core$future_call$reify__5684@10957096: :pending> @(future (dosync (Thread/sleep 1000) @a)) ;= 500 (ref-history-count a) ;= 10

This time the history has been maxed out and the reader transaction has only been executed after all the writers. This means that the writers blocked the reader in the second transaction. If we relax the max history, the problem should be fixed:

(def a (ref 0 :max-history 100)) (future (dotimes [_ 500] (dosync (Thread/sleep 20) (alter a inc)))) ;= #<core$future_call$reify__5684@10957096: :pending> @(future (dosync (Thread/sleep 1000) @a)) ;= 500 (ref-history-count a) ;= 10

It didn’t work because by the time there’s enough history, the writers are done. So, the key is to set the minimum history to a good value:

(def a (ref 0 :min-history 50 :max-history 100))(future (dotimes [_ 500] (dosync (Thread/sleep 20) (alter a inc)))) @(future (dosync (Thread/sleep 1000) @a)) ;= 33

This time the reader transaction completes quickly and successfully with no retry!

Write skew

Clojure’s STM provides for the transactional

consistency of ref state, but so far we’ve only seen that to be

the case for refs that are modified by the transactions involved.

If a ref isn’t modified by a transaction, but the consistency of

that transaction’s changes depend upon the state of a ref that is

read but not modified, there is no way for the STM to know about

this through calls to alter,

commute, and set-ref. If the read ref’s state happens

to change mid-transaction, that transaction’s effects on other

refs may end up being inconsistent with the read ref; this state

of affairs is called write skew.

Such a circumstance is rare; generally, refs involved in a

transaction are all being modified in some way. However, when

that’s not the case, ensure may

be used to prevent write skew: it is a way to dereference a ref

such that that read will conflict with any modifications prompted

by other transactions, causing retries as necessary.

An example of this within the game’s context might be the

current amount of daylight. It’s safe to say that attacks made

with the benefit of mid-day sun will be more successful than those

made at night, so a modification to attack to take into consideration the

current amount of daylight would make sense:

(def daylight (ref 1))

(defn attack

[aggressor target]

(dosync

(let [damage (* (rand 0.1) (:strength @aggressor) @daylight)]

(commute target update-in [:health] #(max 0 (- % damage))))))However, if the state of daylight is changed between the time it

is read within a transaction and when that transaction commits its

changes, those changes may be inconsistent. For example, a