Chapter 1. Introduction

Cloud Run is a platform on Google Cloud that lets you build scalable and reliable web-based applications. As a developer, you can get very close to being able to just write your code and push it, and then let the platform deploy, run, and scale your application for you.

The public cloud has given developers and businesses the opportunity to replace physical servers and data centers with virtual ones, greatly decreasing lead time and turning big, up-front investments into ongoing operational expenses. For most businesses, this is already a great step forward.

However, virtual machines and virtual networks are still a relatively low-level abstraction. You can take an even bigger leap if you design your application to take full advantage of the modern cloud platform. Cloud Run provides a higher level of abstraction over the actual server infrastructure and allows you to focus on code, not infrastructure.

Using the higher-level abstraction that Cloud Run provides doesn’t mean you tie yourself to Google Cloud forever. First, Cloud Run requires your application to be packaged in a container—a portable way to deploy and run your application. If your container runs on Cloud Run, you can also run it on your own server, using Docker, for instance. Second, the Cloud Run platform is based on the open Knative specification, which means you can migrate your applications to another vendor or your own hardware with limited effort.

Serverless Applications

You probably bought this book because you are interested in building a serverless application. It makes sense to be specific about what application means, because it is a very broad term; your phone runs applications, and so does a server. This book is about web-based applications, which receive requests (or events) over HTTPS and respond to them.

Examples of web-based applications include the sites you interact with using a web browser and APIs you can program against. These are the two primary use cases I focus on in this book. You can also build event processing pipelines and workflow automation with Cloud Run.

One thing I want to emphasize is that when I say HTTP, I refer to the entire family of HTTP protocols, including HTTP/2 (the more advanced and performant version). If you are interested in reading more about the evolution of HTTP, I suggest the well-written overview of the topic on MDN.

Now that I have scoped down what “application” means in the context of this book, let’s take a look at serverless. If you use serverless components to build your application, your application is serverless. But what does serverless mean? It’s an abstract and overloaded term that means different things to different people.

When trying to understand serverless, you shouldn’t focus too much on the “no servers” part—it’s more than that. In general, this is what I think people mean when they call something serverless and why they are excited about it:

It simplifies the developer experience by eliminating the need to manage infrastructure.

It’s scalable out of the box.

Its cost model is “pay-per-use”: you pay exactly for what you use, not for capacity you reserve up front. If you use nothing, you pay nothing.

In the next sections, I’ll explore these three aspects of serverless in more depth.

A Simple Developer Experience

Eliminating infrastructure management means you can focus on writing your code and have someone else worry about deploying, running, and scaling your application. The platform will take care of all the important and seemingly simple details that are surprisingly hard to get right, like autoscaling, fault tolerance, logging, monitoring, upgrades, deployment, and failover.

One thing you specifically don’t have to do in the serverless context is infrastructure management. The platform offers an abstraction layer. This is the primary reason we call it serverless.

When you are running a small system, infrastructure management might not seem like a big deal, but readers who manage more than 10 servers know that this can be a significant responsibility that takes a lot of work to get right. Here is an incomplete list of tasks you no longer need to perform when you run your application logic on a serverless platform:

Provisioning and configuring servers (or setting up automation)

Applying security patches to your servers

Configuring networking and firewalls

Setting up SSL/TLS certificates, updating them yearly, and configuring a web server

Automating application deployment on a cluster of servers

Building automation that can handle hardware failures transparently

Setting up logging and metrics monitoring to provide insights into system performance

And that’s just about servers! Most businesses have higher and higher expectations for system availability; more than 30 minutes of downtime per month is generally unacceptable. To reach these levels of availability, you will need to automate your way out of every failure mode, because there is not enough time for manual troubleshooting. As you can imagine, this is a lot of work and leads to more complexity in your infrastructure. A serverless platform takes over much of that work. As a side benefit, if you build software in an enterprise environment, you’ll have an easier time getting approvals from security and operations teams because a lot of their responsibilities shift to the vendor.
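To put the 30-minute figure in perspective, it helps to convert it into an availability percentage. The following is a small arithmetic sketch; the 30-day month and the function names are my own assumptions for illustration.

```python
# Convert between a monthly downtime budget and an availability percentage.
# Assumption: a month is counted as 30 days.
MINUTES_PER_MONTH = 30 * 24 * 60  # 43,200 minutes


def availability_percent(downtime_minutes: float,
                         period_minutes: float = MINUTES_PER_MONTH) -> float:
    """Return the share of the period the system was up, as a percentage."""
    return 100.0 * (1 - downtime_minutes / period_minutes)


def downtime_budget_minutes(target_percent: float,
                            period_minutes: float = MINUTES_PER_MONTH) -> float:
    """Return how many minutes of downtime a given availability target allows."""
    return period_minutes * (1 - target_percent / 100.0)


print(round(availability_percent(30), 2))  # prints 99.93
```

In other words, 30 minutes of downtime per month corresponds to roughly 99.93% availability, which leaves very little room for manual intervention.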

Availability is also related to software deployments now that it is more common to deploy new software versions on a daily basis instead of monthly. When you deploy your application, you don’t want to experience downtime, even when the deployment fails.

Serverless technology helps you focus on solving your business problems and building a great product while someone else worries about the fundamentals of running your app. This sounds very convenient, but you shouldn’t take this to mean that all your responsibilities disappear. Most importantly, you still need to write and patch your code and make sure it is secure and correct. There is still some configuration you need to manage, too, like setting resource requirements, adding scaling boundaries, and configuring access policies.

Autoscalable Out of the Box

Serverless products are built to increase and decrease their capacity automatically, closely tracking demand. The scale of the cloud ensures that your application will be able to handle almost any load you throw at it. A key feature of serverless is that it shows stable and consistent performance, regardless of scale.

One of our clients runs a fairly popular soccer site in the Netherlands. The site has a section that shows live scores, which means they experience peak loads during matches. When a popular match comes up, they provision more servers and add them to the instance pool. Then, when the match is over, they remove the virtual machines again to save costs. This has generally worked well for them, and they saw little reason to change things.

However, they were not prepared when one of our national clubs suddenly did very well in the UEFA Champions League. Contrary to all expectations, this club reached the semifinals. While all soccer fans in the Netherlands were uniting in support of the team, our client experienced several outages, which couldn’t be solved by adding more servers.

The point is that, while you might not feel the drawbacks of a serverful system right now, you might need the scalability benefits of serverless in the future when you need to handle unforeseen circumstances. Most systems have the tendency to scale just fine until they hit a bottleneck and performance suddenly degrades. The architecture of Cloud Run provides you with guardrails that help you avoid common mistakes and build more scalable applications by default.

A Different Cost Model

The cost model of serverless is different: you pay for actual usage only, not for the preallocation of capacity. When you are not handling requests on a serverless platform, you pay nothing. On Cloud Run, you pay for the system resources you use to handle a request with a granularity of 100 ms and a small fee for every million requests. Pay-per-use can also apply to other types of products. With a serverless database, you pay for every query and for the data you store.
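A rough sketch of how such a pay-per-use bill adds up might look like this. The prices are hypothetical placeholders rather than actual Cloud Run rates, and I assume that each request is billed on its own and rounded up to the next 100 ms increment. The sketch ignores free tiers and the fact that one container can serve several requests at once.

```python
import math

BILLING_INCREMENT_MS = 100  # billing granularity mentioned in the text


def billable_ms(duration_ms: float) -> int:
    """Round a request duration up to the next 100 ms increment (assumption)."""
    return math.ceil(duration_ms / BILLING_INCREMENT_MS) * BILLING_INCREMENT_MS


def monthly_cost(requests: int,
                 avg_duration_ms: float,
                 price_per_resource_second: float,
                 price_per_million_requests: float) -> float:
    """Estimate a monthly bill from request volume and average duration.

    Both prices are hypothetical inputs; look up real rates before relying
    on the result.
    """
    resource_seconds = requests * billable_ms(avg_duration_ms) / 1000
    resource_cost = resource_seconds * price_per_resource_second
    request_cost = requests / 1_000_000 * price_per_million_requests
    return resource_cost + request_cost
```

With a made-up rate of 0.00003 per resource-second and 0.40 per million requests, 2 million requests averaging 130 ms are each billed as 200 ms. That is 400,000 resource-seconds, or 12.00, plus 0.80 in request fees, for a total of about 12.80. With zero requests, the result is zero.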

I present this with a caveat: I am not claiming that serverless is cheap. While most early adopters seem to experience a cost reduction, in some cases, serverless might turn out to be more expensive. One of these cases is when you currently manage to utilize close to 100% of your server capacity all of the time. I think this is very rare; utilization rates of 20 to 40% are much more common. That’s a lot of idle servers that you are paying for.

The serverless cost model provides the vendor with an incentive to make sure your application scales fast and is always available. They have skin in the game.

This is how that works: you pay for the resources you actually use, which means your vendor wants to make sure your application handles every request that comes in. As soon as your vendor drops a request, they potentially fail to monetize their server resources.

Serverless Is Not Functions as a Service

People often associate serverless with functions as a service (FaaS) products such as Cloud Functions or AWS Lambda. With FaaS, you typically use a function as “glue code” to connect and extend existing Google Cloud services. Functions use a runtime framework: you deploy a small snippet of code, not a container. In the snippet of code, you implement only one function or override a method, which handles an incoming request or an event. You’re not starting an HTTP server yourself.

FaaS is serverless because it has a simple developer experience—you don’t need to worry about the runtime of your code (other than configuring the programming language) or about creating and managing the HTTPS endpoint. Scaling is built in, and you pay a small fee per one million requests.

As you will discover in this book, Cloud Run is serverless, but it has more capabilities than a FaaS platform. Serverless is also not limited to handling HTTPS requests. The other primitives you use to build your application can be serverless as well. Before I give an overview of the other serverless products on Google Cloud, it’s now time to introduce Google Cloud itself.

Google Cloud

Google Cloud started in 2008 when Google released App Engine, a serverless application platform. App Engine was serverless before we started using the word serverless. However, back then, the App Engine runtime had a lot of limitations, which in practice meant that it was only suitable for new projects. Some people loved it, some didn’t. Despite notable customer success stories such as Snapchat and Spotify, App Engine got limited traction in the market.

Prompted by this lukewarm reaction to App Engine and a huge market demand for virtual server infrastructure, Google Cloud released Compute Engine in 2012. (That’s a solid six years after Amazon launched EC2, the product that runs virtual machines on AWS.) This leads me to believe that the Google mindset has always been serverless.

Here’s another perspective: a few years ago, Google published a paper about Borg, the global container infrastructure on which they run most of their software, including Google Search, Gmail, and Compute Engine (that’s how you run virtual machines).1 Here’s how they describe it (emphasis mine):

“Borg provides three main benefits: it (1) hides the details of resource management and failure handling so its users can focus on application development instead; (2) operates with very high reliability and availability, and supports applications that do the same; and (3) lets us run workloads across tens of thousands of machines effectively.”

Let this sink in for a bit: Google has been working on planet-scale container infrastructure since at least 2005, based on the few public accounts on Borg. A primary design goal of the Borg system is to allow developers to focus on application development instead of the operational details of running software and to scale to tens of thousands of machines. That’s not just scalable, it’s hyper-scalable.

If you were developing and running software at the scale that Google does, would you want to be bothered with maintaining a serverful infrastructure, let alone worry about basic building blocks like IP addresses, networks, and servers?

By now it should be clear that I like to work with Google Cloud. That’s why I’m writing a book about building serverless applications with Google Cloud Run. I will not be comparing it with other cloud vendors, such as Amazon and Microsoft, because I lack the deep expertise in other cloud platforms that would be required to make such a comparison worth reading. However, rest assured, the general application design principles you will pick up in this book do translate well to other platforms.

Serverless on Google Cloud

Take a look at Table 1-1 for an overview of Google Cloud serverless products that relate to application development. I’ve also noted information on each one’s open source compatibility to indicate if there are open source alternatives you can host on a different provider. This is important, because vendor tie-in is the number-one concern with serverless. Cloud Run does well on this aspect because it is API compatible with an open source product.

| Product | Purpose | Open source compatibility |
|---|---|---|
| Messaging | | |
| | Events | Proprietary1 |
| | Delayed tasks | Proprietary1 |
| | Scheduled tasks | Proprietary1 |
| | Events | OSS compatible |
| Compute | | |
| Cloud Run | Container platform | OSS compatible |
| App Engine | Application platform | Proprietary2 |
| Cloud Functions | Connecting existing Google Cloud services | Proprietary2 |
| Data Storage | | |
| Cloud Storage | Blob storage | Proprietary3 |
| | Key-value store | Proprietary |

Notably missing from the serverless offering on Google Cloud is a relational database. I think there are a lot of applications out there for which using a relational database makes a lot of sense, and most readers are already familiar with this form of application architecture. This is why I’ll show you how to use Cloud SQL, a managed relational database, in Chapter 4. As a managed service, it provides many of the benefits of serverless but does not scale automatically. In addition, you pay for the capacity you allocate, even if you do not use it.

Cloud Run

Cloud Run is a serverless platform that runs and scales your container-based application. It is implemented directly on top of Borg, the hyper-scalable container infrastructure that Google uses to power Google Search, Maps, Gmail, and App Engine.

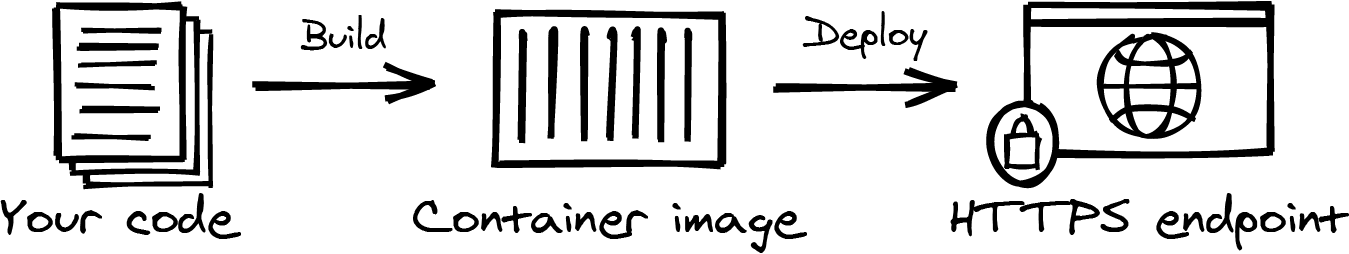

The developer workflow is a straightforward three-step process. First, you write your application using your favorite programming language. Your app should start an HTTP server. Second, you build and package your application into a container image. The third and final step is to deploy the container image to Cloud Run. Cloud Run creates a service resource and returns an invokable HTTPS endpoint to you (Figure 1-1).

Figure 1-1. Cloud Run developer workflow
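To make the first step of this workflow concrete, here is a minimal sketch of an app that starts an HTTP server, using only the Python standard library. It assumes the platform passes the port to listen on in a `PORT` environment variable and falls back to 8080 for local runs. The handler name and greeting are illustrative only.

```python
import os
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Answers every GET request with a short plain-text greeting."""

    def do_GET(self):
        body = b"Hello from a container!\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int) -> ThreadingHTTPServer:
    # Bind to all interfaces so traffic from outside the container
    # can reach the server.
    return ThreadingHTTPServer(("0.0.0.0", port), HelloHandler)


def main() -> None:
    # Assumption: the port arrives in the PORT environment variable;
    # 8080 is used when the variable is not set.
    port = int(os.environ.get("PORT", "8080"))
    make_server(port).serve_forever()

# main() is left uncalled here so the module can be imported without
# blocking; the container's start command would call it.
```

Packaging this file into a container image and deploying that image are the second and third steps. The platform then takes care of HTTPS in front of the plain HTTP server.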

Service

I want to pause here and direct your attention to the word service, because this is a key concept in Cloud Run. Every service has a unique name and an associated HTTPS endpoint. You’ll interact primarily with a service resource to perform your tasks, such as deploying a new container image, rolling back to a previously deployed version, and changing configuration settings like environment variables and scaling boundaries.

Container Image

Depending on how far you are in your learning journey, you might not know what a container image is. If you do know what it is, you might associate container technology with a lot of low-level infrastructure overhead that you are not remotely interested in learning. I want to reassure you: you don’t need to become a container expert in order to be productive with Cloud Run. Depending on your use case, you might never have to build a container image yourself. However, understanding what containers are and how they work is certainly helpful. I’ll cover containers from first principles in Chapter 3.

For now, it’s enough to understand that a container image is a package that contains your application and everything it needs to run. The mental model that “a container image contains a program you can start” works well for understanding this chapter.

Cloud Run is not just a convenient way to launch a container. The platform has additional features that make it a suitable platform for running a major production system. The rest of this section will give you an overview of those features and help you understand some of Cloud Run’s most important aspects. Then, in Chapter 2, I’ll explore Cloud Run in depth.

Scalability and Self-Healing

Incoming requests to your Cloud Run service will be served by your container. If necessary, Cloud Run will add instances of your container to handle all incoming requests. If a single container instance fails, Cloud Run will replace it with a new one. By default, Cloud Run can add up to a thousand container instances per service, and you can raise this limit via a quota increase. The scale of the cloud really works with you here. If you need to scale out to tens of thousands of containers, that might be possible: the architecture of Cloud Run is designed to be limited only by the available capacity in a given Google Cloud region (a physical datacenter).

HTTPS Serving

By default, Cloud Run automatically creates a unique HTTPS endpoint you can use to reach your container. You can also use your own domain or hook your service into the virtual networking stack of Google Cloud if you need more advanced integrations with existing applications running on-premises or on virtual machines on Google Cloud, or with features like DDoS mitigation and a Web Application Firewall.

You can also create internal Cloud Run services that are not publicly accessible. These are well suited to receiving events from other Google Cloud products, for example, when a file is uploaded to a Cloud Storage bucket.

Microservices Support

Cloud Run supports the microservices model where you break your application into smaller services that communicate using API calls or event queues. This can help you scale your engineering organization, and can possibly lead to improved scalability. I cover an example of multiple services working together in Chapter 6.

Identity, Authentication, and Access Management

Every Cloud Run service has an assigned identity, which you can use to call Cloud APIs and other Cloud Run services from within the container. You can set up access policies that govern which identities can invoke your service.

Especially if you are building a more serious application, an identity system is important. You’ll want to make sure that every Cloud Run service only has the permissions to do what it is supposed to do (principle of least privilege) and that the service can only be invoked by the identities that are supposed to invoke it.

For example, if your payment service gets a request to confirm a payment, you want to know who sent that request, and you want to be sure that only the payment service can access the database that stores payment data. In Chapter 6, I explore service identity and authentication in more depth.
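As a rough illustration of how one service can prove its identity to another, the sketch below asks the Google Cloud metadata server for an ID token and sends it as a bearer token. It is my own minimal example and not necessarily the approach Chapter 6 takes. The function names and the receiving service URL are hypothetical, and the token request only works from inside Google Cloud.

```python
import urllib.parse
import urllib.request

# Metadata server endpoint that issues ID tokens for the service identity.
METADATA_IDENTITY_URL = (
    "http://metadata.google.internal/computeMetadata/v1/"
    "instance/service-accounts/default/identity"
)


def build_identity_token_request(audience: str) -> urllib.request.Request:
    """Build the request that asks for an ID token meant for `audience`."""
    query = urllib.parse.urlencode({"audience": audience})
    return urllib.request.Request(
        f"{METADATA_IDENTITY_URL}?{query}",
        headers={"Metadata-Flavor": "Google"},  # required by the metadata server
    )


def build_authenticated_request(service_url: str,
                                id_token: str) -> urllib.request.Request:
    """Attach the ID token so the receiving service can verify the caller."""
    return urllib.request.Request(
        service_url, headers={"Authorization": f"Bearer {id_token}"}
    )


def call_service(service_url: str) -> bytes:
    """Fetch a token for `service_url`, then call it (needs Google Cloud)."""
    token_request = build_identity_token_request(service_url)
    with urllib.request.urlopen(token_request, timeout=5) as resp:
        token = resp.read().decode("utf-8")
    request = build_authenticated_request(service_url, token)
    with urllib.request.urlopen(request, timeout=10) as resp:
        return resp.read()
```

The receiving service only accepts the call if an access policy allows the caller's identity to invoke it, which is how the "who sent that request" question gets answered.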

Monitoring and Logging

Cloud Run captures standard container and request metrics, like request duration and CPU usage, and your application logs are forwarded to Cloud Logging. If you use structured logs, you can add metadata that will help you debug issues in production. In Chapter 9, I will get to the bottom of structured logs with a practical example.
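A structured log can be as simple as one JSON object per line on standard output. The sketch below assumes that Cloud Logging picks up the `severity` and `message` fields from such lines. The helper names and the extra `order_id` field in the usage example are hypothetical.

```python
import json
import sys


def format_log_entry(message: str, severity: str = "INFO", **fields) -> str:
    """Serialize a log entry as a single-line JSON object."""
    entry = {"severity": severity, "message": message, **fields}
    return json.dumps(entry)


def log(message: str, severity: str = "INFO", **fields) -> None:
    """Write the entry to stdout, where the platform can collect it."""
    print(format_log_entry(message, severity, **fields),
          file=sys.stdout, flush=True)
```

Calling `log("payment confirmed", order_id="A-123")` writes a line with the message, the severity, and the order ID as separate fields, so you can filter on the metadata later instead of parsing free text.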

Transparent Deployments

I don’t know about you, but personally, having to endure slow or complicated deployments raises my anxiety. I’d say watching a thriller movie is comparable. You can double that if I need to perform a lot of orchestration on my local machine to get it right. On Cloud Run, deployments and configuration changes don’t require a lot of hand-holding, and more advanced deployment strategies such as a gradual rollout or blue-green deployments are supported out of the box. If you make sure your app is ready for new requests within seconds after starting the container, you will reduce the time it takes to deploy a new version.

Pay-Per-Use

Cloud Run charges you for the resources (CPU and memory) your containers use when they serve requests. Even if Cloud Run, for some reason, decides to keep your container running when it is not serving requests, you will not pay for it. Surprisingly enough, Cloud Run keeps those idling containers running much longer than you would expect. I’ll explain this in more detail in Chapter 2.

Concerns About Serverless

So far, I’ve only highlighted the reasons why you would want to adopt serverless. But you should be aware that, while serverless solves certain problems, it also creates new ones. Let’s talk about what you should be concerned about. What risks do you take when adopting serverless, and what can you do about them?

Unpredictable Costs

The cost model is different with serverless, and this means the architecture of your system has a more direct and visible influence on the running costs of your system. Additionally, with rapid autoscaling comes the risk of unpredictable billing. If you run a serverless system that scales elastically, then when big demand comes, it will be served, and you will pay for it. Just like performance, security, and scalability, costs are now a quality aspect of your code that you need to be aware of and that you as a developer can control.

Don’t let this paragraph scare you: you can set boundaries to scaling behavior and fine-tune the amount of resources that are available to your containers. You can also perform load testing to predict cost.

Hyper-Scalability

Cloud Run can scale out to a lot of container instances very quickly. This can cause trouble for downstream systems that cannot increase capacity as quickly or that have trouble serving a highly concurrent workload, such as traditional relational databases and external APIs with enforced rate limits. In Chapter 4, I will investigate how you can protect your relational database from too much concurrency.
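One simple way to reason about this risk is to work out how many container instances a database can tolerate. The following back-of-the-envelope sketch assumes every instance holds a fixed-size connection pool. The function and parameter names are mine, and the technique in Chapter 4 may differ.

```python
def max_safe_instances(db_connection_limit: int,
                       pool_size_per_instance: int,
                       reserved_connections: int = 0) -> int:
    """Return how many instances fit within the database connection limit.

    reserved_connections are kept free for other clients, such as
    administrative tools or batch jobs.
    """
    if pool_size_per_instance <= 0:
        raise ValueError("pool_size_per_instance must be positive")
    usable = db_connection_limit - reserved_connections
    return max(usable // pool_size_per_instance, 0)


# Example: 100 connections, 10 of them reserved, 5 connections per instance.
print(max_safe_instances(100, 5, reserved_connections=10))  # prints 18
```

The result is a candidate upper scaling boundary for the service: beyond that number of instances, new containers would compete for connections the database cannot provide.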

When Things Go Really Wrong

When you run your software on top of a platform you do not own and control, you are at the mercy of your provider when things go really wrong. I don’t mean when your code has an everyday bug that you can easily spot and fix. By “really wrong,” I mean when, for example, your software breaks in production and you have no way to reproduce the fault when you run it locally because the bug is really in the vendor-controlled platform.

On a traditional server-based infrastructure, when you are confronted with an error or performance issue you can’t explain, you can take a look under the hood because you control the entire stack. There is an abundance of tools that can help you figure out what is happening, even in production. On a vendor-controlled platform, all you can do is file a support ticket.

Separation of Compute and Storage

If you use Cloud Run, any data that needs to persist must be stored externally, in a database or on blob storage. This is called separation of compute and storage, and it is how Cloud Run realizes scalability. However, for workloads that benefit from fast, random access to a lot of data (more than fits in RAM), this can add latency to request processing. This is mitigated in part by the internal networking inside a Google Cloud datacenter, which is generally very fast with low latency.

Open Source Compatibility

Portability is important—you want to be able to migrate your application from one vendor to another, without being confronted with great challenges. While I was writing this chapter, the CIOs of a hundred large companies in the Netherlands raised the alarm about their software suppliers changing terms and conditions unilaterally, then imposing audits and considerable extra charges on the companies. I think this illustrates perfectly why it is important to “have a way out” and make sure a supplier never gets that kind of leverage. Even though you trust Google today, you never know what the future holds.

There are two reasons why Cloud Run is portable. First, it’s container based. If a container runs on Cloud Run, it can run on any container engine, anywhere. Second, if you’ve built a complex distributed application with a lot of Cloud Run services that work together, you might be concerned about the portability of the platform itself. Cloud Run is API compatible with the Knative open source product. This means that, with limited effort, you can migrate from Cloud Run to a self-hosted Kubernetes cluster (the open source container platform) with Knative installed.2 Kubernetes is the de facto standard when you want to deploy containers on a cluster of servers.

In your own datacenter, you are in full control of your infrastructure, but your developers still have a “serverless” developer experience if you run Knative. In Chapter 10, I explore Knative in more detail.

Summary

In this first chapter, you learned what serverless actually means and what its key characteristics are. You also got an overview of Google Cloud and saw that serverless is not limited to applications that handle HTTPS requests. Databases and task queues can be serverless, too.

Serverless is great for you if you value a simple and fast developer experience, and when you don’t want to build and maintain traditional server infrastructure. The servers are still there—you just don’t have to manage them anymore.

Your application will still be portable if you choose Cloud Run. It is container based, and the Cloud Run platform itself is based on the open Knative specification.

You might still have a lot of questions after reading this first chapter. That’s a good thing! As you join me on this serverless journey, I encourage you to forget everything you’ve ever learned about managing server infrastructure.

1 Abhishek Verma et al., “Large-scale cluster management at Google with Borg,” Proceedings of EuroSys (2015).

2 Be careful not to tie your application source code too closely to Google Cloud services that have no OSS alternatives, or you could lose the portability benefit.
