Chapter 1. LLM Applications

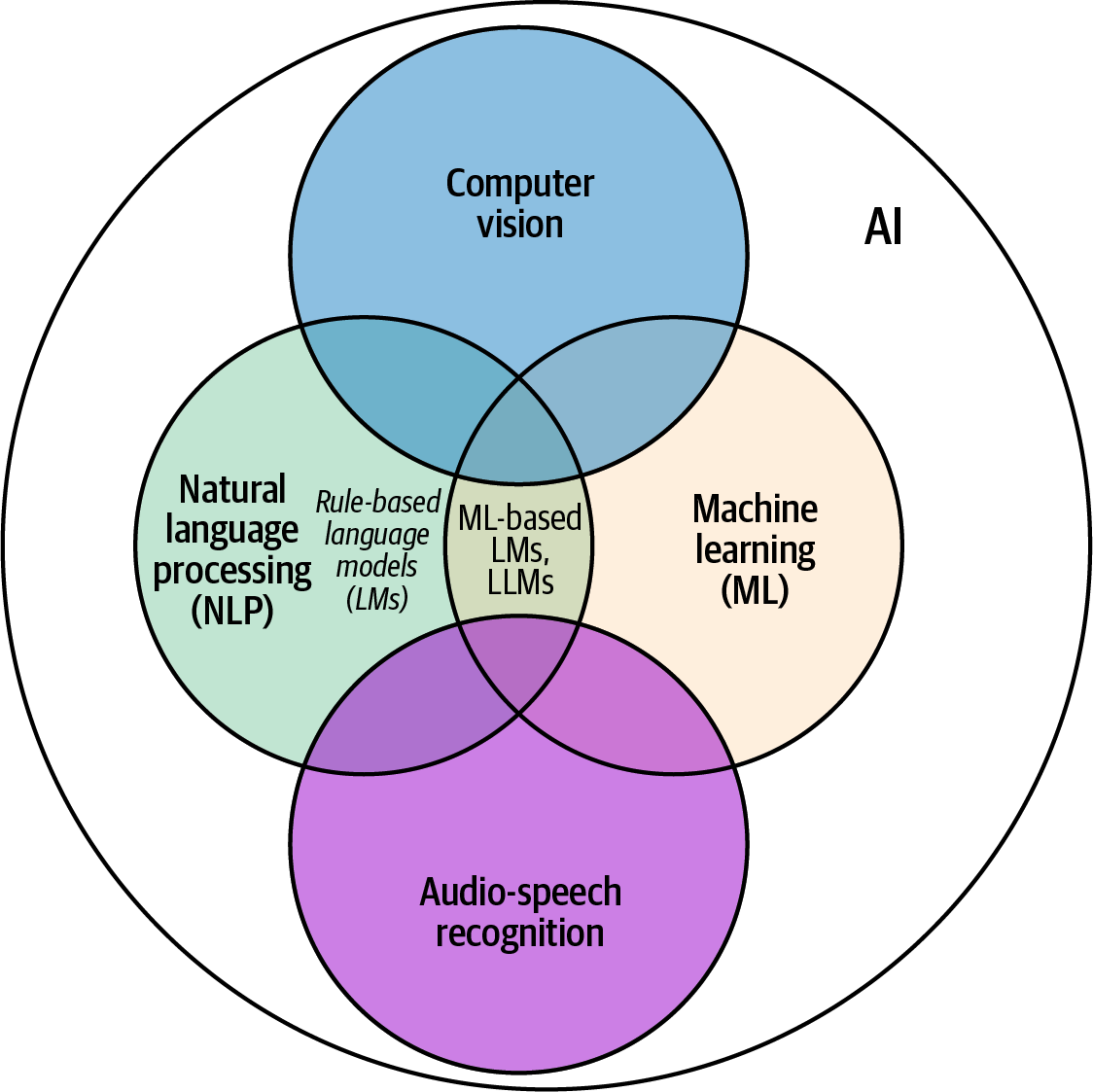

A large language model (LLM) is a statistical model trained on large amounts of text data to emulate human language (Figure 1-1). It is used for natural language processing tasks such as information extraction, text classification, speech synthesis, summarization, and machine translation. LLMOps, in turn, is a framework for automating and streamlining the pipelines built around large language models (also called foundation models or generative AI models).

While task-specific models for natural language processing (NLP) have been used in practice for years, recent advances in NLP have shifted public interest toward task-agnostic models, in which a single model can perform all of the tasks listed in the preceding paragraph.

Figure 1-1. A Venn diagram showing the relationship among AI, ML, and LLMs¹

LLMs do this by using a large number of parameters (learned numeric values that encode input-output patterns in the training data and help the model make predictions): the largest version of LLaMA, an LLM developed by Meta, contains 65 billion parameters; PaLM, by Google, has 540 billion; and GPT-4, developed by OpenAI, is estimated to have about 1.7 trillion. These parameters allow the models to capture massive amounts of linguistic and contextual information about the world and to perform well on a wide range of tasks.
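To give a feel for what these parameter counts mean in practice, the following minimal sketch estimates how much memory is needed just to store each model's weights. It is a back-of-the-envelope illustration, not a sizing tool: the bytes-per-parameter values are assumptions about common numeric precisions, the helper name `weight_memory_gb` is hypothetical, and the GPT-4 figure is the unconfirmed estimate quoted above.

```python
# Parameter counts quoted in the text (the GPT-4 figure is an unconfirmed estimate).
PARAM_COUNTS = {
    "LLaMA (65B)": 65e9,
    "PaLM": 540e9,
    "GPT-4 (estimated)": 1.7e12,
}

# Assumed storage cost per parameter for common numeric precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_memory_gb(num_params: float, precision: str = "fp16") -> float:
    """Return the approximate size of the weights in gigabytes (10**9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    for name, count in PARAM_COUNTS.items():
        sizes = ", ".join(
            f"{precision}: {weight_memory_gb(count, precision):,.0f} GB"
            for precision in BYTES_PER_PARAM
        )
        print(f"{name}: {sizes}")
```

Under these assumptions, a 65-billion-parameter model stored at 16-bit precision needs roughly 130 GB for its weights alone, before counting the additional memory used while the model is running. This is one reason serving and operating LLMs calls for dedicated pipelines.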

As such, LLMs can be used in chatbots, coding assistance tools, and many other kinds of applications. Essentially, any information ...
