Chapter 7. Integrating with Other Technologies

The Web Audio API makes audio processing and analysis a fundamental part of the web platform. As a core building block for web developers, it is designed to play well with other technologies.

Setting Up Background Music with the <audio> Tag

As I mentioned at the very start of the book, the <audio> tag has many limitations that make it undesirable for games and interactive applications. One advantage of this HTML5 feature, however, is that it has built-in buffering and streaming support, making it ideal for long-form playback. Loading a large buffer is slow from a network perspective, and expensive from a memory-management perspective. The <audio> tag setup is ideal for music playback or for a game soundtrack.

Rather than going the usual path of loading a sound directly by issuing an XMLHttpRequest and then decoding the buffer, you can use the media element audio source node (MediaElementAudioSourceNode) to create nodes that behave much like other audio source nodes (such as AudioBufferSourceNode), but wrap an existing <audio> tag. Once we have this node connected to our audio graph, we can use our knowledge of the Web Audio API to do great things. This small example applies a low-pass filter to the <audio> tag:

    // Assumes `context` is an AudioContext created elsewhere.
    window.addEventListener('load', onLoad, false);

    function onLoad() {
      var audio = new Audio();
      var source = context.createMediaElementSource(audio);
      var filter = context.createBiquadFilter();
      filter.type = 'lowpass';
      filter.frequency.value = 440;
      // Route the <audio> element through the filter to the output.
      source.connect(filter);
      filter.connect(context.destination);
      audio.src = 'http://example.com/the.mp3';
      audio.play();
    }
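The same wiring also applies to an <audio> element that is already in the page. The following is a minimal sketch under stated assumptions, not the book's sample: routeThroughLowPass is a hypothetical helper name, and the caller is assumed to supply an AudioContext, the media element, and a cutoff frequency in hertz.

```javascript
// Hypothetical helper: route an existing media element through a low-pass
// filter. `context` is assumed to be an AudioContext and `mediaElement` an
// <audio> element that is already present in the page.
function routeThroughLowPass(context, mediaElement, cutoffHz) {
  var source = context.createMediaElementSource(mediaElement);
  var filter = context.createBiquadFilter();
  filter.type = 'lowpass';
  filter.frequency.value = cutoffHz;
  // element -> filter -> output
  source.connect(filter);
  filter.connect(context.destination);
  // Hand both nodes back so the caller can adjust them later.
  return { source: source, filter: filter };
}

// Example usage in a browser (the element id 'music' is an assumption):
//   var context = new AudioContext();
//   var element = document.getElementById('music');
//   var nodes = routeThroughLowPass(context, element, 440);
//   nodes.filter.frequency.value = 880;  // Raise the cutoff later.
```

Returning the nodes keeps the filter reachable, so the cutoff can be changed while the music keeps streaming through the <audio> tag.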

Demo: to see <audio> tag integration with the Web Audio API in action, visit http://webaudioapi.com/samples/audio-tag/.

Live Audio Input

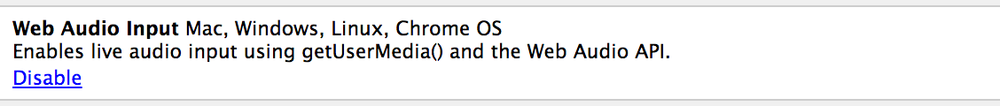

One highly requested feature of the Web Audio API is integration with getUserMedia, which gives browsers access to the audio/video stream of connected microphones and cameras. At the time of this writing, this feature is available behind a flag in Chrome. To enable it, you need to visit about:flags and turn on the “Web Audio Input” experiment, as in Figure 7-1.
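Browsers that have since shipped the standard, unprefixed API expose it as navigator.mediaDevices.getUserMedia, which returns a promise instead of taking callbacks. The following is only a sketch of that form: requestAudioStream is a hypothetical helper name, and handleStream and handleError in the usage comment stand for callbacks the caller would supply.

```javascript
// Sketch: request an audio-only stream with the promise-based API.
// `mediaDevices` is assumed to be navigator.mediaDevices in a browser.
function requestAudioStream(mediaDevices) {
  // Ask for the microphone only, not the camera.
  return mediaDevices.getUserMedia({ audio: true, video: false });
}

// Example usage in a browser (handleStream and handleError are hypothetical
// callbacks supplied by the caller):
//   requestAudioStream(navigator.mediaDevices).then(handleStream, handleError);
```

Passing the mediaDevices object in, rather than reading it from navigator inside the function, is just a convenience that makes the helper easy to exercise outside a browser.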

Once the experiment is enabled, you can use the MediaStreamAudioSourceNode Web Audio node. This node wraps around the audio stream object that is available once the stream is established. This is directly analogous to the way that MediaElementAudioSourceNodes wrap <audio> elements. In the following sample, we visualize the live audio input that has been processed by a notch filter:

    function getLiveInput() {
      // Only get the audio stream.
      navigator.webkitGetUserMedia({audio: true}, onStream, onStreamError);
    }

    function onStream(stream) {
      // Wrap a MediaStreamAudioSourceNode around the live input stream.
      var input = context.createMediaStreamSource(stream);
      // Connect the input to a filter.
      var filter = context.createBiquadFilter();
      filter.frequency.value = 60.0;
      filter.type = 'notch';
      // Q is an AudioParam, so set its value rather than replacing it.
      filter.Q.value = 10.0;

      var analyser = context.createAnalyser();

      // Connect graph.
      input.connect(filter);
      filter.connect(analyser);

      // Set up an animation.
      requestAnimationFrame(render);
    }

    function onStreamError(e) {
      console.error(e);
    }

    function render() {
      // Visualize the live audio input.
      requestAnimationFrame(render);
    }
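The render function in the sample is left as a stub. One possible way to fill it in, sketched here under assumptions, is to read the analyser's frequency data on every frame and scale each bin to a bar height. barHeights and startRendering are hypothetical helper names, and the actual drawing is delegated to a caller-supplied draw function.

```javascript
// Hypothetical helper: scale byte frequency data (values 0-255, as filled in
// by AnalyserNode.getByteFrequencyData) to bar heights in pixels.
function barHeights(freqData, maxHeight) {
  var heights = [];
  for (var i = 0; i < freqData.length; i++) {
    heights.push(Math.round((freqData[i] / 255) * maxHeight));
  }
  return heights;
}

// Sketch of a render loop. `analyser` is assumed to be the AnalyserNode from
// the graph, and `draw` a caller-supplied function that paints the bars.
function startRendering(analyser, maxHeight, draw) {
  // One byte per frequency bin, reused across frames.
  var freqData = new Uint8Array(analyser.frequencyBinCount);
  function render() {
    analyser.getByteFrequencyData(freqData);
    draw(barHeights(freqData, maxHeight));
    requestAnimationFrame(render);
  }
  requestAnimationFrame(render);
}
```

For this to work with the sample, the analyser created in onStream would need to be reachable from the render loop, for example by passing it to startRendering once the graph is connected.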

Another way to establish streams is based on a WebRTC PeerConnection. By bringing a communication stream into the Web Audio API, you could, for example, spatialize multiple participants in a video conference.
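To make the conferencing idea concrete, here is a rough sketch that places each participant's stream at a different point on a circle around the listener. It is an illustration under assumptions, not an established recipe: participantPositions and spatializeStreams are hypothetical helpers, the streams array is assumed to hold MediaStream objects already received from a peer connection, and the circular layout is an arbitrary choice.

```javascript
// Hypothetical helper: spread `count` participants evenly on a circle of the
// given radius around the listener, in the horizontal (x/z) plane. The first
// participant sits directly in front of the listener (negative z).
function participantPositions(count, radius) {
  var positions = [];
  for (var i = 0; i < count; i++) {
    var angle = (2 * Math.PI * i) / count;
    positions.push({
      x: radius * Math.sin(angle),
      y: 0,
      z: -radius * Math.cos(angle)
    });
  }
  return positions;
}

// Sketch: give each remote stream its own panner. `context` is assumed to be
// an AudioContext and `streams` an array of MediaStream objects.
function spatializeStreams(context, streams, radius) {
  var positions = participantPositions(streams.length, radius);
  return streams.map(function (stream, i) {
    var source = context.createMediaStreamSource(stream);
    var panner = context.createPanner();
    // Older implementations use panner.setPosition(x, y, z) instead.
    panner.positionX.value = positions[i].x;
    panner.positionY.value = positions[i].y;
    panner.positionZ.value = positions[i].z;
    source.connect(panner);
    panner.connect(context.destination);
    return panner;
  });
}
```

Keeping the layout math in its own function means the arrangement can be swapped, for instance for a semicircle in front of the listener, without touching the audio graph code.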

Demo: to visualize live input, visit http://webaudioapi.com/samples/microphone/.

Page Visibility and Audio Playback

Whenever you develop a web application that involves audio playback, you should be cognizant of the state of the page. The classic failure mode here is that one of many tabs is playing sound, but you have no idea which one it is. Background playback may make sense for a music player application, in which you want music to continue playing regardless of the visibility of the page. However, for a game, you often want to pause gameplay (and sound playback) when the page is no longer in the foreground.

Luckily, the Page Visibility API provides functionality to detect when a page becomes hidden or visible. The state can be determined from the Boolean document.hidden property. The event that fires when the visibility changes is called visibilitychange. Because the API is still considered to be experimental, all of these names are webkit-prefixed (document.webkitHidden and webkitvisibilitychange). With this in mind, the following code will stop a source node when a page becomes hidden, and start playback again when the page becomes visible:

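    // Listen to the webkitvisibilitychange event.
    document.addEventListener('webkitvisibilitychange', onVisibilityChange);

    function onVisibilityChange() {
      if (document.webkitHidden) {
        source.stop(0);
      } else {
        // A stopped source node cannot be started again, so build a new one.
        // Assumes `buffer` holds the decoded audio; playback restarts from
        // the beginning of the buffer.
        source = context.createBufferSource();
        source.buffer = buffer;
        source.connect(context.destination);
        source.start(0);
      }
    }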
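In browsers that support the unprefixed Page Visibility names and the AudioContext suspend() and resume() methods, an alternative is to pause the whole context instead of individual source nodes, which keeps the playback position intact. The following is a minimal sketch of that approach; pauseAudioWhenHidden is a hypothetical helper name, and the document and context are assumed to be passed in by the caller.

```javascript
// Sketch: suspend the whole audio graph while the page is hidden.
// `doc` is assumed to be the page's document and `context` an AudioContext.
function pauseAudioWhenHidden(doc, context) {
  function onVisibilityChange() {
    if (doc.hidden) {
      // Freezes all nodes in the graph at their current position.
      context.suspend();
    } else {
      // Continues from where the graph was suspended.
      context.resume();
    }
  }
  doc.addEventListener('visibilitychange', onVisibilityChange);
  // Return the handler so the caller can remove the listener later.
  return onVisibilityChange;
}

// Example usage in a browser:
//   var context = new AudioContext();
//   pauseAudioWhenHidden(document, context);
```

Because suspend() and resume() act on the entire context, this suits the game case described above; a music player that should keep playing in the background would simply not install the handler.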
