Chapter 4. Perception, Cognition, and Affordance

In the Universe, there are things that are known, and things that are unknown, and in between there are doors.

WILLIAM BLAKE

Information of a Different Sort

If we are to know how users understand the context of objects, people, and places, we need to stipulate what we mean by understand in the first place. The way people understand things is through cognition, which is the process by which we acquire knowledge and understanding through thought, experience, and our senses. Cognition isn't an abstraction. It's bound up in the very structures of our bodies and physical surroundings.

When a spider quickly and gracefully traverses the intricacies of a web, or a bird like the green bee-eater on this book's cover catches an insect in flight, these creatures are relying on their bodies to form a kind of coupling with their environments: a natural, intuitive dance wherein environment and creature work together as a system. These wonderfully evolved, coupled systems result in complex, advanced behavior, yet with no large brains in sight.

It turns out that we humans, who evolved on the same planet among the same essential structures as spiders and birds, also rely on this kind of body-to-environment coupling. Our most basic actions (the sort we hardly notice we do) work because our bodies are able to perceive and act among the structures of our environment with little or no thought required.

When I see users tapping and clicking pages or screens to learn how a product works, ignoring and dismissing pop-ups with important alerts because they want to get at the information underneath, or keeping their smartphones with them from room to room in their homes, I wonder why these behaviors occur. Often they don't seem very logical, or at least they show a tendency to act first and think about the logic of the action later. Even though these interfaces and gadgets aren't natural objects and surfaces, users try using them as if they were.

This theory about the body-environment relationship originates in a field called ecological psychology, which posits that creatures directly perceive and act in the world by their bodies' ability to detect information about the structures in the environment. This information is what I will be calling physical information: a mode of information that is at work when bodies and environments do this coupled, dynamic dance of action and perception.

Ecological psychology is sometimes referred to as Gibsonian psychology because the theory started with a scientist named James J. Gibson, whose theory of information uses neither the colloquial meaning of information nor the definition we get from information science.[18] Gibson explains his usage in a key passage of his landmark work, The Ecological Approach to Visual Perception:

Information, as the term is used in this book (but not in other books), refers to specification of the observer's environment, not to specification of the observer's receptors or sense organs....[For discussing perception, the term] information cannot have its familiar dictionary meaning of knowledge communicated to a receiver. This is unfortunate, and I would use another term if I could. The only recourse is to ask the reader to remember that picking up information is not to be thought of as a case of communicating. The world does not speak to the observer. Animals and humans communicate with cries, gestures, speech, pictures, writing and television, but we cannot hope to understand perception in terms of these channels; it is quite the other way around. Words and pictures convey information, carry it, or transmit it, but the information in the sea of energy around each of us, luminous or mechanical or chemical energy, is not conveyed. It is simply there. The assumption that information can be transmitted and the assumption that it can be stored are appropriate for the theory of communication, not for the theory of perception.[19]

Gibson often found himself having to appropriate or invent terms in order to have language he could use to express ideas that the contemporaneous language didn't accommodate.[20] He has to ask readers to set aside their existing meaning of information and to look at it in a different way when trying to understand how perception works. For him, "To perceive is to be aware of the surfaces of the environment and of oneself in it."[21] In other words, perception is about the agent figuring out the elements of its surroundings and understanding how the agent itself is one of those elements. And information is what organisms perceive in the environment that informs the possibilities for action.

Even this usage of "perception" is more specific than we might be used to: it's about core perceptual faculties, not statements such as "my perception of the painting is that it is pretty" or "the audience perceives her to be very talented." Those are cultural, social layers that we might refer to as perception, but not the sort of perception we will mainly be discussing in Part 1.

Even though we humans might now be using advanced technology with voice recognition and backlit touch-screen displays, we still depend on the same bodies and brains that our ancestors used thousands of years ago to allow us to act in our environment, no matter how digitally enhanced. Just as with the field and the stone wall presented in Chapter 3, even without language or digital technology, the world is full of structures that inform bodies about what actions those structures afford.

I'll be drawing from Gibson's work substantially, especially in this part of the book, because I find that it provides an invaluable starting point for rethinking (and more deeply grasping) how users perceive and understand their environments. Gibson's ideas have also found a more recent home as a significant influence in a theoretical perspective called embodied cognition.

A Mainstream View of Cognition

Since roughly the mid-twentieth century, conventional cognitive science has held that cognition is primarily (or exclusively) a brain function, and that the body is mainly an input-output mechanism; the body does not constitute a significant part of cognitive work. I'll be referring to this perspective as mainstream or disembodied cognition, though it is called by other names in the literature, such as representationalism or cognitivism.

According to this view, cognition works something like the diagram in Figure 4-2.

The process happens through inputs and outputs, with the brain as the "central processing unit":

The brain gathers data about the world through the body's senses.

It then works with abstract representations of what is sensed.

The brain processes the representational data by using disembodied rules, models, and logical operations.

The brain then takes this disembodied, abstract information and translates it into instructions to the body.

In other words, according to the mainstream view, perception is indirect, and requires representations that are processed the way a computer would process math and logic. The model holds that this is how cognition works for even the most basic bodily action.
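Because this view treats cognition as computation, its four steps can be written down almost literally as a program. The following Python sketch is a toy rendering of that claim, not a model taken from the cognitive-science literature; the function names, the STEP and WALL symbols, and the 30 cm threshold are all hypothetical and exist only for illustration.

```python
def sense(world: dict) -> dict:
    """Step 1: the body's senses hand raw data to the brain."""
    return {"height_cm": world["step_height_cm"]}


def represent(raw: dict) -> str:
    """Step 2: the raw data becomes an abstract symbol (threshold is made up)."""
    return "STEP" if raw["height_cm"] <= 30 else "WALL"


def decide(symbol: str) -> str:
    """Step 3: disembodied rules operate on the symbol, not on the world."""
    rules = {"STEP": "climb", "WALL": "turn_back"}
    return rules[symbol]


def instruct_body(decision: str) -> str:
    """Step 4: the abstract decision is translated into a body instruction."""
    return f"motor command: {decision}"


def mainstream_cognition(world: dict) -> str:
    """Strictly one-way pipeline: input, representation, processing, output."""
    return instruct_body(decide(represent(sense(world))))
```

Notice that the body appears only at the two ends of the pipeline, as a sensor and as an actuator; everything in between happens on symbols. That one-way shape is the assumption the rest of this chapter questions.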

This computer-like way of understanding cognition emerged for a reason: modern cognitive science was coming of age just as information theory and computer science were emerging as well; in fact, the "cognitive revolution" that moved psychology past its earlier behaviorist orthodoxy was largely due to the influence of the new field of information science.[28]

So, of course, cognitive science absorbed a lot of perspectives and metaphors from computing. The computer became not just a metaphor for understanding the brain, but a literal explanation for its function. It framed the human mind as made of information processed by a brain that works like an advanced machine.[29] This theoretical foundation is still influential today, in many branches of psychology, neuroscience, economics, and even human-computer interaction (HCI).

To be fair, this is a simplified summary, and the disembodied-cognition perspective has evolved over time. Some versions have even adopted aspects of competing theories. Still, the core assumptions are based on brain-first cognition, arguing that at the center is a "model human processor" that computes our cognition using logical rules and representations, much like the earliest cognitive scientists and HCI theorists described.[30] And let's face it, this is how most of us learned how the brain and body function; the brain-is-like-a-computer meme has fully saturated our culture to the point at which it's hard to imagine any other way of understanding cognition.

The mainstream view has been challenged for quite a while by alternative theories, which include examples such as activity theory, situated action theory, and distributed cognition theory.[31] These and others are all worth learning about, and they all bring some needed rigor to design practice. They also illustrate how there isn't necessarily a single accepted way to understand cognition, users, or products. For our purposes, we will be exploring context through the perspective of embodied cognition theory.

Embodied Cognition: An Alternative View

In my own experience, and in the process of investigating this book's subject, I've found the theory of embodied cognition to be a convincing approach that explains many of the mysteries I've encountered over my years of observing users and designing for them.

Embodied cognition has been gaining traction in the last decade or so, sparking a paradigm shift in cognitive science, but it still isn't mainstream. That's partly because the argument implies mainstream cognitive science has been largely wrong for a generation or more. Yet, embodied cognition is an increasingly influential perspective in the user-experience design fields, and stands to fundamentally change the way we think about and design human-computer interaction.[32]

Generally, the embodiment argument claims that our brains are not the only thing we use for thought and action; instead, our bodies are an important part of how cognition works. There are multiple versions of embodiment theory, some of which still insist the brain is where cognition starts, with the body just helping out. However, the perspective we will be following argues that cognition is truly environment-first, emerging from an active relationship between environment, body, and brain.[33] As explained by Andrew Wilson and Sabrina Golonka in their article "Embodied Cognition Is Not What You Think It Is":

The most common definitions [of embodied cognition] involve the straightforward claim that "states of the body modify states of the mind." However, the implications of embodiment are actually much more radical than this. If cognition can span the brain, body, and the environment, the "states of mind" of disembodied cognitive science won't exist to be modified. Cognition will instead be an extended system assembled from a broad array of resources. Taking embodiment seriously therefore requires both new methods and theory.[34]

The embodied approach insists on understanding perception from the first-person perspective of the perceiving organism, not from the abstract principles of a third-person observer. A spider doesn't "know" about webs, or that it's moving vertically up a surface; it just takes action according to its nature. A bird doesn't "know" it is flying in air; it just moves its body to get from one place to another through its native medium. For us humans, this can be confusing, because by the time we are just past infancy, we develop a dependence on language and abstraction for talking and thinking about how we perceive the world, a lens that adds a lot of conceptual information to our experience. But the perception and cognition underlying that higher-order comprehension are just about bodies and structures, not concepts. Conscious reflection on our experience happens after the perception, not before.

How can anything behave intelligently without a brain orchestrating every action? To illustrate, let's look at how a Venus flytrap "behaves" with no brain at all. Even though ecological psychology and embodiment are not about plants, but terrestrial animals with brains and bodies that move, the flytrap is a helpful example because it illustrates how something that seems like intelligent behavior can occur through a coupled action between environment and organism.

The Venus flytrap (Figure 4-3) excretes a chemical that attracts insects. The movement of insects drawn to the plant then triggers tiny hairs on its surface. These hairs structurally cause the plant to close on the prey and trap it.

This behavior already has some complexity going on, but there's more: the trap closes only if more than one hair has been triggered within about 20 seconds. This bit of conditional logic embodied by the plant's structure prevents it from trapping things with no nutritional value. Natural selection evidently filtered out all the flytraps that made too many mistakes when catching dinner. This is complex behavior with apparent intelligence underpinning it. Yet it's all driven by the physical coupling of the organism's "body" and a particular environmental niche.
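To make that conditional logic concrete, here is a minimal sketch in Python. It is only an analogy: the plant computes nothing, and the class name FlytrapSketch, the method touch_hair, and the exact 20-second window are assumptions made for illustration (the text says only "about 20 seconds"). For simplicity, the sketch also treats any two triggers as coming from different hairs.

```python
from dataclasses import dataclass
from typing import Optional

# "About 20 seconds" in the text; the exact figure here is an assumption.
WINDOW_SECONDS = 20.0


@dataclass
class FlytrapSketch:
    """Toy model: the trap closes when two hair triggers fall within the window."""

    window: float = WINDOW_SECONDS
    last_touch: Optional[float] = None  # time of the most recent trigger, if any
    closed: bool = False

    def touch_hair(self, t: float) -> bool:
        """Register a hair trigger at time t (in seconds); return whether the trap is closed."""
        if self.closed:
            return True
        # A second trigger soon after the first suggests moving prey, not debris.
        if self.last_touch is not None and (t - self.last_touch) <= self.window:
            self.closed = True
        self.last_touch = t
        return self.closed
```

A single touch, or two touches spaced 30 seconds apart, leaves the trap open; a second touch within the window closes it. The whole "decision" fits in one comparison, which is the point of the example: behavior that looks deliberate can come from structure alone.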

Now, imagine an organism that evolved to have the equivalent of millions of Venus flytraps, simple mechanisms that engage the structures of the environment in a similar manner, each adding a unique and complementary piece to a huge cognition puzzle. Though fanciful, it is one way of thinking about how complex organisms evolved, some of them eventually developing brains.

In animals with brains, the brain enhances and augments the body. The brain isn't the center of the behavioral universe; rather, it's the other way around. It's this "other way around" perspective that Gibson continually emphasizes in his work.

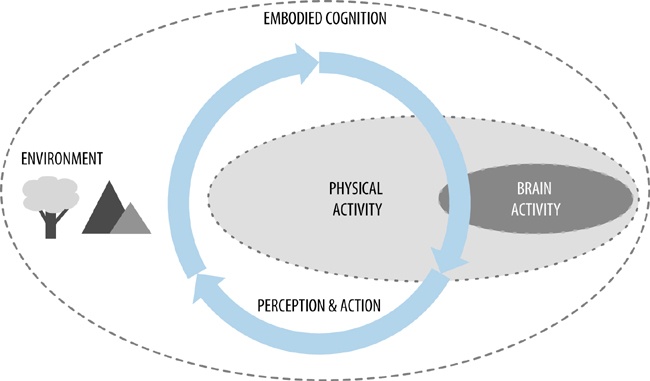

Figure 4-4 illustrates a new model for the brain-body-environment relationship.

In this model, there's a continuous loop of action and perception in which the entire environment is involved in how a perceiver deciphers that environment, all of it working as a dynamical, perceptual system. Of course, the brain plays an important role, but it isn't the originating source of cognition. Perception gives rise to cognition in a reciprocal relationship (a resonant coupling) between the body and its surroundings.

This perception-action loop is the dynamo at the center of our cognition. In fact, perception makes up most of what we think of as cognition to begin with. As Louise Barrett puts it in Beyond the Brain: How Body and Environment Shape Animal and Human Minds (Princeton University Press), "Once we begin exploring the actual mechanisms that animals use to negotiate their worlds, it becomes hard to decide where 'perception' ends and 'cognition' starts."[36] Just perceiving the environment's information already does a lot of the work that we often attribute to brain-based cognition.

Embodiment challenges us to understand the experience of the agent not from general abstract categories, but through the lived experience of the perceiver. One reason I prefer this approach is that it aligns nicely with what user experience (UX) design is all about: including the experiential reality of the user as a primary input to design rather than relying only on the goals of a business or the needs of a technology. Embodied cognition is a way of understanding more deeply how users have experiences, and how even subtle changes in the environment can have profound impacts on those experiences.

Action and the Perceptual System

As shown in the perception-action loop of Figure 4-4, we understand our environment by taking action in it. Gibson stresses that perception is not a set of discrete inputs and outputs, but happens as a perceptual system that uses all the parts of the system at once, where the distinction between input and output is effaced so that they "are considered together so as to make a continuous loop."[38] The body plays an active part in the dynamical feedback system of perception. Context, then, is also a result of action by a perceiving agent, not a separate set of facts somehow insulated from that active perception. Even when observing faraway stars, astronomers' actions have effects on how the light that has reached the telescope is interpreted and understood.

It's important to stress how deeply physical action and perception are connected, even when we are perceiving "virtual" or screen-based artifacts. In the documentary Visions of Light: The Art of Cinematography, legendary cameraman William Fraker tells a story about being the cinematographer on the movie Rosemary's Baby. At one point, he was filming a scene in which Ruth Gordon's spry-yet-sinister character, Minnie, is talking on the phone in a bedroom. Fraker explains how director Roman Polanski asked him to move the camera so that Minnie's face would be mostly hidden by the edge of a doorway, as shown in Figure 4-7. Fraker was puzzled by the choice, but he went along with it.

Fraker then recounts seeing the movie's theatrical premiere, during which he noticed the audience actually lean to the right in their seats in an attempt to peek around the bedroom door frame. It turned out that Polanski asked for the odd, occluding angle for a good reason: to engage the audience physically and heighten dramatic tension by obscuring the available visual information.

Even though anyone in the theater would have consciously admitted there was no way to see more by shifting position, the unconscious impulse is to shift to the side to get a better look. It's an intriguing illustration of how our bodies are active participants in understanding our environment, even in defiance of everyday logic.

Gibson uses the phrase perceptual system rather than just "the eye" because we don't perceive anything with just one isolated sense organ.[40] Perception is a function of the whole bodily context. The eye is made of parts, and the eye itself is a part of a larger system of parts, which is itself part of some other larger system. Thus, what we see is influenced by how we move and what we touch, smell, and hear, and vice versa.

In the specific case of watching a movie, viewers trying to see more of Minnie's conversation were responding to a virtual experience as if it were a three-dimensional physical environment. They responded this way not because those dimensions were actually there, but because that sort of information was being mimicked on-screen, and taking action (in this case, leaning to adjust the angle of viewable surfaces) is what a body does when it wants to detect richer information about the elements in view. As we will see in later chapters, this distinction between directly perceived information and interpreted, simulated-physical information is important to the way we design interfaces between people and digital elements of the environment.

This systemic point of view is important in a broader sense of how we look at context and the products we design and build. Breaking things down into their component parts is a necessary approach to understanding complex systems and getting collaborative work done. But we have a tendency (or even a cognitive bias) toward forgetting the big picture of where the components came from. A specific part of a system might work fine and come through testing with flying colors, but it might fail once placed into the full context of the environment.

Information Pickup

Gibson coins the phrase information pickup to express how perception picks up, or detects, the information in the environment that our bodies use for taking action. It's in this dynamic of information pickup that the environment specifies what our bodies can or cannot do. The information picked up is about the mutual structural compatibility for action between bodies and environments.

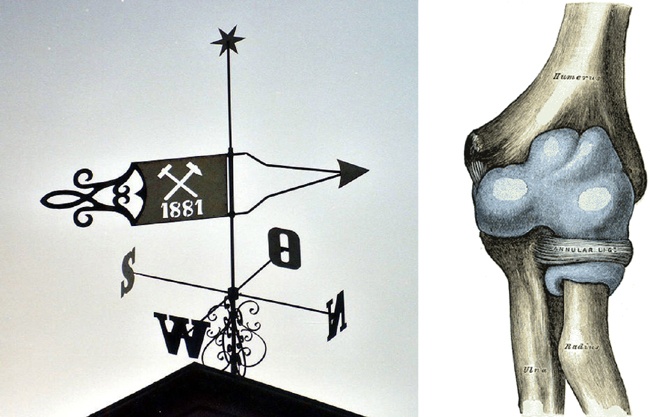

In the same way a weather vane's "body" adjusts its behavior directly based on the direction of wind, an organism's biological structures respond to the environment directly. A weather vane (Figure 4-8) moves the way it does because its structure responds to the movement of air surrounding it. Similarly, the movements of the elbow joint also shown in Figure 4-8 are largely responses to the structure of the environment. When we reach for a fork at dinner or prop ourselves at the table, the specifics of our motion don't need to be computed because our joints' physical structure evolved for reaching, propping, and other similar actions. The evolutionary pressures on a species result in bodily structures and systems that fit within and resonate with the structures and systems of the environment.[41]

Information pickup is the process whereby the body can "orient, explore, investigate, adjust, optimize, resonate, extract, and come to an equilibrium."[44] Most of our action is calibrated on the fly by our bodies, tuning themselves in real time to the tasks at hand.[45] When standing on a wobbly surface, we squat a bit to lower our center of gravity, and our arms shoot out to help our balance. This unthinking reaction to maintain equilibrium is behind more of our behavior than we realize, as when moviegoers leaned to the side to see more of Minnie's phone conversation. There's not a lot of abstract calculation driving those responses. They're baked into the whole-body root system that makes vision possible, responding to arrays of energy interacting with the surfaces of the environment. Gibson argued that all our senses work in a similar manner.

Affordance

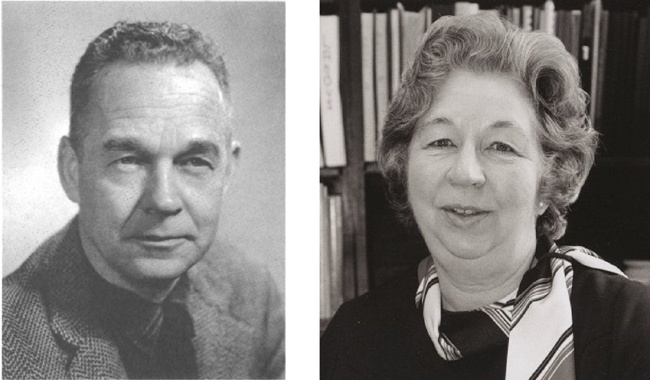

If you've done much design work, you've probably encountered talk of affordances. The concept was invented by J.J. Gibson, and codeveloped by his wife, Eleanor. Over time, affordance became an important principle for the Gibsons' theoretical system, tying together many elements of their theories.

Gibson explains his coining of the term in his final major work, The Ecological Approach to Visual Perception:

The affordances of the environment are what it offers the animal, what it provides or furnishes, either for good or ill. The verb to afford is found in the dictionary, but the noun affordance is not. I have made it up. I mean by it something that refers to both the environment and the animal in a way that no existing term does. It implies the complementarity of the animal and the environment.[46]

More succinctly: Affordances are properties of environmental structures that provide opportunities for action to complementary organisms.[47]

When ambient energy (light, sound, and so on) interacts with structures, it produces perceivable information about the intrinsic properties of those structures. The "complementary" part is key: it refers to the way organisms' physical abilities are complementary to the particular affordances in the environment. Hands can grasp branches, spider legs can traverse webs, or an animal's eyes (and the rest of its visual system) can couple with the structure of light reflected from surfaces and objects. Affordances exist on their own in the environment, but they are partly defined by their relationship with a particular species' physical capabilities. These environmental and bodily structures fit together because the contours of the latter evolved within the shaping mold of the former.

And, of course, action is required for this information pickup. In nature, a tree branch has structural properties that we detect through the way light and other energy interact with the substance and texture of the branch. We are always in motion and perceiving the branch from multiple angles. Even if we're standing still, our bodies are breathing, our eyes are shifting, and we are able to detect the way shadows and light shift in our view. This is an unconscious, brain-body-environment dynamic that results in our bodies' detection of affording properties: whether a branch is a good size to fit the hand, and whether it's top-heavy or just right for use as a cane or club.

Perception evolved in a natural world full of affordances, but our built environment also has these environmental properties. Stairs, such as those shown in Figure 4-9, are made of surfaces and materials that have affordances when arranged into steps. Those affordances, when interacting with energy such as light and vibration, uniquely structure that energy to create information that our bodies detect.

Our bodies require no explanation of how stairs work, because the information our bodies need is intrinsic in the structure of the stairs. Just as a hand pressed in clay shapes the clay into a structure identical to the hand, affordance shapes energy in ways that accurately convey information about the affordance. Organisms rely on this uniquely structured energy to "pick up" information relevant to what the organism can do.

For a person who never encountered stairs before, there might be some question as to why climbing up their incline would be desirable, but the perceiver's body would pick up the fact that it could use them to go upward either way. Humans evolved among surfaces that varied in height, so our bodies have properties that are complementary with the affordances of such surface arrangements.

For most of us, this is a counterintuitive idea, because we're so used to thinking in terms of brain-first cognition. In addition, some of the specifics of exactly what affordance is and how Gibson meant it are still being worked out among academics. The principle of affordance was always a sort of work in progress for Gibson, and actually emerged later in his work than some of his other concepts.[48] He developed it as an answer to Gestalt psychology theories about how we seem to perceive the meaning of something as readily as we perceive its physical properties; rather than splitting these two kinds of meaning apart, he wanted to unify the dualism into one thing: affordance.[49]

We won't be exploring all the various shades of theory involved with affordance scholarship. But, because there is so much talk of affordances in design circles, I think it's valuable to establish some basic assumptions we will be working from, based on Gibson's own work.

Affordance is a revolutionary idea

Gibson claimed in 1979 that affordance is "a radical departure from existing theories of value and meaning."[50] And in some ways, it is still just as radical. As an empiricist, Gibson was interested in routing around the cultural orthodoxies and interpretive ideas we layer onto our surroundings; he wanted to start with raw facts about how nature works. If we take Gibson's theory of affordances seriously on its own terms, we have to take seriously the whole of his ecological system, not pick and choose parts of it to bolt onto mainstream, brain-first theory.[51]

Affordances are value-neutral

In design circles, there is sometimes mention of anti-affordances. But in Gibson's framework there's no need for that idea. Affordances offer the animal opportunities for action, in his words, "for good or ill." He writes of affordances having "positive" or "negative" effects, explaining that some affordances are inconvenient or even dangerous for a given agent, but they are still affordances.[52] An affordance isn't always good from the perspective of a particular organism. Water affords drowning for a terrestrial mammal, but it affords movement and respiration for a fish.[53] Fire affords comforting heat, light, and the ability to cook food, but it also affords injury by burning, or destruction of property. Affordance is about the structural and chemical properties that involve relationships between elements in the environment, some of which happen to be human beings. Separating what a structure affords from the effect on the perceiver's self-interests helps us to remember that not all situations are the same from one perceiver to the next.

Perception of affordance information comes first; our ideas about it come later

Gibson argued that when we perceive something, we are not constructing the perception in our brains based on preexisting abstract ideas. He states, "You do not have to classify and label things in order to perceive what they afford." When I pick up a fork to eat food, my brain isn't first considering the fork's form and matching it to a category of eating utensils and then telling my arm it's OK to use the fork. The fork affords the stabbing of bites of food and bringing them to my mouth; my body extends its abilities by using the fork as a multipointed extension of my arm. That is, my body appropriates the fork based on its structure, not its category. The facts that it is a dinner fork and part of a set of flatware are based on categories that emerge later, from personal experience and social convention.

Affordances exist in the environment whether they are perceived or not

One contemporary theoretical stance argues that we do not perceive real things in the world, but only our brain's ideas and representations of them. Gibson strongly disagreed, insisting that we couldn't perceive anything unless there were a real, physical, and measurable relationship between the things in the world and our bodies. He allowed room for a sort of "life of the mind" that might, in a sense, slosh about atop these real foundations, but it exists only because it was able to emerge from physical coupling between creature and world.

The properties that give something affordance exist whether they are perceived in the moment or not; they are latent possibilities for action. Affordance is required for perception; but an affordance doesn't have to be perceived to exist. I might not be able to see the stairs around the corner in a building, but that doesn't mean the stairs' ability to support climbing doesn't exist. This idea complicates the commonly taught concept that a mental model drives behavior. Affordance means the information we need for action does not have to be "mental" and is actually in the structures of the environment. I perceive and use the stairs not because I have a mental model of them; no model is needed because all the information necessary is intrinsic to the shape and substance of the stairs. Prior learned experience might influence my usage in some way, but that's in addition to perception, not perception itself.

Affordances are there, whether they are perceived accurately or not

A Venus flytrap exists because it can get nutrition. And it gets nutrition because it "tricks" its prey into thinking it is a source of food for the prey, rather than the other way around. For a fly, the affordance of the flytrap is being caught, dissolved, and absorbed by a plant. What the fly perceives, often until it is too late, is "food." Likewise, we might perceive ground where there is actually quicksand, or a tree branch that is actually a snake. Perception is of the information created by the affordance, not the affordance itself. This is an important distinction that has often been misunderstood in design practice, leading to convoluted discussions of "perceived" versus "actual" affordances. The affordance is a property of the object, not the perception of it.

Affording information is always in a context of other information

No single affordance exists by itself; it's always nested within a broader context of other affording structures. For example, even if we claim to "add an affordance" by attaching a handle to a hammer head, the hammer is useful only insofar as it can bang on things that need to be banged upon. Stairs afford climbing, but they're always part of some surrounding environment that affords other actions, such as floors and landings, walls, handrails, and whatever is in the rooms the stairs connect. In digital devices, the physical buttons and switches mean nothing on their own, physically, other than "pushable" or "flippable"; what they actually affect when invoked is perceived only contextually.

Affordances are learned

Human infants are not born understanding how to use stairs. Even if we allow that perception couples with the information of the stairs’ surfaces to detect they are solid, flat, and go upward, we still have to learn how and why to use them with any degree of facility. Infants and toddlers not only inspect the stairs themselves, but have also likely been carried up them by caregivers and have seen others walking or running up and down them long before trying out the stairs for themselves. Learning how to use the environment happens in a densely textured context of social and physical experience. This is true of everything we take for granted in our environment, down to the simplest shapes and surfaces. We learned how to use it all, whether we remember learning it or not. In digital design, there is talk of natural interfaces and intuitive designs. What those phrases are really getting at is whether an interface or environment has information for action that has already been learned by its users. When designing objects and places for humans, we generally should assume that no affordance is natural. We should instead ask: is this structure’s affordance more or less conventional or learnable? Here we should keep in mind that “learnable” is often dependent upon how the affordance builds on established convention.

Directly Perceived versus Indirectly Meaningful

The Gibsons continued to expand their theories into how affordances function underneath complex cultural structures, such as language, cinema, and whole social systems. Other scholars have since continued to apply affordance theory to understanding all sorts of information. Likewise, for designers of digital interfaces, affordance has become a tool for asking questions about what an interface offers the user for taking action. Is an on-screen item a button or link? Is it movable? Or is it just decoration or background? Is a touch target too small to engage? Can a user discover a feature, or is it hidden? The way affordance is discussed in these questions tends to be inexact and muddled. That’s partly because, even among design theorists and practitioners, affordance has a long and muddled history. There are good reasons for the confusion, and they have to do with the differences between how we perceive physical things versus how we interpret the meaning of language or simulated objects.

The scene from Rosemary’s Baby serves as an apt example of this simulated-object issue. In the scene depicted in Figure 4-7, moviegoers can’t see Minnie’s mouth moving behind the door frame, even if they shift to the right in their seats, because the information on the screen only simulates what it portrays. There is no real door frame or bedroom that a viewer can perceive more richly via bodily movement. If there were, the door and rooms would have affordances that create directly specifying information for bodies to pick up, informing the body that moving further right will continue revealing more of Minnie’s actions. Of course, some audience members tried this, but calibrated by stopping as soon as their perception picked up that nothing was changing. Then, there were undoubtedly nervous titters in the crowd: how silly that we tried to see more! As we will see later, we tend to begin to interact with information this way, based on what our bodies assume it will give us, even if that information is tricking us or simulating something else, as in digital interfaces.

Perception might be momentarily fooled by the movie, but the only affordance actually at work is what is produced by a projector, film, and the reflective surface of the screen. The projector, film, and screen are quite real and afford the viewing of the projected light, but that’s where affordance stops. The way the audience interprets the meaning of the shapes, colors, and shadows simulated by that reflected light is a different sort of experience than being in an actual room and looking through an actual door.

In his ecological framework, Gibson refers to any surface on which we show communicative information as a “display.” This includes paintings, sketches, photographs, scrolls, clay tablets, projected images, and even sculptures. To Gibson, a display is “a surface that has been shaped or processed so as to exhibit information for more than just the surface itself.”[54] Like a smartphone screen, a surface with writing on it has no intrinsic meaning outside of its surface’s physical information; but we aren’t interested in the surface so much as what we interpret from the writing. Gibson refers to the knowledge one can gain from these information artifacts as mediated or indirect; that is, compared to direct physical information pickup, these provide information via a medium.

Depending on where you read about affordances, you might see affordance used to explain this sort of mediated, indirectly meaningful information. However, for the sake of clarity, I will be specifying affordance as that which creates information about itself, and I will not be using the term for information that is about something beyond the affordance. Images, words, digital interfaces: these things all provide information, but the ultimately relevant meaning we take away from them is not intrinsic. It is interpreted, based on convention or abstraction. This is a complex point to grasp, but don’t worry if it isn’t clear just yet. We will be contemplating it together even more in many chapters to come.

This approach is roughly similar to that found in the more recent work of Don Norman, who is most responsible for introducing the theory of affordance to the design profession. Norman updates his take on affordance in the revised, updated edition of his landmark book, The Design of Everyday Things (Basic Books). Generally, Norman cautions that we should distinguish between affordances, such as the form of a door handle that we recognize as fitting our hand and suited for pulling or pushing, and signifiers, such as the “Push” or “Pull” signs that often adorn such doors.[55] We will look at signifiers and how they intersect with affordance in Part III.

This distinction is also recommended in recent work by ecological psychologist Sabrina Golonka, whose research focuses on the way language works to create information we find meaningful, “without straining or redefining original notions of affordances or direct-perception.” For affordance to be a useful concept, we need to tighten down what it means and put a solid boundary around it.[56]

That doesn’t mean we are done with affordance after this part of the book. Affordance is a critical factor in how we understand other sorts of information. Just as a complex brain wouldn’t exist without a body, mediated information wouldn’t exist without direct perception to build upon. No matter how lofty and abstract our thoughts are or how complex our systems might be, all of it is rooted, finally, to the human body’s mutual relationship with the physical environment.

As designers of digitally infused parts of our environment, we have to continually work to keep this bodily foundation in mind. That’s because the dynamic by which we understand the context of a scene in a movie (or a link on a web page) borrows from the dynamic that makes it possible for us to use the stairs in a building or pick a blackberry in a briar patch. Our perception is, in a sense, hungry for affordance and tries to find it wherever it can, even from indirectly meaningful information. That is, what matters to the first-person perspective of a user is the blended spectrum of information the user perceives, whether it is direct or indirect. It’s in the teasing apart of these sorts of information where the challenge of context for design truly lies.

Soft Assembly

Affordance gives us one kind of information: what I’m calling physical information. But what we experience and use for perception is the information, not the affordance that created it. Cognition grabs information and acts on it, without being especially picky about technical distinctions of where the information originates.

Cognition recruits all sorts of mechanisms in the name of figuring out the world, from many disparate bodily and sensory functions. The way this works is called soft assembly. It’s a process wherein many various factors of body-environment interaction aggregate on the fly, adding up to behaviors effective in the moment for the body.[57] Out of all that activity of mutual interplay between environment and perceiver, there emerges the singular behavior. Now that we can even embed sensors and reactive mechanisms into our own skin, this way of thinking about how those small parts assemble into a whole may be more relevant than ever.[58]

We’re used to thinking of ourselves as separate from our environment, yet an ecological or embodied view offers that the boundary between the self and the environment is not absolute; it’s porous and in flux. For example, when we pick up a fallen tree branch and use it as a tool (perhaps to knock fruit from the higher reaches of the tree), the tool becomes an extension of our bodies, perceived and wielded as we would wield a longer arm. Even when we drive a car, with practice, the car blends into our sense of how our bodies fit into the environment.[59]

This isn’t so radical a notion if we don’t think of the outer layer of the human body as an absolute boundary but as more of an inflection point. Thus, it’s not a big leap to go from “counting with my fingers” to “counting with sticks.” As author Louise Barrett explains, “When we take a step back and consider how a cognitive process operates as a whole, we often find that the barrier between what’s inside the skin and what’s outside is often purely arbitrary, and, once we realize this, it dissolves.”[60] The way we understand our context is deeply influenced by the environment around us, partly because cognition includes the environment itself.

In Supersizing the Mind: Embodiment, Action, and Cognitive Extension (Oxford University Press), Andy Clark argues that our bodies and brains move with great fluidity between various sorts of cognition. From moment to moment, our cognition uses various combinations of cognitive loops: subactive cognition, active-body cognition, and extended cognition, using the scaffolding of the environment around us. Clark explains that our minds “are promiscuously body-and-world exploiting. They are forever testing and exploring the possibilities for incorporating new resources and structures deep into their embodied acting and problem-solving regimes.”[61]

Clark also explains how an assembly principle is behind how cognition works as efficiently as it can, using “whatever mix of problem-solving resources will yield an acceptable result with minimal effort.”[62] We might say that we use a combination of “loops of least effort.”[63] That’s why audience members leaned to the right in a theater, even though there was no logical reason to do so. We act to perceive, based on the least effortful interpretation of the information provided, even though it sometimes leads us astray. That is, we can easily misinterpret our context, and act before our error is clear to us. Even though we might logically categorize the variety of resources the perceiver recruits for cognition, to the perceiver it is all a big mash-up of information about the environment. For designers, that means the burden is on the work of design to carefully parse how each element of an environment might influence user action, because the user will probably just act, without perceiving a difference.

“Satisficing”

Perhaps this loops-of-least-effort idea helps explain a behavior pattern first described by scientist-economist Herbert Simon, who called it satisficing. Satisficing is a concept that explains how we conserve energy by doing whatever is just enough to meet a threshold of acceptability. It’s a portmanteau combining “satisfy” and “suffice.” Its use has been expanded to explain other phenomena, from how people decide what to buy to the way a species changes in response to evolutionary pressures.[64] Satisficing is a valuable idea for design practice, because it reminds us that users use what we design. They don’t typically ponder it, analyze it, or come to know all its marvelous secrets. They act in the world based on the most obvious information available and with as little concentration as possible. That’s because cognition starts with, and depends upon, continual action and interaction with the environment. Users aren’t motivated by first understanding the environment. They’re too busy just getting things done, and in fact they tend to improvise as they go, often using the environment in different ways than intended by designers.[65]

Even the most careful users eventually “poke” the environment to see how it responds or where it will take them by clicking or tapping things, hovering with a mouse, waving a controller or phone around in the air, or entering words into a search field. Just the act of looking is a physical action that probes the environment for structural affordance information. Users pick up the minimum that seems to be needed to move and then appropriate the environment to their needs. We see this when we observe people using software: they’ll often try things out just to see what happens, or they find workarounds that we never imagined they would use. It does no good to call them “bad users.” These are people who behave the way people behave. This is one reason why lab-based testing can be a problem; test subjects can be primed to assume too much about the tested artifact, and they can overthink their interactions because they know they’re being observed. Out in the world they are generally less conscious of their behaviors and improvised actions.

The embodied view flips the traditional role of the designer. We’re used to thinking of design as creating an intricately engineered setting for the user, for which every act has been accounted. But the contextual meaning of the environment is never permanently established, because context is a function of the active engagement of the user. This means the primary aim of the designer is not to design ways for the artifact to be used but instead to design the artifact to be clearly understood,[66] so the user can recruit it into her full environmental experience in whatever way she needs.

Umwelts

We have a Boston terrier named Sigmund (Figure 4-10). He’s a brownish-red color, unlike typical Bostons. When walking Sigmund, I notice that no matter how well he’s staying by my side, on occasion he can’t help going off-task. Sometimes, he stops in his tracks, as if the ground has reached up and grabbed him. And, in a sense, that’s what is happening. Sigmund is perceiving something in his environment that is making him stop. It’s not premeditated or calculated; it’s a response to the environment not unlike walking into a glass wall. For him, it’s something he perceives as “in the way” or even dangerous, like an angle of shadow along the ground that could be a hole or something closing in on him. It happens less as he gets older and learns more about the world around him. Stairs used to completely freak him out, but now he’s a pro.

But often what affects Sigmund’s behavior is invisible to me. Like many dogs, he’s much more perceptive of sound and smell than hominids. In those dimensions, Sigmund’s world is much richer than mine. If I bring him outside and we encounter even a mild breeze, he’ll stop with his nose pointed upward and just smell the air the way I might watch a movie at IMAX. Certainly we make friends differently. At the dog park, I’m mostly paying attention to the visual aspects of faces around me, whereas Sigmund gets to know his kind from sniffing the other end.[67]

For Sigmund and me, much of our worlds overlap; we’re both warm-blooded terrestrial mammals, after all. We’re just responding to different sorts of information in addition to what we share. Sigmund might stop because of a scent I cannot smell, but I might stop in my tracks because I see a caution light or stop sign. Sigmund and I are walking in somewhat different worlds, each of us in our own umwelt.

Umwelt is an idea introduced by biologist Jakob Johann von Uexküll (1864–1944), who defined it as the world as perceived and acted upon by a given organism. Uexküll studied the sense organs and behaviors of various creatures such as insects, amoebae, and jellyfish, and developed theories on how they experience their environments.[68]

As a result of this work, Uexküll argued that biological existence couldn’t be understood only as molecular pieces and parts, but as organisms sensing the world as part of a system of signs. In other words, Uexküll pioneered the connection of biology with semiotics, creating a field now called biosemiotics (we will look more closely at signs, signification, and semiotics in Part III). His work also strongly influenced seminal ideas in phenomenology from the likes of Martin Heidegger and Maurice Merleau-Ponty.

If we stretch Uexküll’s concept just a little, we can think of different people as being in their own umwelts, even though they are the same species. Our needs and experiences shape how we interpret the information about the structures around us.

For skateboarders, an empty swimming pool has special meaning for activities that don’t register for nonriders; for jugglers, objects of a certain size and weight, such as oranges, can mean “great for juggling,” whereas for the rest of us, they just look delicious.

When you’re looking for a parking place at the grocery store, you notice every nuance that might indicate if a space is empty. After you park, you could probably recall how many spaces you thought were empty but turned out to just have small cars or motorcycles parked in them. But, when you’re leaving the store, you’re no longer attending to that task with the same level of explicit concentration; you might notice no empty spaces at all. Instead, you’re trying to locate the lot’s exit, which itself can be an exercise in maddening frustration. The parking lot didn’t change; the physical information is the same. But your perspective shifted enough that other information about different affordances mattered more than it did earlier.

In the airport scenario in Chapter 1, when I was conversing with my coworker about my schedule, my perception was different from his because the system for understanding the world that I was inhabiting (that is, my view of the calendar) was different from his, even though we are the same species, and even fit the same user demographics.

This idea of umwelt can also help us understand how digital systems, when given agency, are a sort of species that see the world in a particular way. Our bodies are part of their environment the way their presence is part of ours. New “smart” products like intelligent thermostats and self-driving cars, and even basic websites and apps, essentially use our bodies as interfaces between our needs and their actions. When we design the environments that contain such agents, it’s valuable to ask: what umwelt are these agents living in, and how do we best translate between them and the umwelt of their human inhabitants?

[18] I should note that in James Gibson’s work, he never called this sort of information “physical information.” He was careful to use “physical” specifically for describing properties of the world that exist regardless of creaturely perception. A more accurate term might be “ecological” or “directly perceived” information, but this is an academic distinction that I found unhelpful to design audiences. So, for simplicity, I’ve opted to call the mode “physical” instead.

[19] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979: 242.

[20] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979: 239.

[21] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979: 255.

[22] Russ Hamilton, photographer. Cornell University Photo Services. Division of Rare and Manuscript Collections, Cornell University Library.

[23] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979: 148.

[24] Gibson, J. J. Sensory processes and perception. A Century of Psychology as a Science. American Psychological Association, Washington, DC, 1992: 224–230. (ISBN 155798171X)

[25] James Gibson actually studied under a protege of William James, E.B. Holt. Burke, F. Thomas. What Pragmatism Was. Indiana University Press, 2013: 92.

[26] Gibson, E. J., and R. D. Walk. “The visual cliff.” Scientific American, 1960; 202:64–72.

[27] McCullough, Malcolm. [Based on a diagram in] Ambient Commons: Attention in the Age of Embodied Information. Cambridge, MA: MIT Press, 2013.

[28] Gleick, James. The Information: A History, a Theory, a Flood. New York: Random House, Inc., 2011: 4604–5, Kindle edition.

[29] Louise Barrett traces the origin of the brain-as-computer metaphor to the work of John von Neumann in the late 1940s. Barrett, Louise. Beyond the Brain: How Body and Environment Shape Animal and Human Minds. Princeton, NJ: Princeton University Press, 2011:121, Kindle edition.

[30] Durso, Francis T. et al. Handbook of Applied Cognition. New York: John Wiley & Sons, 2007.

[31] Nardi, Bonnie. Context and Consciousness: Activity Theory and Human-Computer Interaction. “Studying Context: A Comparison of Activity Theory, Situated Action Models, and Distributed Cognition” pp. 35–52.

[32] Kirsh, David. Embodied Cognition and the Magical Future of Interaction Design. ACM Trans. On Human Computer Interaction 2013.

[33] Including the entire environment as part of cognition has also been called “extended cognition theory.” We’ll just be using “embodied” as the umbrella term.

[34] Wilson, A., and S. Golonka. Frontiers in Psychology February 2013; Volume 4, Article 58.

[35] Wikimedia Commons: http://bit.ly/1xauZXB

[36] Barrett, Louise. Beyond the Brain: How Body and Environment Shape Animal and Human Minds. Princeton, NJ: Princeton University Press, 2011:55.

[37] Photo by Jared Spool.

[38] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:245.

[39] A frame captured from the streamed version of the film, reproduced under fair use.

[40] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:244–6.

[41] Gibson, J. J., The Senses Considered as Perceptual Systems. Boston: Houghton Mifflin, 1966:319.

[42] Wikimedia Commons: http://bit.ly/1rYwHIj

[43] From Gray’s Anatomy. Wikimedia Commons: http://bit.ly/1CM8viC

[44] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:245.

[45] It bears mentioning that Gibson’s idea of “calibration” resembles Norbert Wiener’s cybernetics concept of “feedback.” For more on Wiener, see: Bates, Marcia J. Information. In Encyclopedia of Library and Information Sciences. 3rd ed. 2010; Bates, Marcia J, Mary Niles Maack, eds. New York: CRC Press, Volume 3, pp. 2347–60. Retrieved from http://bit.ly/1wkajgO.

[46] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:127.

[47] This is a slight paraphrase from Golonka, Sabrina. “A Gibsonian analysis of linguistic information.” Posted in Notes from Two Scientific Psychologists June 24, 2014. http://bit.ly/1rYwsgm.

[48] Jones, K. “What Is An Affordance?” Ecological Psychology 2003;15(2):107–14.

[49] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:138–9.

[50] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:140.

[51] Dotov, Dobromir G, Lin Nie, Matthieu M de Wit. “Understanding affordances: history and contemporary development of Gibson’s central concept.” Avant 2012;3(2).

[52] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:137.

[53] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979: 21.

[54] Gibson, J. J. The Ecological Approach to Visual Perception. Boston: Houghton Mifflin, 1979:42.

[55] Norman does, however, explain that he is “appropriating” Gibson’s concept and using it in a different way than Gibson intended. (Norman, 2013:14). My focus on affordance here has attempted to bring more Gibson into our understanding of the theory.

[56] Golonka, Sabrina. “A Gibsonian analysis of linguistic information.” Posted in Notes from Two Scientific Psychologists June 24, 2014 (http://bit.ly/1rYwsgm).

[57] Barrett, Louise. Beyond the Brain: How Body and Environment Shape Animal and Human Minds. Princeton, NJ: Princeton University Press, 2011:172.

[58] Meinhold, Bridgette. “Bandaid-Like Stick-On Circuit Board Turns Your Body Into a Gadget.” Ecouterre April 15, 2014 (http://bit.ly/1nx6TYB).

[59] Haggard, Patrick, Matthew R Longo. “You Are What You Touch: How Tool Use Changes the Brain’s Representations of the Body.” Scientific American September 7, 2010 (http://bit.ly/1FrVPll).

[60] Barrett, Louise. Beyond the Brain: How Body and Environment Shape Animal and Human Minds. Princeton, NJ: Princeton University Press, 2011:199.

[61] Clark, A. Supersizing the Mind: Embodiment, Action, and Cognitive Extension. London: Oxford University Press, 2010:42.

[62] Clark, A. Supersizing the Mind: Embodiment, Action, and Cognitive Extension. London: Oxford University Press, 2012:568–569, Kindle locations.

[63] “Loops of least effort” is not Clark’s phrasing, but my own. This is an idea we’ll return to later, when we see how users tend to rely on physical information before bothering with the extra effort required by most semantic information.

[64] Barrett, Louise. Beyond the Brain: How Body and Environment Shape Animal and Human Minds. Princeton, NJ: Princeton University Press, 2011:128, Kindle edition.

[65] Dourish, Paul. Where the Action Is: The Foundations of Embodied Interaction. Cambridge, MA: MIT Press, 2005:170.

[66] Dourish, Paul. Where the Action Is: The Foundations of Embodied Interaction. Cambridge, MA: MIT Press, 2005:172.

[67] Interestingly, though, due to millennia of breeding, dogs depend on faces more than scent or body language for recognizing and relating to humans. Gill, Victoria. “Dogs recognize their owner’s face” BBC Earth News October 22, 2010 (http://bbc.in/1nx6Snh).
