Chapter 1. How Did We Get Here?

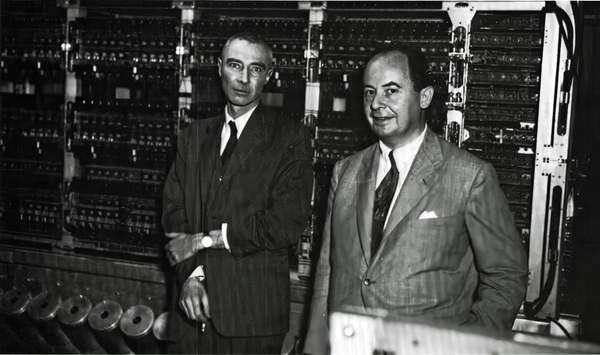

Figure 1-1. J. Robert Oppenheimer (left) and John von Neumann at the October 1952 dedication of the computer built for the Institute for Advanced Study. Oppenheimer, who was head of the Los Alamos National Laboratory during World War II, became the institute’s director in 1947.1

What we are creating now is a monster whose existence is going to change history, provided there is any history left.

John von Neumann2

In this chapter, I depict the cybersecurity industry as a super ouroboros: a snake that not only eats its own tail but also grows larger with every bite. Red (offensive) and blue (defensive) teams have been perpetually squaring off and creating new products and services for roughly 25 years while the customer (the technology enterprise, financial institution, hospital, power station, automobile manufacturer, etc.) pays the price.

This chapter will show you that there has never been such a thing as a “secure” or “healthy” network, from MANIAC, one of the first high-speed computers, to the present time; that the business of exposing vulnerabilities only makes the attacker’s job easier; and that the industry’s model of finding new ways to attack a network, advising the company about it, and then publishing the findings, which lets bad actors use that information, would be not only illegal but a crime against humanity if it were applied to medicine.

By the time you’re finished, you’ll have learned that software programming is inherently insecure, that the multibillion-dollar cybersecurity industry exploits that fact, and that it pays much better to play offense than defense.

von Neumann’s Monster

There is a marker in the history of civilization at which our future security became more perilous than ever before. Logic and math combined to form a new type of computing that enabled the creation of the thermonuclear weapon, a weapon so powerful that, by one recent estimate, a nuclear war between India and Pakistan would kill some two billion people and a war between the United States and Russia some five billion, largely through the famine that would follow as soot from the resulting fires disrupted the global climate and devastated crops, marine fisheries, and livestock.3

Many would argue that the successful detonation of the first thermonuclear device in 1952 qualifies as that marker, but the risk of such a war is extremely low thanks to the doctrine of mutually assured destruction (MAD).4 MAD relies on the theory of rational deterrence, which holds that if two opponents each have nuclear weapons, and both would be destroyed if either one used them, then neither will use them.

However, John von Neumann wasn’t nearly as worried about the bomb that he helped build as he was about the high-speed computer that he invented in order to mathematically prove that such a bomb was possible. The “monster” in von Neumann’s quote at the start of this chapter wasn’t the bomb. It was the stored-program architecture at the heart of the MANIAC computer at Los Alamos National Laboratory, an architecture inspired by Alan Turing’s 1936 paper “On Computable Numbers.”5 Stored-program architecture went on to become the basis for digital computing worldwide.

With thermonuclear war, while the potential for harm was astronomical, the risk of it happening was very low thanks to MAD. Computing, on the other hand, was a seductive charmer that only a select few understood fully in the beginning. As computing became more pervasive and complex, no one understood more than their own specialty. The average person can assess risk when it comes to things that they understand, but no one, not even the experts, completely grasps how computing works, and so our collective risk has grown to the point where online sabotage, extortion, theft, and espionage are unstoppable. Cyber insurance companies are now worried about claims so large that they could bankrupt the industry.

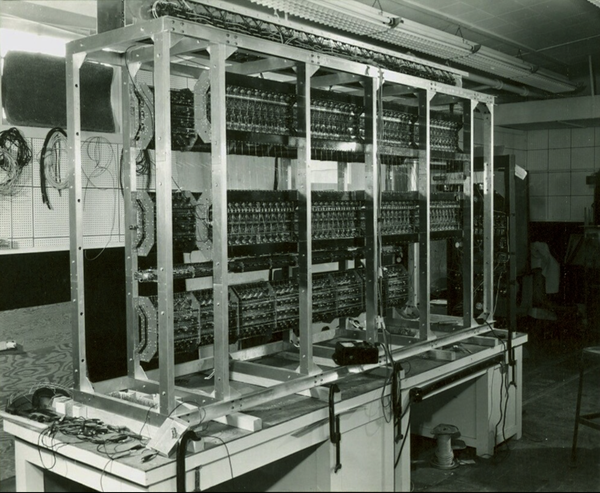

In order to understand just how unsafe the world is today because of the perils inherent in software and hardware, we need to return to Los Alamos and the MANIAC computer (see Figure 1-2).

Figure 1-2. The MANIAC’s chassis under construction in 1950.6

MANIAC’s primary purpose was to run mathematical models to test the thermonuclear process of a hydrogen bomb explosion. It achieved that with a single calculation that ran nonstop for sixty days. Then, on November 1, 1952, IVY MIKE, the code name for the world’s first thermonuclear device, was successfully detonated on Elugelab, an island in the Enewetak Atoll of the Marshall Islands.

Note

MANIAC also went on to become the first computer to beat a human in a modified game of chess; there were no bishops because of the limitations of the machine. MANIAC’s entire memory storage was five kilobytes (about the size of a short email), sitting within a six-foot-by-eight-foot beast weighing one thousand pounds. MANIAC went through three iterations between 1952 and 1965.

New challenges swiftly arose in the area of software because programs were written haphazardly, without any formal rules or structure, and it all came to a head in 1968 at the NATO Software Engineering Conference at Garmisch-Partenkirchen, a resort in the Bavarian Alps (see Figure 1-3). It should come as no surprise that there were serious differences of opinion among the attendees, who came from the elite universities in their respective countries as well as from Bell Labs and IBM.

Figure 1-3. An unidentified photographer captured this image at the NATO Software Engineering Conference in 1968.7

One side viewed the use of the phrase “software crisis” as unwarranted and unnecessarily dramatic. Sure, there were some supply problems, and there were “certain classes of systems that are beyond our capabilities,” but—their view went—we can handle payroll and sort routines perfectly well!

Douglas Ross, an MIT pioneer in computer-aided design who believed there was a serious software programming crisis at hand, had a perfectly succinct response to the critics: “It makes no difference if my legs, arms, brain, and digestive tract are in fine working condition if I am at the moment suffering from a heart attack. I am still very much in a crisis.”8

Around that same time, the US Department of Defense was struggling with how to program securely on a shared server provided by IBM. A task force chaired by Willis Howard Ware of the RAND Corporation, and including representatives from the US’s National Security Agency, Central Intelligence Agency, and Department of Defense as well as academia, spent two years on the problem. The Ware Task Force produced a report in 1970 that advocated a system of document flags representing four classification levels: Unclassified, Confidential, Secret, and Top Secret. It also called for a rule known as “No Read Up,” under which no one could read a document flagged above their own clearance level; that is basically what we call “least privilege access” today.
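Stated as logic, the No Read Up rule is a single comparison. The following is a minimal Python sketch, assuming only that the four levels form a strict ordering from lowest to highest; the `Level` enum and the `can_read` function are hypothetical names for illustration, not anything taken from the Ware report itself.

```python
from enum import IntEnum


class Level(IntEnum):
    """The four classification levels, ordered from lowest to highest (illustrative)."""
    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


def can_read(clearance: Level, document_flag: Level) -> bool:
    """No Read Up (hypothetical helper): a user may read a document only if
    their clearance is at least as high as the document's classification flag."""
    return clearance >= document_flag


# A Secret-cleared user can read Confidential material but not Top Secret.
print(can_read(Level.SECRET, Level.CONFIDENTIAL))  # True
print(can_read(Level.SECRET, Level.TOP_SECRET))    # False
```

The check itself is trivial to write. The hard part, which is where the Ware report's pessimism came from, is guaranteeing that an operating system full of loopholes gives an attacker no way to get around it.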

Unfortunately, the Ware report concluded that it would be very difficult to secure such a system; one would have to delineate each method whereby the No Read Up rule could be defeated, and then create a flowchart that solves the security problem for each method of compromise. And that process would be next to impossible because, according to Ware, “the operating systems were Swiss cheese in terms of security loopholes.”9

Another two years went by with little to no action, and then Major Roger Schell of the US Air Force’s Electronic Systems Division commissioned a new study. According to Schell, the Ware report was all doom and gloom with no solutions: “You’ve got all these problems. They offer almost nothing by way of solutions. The US Air Force wants to know solutions.”10

Is Software Killing People?

As the years went by, and computers were called upon to perform more and more complex tasks, the question of software’s dependability became more and more critical. In 1969, J. C. R. Licklider, formerly of the Defense Department’s Advanced Research Projects Agency (ARPA, today known as DARPA), was one of the contrarian voices decrying the use of software to run the missile defense systems proposed to protect American cities. It was a “potentially hideous folly,” Licklider said, because all software contains bugs.11

In 1994, Donald MacKenzie, author of Mechanizing Proof (published in 2001 by MIT Press), set out to investigate just how big a problem flawed software was. Were people actually dying because of it? Were the fears overblown or justified? He tried to determine the number of deaths in computer-related accidents worldwide up until 1992. Here is what he found:

The resultant data set contained 1,100 deaths. Over 90 percent of those deaths were caused by faulty human-computer interaction (often the result of poorly designed interfaces or of organizational failings as much as mistakes by individuals).12 Physical faults such as electromagnetic interference were implicated in a further 4 percent of deaths. Software bugs caused no more than 3 percent, or thirty, deaths: two from a radiation-therapy machine whose software control system contained design faults, and twenty-eight from faulty software in the Patriot antimissile system that caused a failed interception in the 1991 Gulf War.13

MacKenzie’s research paper on computer-related deaths was published separately in the journal Science and Public Policy in 1994. In that paper he raised the problem of underreporting of less catastrophic accidents, “such as industrial accidents involving robots or other forms of computer-controlled automated plants.”14

For example, in 2001, five patients of Panama’s National Oncological Institute died due to radiation overexposure that resulted from software flaws in the radiation treatment planning software.15

The accelerated transition to the electronic health record (EHR) in health care, from 2011 to the present, has brought about a shocking number of computer-related injuries and deaths, even though health care is a notoriously underreported sector. EHRs have had their successes, too; however, unless and until hospitals and clinics are required to report the cases where EHRs caused harm, patients should be advised of the risks, known and unknown, associated with them.

The adoption of EHRs accelerated under US Presidents Bush and Obama. President Bush issued an executive order that created the Office of the National Coordinator for Health Information Technology, with the goal of achieving nationwide adoption of EHRs by 2014. President Obama funded the rollout through his American Recovery and Reinvestment Act of 2009, which paid health care providers more money if they implemented EHR systems and associated requirements.16 It also rewarded providers with higher payments for “meaningful use,” which goes beyond simple record keeping to improving the quality of care. The Centers for Medicare and Medicaid Services set up a three-stage process to encourage hospitals to expand their use of EHR technology and receive incentive payments for doing so.17

- Stage one: Capture basic health information and provide printed copies to patients after a visit.
- Stage two: Improve clinical processes and quality of care; improve information sharing.
- Stage three: Improve health outcomes.

These incentives prompted a gold-rush mentality among software companies that wanted to take advantage of the federal dollars available to support this initiative. And hospitals that took Medicare and Medicaid payments were mandated to transition to EHR by a certain deadline if they wanted to keep getting paid by the federal government. The combination of greed and speed led to terrible results.

Researchers at the University of Illinois at Urbana-Champaign, MIT, and Rush University Medical Center studied accidents and deaths in robotic surgeries between 2000 and 2013 as reported to the FDA’s MAUDE database, which houses reports of adverse events involving medical devices. The researchers’ goal was “to determine the frequency, causes, and patient impact of adverse events in robotic procedures across different surgical specialties.”18 Their results showed that “144 deaths (1.4% of the 10,624 reports), 1,391 patient injuries (13.1%), and 8,061 device malfunctions (75.9%) occurred during the study period.” The researchers believed that these numbers understated the true toll because of underreporting.

For a report entitled “Death by 1,000 Clicks,” Kaiser Health News and Fortune magazine interviewed more than one hundred physicians, patients, IT experts and administrators, health policy leaders, attorneys, top government officials, and representatives at more than a half-dozen EHR vendors, including the CEOs of two of the companies.19 Its conclusion:

Our investigation found alarming reports of patient deaths, serious injuries and near misses—thousands of them—tied to software glitches, user errors or other flaws that have piled up, largely unseen, in various government-funded and private repositories.

Compounding the problem are entrenched secrecy policies that continue to keep software failures out of public view. EHR vendors often impose contractual “gag clauses” that discourage buyers from speaking out about safety issues and disastrous software installations—though some customers have taken to the courts to air their grievances. Plaintiffs, moreover, say hospitals often fight to withhold records from injured patients or their families. Indeed, two doctors who spoke candidly about the problems they faced with EHRs later asked that their names not be used, adding that they were forbidden by their health care organizations to talk. Says Assistant U.S. Attorney Foster, the EHR vendors “are protected by a shield of silence.”

I spoke with a patient safety researcher in Switzerland who confirmed that this isn’t just a US problem. He told me that there’s no formal tracking of EHRs’ safety record because (a) it’s not required by law, and (b) some hospitals would rather not know; at least one hospital administrator told him, “we don’t want to know the safety performance of our system because what would that mean?” The administrator went on to say that safety researchers should be more diplomatic about what they say because it might result in vendors leaving the market. “Isn’t that what we want for unsafe systems?” the researcher asked him, somewhat incredulously.

Note

At a 2017 meeting with health care leaders in Washington, former Vice President Joe Biden railed against the infuriating challenge of getting his son Beau’s medical records from one hospital to another. “I was stunned when my son for a year was battling stage 4 glioblastoma,” said Biden. “I couldn’t get his records. I’m the vice president of the United States of America…It was an absolute nightmare. It was ridiculous, absolutely ridiculous, that we’re in that circumstance.”20

The impact of poorly designed, fault-prone EHR software has predictably struck our most vulnerable population: children. A study published in the journal Health Affairs in November 2018 analyzed nine thousand pediatric patient safety reports, filed between 2012 and 2017 at three different health care institutions, that were likely related to EHR use. “Of the 9,000 reports,” it found, “3,243 (36 percent) had a usability issue that contributed to the medication event, and 609 (18.8 percent) of the 3,243 might have resulted in patient harm.”21

The health care profession’s aversion to tracking usability problems and patient harm (potential and realized) is evident from the fact that only one state, Pennsylvania, requires acute health care facilities to report patient safety events that either could have harmed or did harm the patient.22 Other states have reporting requirements, but they’re narrowly focused, and no one really wants to track that data. In that respect, health care is very similar to the corporate world, where companies are reluctant to report a cybersecurity incident and, when they do report one, tend to underplay its seriousness. The same corporate reluctance applies to fixing vulnerabilities that security researchers have discovered.

To Disclose, or Not to Disclose, or to Responsibly Disclose

A vulnerability is a flaw, glitch, or weakness in the coding of a software product that may be exploited by an attacker.23

Vulnerability disclosure was once debated with almost religious ferocity. On one side were the skeptics, who painstakingly tried to explain that when you build on top of a fundamentally insecure base, you perpetuate insecurity. To put it another way, if you build a perfect house on sand, its collapse is inevitable. If you publicly announce that the house is built on sand, and a third party then announces that there is one point in the structure where a push at just the right angle, with n amount of pressure, will greatly accelerate that collapse, you’re making it really easy for others to cause problems that they would otherwise have had to figure out on their own.

On the other side were the proponents, who acknowledged that nothing will ever be 100% secure but argued that public disclosure is a must, because back when we didn’t have it, companies that were informed about vulnerabilities in their software didn’t bother fixing them. It wasn’t until researchers went public with their findings, with the help of journalists who gave them headlines, that companies would spend the money to develop patches. Technologist Bruce Schneier summed it up in his essay “Damned Good Idea” when he wrote: “Public scrutiny is the only reliable way to improve security, while secrecy only makes us less secure.”24

There are numerous examples of companies that were notified about problems in their software products, didn’t patch or update them, and suffered a breach. A few of those appear below.

Sony PlayStation Network

The Sony PlayStation Network suffered a month-long series of data breaches, starting around April 17, 2011, that compromised the personal data of 77 million Sony PlayStation Network users. The breach was large enough to attract the attention of Representative Mary Bono Mack (R-CA), chair of the House Subcommittee on Commerce, Manufacturing, and Trade, who sent a formal letter to Kazuo Hirai, Sony’s executive deputy president, asking him to appear before the subcommittee and answer 13 questions. Hirai declined to appear personally but responded to the questions in a letter.

The subcommittee did take testimony from four individuals about the matter, one of whom was Dr. Gene Spafford, a professor of computer science at Purdue University. In his testimony, Spafford said that Sony knew it was running outdated Apache web server software with no firewall, because those issues had been reported several months earlier in an online forum that Sony employees monitored.

Equifax

Any complex software contains flaws.

That’s an excerpt from the official statement of the Apache Software Foundation on the 2017 Equifax data breach, and while it seems simplistic, it’s a necessary reminder that there’s no such thing as 100% secure. The only question defenders have to answer is how easy they want to make it for the attackers to get in.

In the case of the Equifax breach, the company made it really easy by failing to promptly install a patch for a vulnerability in Apache Struts, a web application framework. Apache had written and released that patch on March 7, 2017, the same day it had been notified of the flaw. Because Equifax did not install the patch, the personal credit information of 143 million people was compromised.

If the vulnerability had not been publicly disclosed, attackers would have had to discover the software flaw on their own. But that’s not how the system of vulnerability disclosure works.

The Apache Software Foundation issued a press release that included the following paragraph:

Since vulnerability detection and exploitation has become a professional business, it is and always will be likely that attacks will occur even before we fully disclose the attack vectors, by reverse engineering the code that fixes the vulnerability in question or by scanning for yet unknown vulnerabilities.

The worst example of corporate negligence when it comes to poor cybersecurity practices has to be Twitter.

An explosive 2022 whistleblower complaint that was leaked to the Washington Post revealed the egregious state of Twitter’s cybersecurity:

- More than half of Twitter’s 500,000 servers were running operating systems so out of date that many did not support basic privacy and security features and lacked vendor support.
- More than a quarter of the roughly 10,000 employee computers had software updates disabled.
- More than one major security incident occurred every week, involving millions of user accounts.
- Every engineer had a full copy of Twitter’s proprietary source code on their laptop instead of in the cloud or in a data center, and the laptops had poor to no security configurations, including endpoint security software that the vendor had discontinued.

This wasn’t the first time Twitter had cybersecurity issues. After the company suffered a serious breach and compromise of user data in 2009, the Federal Trade Commission placed Twitter, then a private company, under a consent decree in March 2011 that listed the requirements it had to meet to maintain an acceptable level of security. One of those requirements was the appointment of a “qualified, objective, independent third-party professional” to perform several tasks:

- Set forth the specific administrative, technical, and physical safeguards that respondent has implemented and maintained during the reporting period.
- Explain how such safeguards are appropriate to respondent’s size and complexity, the nature and scope of respondent’s activities, and the sensitivity of the nonpublic personal information collected from or about consumers.
- Explain how the safeguards that have been implemented meet or exceed the protections required.
- Certify that respondent’s security program is operating with sufficient effectiveness to provide reasonable assurance to protect the security, privacy, confidentiality, and integrity of nonpublic consumer information and that the program has so operated throughout the reporting period.

The worst part of the Twitter (now X) fiasco is that no one at the company, from former CEO Jack Dorsey on down, seemed to care that a global communications platform was so vulnerable to compromise, disinformation, and data theft. It takes a certain amount of skill, time, and money to break into a well-defended network, even with the inherent weaknesses found in computer programming. But it becomes far easier when security controls are carelessly applied or missing altogether.

Problematic Reporting of Exploits and Vulnerabilities

Speaking of making things easier for an attacker, that’s precisely what the controversial and firmly entrenched practice of vulnerability disclosure has done, by incentivizing security researchers to discover and reveal not only the flaws in a product’s programming but also how to exploit them. The path to exploitation, also called the proof of concept, needs to be demonstrated so that the owner of the code can see that the flaw is genuine and how hard or easy it is to exploit. The worst-case scenario is an easy-to-exploit vulnerability that could cause serious harm to the users of the product.

To make vulnerability management easier for the federal government’s cyber defenders, the Cybersecurity and Infrastructure Security Agency (CISA) has created a catalog of known vulnerabilities that threat actors have been exploiting. The approach is to prioritize each vulnerability by how exploitable it is, test the patch, and then apply the fix.

CISA’s directive authority covers the federal civilian executive branch departments and agencies, which excludes the Department of Defense and the intelligence community, and it has issued a binding operational directive (BOD 22-01, “Reducing the Significant Risk of Known Exploited Vulnerabilities”) that requires those departments and agencies to do the following:25

- Within 60 days of issuance, agencies shall review and update agency internal vulnerability management procedures.
- Remediate each vulnerability according to the timelines set forth in the CISA-managed vulnerability catalog.
- Report on the status of vulnerabilities listed in the repository.

Part of CISA’s responsibilities under that directive is to maintain a catalog of Known Exploited Vulnerabilities (KEVs) and regularly review it based on changes in the cybersecurity landscape.26
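To make the remediation timelines concrete, here is a minimal Python sketch of how a defender might flag catalog entries that are past due. It assumes records shaped like entries in CISA’s KEV JSON feed, which to our understanding carry `cveID` and `dueDate` fields (check the current schema before relying on it); the CVE identifiers, the dates, the remediated set, and the `overdue` helper are all hypothetical. A real implementation would load the live catalog and match it against an actual asset inventory.

```python
from datetime import date

# Hypothetical sample records. Field names are assumed to mirror the KEV
# JSON feed (cveID, dueDate); the identifiers and dates are invented.
kev_sample = [
    {"cveID": "CVE-0000-0001", "dueDate": "2024-01-31"},
    {"cveID": "CVE-0000-0002", "dueDate": "2024-02-22"},
    {"cveID": "CVE-0000-0003", "dueDate": "2024-03-07"},
]

# Hypothetical inventory: CVEs this organization has already remediated.
remediated = {"CVE-0000-0001"}


def overdue(entries, fixed, today):
    """Return entries that are not yet remediated and whose due date has
    passed, oldest due date first."""
    late = [
        e for e in entries
        if e["cveID"] not in fixed and date.fromisoformat(e["dueDate"]) < today
    ]
    # ISO-8601 date strings sort chronologically, so sorting on them is safe.
    return sorted(late, key=lambda e: e["dueDate"])


for e in overdue(kev_sample, remediated, today=date(2024, 3, 1)):
    print(e["cveID"], "was due", e["dueDate"])  # CVE-0000-0002 was due 2024-02-22
```

Sorting by due date is only one possible policy; an agency might instead rank by how exploitable or how widely deployed the affected product is, which is closer to the prioritization the catalog is meant to support.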

The Exploit Database

It’s important to note that there is no coordination among the CVE database run by MITRE, the National Vulnerability Database run by the National Institute of Standards and Technology, and the Exploit Database run by Offensive Security.27 In fact, a study by Unit 42 found that, of the 11,079 exploits mapped to CVEs at the time of the study, 80% were published in the Exploit Database an average of 23 days before their respective CVEs were published. That’s the exact opposite of what should happen, assuming that you agree that public disclosure should happen at all. The entire point of responsible disclosure is that the vulnerability and proof of concept aren’t made public until the patch is ready and the CVE has been released. Even then, most companies are still in a race to get the patch tested and installed before attackers move against them.

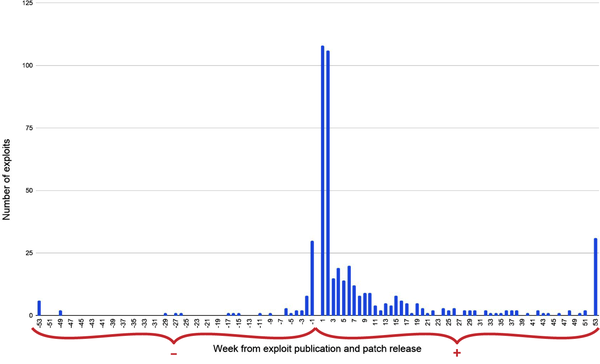

The negative numbers in Figure 1-4 show that the vast majority of exploits were published in the Exploit Database before their respective CVEs were published. That not only violates the spirit of responsible disclosure but also raises the question of where to draw the line between serving the needs of vulnerability researchers and harming the companies, organizations, and agencies that rely on cybersecurity products and services for protection.

Figure 1-4. Graph courtesy of Unit 42.
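The negative values in a chart like Figure 1-4 come from a simple subtraction. The Python sketch below assumes the gap is computed as the exploit’s publication date minus its CVE’s publication date, so a negative gap means the exploit went public first. The three date pairs and the `publication_gaps` helper are made up for illustration; this is not Unit 42’s data or methodology.

```python
from datetime import date
from statistics import mean

# Hypothetical (exploit_published, cve_published) pairs, not Unit 42's data.
pairs = [
    (date(2020, 1, 5), date(2020, 2, 4)),    # exploit 30 days before the CVE
    (date(2020, 3, 1), date(2020, 3, 11)),   # exploit 10 days before the CVE
    (date(2020, 5, 20), date(2020, 5, 15)),  # exploit 5 days after the CVE
]


def publication_gaps(pairs):
    """Days from CVE publication to exploit publication.
    A negative value means the exploit appeared before its CVE."""
    return [(exploit - cve).days for exploit, cve in pairs]


gaps = publication_gaps(pairs)
early = [g for g in gaps if g < 0]
print(gaps)                                                       # [-30, -10, 5]
print(f"{len(early) / len(gaps):.0%} published before the CVE")  # 67% published before the CVE
print(f"average lead: {-mean(early):.0f} days")                  # average lead: 20 days
```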

A Protection Racket?

The cybersecurity industry has a perverse financial incentive. Systems built with software are inherently vulnerable, and many of those vulnerabilities are unknown. Money and professional reputations are made by discovering new vulnerabilities, of which there is an unlimited supply, and announcing them to the world. Then more money is made by creating products to protect the systems that are put at risk every time a new exploitable vulnerability is born. There’s a name for a business that’s responsible for both making you vulnerable to attack and defending you from that attack. The business is organized crime, the racket is called “protection,” and it’s illegal.28

Imagine if this model also applied to medicine. Medical hackers would make their living finding ways in which the human body was vulnerable to illness and then announcing those new vulnerabilities at medical conferences, which would start a race between pharmaceutical companies creating a new drug (the “patch”) and bad actors creating malicious organisms (bioweapons) to exploit those vulnerabilities.

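What a horror show that would be! In fact, we call that scenario biological warfare. The use of biological weapons in war has been prohibited since 1928, when the 1925 Geneva Protocol entered into force, and the 1972 Biological Weapons Convention went further, banning their development, production, and stockpiling.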

No other industry is allowed to do what the cybersecurity industry has been doing since its inception. It’s time for a new model with different incentives and accompanying regulations.

Summary

My goal with this chapter was to demonstrate that cybersecurity is an industry driven by profit incentives that are at cross-purposes with its stated mission. Startups rely on venture capital (VC) firms and private equity, which have a different “why” than the founders do: a why driven entirely by how large a multiple the VC firm can get at exit, rather than by how effective the product or service is at preventing losses from unauthorized network access.

It is an industry divided between offense and defense, but one in which only those engaged in offense are rewarded with speaking engagements, VC funding, and celebrity hacker status. That isn’t surprising, given that, as this chapter has also shown, there has never been such a thing as a “secure” or “healthy” network since the birth of MANIAC, one of the first high-speed computers.

Finally, it is an industry desperately in need of a moral imperative similar to the Hippocratic Oath’s charge “to help the sick, and abstain from intentional wrongdoing.”29

If you’re looking for additional reading, I encourage you to seek out Mechanizing Proof, referenced in the footnotes of this chapter, and Dr. Olav Lysne’s The Huawei and Snowden Questions, published by Springer and available for free download.

1 The computer in this image was built for the Institute for Advanced Study; a version of it was later built for Los Alamos. Both used the von Neumann architecture. The image is in the public domain.

2 William Poundstone, “Unleashing the Power,” review of Turing’s Cathedral, by George Dyson, New York Times, May 4, 2012.

3 The two atomic bombs dropped on Japan during World War II were fission bombs. The thermonuclear device that von Neumann was helping to create was a fusion bomb, which relies on a more complex nuclear reaction and produces a much more powerful explosion. The casualty estimates are from Lili Xia et al., “Global Food Insecurity and Famine from Reduced Crop, Marine Fishery and Livestock Production Due to Climate Disruption from Nuclear War Soot Injection,” Nature Food 3 (2022): 586–596.

4 Alan J. Parrington, “Mutually Assured Destruction Revisited: Strategic Doctrine in Question,” Airpower Journal 11, no. 4 (1997): 4–19.

5 Lily Rothman, “How Time Explained the Way Computers Work,” Time, May 28, 2015.

6 Taken at Los Alamos National Laboratory 1950. Unless otherwise indicated, this information has been authored by an employee or employees of the Los Alamos National Security, LLC (LANS), operator of the Los Alamos National Laboratory under Contract No. DE-AC52-06NA25396 with the U.S. Department of Energy. The U.S. Government has rights to use, reproduce, and distribute this information. The public may copy and use this information without charge, provided that this Notice and any statement of authorship are reproduced on all copies. Neither the Government nor LANS makes any warranty, express or implied, or assumes any liability or responsibility for the use of this information.

7 “The Birth of Software Engineering,” posted on GitHub.

8 Donald A. MacKenzie, Mechanizing Proof (Cambridge, MA: MIT Press, 2001), 36.

9 Ibid., 160.

10 Ibid., 160.

11 Ibid., 299.

12 Computer-related accidents may include bugs, malware, a poorly designed user interface, and failure.

13 Mechanizing Proof, 300.

14 Donald MacKenzie, “Computer-Related Accidental Death: An Empirical Exploration,” Science and Public Policy 21, no. 4 (August 1994): 233–248. Possibly the worst example of software flaws resulting in fatalities was the pair of Boeing 737 MAX crashes, in October 2018 and March 2019, which killed 346 people.

15 “FDA Statement on Radiation Overexposures in Panama,” US Food and Drug Administration, content current as of June 13, 2019.

16 Jim Atherton, “Development of the Electronic Health Record,” Virtual Mentor, American Medical Association Journal of Ethics 13, no. 3 (March 2011): 186–189.

17 “Stages of Promoting Interoperability Programs: First Year Demonstrating Meaningful Use,” Department of Health and Human Services, accessed 2022.

18 Homa Alemzadeh et al., “Adverse Events in Robotic Surgery: A Retrospective Study of 14 Years of FDA Data,” PLoS One 11, no. 4 (2016): e0151470.

19 Fred Schulte and Erika Fry, “Death by 1,000 Clicks: Where Electronic Health Records Went Wrong,” Fortune, March 18, 2019.

20 Ibid.

21 Raj M. Ratwani et al., “Identifying Electronic Health Record Usability and Safety Challenges in Pediatric Settings,” Health Affairs (Project Hope) 37, no. 11 (2018): 1752–1759, doi:10.1377/hlthaff.2018.0699.

22 Shawn Kepner and Rebecca Jones, “2020 Pennsylvania Patient Safety Reporting: An Analysis of Serious Events and Incidents from the Nation’s Largest Event Reporting Database,” Patient Safety 3, no. 2 (2021): 6–21.

23 Kelley Dempsey et al., “Automation Support for Security Control Assessments: Software Vulnerability Management,” National Institute of Standards and Technology, NISTIR 8011, vol. 4 (April 2020).

24 Bruce Schneier, “Schneier: Full Disclosure of Security Vulnerabilities a ‘Damned Good Idea,’” Schneier on Security, January 2007.

25 “BOD 22-01: Reducing the Significant Risk of Known Exploited Vulnerabilities,” Cybersecurity and Infrastructure Security Agency, November 3, 2021.

26 See CISA’s Known Exploited Vulnerabilities catalog.

27 Offensive Security, which maintains the Exploit Database, is a for-profit company with over 800 employees that provides training and certification in penetration testing as well as offering penetration testing services to companies.

28 Paolo Campana, “Organized Crime and Protection Rackets”, in Wim Bernasco, Jean-Louis van Gelder, and Henk Elffers (eds.), The Oxford Handbook of Offender Decision Making, Oxford Handbooks (2017; online ed., Oxford Academic, 6 June 2017).

29 The principle of “First, do no harm,” although widely attributed to the Hippocratic Oath, is not a correct translation of it, nor is it even possible to live up to. A more accurate translation is “to help the sick, and abstain from intentional wrongdoing.” If you substitute “vulnerable” for “sick,” I think you have the makings of a good oath for cybersecurity practitioners to take.
