Chapter 4. Software Assurance

Software assurance, an important subdiscipline of software engineering, is the confidence that software will run as expected and be free of vulnerabilities. Given the weight and importance of these tasks, scientific experimentation and evaluation can help ensure that software is secure. In this chapter, we will look at the intersection of software assurance and cybersecurity science. We will cover fuzzing as an example of experimentally testing a hypothesis, the importance and design of an adversarial model, and how to put the scientific method to work in evaluating software exploitability.

The Department of Homeland Security describes software assurance as “trustworthiness, predictable execution, and conformance.” Programmers and cybersecurity practitioners spend a lot of time finding and mitigating vulnerabilities to build software assurance, and cybersecurity science can aid that practice. “Since software engineering is in its adolescence, it is certainly a candidate for the experimental method of analysis. Experimentation is performed in order to help us better evaluate, predict, understand, control, and improve the software development process and product.” This quote is from an article from 1986, and is as true today as it was then.

In an ideal world, software developers could apply a magic process to confirm without a doubt that software is secure. Unfortunately, such a solution is not available, or at least not easily and universally available for all software. Formal verification uses the field of formal methods in mathematics to prove the correctness of algorithms, protocols, circuits, and other systems. The Common Criteria, and before it the Trusted Computer System Evaluation Criteria, provides standards for computer security certification. Documentation, analysis, and testing determine the evaluation assurance level (EAL) of a system. FreeBSD and Windows 7, for example, have both obtained EAL4 (“Methodically Designed, Tested, and Reviewed”).

There are plenty of interesting scientific experiments in software assurance. For example, if you want to know how robust your company’s new music streaming service is, you could design the experiment methodology to test the software in a large-scale environment that simulates thousands of real-world users. Perhaps you want to know how to deploy or collect telemetry—automatic, remote collection of metrics and measurements—from Internet-connected vehicles, and need to find the balance of frequent transmissions of real-time data versus the cost of data connectivity. Software assurance is especially sensitive to correctly modeling the threat, so you might experiment with the realism of the test conditions themselves. Discovering new ways to automate the instrumentation and testing of software will continue to be valuable to software assurance.

An Example Scientific Experiment in Software Assurance

A fundamental research question in software assurance is “how do we find all the unknown vulnerabilities in a piece of software?” This question arises from the practical desire to create secure solutions, especially as software grows ever larger and more complex.1 A few general techniques have emerged in the past decade that practitioners rely on to find vulnerabilities. Some techniques are tailored for specific situations, such as static analysis when source code is available. Others can be applied in a variety of situations. Here are some of the more common software assurance techniques:

- Static analysis

Looks for vulnerabilities without executing the program. This may include source code analysis, if available.

- Dynamic analysis

Runs the program looking for anomalies or vulnerabilities based on different program inputs. Often done in instrumented sandbox environments.

- Fuzzing

A specific type of dynamic analysis in which many pseudorandom inputs are provided to the program to find vulnerabilities.

- Penetration testing

The manual or automated search for vulnerabilities by attempting to exploit system vulnerabilities and misconfigurations, often including human users.

For an example of scientific experimentation in software assurance, look at the paper “Optimizing Seed Selection for Fuzzing” by Rebert et al. (2014). Because it is computationally prohibitive to feed every possible input to a program you are analyzing, such as a PDF reader, the experimenter must choose the smallest set of inputs, or seeds, that finds the most bugs in the target program. The following abstract describes the experiment and its results. The implied hypothesis is that better seed selection increases the total number of bugs found during a fuzz campaign.

Abstract from a software assurance experiment

Randomly mutating well-formed program inputs or simply fuzzing, is a highly effective and widely used strategy to find bugs in software. Other than showing fuzzers find bugs, there has been little systematic effort in understanding the science of how to fuzz properly. In this paper, we focus on how to mathematically formulate and reason about one critical aspect in fuzzing: how best to pick seed files to maximize the total number of bugs found during a fuzz campaign. We design and evaluate six different algorithms using over 650 CPU days on Amazon Elastic Compute Cloud (EC2) to provide ground truth data. Overall, we find 240 bugs in 8 applications and show that the choice of algorithm can greatly increase the number of bugs found. We also show that current seed selection strategies as found in Peach may fare no better than picking seeds at random. We make our data set and code publicly available.
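To make the seed selection problem concrete, here is a minimal sketch of one generic strategy: greedily picking seed files by how much new code coverage each one adds. This is an illustration of the problem, not the authors' code, and the seed names and block IDs are hypothetical.

```python
from typing import Dict, List, Set


def greedy_seed_selection(coverage: Dict[str, Set[int]]) -> List[str]:
    """Pick seeds greedily until no remaining seed adds new coverage."""
    covered: Set[int] = set()
    chosen: List[str] = []
    remaining = dict(coverage)
    while remaining:
        # Choose the seed contributing the most not-yet-covered blocks;
        # iterating in sorted order makes tie-breaking deterministic.
        best = max(sorted(remaining), key=lambda s: len(remaining[s] - covered))
        gain = remaining[best] - covered
        if not gain:
            break
        chosen.append(best)
        covered |= gain
        del remaining[best]
    return chosen


# Hypothetical coverage data: seed file -> IDs of basic blocks it exercises.
coverage = {
    "a.pdf": {1, 2, 3},
    "b.pdf": {3, 4},
    "c.pdf": {1, 2},
    "d.pdf": {4, 5, 6, 7},
}
selected = greedy_seed_selection(coverage)
print(selected)
```

Whether a coverage-maximizing set actually finds more bugs than a random set is exactly the kind of claim that needs an experiment rather than an assumption.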

Consider some ways that you could build and extend on this result. Software assurance offers some interesting opportunities for cross-disciplinary scientific exploration. Think of questions that bridge the cyber aspect with a non-cyber aspect, such as economics or psychology. Could you use the fuzzing experiment as a way to answer questions like: Does your company produce more secure software if a new developer is paired with an experienced employee to instill a culture of security awareness? Do developers who are risk-averse in the physical world make more security-conscious choices in the software they create? Multi-disciplinary research can be a rich and interesting source of scientific questioning.

Fuzzing for Software Assurance

Fuzzing is one method for experimentally testing a hypothesis in the scientific method. For example, a hypothesis might be that my webapp can withstand 10,000 examples of malformed input without crashing. Fuzzing has been around since the 1980s and offers an automated, scalable approach to testing how software handles various input. In 2007, Microsoft posted on its blog that it uses fuzz testing internally to test and analyze its own software, saying “it does happen to be one of our most scalable testing approaches to detecting program failures that may have security implications.”

Choosing fuzzing for your experimental methodology is only the start. Presumably you have already narrowed your focus to a particular aspect of the software attack surface. You must also make some assumptions about your adversaries, a topic we will cover later in this chapter. It usually makes sense to use a model-based fuzzer that understands the protocols and input formats. If you are fuzzing XML input, then you can generate test cases for every valid field plus try breaking all the rules. In the interest of repeatability, you must track which test case triggers a given failure. Finally, you certainly want to fuzz the software in as realistic an environment as possible. Use production-quality code in the same configuration and environment as it will be deployed.
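The repeatability requirement can be sketched in a few lines. The following is a minimal mutational fuzzing loop, assuming a hypothetical in-process target (`parse_record`, a toy parser with a planted bug). It uses a fixed random seed and records the test case number and input for every failure, so a rerun reproduces the same results.

```python
import random
from typing import Callable, List, Tuple


def mutate(seed_input: bytes, rng: random.Random) -> bytes:
    """Overwrite one to four randomly chosen bytes of the seed input."""
    data = bytearray(seed_input)
    for _ in range(rng.randint(1, 4)):
        data[rng.randrange(len(data))] = rng.randrange(256)
    return bytes(data)


def fuzz(target: Callable[[bytes], None], seed_input: bytes,
         cases: int, rng_seed: int) -> List[Tuple[int, bytes, str]]:
    """Run `cases` mutated inputs and record every failing test case."""
    rng = random.Random(rng_seed)  # fixed seed makes the campaign repeatable
    failures = []
    for case_id in range(cases):
        test_input = mutate(seed_input, rng)
        try:
            target(test_input)
        except Exception as exc:  # treat any uncaught exception as a failure
            failures.append((case_id, test_input, type(exc).__name__))
    return failures


def parse_record(data: bytes) -> None:
    """Hypothetical target: a toy parser with a planted bug."""
    if data[0] == 0xFF:
        raise ValueError("unexpected header byte")


first_run = fuzz(parse_record, b"HELLO-WORLD", cases=10_000, rng_seed=1234)
second_run = fuzz(parse_record, b"HELLO-WORLD", cases=10_000, rng_seed=1234)
print(len(first_run), "failing cases; identical on rerun:", first_run == second_run)
```

A real campaign would drive a separate process and watch for crashes or hangs rather than Python exceptions, but the bookkeeping idea is the same.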

Fuzzing requires some decisions that impact the process. For example, if the fuzzer is generating random data, you must decide when to stop fuzzing. Previous scientific exploration has helped uncover techniques for correlating fuzzing progress with code coverage, but code coverage may not be your goal. Even if you run a fixed number of test cases, what does it mean if no crashes or bugs are found? There is also a fundamental challenge in monitoring the target application to know if and why a fuzzed input affected the target application. Furthermore, generating crashes is much easier than tracking down the software bug that caused the crash.
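One way to reason about a run with zero failures is a simple statistical bound. The sketch below assumes every test input is drawn independently from the same distribution and fails with some fixed probability p. Under that assumption, observing no failures in n cases bounds p from above at a chosen confidence level. It says nothing about inputs the fuzzer would never generate.

```python
def failure_rate_upper_bound(trials: int, confidence: float = 0.95) -> float:
    """Upper bound on the per-input failure probability after zero failures.

    If each input fails independently with probability p, the chance of
    seeing no failures in `trials` runs is (1 - p) ** trials. Solving
    (1 - p) ** trials = 1 - confidence for p gives the bound.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    return 1.0 - (1.0 - confidence) ** (1.0 / trials)


bound = failure_rate_upper_bound(10_000)
print(f"95% upper bound after 10,000 clean cases: {bound:.6f}")
print(f"rule-of-three approximation (3 / n):      {3 / 10_000:.6f}")
```

In other words, 10,000 clean test cases support a claim like "fewer than roughly 3 in 10,000 inputs of this kind cause a failure," not a claim that the software has no bugs.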

Fuzzing may seem like a random and chaotic process that doesn’t belong in the scientific method. Admittedly, this can be true if used carelessly, but that holds for any experimental method. Scientific rigor can improve the validity of information you get from fuzzing. The reason you choose fuzzing over any other technique is also important. A user who applies fuzzing to blindly find crashes is accomplishing a valuable task, but that alone is not a scientific task. Fuzzing must help test a hypothesis, and must adhere to the scientific principles previously discussed in “The Scientific Method”, including repeatability and reproducibility.

At the opposite end of the bug-finding spectrum from fuzzing are formal methods. Formal methods can be used to evaluate a hypothesis using mathematical models for verifying complex hardware and software systems. SLAM, a Microsoft Research project, is such a software model checker. The SLAM engine can be used to check if Windows device drivers satisfy driver API usage rules, for example. Formal methods are best suited for situations where source code is available.

Recall from Chapter 1 that empirical methods are based on observations and experience. By contrast, theoretical methods are based on theory or pure logic. Fuzzing is an empirical method of scientific knowledge. Empirical methods don’t necessarily have to occur in the wild or by observing the real world. Empirical strategies can also take many forms including exploratory surveys, case studies, and experiments. The way to convert software assurance claims into validated facts is with the experimental scientific method.

The Scientific Method and the Software Development Life Cycle

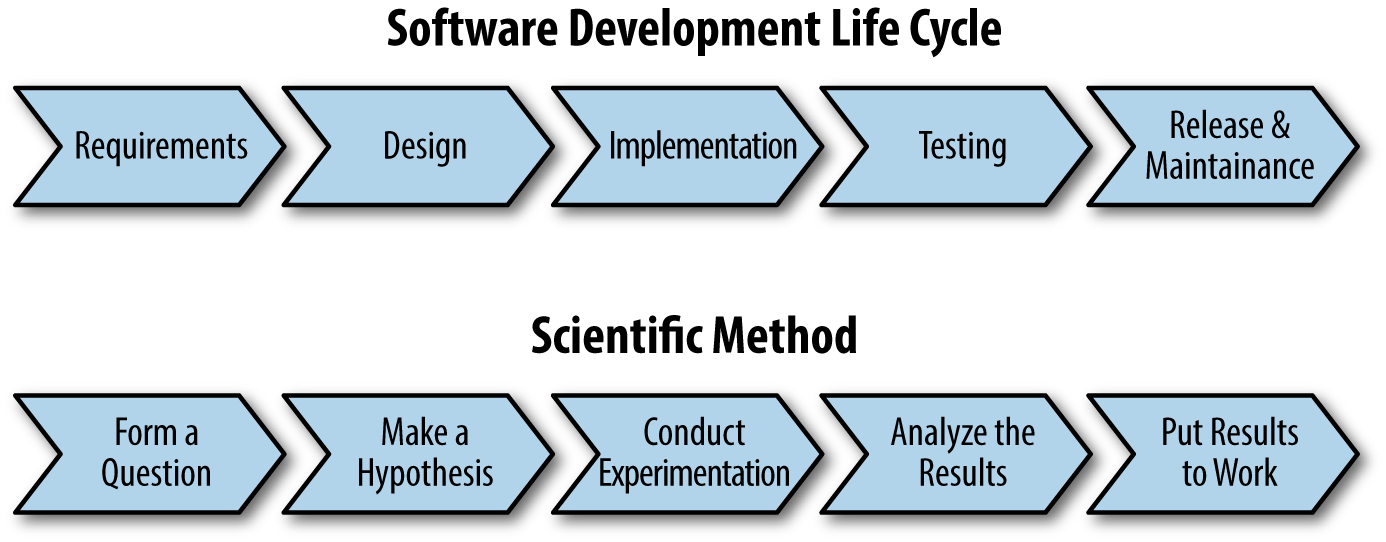

Software assurance comes from following development best practices, and from consciously, deliberately adding security measures into the process. The software development life cycle (SDLC) is surprisingly similar to the scientific method, as you can see in Figure 4-1. Both processes have an established procedure which helps ensure that the final product or result is of high quality. The IEEE Standard Glossary even says “Software Engineering means application of systematic, disciplined, quantifiable approach to development, operation, and maintenance of software.” The adjectives used to describe this approach mirror those of the approach to scientific exploration. However, just because both have a defined structure, simply following the process-oriented SDLC does not necessarily mean you are doing science or following the scientific method.

Figure 4-1. Comparison of the software development life cycle with the scientific method

There are opportunities to apply the scientific method in the development life cycle. First, scientific exploration can be applied to the SDLC process itself. For example, do developers find more bugs than dedicated test engineers, or what is the optimal amount of time to spend testing in order to balance security and risk? Second, science can inform or improve specific stages of the SDLC. For example, is pair-programming more efficient or more secure than individual programming, or what is the optimal number of people who should conduct code reviews?

The SDLC also has lessons to teach you about the scientific method. Immersing yourself in the scientific method can sometimes cause you to lose sight of the goal. Science may prove beneficial to cybersecurity practitioners by allowing them to do their jobs better, improving their products, and generating value for their employers. The SDLC helps maximize productivity, and satisfy customer needs and demands, and science for its own sake might not be your goal.

Adversarial Models

Defining a realistic and accurate model of the adversary is an important and complicated undertaking. As we will see in Chapter 7, provable security relies on a model of the system and an attack model. Cybersecurity as applied to software assurance and other domains requires us to consider the motivations, capabilities, and actions of those seeking to compromise the security of a system. This challenge extends to human red teams who may attempt to emulate an adversary and also to algorithms and software emulations of adversaries. Even modeling normal user behavior is challenging because humans rarely act as predictably and routinely as an algorithm. The best network traffic emulators today allow the researcher to define user activity like 70% web traffic (to a defined list of websites) and 30% email traffic (with static or garbage content). Another choice for scientific experimentation (and training) is to use live traffic or captures of real adversary activity.
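The kind of user model described above can be sketched as a weighted random choice. The activity names, website list, and message content below are placeholders, not the configuration of any particular traffic emulator.

```python
import random
from collections import Counter

# Hypothetical activity mix, mirroring the 70% web / 30% email split above.
ACTIVITY_WEIGHTS = {"web": 0.7, "email": 0.3}
WEBSITES = ["https://example.com/", "https://example.org/"]  # placeholder list


def simulate_user(actions: int, rng: random.Random) -> list:
    """Draw a sequence of (activity, detail) events for one emulated user."""
    kinds = rng.choices(list(ACTIVITY_WEIGHTS),
                        weights=list(ACTIVITY_WEIGHTS.values()), k=actions)
    events = []
    for kind in kinds:
        detail = rng.choice(WEBSITES) if kind == "web" else "static message body"
        events.append((kind, detail))
    return events


events = simulate_user(10_000, random.Random(42))
counts = Counter(kind for kind, _ in events)
print({kind: counts[kind] / len(events) for kind in ACTIVITY_WEIGHTS})
```

The sketch also shows the limitation: each event is drawn independently of the last, which is quite unlike how a person actually works through a day.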

Sandia National Laboratories’ Information Design Assurance Red Team (IDART) has been studying and developing adversary models for some time. For example, it has described a small nation state example adversary with these characteristics:2

The adversary is well funded. The adversary can afford to hire consultants or buy other expertise. This adversary can also buy commercial technology. These adversaries can even afford to develop new or unique attacks.

This adversary has aggressive programs to acquire education and knowledge in technologies that also may provide insider access.

This adversary will use classic intelligence methods to obtain insider information and access.

This adversary will learn all design information.

The adversary is risk averse. It will make every effort to avoid detection.

This adversary has specific goals for attacking a system.

This adversary is creative and very clever. It will seek out unconventional methods to achieve its goals.

It is one thing to define these characteristics on paper and quite another to apply them to a real-world security evaluation. This remains an open problem today. What would it look like to test your cyber defenses against a risk-averse adversary? Here might be one way: say you set up a penetration test using Metasploit and Armitage, plus Cortana, the scripting language for Armitage. You could create a script that acts like a risk-averse adversary by, for example, waiting five minutes after seeing a vulnerable machine before attempting to exploit it (Example 4-1).

Example 4-1. A Cortana script that represents a risk-averse adversary

    #
    # This script waits for a box with port 445 open to appear,
    # waits 5 minutes, and then
    # launches the ms08_067_netapi exploit at it.
    #
    # A modified version of
    # https://github.com/rsmudge/cortana-scripts/blob/master/autohack/autohack.cna
    #
    # auto exploit any Windows boxes
    on service_add_445 {
        sleep(5 * 60 * 1000);
        println("Exploiting $1 (" . host_os($1) . ")");
        if (host_os($1) eq "Microsoft Windows") {
            exploit("windows/smb/ms08_067_netapi", $1);
        }
    }

    on session_open {
        println("Session $1 opened. I got " . session_host($1) .
            " with " . session_exploit($1));
    }

The bottom line is that good scientific inquiry considers the assumptions about the capabilities of an adversary, such as what he or she can see or do. Journal papers often devote a section (or subsection) to explaining the adversary model. For example, the authors might state that “we assume a malicious eavesdropper where the eavesdropper can collect WiFi signals in public places.” As you create and conduct scientific experiments, remember to define your adversarial model. For additional references and discussions on real-world adversary simulations, see the blog posts from cybersecurity developer Raphael Mudge.

Case Study: The Risk of Software Exploitability

Software assurance experts sometimes assume that all bugs are created equal. For a complex system such as an operating system, it can be impractical to address every bug and every crash. Software development organizations typically have an issue-tracking system like Jira, which documents bugs and allows the organization to prioritize the order in which issues are addressed.

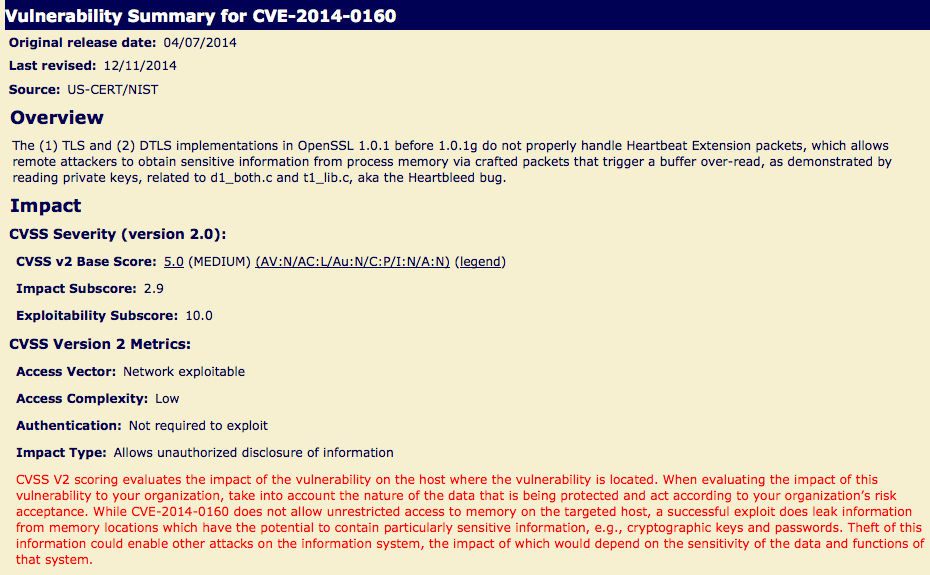

Not all bugs are created equal. As discussed earlier, risk is a function of threats, vulnerabilities, and impact. Even with a carefully calculated risk analysis, understanding the likelihood or probability of that risk occurring is vital. The Common Vulnerability Scoring System (CVSS) is a standard for measuring vulnerability risk. A CVSS score takes into account various metrics, such as attack vector (network, local, physical), user interaction (required or not required), and exploitability (unproven, proof of concept, functional, high, not defined). Figure 4-2 shows the CVSS information for Heartbleed. Calculating CVSS scores requires a thorough understanding of the vulnerability, and is not easily done for every crash you generate. Microsoft’s crash analyzer, !exploitable, also calculates an exploitability rating (exploitable, probably exploitable, probably not exploitable, or unknown), and does so based solely on crash dumps. Microsoft says that the tool can tell you, “This is the sort of crash that experience tells us is likely to be exploitable.”

Figure 4-2. National Vulnerability Database entry for Heartbleed (CVE-2014-0160)
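To show how a score is derived from its metrics, here is a sketch of the base equation from the older CVSS version 2 specification, which is compact enough to fit in a few lines. The metric names in version 2 (access vector, access complexity, authentication) differ from the ones listed above. The vector used for Heartbleed is the version 2 vector commonly cited for CVE-2014-0160 and should be checked against the NVD entry.

```python
# Metric weights from the CVSS version 2 specification.
ACCESS_VECTOR = {"L": 0.395, "A": 0.646, "N": 1.0}
ACCESS_COMPLEXITY = {"H": 0.35, "M": 0.61, "L": 0.71}
AUTHENTICATION = {"M": 0.45, "S": 0.56, "N": 0.704}
IMPACT = {"N": 0.0, "P": 0.275, "C": 0.660}


def cvss2_base_score(vector: str) -> float:
    """Compute the CVSS v2 base score from a vector such as AV:N/AC:L/Au:N/C:P/I:N/A:N."""
    m = dict(part.split(":") for part in vector.split("/"))
    impact = 10.41 * (1 - (1 - IMPACT[m["C"]])
                      * (1 - IMPACT[m["I"]])
                      * (1 - IMPACT[m["A"]]))
    exploitability = (20 * ACCESS_VECTOR[m["AV"]]
                      * ACCESS_COMPLEXITY[m["AC"]]
                      * AUTHENTICATION[m["Au"]])
    f_impact = 0.0 if impact == 0 else 1.176
    return round((0.6 * impact + 0.4 * exploitability - 1.5) * f_impact, 1)


# Network-reachable, low complexity, no authentication, partial confidentiality loss.
heartbleed = cvss2_base_score("AV:N/AC:L/Au:N/C:P/I:N/A:N")
print(heartbleed)
```

The arithmetic is the easy part. Choosing the right value for each metric is what requires a thorough understanding of the vulnerability.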

A New Experiment

Consider a hypothetical scientific experiment to determine the likelihood of exploitability. Say you are a developer for a new embedded system that runs on an Internet-enabled pedometer. Testing has already revealed a list of crashes and you would like to scientifically determine which bugs to fix first based on their likelihood of exploitability. Fixing bugs results in a better product that will bring your company increased sales and revenue. One question you could consider is how attackers have gone after other embedded systems like yours. Historical and related data can be very insightful. Unfortunately, it isn’t possible to test a hypothesis like “attackers will go after my product in similar ways to Product Y” until your product is actually attacked, at which point you will have data to support the claim. It is also difficult to predict how dedicated adversaries, including researchers, may attack your product. However, it is possible to use fuzzing to generate crashes, and from that information you can draw a hypothesis. Consider this hypothesis:

Crashes in other similar software can help predict the most frequent crashes in our new code.

The intuition behind this hypothesis is that some crashes are more prevalent than others, that there are identifiable features of these crashes shared between software, and that you can use historical knowledge to identify vulnerable code in new software. It’s better to predict frequent crashes than to wait and see what consumers report. You begin by gathering crashes that might indicate bugs in your own product. This list could come from fuzzing, penetration testing, everyday use of the software, or other crash-generating mechanisms. You also need crash information from other similar products, either from your company or competitors. By fuzzing both groups, you can apply some well-known techniques and determine if the hypothesis holds.

Here’s one approach:

Check for known vulnerabilities in the National Vulnerability Database. As of June 2015, there were no entries in the database for Fitbits.

Use Galileo, a Python utility for communicating with Fitbit devices, to enable fuzzing.

Use the Peach fuzzer or a custom Python script, based on the following, to send random data to the device trying to generate crashes:

    import usb.core  # PyUSB

    # endpoint, data, length, and timeout are placeholders to set for your device

    # Connect to the Fitbit USB dongle
    device = usb.core.find(idVendor=0x2687, idProduct=0xfb01)

    # Send data to the Fitbit tracker (through the dongle)
    device.write(endpoint, data, timeout)

    # Read responses from the tracker (through the dongle)
    response = device.read(endpoint, length, timeout)

Say you find six inputs that crash the Fitbit. Attach eight attributes to each crash:

Stack trace

Size of crashing method (in bytes)

Size of crashing method (in lines of code)

Number of parameters to the crashing method

Number of conditional statements

Halstead complexity measures

Cyclomatic complexity

Nesting-level complexity

Apply automatic feature selection in R with Recursive Feature Elimination (RFE) to identify attributes that are (and are not) required to build an accurate model.

    # Set the seed to ensure the results are repeatable
    set.seed(7)

    # Load the libraries that provide RFE
    library(mlbench)
    library(caret)

    # Load the data
    data(FitbitCrashData)

    # Define the control using a random forest selection function
    control <- rfeControl(functions=rfFuncs, method="cv", number=10)

    # Run the RFE algorithm
    results <- rfe(FitbitCrashData[,1:8], FitbitCrashData[,9], sizes=c(1:8), rfeControl=control)

    # Summarize the results
    print(results)

    # List the chosen features
    predictors(results)

    # Plot the results
    plot(results, type=c("g", "o"))

Without going into depth, machine learning is an approach that builds a model from input data and learns how to make predictions without being told explicitly how to do so (machine learning is covered in Chapter 6). This technique is good for testing the hypothesis because we don’t know whether crashes in our new code are related to crashes in the other software. Within machine learning is a process called feature selection, which is designed to identify the attributes that most affect the model. For example, a crash feature might be the number of parameters to the code function that crashed. Perhaps crashes are more frequent in functions with more parameters. Feature selection also weeds out irrelevant attributes; maybe the number of lines of code in the crashing function has no statistical correlation with the number of crashes.
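The intuition behind feature selection can be illustrated with something much simpler than Recursive Feature Elimination: ranking each attribute by the strength of its correlation with crash frequency. This is a filter-style ranking, not RFE, and the numbers below are made up purely for illustration.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# Made-up illustration data: one entry per crashing method.
features = {
    "parameters":    [1, 2, 3, 4, 5, 6],
    "lines_of_code": [40, 12, 35, 18, 30, 22],
}
crash_count = [2, 3, 5, 6, 9, 10]  # made-up crash frequency per method

# Rank attributes by the absolute strength of their correlation.
ranking = sorted(features,
                 key=lambda f: abs(pearson(features[f], crash_count)),
                 reverse=True)
for name in ranking:
    print(f"{name}: r = {pearson(features[name], crash_count):+.2f}")
```

With real crash data, a ranking like this is only a first look. It considers one attribute at a time, whereas a method such as RFE evaluates attributes in combination.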

Note

For a technical deep dive into this process, see “Which Crashes Should I Fix First?: Predicting Top Crashes at an Early Stage to Prioritize Debugging Efforts” by Kim et al. (2011).

In the end, you will want to show that machine learning, based on related software crashes, accurately predicted frequent crashes in your new code.

How to Find More Information

Research in many software assurance areas—especially vulnerability discovery—is presented in general cybersecurity journals and conferences but also at domain-specific venues including the International Conference on Software Security and Reliability and the International Symposium on Empirical Software Engineering and Measurement. Popular publications for scientific advances in software assurance are the journal Empirical Software Engineering and IEEE Transactions on Software Engineering.

Conclusion

This chapter described the intersection of cybersecurity science and software assurance. The key concepts and takeaways are:

Scientific experimentation and evaluation can help ensure that software is secure.

Scientists continue to study how to find unknown vulnerabilities in software.

Fuzzing is one method for experimentally testing a hypothesis in the scientific method.

The scientific method and the software development life cycle each provide structure and process, but neither replaces the other.

Realistic and accurate models of adversaries are important to cybersecurity science, and one must consider assumptions about the capabilities of an adversary.


1 Firefox has 12 million source lines of code (SLOC) and Chrome has 17 million as of June 2015. Windows 8 is rumored to be somewhere between 30 million and 80 million SLOC.

2 B. J. Wood and R. A. Duggan. “Red Teaming of Advanced Information Assurance Concepts,” DARPA Information Survivability Conference and Exposition, pp.112-118 vol.2, 2000.
