Chapter 1. What Is Serverless, Anyway?

Serverless has been hyped as a transformational technology, leveraged as a brand by various development platforms, and slapped as a label on dozens of services by the major cloud providers. It promises the ability to ship code many times per day, prototype new applications with a few dozen lines of code, and scale to the largest application problems. It’s also been successfully used for production applications by companies from Snap (the main Snapchat app) to iRobot (handling communications from hundreds of thousands of home appliances) and Microsoft (a wide variety of websites and portals).

As mentioned in the Preface, the term “serverless” has been applied not only to compute infrastructure services, but also to storage, messaging, and other systems. Definitions help explain what serverless is and isn’t. Because this book is focused on how to build applications using serverless infrastructure, many chapters concentrate on how to design and program the computing layers. Our definition, however, should also shine a light for infrastructure teams who are thinking about building noncompute serverless abstractions. To that end, I’m going to use the following definition of serverless:

Serverless is a pattern of designing and running distributed applications that breaks load into independent units of work and then schedules and executes that work automatically on an automatically scaled number of instances.

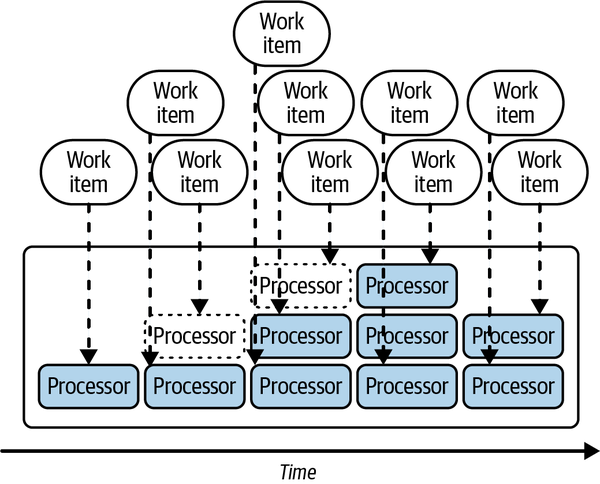

In general,¹ the serverless pattern is to automatically scale the number of compute processes based on the amount of work available, as depicted in Figure 1-1.

Figure 1-1. The serverless model
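The arithmetic behind this definition can be sketched in a few lines of Go. The sketch assumes a concurrency-style policy in which each instance handles up to a fixed number of in-flight units of work. `desiredInstances` and its `perInstance` parameter are illustrative names, not any platform’s API. Knative’s autoscaler works along broadly similar lines, though real autoscalers also average measurements over time windows and apply limits.

```go
package main

import "fmt"

// desiredInstances returns how many instances are needed to handle
// inFlight units of work when each instance can process up to
// perInstance units at once. With no work it returns zero ("scale to zero").
func desiredInstances(inFlight, perInstance int) int {
	if inFlight <= 0 {
		return 0
	}
	if perInstance <= 0 {
		perInstance = 1 // treat an invalid capacity as one unit per instance
	}
	// Integer ceiling division: round up so no unit of work is left unassigned.
	return (inFlight + perInstance - 1) / perInstance
}

func main() {
	for _, work := range []int{0, 1, 10, 11, 250} {
		fmt.Printf("%3d units of work -> %2d instances\n", work, desiredInstances(work, 10))
	}
}
```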

You’ll note that this definition is quite broad; it doesn’t cover only FaaS platforms like AWS Lambda or Cloudflare Workers, but could also cover systems like GitHub Actions (build and continuous integration [CI] actions), service workers in Chrome (background threads coordinating between browser and server), or even user-defined functions on a query platform like Google’s BigQuery (distributed SQL warehouse). It also covers object storage platforms like Amazon Simple Storage Service, or S3 (a unit of work is storing a blob), and could even cover actor frameworks that automatically scale the number of instances managing actor state.

In this book, I’ll generally draw on the open source Knative project to illustrate serverless principles, but I’ll occasionally reach out and talk about other serverless platforms where it makes sense. I’m going to focus on Knative for three reasons:

- As an open source project, it’s available on a broad variety of platforms, from cloud providers to single-board computers like the Raspberry Pi.

- Its source code is available for experimentation. You can add debugging lines or integrate it into a larger existing project or platform. Many serverless platforms are commercial cloud offerings that are tied to a particular provider, and they don’t tend to allow you to modify or replace their platform.

- It’s in the title of the book, and I expect some of you would be disappointed if I didn’t mention it. I also have five years of experience working with the Knative community, building both software and community, and answering questions. My expertise in Knative is at least as deep as my experience with other platforms.

Why Is It Called Serverless?

Armchair critics of serverless like to point out a “gotcha” in the name—while the name is “serverless,” it’s still really a way to run your application code on servers, somewhere. So how did this name come to be, and how does serverless fit into the arc of computing systems over the last 50 years?

Back in the 1960s and 1970s, mainframe computers were rare, expensive, and completely central to computing. The ’70s and ’80s brought ever-smaller and cheaper computers to the point that by the mid-’90s, it was often cheaper to network together multiple smaller computers to tackle a task than to purchase a single more expensive computer. Coordinating these computers efficiently is the foundation of distributed computing. (For a more complete history of serverless, see Chapter 11. For a history of how the serverless name has evolved, see “Cloud-Hosted Serverless”.)

While there are many ways to connect computers to solve problems via distributed computing, serverless focuses on making it easy to solve problems that fall into well-known patterns for distributing work. Much of this aligns with the microservices movement that began around 2010, which aimed to divide existing monolithic applications into independent components capable of being written and managed by smaller teams. Serverless complements (but does not require) a microservices architecture by simplifying the implementation of individual microservices.

Tip

The goal of serverless is to free developers from thinking in terms of server units when building and scaling applications,² letting them instead use scaling units that make sense for the application.

By embracing well-known patterns, serverless platforms can automate difficult-but-common processes like failover, replication, or request routing: the “undifferentiated heavy lifting” of distributed computing (a phrase coined by Jeff Bezos in 2006 when describing the benefits of Amazon’s cloud computing platform). One popular model from 2011, the twelve-factor application model, spells out several patterns that most serverless platforms embrace, including stateless applications (with storage handled by external services or the platform) and a clear separation of configuration from application code.
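As a small illustration of separating configuration from code, the following Go sketch reads its settings from environment variables, so the same artifact can run unchanged with different configurations. The `PORT` variable follows a convention that several platforms use (Knative, for example, sets it for the application container); the `GREETING` setting, the `Config` type, and the defaults are hypothetical. Passing the lookup function in keeps the logic testable without touching the real environment.

```go
package main

import (
	"fmt"
	"os"
)

// Config holds settings that vary between deployments. Keeping them out of
// the code lets one artifact run unchanged in test, staging, and production.
type Config struct {
	Port     string
	Greeting string // hypothetical application setting
}

// loadConfig builds a Config from a lookup function such as os.LookupEnv,
// falling back to defaults for anything unset or empty.
func loadConfig(lookup func(string) (string, bool)) Config {
	get := func(key, def string) string {
		if v, ok := lookup(key); ok && v != "" {
			return v
		}
		return def
	}
	return Config{
		Port:     get("PORT", "8080"),
		Greeting: get("GREETING", "hello"),
	}
}

func main() {
	cfg := loadConfig(os.LookupEnv)
	fmt.Printf("config: port=%s greeting=%q\n", cfg.Port, cfg.Greeting)
}
```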

By this time, it should be clear that adopting a serverless development mindset does not mean throwing a whole bunch of expensive hardware out the window—indeed, serverless platforms often need to work alongside and integrate with existing software systems, including mainframe systems with lineage from the 1960s.

Knative in particular is a good choice for integrating serverless capabilities into an existing computing platform because it builds on and natively incorporates the capabilities of Kubernetes. In this way, Knative bridges the “serverless versus containers” question that has been a popular source of argument since the introduction of AWS Lambda and Kubernetes in 2014.

A Bit of Terminology

While describing serverless systems and comparing them with traditional computing systems, I’ll use a few specific terms throughout the book. I’ll try to use these words consistently: there are many ways to implement a serverless system, so it’s worth having precise language, distinct from any specific product, that we can use to describe how our mental models map to a particular implementation:

- Process: I use the term process in the Unix sense of the word: an executing program with one or more threads that share a common memory space and interface with a kernel. Most serverless systems are based on the Linux kernel, as it is both popular and free, but other serverless systems are built on the Windows kernel or on JavaScript and WebAssembly (Wasm) runtimes. As of this book’s writing, the most common serverless process mechanism is the Linux kernel, used both by Lambda (which runs it in lightweight VMs managed by the Firecracker virtual machine monitor) and by various open source projects such as Knative (via the container subsystem, the Kubernetes scheduler, and the Container Runtime Interface [CRI]).

- Instance: An instance is a serverless execution environment along with whatever external system infrastructure is needed for managing the root process in that environment. The instance is the smallest unit of scheduling and compute available in a serverless system and is often used as a key in systems like logging, monitoring, and tracing to enable correlations. Serverless systems treat instances as ephemeral and handle creating and destroying them automatically in response to load on the system. Depending on the serverless environment, it may be possible to execute multiple processes within a single instance, but the outer serverless system sees the instance as the unit of scaling and execution.

- Artifact: An artifact (or code artifact) is a set of computer code that is ready to be executed by an instance. This might be a ZIP file of source code, a compiled JAR, a container image, or a Wasm snippet. Typically, each instance will run code using a particular artifact, and when a new artifact is ready, new instances will be spun up and the old instances deleted. This method of rolling out code is different from traditional systems that provision VMs and then reuse that VM to run many different artifacts or versions of an artifact, and it has beneficial properties we’ll talk about later.

- Application: An application is a designed and coordinated set of processes and storage systems that are assembled to achieve some sort of user value. While simple applications may contain only one type of instance, most serverless applications contain multiple microservices, each running its own types of instances. It’s also common for applications to be built with a mix of serverless and nonserverless components; see Chapter 5 for more details on how to integrate serverless and traditional architectures in a single application.

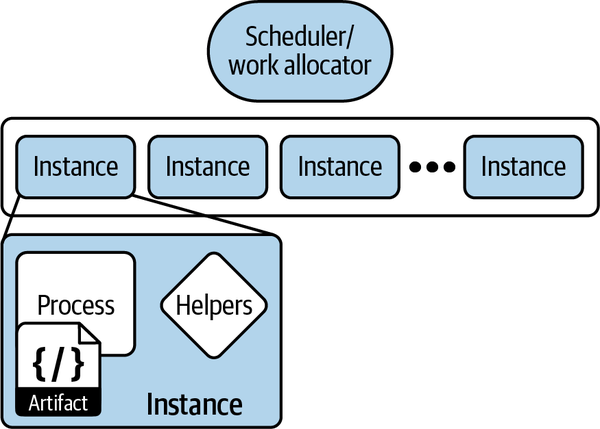

These components, as well as a few others like the scheduler, are illustrated in Figure 1-2.

Figure 1-2. Components of a serverless application
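The relationships among the terms defined above can be modeled with a few Go types. This is a deliberately simplified, hypothetical model rather than Knative’s (or any platform’s) data structures: each instance is bound to exactly one artifact, and rolling out a new artifact replaces instances instead of updating them in place. It ignores draining, so old instances simply disappear at rollout.

```go
package main

import "fmt"

// Artifact is an immutable, ready-to-run bundle of code (for example, a
// container image), identified here by a version string.
type Artifact struct {
	Version string
}

// Instance is an ephemeral execution environment bound to one artifact.
type Instance struct {
	ID       int
	Artifact Artifact
}

// Application tracks the instances currently serving one component.
type Application struct {
	Instances []Instance
	nextID    int
}

// Rollout replaces every instance with a fresh one running the new artifact.
// Old instances are discarded rather than reconfigured in place.
func (a *Application) Rollout(next Artifact, count int) {
	fresh := make([]Instance, 0, count)
	for i := 0; i < count; i++ {
		a.nextID++
		fresh = append(fresh, Instance{ID: a.nextID, Artifact: next})
	}
	a.Instances = fresh // the old instances are simply dropped
}

func main() {
	app := &Application{}
	app.Rollout(Artifact{Version: "v1"}, 2)
	app.Rollout(Artifact{Version: "v2"}, 3)
	for _, inst := range app.Instances {
		fmt.Printf("instance %d runs %s\n", inst.ID, inst.Artifact.Version)
	}
}
```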

What’s a “Unit of Work”?

Serverless scales by units of work and can scale to zero instances when there’s no work. But, you might ask, what is a unit of work? A unit of work is a stateless, independent request that can be satisfied using a certain amount of computation (and further network requests) by a single instance. Let’s break that down:

- Stateless: Each request contains all the work to be done and does not depend on the instance having handled earlier parts of the request or on other requests also in-flight. This also means that a serverless process can’t store data in-memory for future work or assume that processes will be running in between work units to do things like handle in-process timers. This definition actually allows a lot of wiggle room: you can link to the outputs of work units that might be processed earlier, or your work can be a stream of data like “Here’s a segment of video that contains motion (until the motion stops).” Roughly, you can think of a unit of work as the input to a function; if you can process the inputs to the function without local state (like a function not associated with a class or global values), then you’re stateless enough to be serverless.

- Independent: Being able to reference only work that’s complete elsewhere allows serverless systems to scale horizontally by adding more nodes, since each unit of work doesn’t directly depend on another one executing. Additionally, independence means that work can be routed to “an instance that’s ready to do the work” (including a newly created instance), and instances can be shut down when there isn’t enough work to go around.

- Requests: Like reactive systems, serverless systems should do work only when it’s asked for. These requests for work can take many forms: they might be events to be handled, HTTP requests, rows to be processed, or even emails arriving at a system. The key point here is that work can be broken into discrete chunks, and the system can measure how many chunks of work there are and use that measurement to determine how many instances to provision.
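The function-input rule of thumb from the stateless definition above can be shown in Go. In this hypothetical example, `statefulTotal` keeps a running total in a package-level variable, so its answer depends on which requests the instance happened to handle earlier; `statelessTotal` gets everything it needs from the unit of work itself and returns the same result on any instance.

```go
package main

import "fmt"

// Order is a hypothetical unit of work that carries all of its own inputs.
type Order struct {
	Items    []int // item prices in cents
	Discount int   // discount in cents
}

// runningTotal is instance-local state. It is lost when the instance is
// destroyed and differs between instances, so it breaks the serverless model.
var runningTotal int

// statefulTotal depends on earlier requests handled by this instance.
func statefulTotal(o Order) int {
	for _, p := range o.Items {
		runningTotal += p
	}
	return runningTotal - o.Discount
}

// statelessTotal depends only on its input, so any instance can handle it.
func statelessTotal(o Order) int {
	sum := 0
	for _, p := range o.Items {
		sum += p
	}
	return sum - o.Discount
}

func main() {
	o := Order{Items: []int{500, 250}, Discount: 100}
	fmt.Println(statelessTotal(o), statelessTotal(o)) // same answer every time
	fmt.Println(statefulTotal(o), statefulTotal(o))   // answer drifts with history
}
```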

Here are some examples of units of work that serverless systems might scale on.

Connections

A system could treat each incoming TCP connection request as a unit of work. When a connection arrives, it is attached to an instance, and that instance will speak a (proprietary) protocol until one side or the other hangs up on the connection.

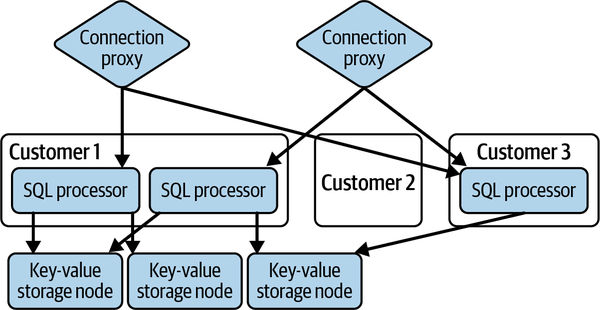

As shown in Figure 1-3, CockroachDB uses a model like this to manage PostgreSQL (Postgres) “heads” onto their database.³ A special connection proxy routes each customer database connection to a SQL processor specific to that customer. The processor is responsible for speaking the Postgres protocol, interpreting the SQL queries, converting them into query plans against the (shared) backend storage, executing the query plans, and then marshaling the results back into the Postgres protocol and returning them to the client. If no connections are open for a particular customer, that customer’s pool of SQL processors is scaled to zero.

Figure 1-3. CockroachDB serverless architecture

By using the serverless paradigm, CockroachDB can charge customers for usage only when a database connection is actually active and offer a reasonable free tier of service for small customers who are mostly not using the service. Of course, the backend storage service for this is not serverless: that data is stored in a shared key-value store, and customers are charged for data storage on a monthly basis separately from the number of connections and queries executed. We’ll talk more about this model in “Key-Value Storage”.
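A connection-scoped scheme like the one described above can be sketched as a per-customer count of open connections, with each customer’s pool of processors sized from that count and dropping to zero when the count does. This hypothetical Go sketch is not CockroachDB’s implementation; `ConnTracker`, its methods, and the `connsPerProcessor` capacity are assumptions for illustration.

```go
package main

import (
	"fmt"
	"sync"
)

// ConnTracker counts open connections per tenant. Each connection is one
// unit of work that lasts until either side hangs up.
type ConnTracker struct {
	mu                sync.Mutex
	open              map[string]int
	connsPerProcessor int
}

func NewConnTracker(connsPerProcessor int) *ConnTracker {
	if connsPerProcessor < 1 {
		connsPerProcessor = 1
	}
	return &ConnTracker{open: map[string]int{}, connsPerProcessor: connsPerProcessor}
}

// Connect records a newly opened connection for tenant.
func (t *ConnTracker) Connect(tenant string) {
	t.mu.Lock()
	defer t.mu.Unlock()
	t.open[tenant]++
}

// Disconnect records a closed connection; tenants with none left are dropped.
func (t *ConnTracker) Disconnect(tenant string) {
	t.mu.Lock()
	defer t.mu.Unlock()
	if t.open[tenant] <= 1 {
		delete(t.open, tenant)
		return
	}
	t.open[tenant]--
}

// Processors returns the pool size for tenant: zero with no open
// connections, otherwise enough processors to cover them, rounded up.
func (t *ConnTracker) Processors(tenant string) int {
	t.mu.Lock()
	defer t.mu.Unlock()
	n := t.open[tenant]
	return (n + t.connsPerProcessor - 1) / t.connsPerProcessor
}

func main() {
	t := NewConnTracker(4)
	for i := 0; i < 5; i++ {
		t.Connect("acme")
	}
	fmt.Println("acme processors with 5 connections:", t.Processors("acme"))
	for i := 0; i < 5; i++ {
		t.Disconnect("acme")
	}
	fmt.Println("acme processors after hang-ups:", t.Processors("acme"))
}
```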

Requests

At a higher level, a serverless system could understand a particular application protocol such as HTTP, SMTP (email), or an RPC mechanism, and interpret each protocol exchange as an individual unit of work. Many protocols support reusing or multiplexing application requests on a single TCP connection; when the serverless system understands application messages, it can break work into smaller chunks, which has the following benefits:

- Smaller requests are more likely to be evenly sized and more closely time-bounded. For protocols that allow transmitting more than one request over a TCP stream, most requests presumably finish well before the TCP stream closes, and the work for executing one request is smaller, allowing finer-grained load balancing among instances.

- Interpreting application requests allows decoupling the connection lifespan from the instance lifespan. When a TCP connection is the unit of work, deploying a new code artifact requires starting new instances for new connections and then waiting for the existing connections to complete (evocatively called “draining” the instances). If draining takes five minutes instead of one minute, application rollouts and configuration changes take five times as long to complete. Many settings (such as environment variables) can be set only when a process (instance) is started, so this limits how quickly teams can change code or configuration.

- Depending on the protocol, it’s possible for a single connection to have zero, one, or many requests in-flight at once. By interpreting application requests when scheduling units of work, serverless systems can achieve higher efficiency and lower latency, fanning out requests to multiple instances when requests are made in parallel or avoiding starting instances for connections that do not have any requests currently in-flight.

- By defining the boundaries of application-level requests, serverless systems can provide higher-quality telemetry and service. When the system can measure the duration of a request, it can measure latency, request sizes, and other metrics, and it can enable and enforce application-level request deadlines and tracing from outside the specific process that is handling a request. This can allow serverless systems to recover and respond to application failures in the same way that API gateways and other HTTP routers can.

Events

At a high level, events and requests are much the same thing. At another level, events are a fundamental building block of reactive and asynchronous architectures. Events declare, “At this time, this thing happened.” It would be possible to model an entire serverless system on events; you might have an event for “This HTTP request happened,” “This database row was scanned,” or “This work queue item was received.” This is a very clever way to fit both synchronous and asynchronous communication into a single model. Unfortunately, converting synchronous requests into asynchronous events and “response events” that are correlated with the request event introduces a few problems:

- Timeouts: Enforcing timeouts in asynchronous systems can be much more difficult, especially if a message that needs timing out could be sitting on a message queue somewhere, waiting for a process to notice that the timeout has expired.

- Error reporting: Beyond timeouts and application crashes, synchronous requests may sometimes trigger error conditions in the underlying application. Error conditions could be anything from “You requested a file you don’t have permission on” to “You sent a search request without a query” to “This input triggered an exception due to a coding bug.” All of these error conditions need to be reflected back to the original caller in a way that enables them to understand what, if anything, they did wrong with their request and to decide on a future course of action.

- Cardinality and correlation: While there might be benefits to modeling “A request was received” and “A response was sent” as events, using those events as a message bus to say “Please send this response to this event” misses the point of events as observations of an external system. More practically, what’s the intended meaning of a second “Please send this response to this event” that correlates with the same request event? Assuming that the application protocol envisages only a single answer, the second event probably ends up being discarded. Discarding the event may in turn require an additional notification to the offending sender to indicate that their message was ignored: another case of the error-reporting issue previously mentioned.

In my opinion, these costs are not worth the benefits of a “grand unified event model of serverless.” Sometimes synchronous calls are the right answer, sometimes asynchronous calls are the right answer, and it’s up to application developers to intelligently choose between the two.

It’s Not (Just) About the Scale

Automatic scaling is nice, but building a runtime around units of work has more benefits. Once the infrastructure layer understands what causes the application to execute, it’s possible to inject additional scaffolding around the application to measure and manage how well it does its work. The finer-grained the units of work, the more assistance the platform is able to provide in terms of understanding, controlling, and even securing the application on top of it.

In the world of desktop and web browser applications, this revolution happened in the late 1990s (desktop) and 2000s (browsers), where application event or message loops were slowly replaced by frameworks that used callback-based systems to automatically route user input to the appropriate widgets and controls within a window. In HTTP servers, a similar single-system framework was developed in the ’90s called CGI (Common Gateway Interface). In many ways, this was the ancestor of modern serverless systems—it enabled writing a program that would respond to a single HTTP request and handled much of the network communication details automatically. (For more details on CGI and its influence on serverless systems, see Chapter 11.)

Much like the CGI specification, modern serverless systems can simplify many of the routine programming best practices around handling a unit of work and do so in a language-agnostic fashion. Cloud computing calls these sorts of assists “undifferentiated heavy lifting”: every program needs them, but each program is fine if they are done the same way.

The following is not an exhaustive list of features; it is quite possible that the set of features enabled by understanding units of work will continue to expand as more tools and capabilities are added to runtime environments.

Blue and Green: Rollout, Rollback, and Day-to-Day

Most serverless systems include a notion of updating or changing the code artifact or the instance execution configuration in a coordinated way. The system also understands what a unit of work is and has some (internal) way of routing requests to one or more instances running a specified code artifact. Some systems expose these details to application operators and enable those operators to explicitly control the allocation of incoming work among different versions of a code artifact.

Zooming out, the serverless process of starting new instances with a specified version of a code artifact and allowing old instances to be collected when no longer in use looks a lot like the serverful blue-green deployment pattern: a second bank of servers is started with the “green” code while allowing the “blue” servers to keep running until the green servers are up and traffic has been rerouted from the blue servers to the green ones. With serverless request routing and automatic scaling, these transitions can be done very quickly, sometimes in less than a second for small applications and less than five minutes even for very large applications.

Beyond the blue-green rollout pattern, it’s also possible to control the allocation of work to different versions of an application on a percentage (proportional) basis. This enables both incremental rollouts (the work is slowly shifted from one version to another—for example, to allow the new version’s caches to fill) and canary deployments (a small percentage of work is routed to a new application to see how the application reacts to real user requests).
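Proportional allocation can be sketched as a weighted choice among versions. In this Go sketch, which is an illustration rather than Knative’s router, each `Split` names a revision and a percentage, and a number in [0, 100) picks the target; a real router might draw that number at random or derive it from a hash of the request. Knative exposes a comparable idea as percentage-based traffic targets on a Service, but the types and functions here are hypothetical.

```go
package main

import (
	"errors"
	"fmt"
	"math/rand"
)

// Split sends Percent of the work to the named revision.
type Split struct {
	Revision string
	Percent  int
}

// Pick chooses a revision for one unit of work. roll must be in [0, 100),
// and the splits must add up to 100.
func Pick(splits []Split, roll int) (string, error) {
	total := 0
	for _, s := range splits {
		total += s.Percent
	}
	if total != 100 {
		return "", fmt.Errorf("splits sum to %d%%, want 100%%", total)
	}
	if roll < 0 || roll >= 100 {
		return "", errors.New("roll must be in [0, 100)")
	}
	// Walk the cumulative percentages until the roll falls inside one.
	for _, s := range splits {
		if roll < s.Percent {
			return s.Revision, nil
		}
		roll -= s.Percent
	}
	return "", errors.New("unreachable: percentages exhausted")
}

func main() {
	// A canary: 90% of work to the current revision, 10% to the new one.
	canary := []Split{{"app-v1", 90}, {"app-v2", 10}}
	counts := map[string]int{}
	for i := 0; i < 10000; i++ {
		rev, err := Pick(canary, rand.Intn(100))
		if err != nil {
			panic(err)
		}
		counts[rev]++
	}
	fmt.Println(counts) // approximately a 90/10 split
}
```

A blue-green cutover is the special case of moving 100 percent to the new revision in one step, and a rollback is the same move in reverse.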

While incremental rollouts are mostly about managing scale, blue-green and canary rollouts are mostly about managing deployment risk. One of the riskier parts of running a service is deploying new application code or configuration—changing a system that is already working introduces the risk that the new system state does not work. By making it easy to quickly start up new instances with a specified version, serverless makes it faster and easier to roll back to an existing (working) version. This results in a lower mean time to recovery (MTTR), a common disaster-recovery metric.

By making individual instances ephemeral and automatically scaling based on incoming work, serverless can reduce the risk of “infrastructure snowflakes”: systems that are specially set up by hand with artisanal configurations that may be difficult to replicate or repair. When instances are automatically replaced and re-deployed as part of the regular infrastructure operations, instance recovery and initialization are continually tested, which tends to ensure that any shortcomings are fixed fairly quickly.

Continually replacing or repaving infrastructure instances can also have security benefits: an instance that has been corrupted by an attacker will be reset in the same way that an instance corrupted by a software bug is. Ephemeral instances can improve an application’s defensive posture by making it harder for attackers to maintain persistence, forcing them to compromise the same infrastructure repeatedly. Needing to repeatedly execute a compromise increases the odds of detecting the attack and closing the vulnerability.

Tip

Serverless treats individual instances as fungible—that is, each instance is equally replaceable with another one. The solution to a broken instance is simply to throw it away and get another one. The answer to “How many instances for X?” is always “The number you need,” because it’s easy to add or remove instances. Leaning into this pattern of replaceable instances simplifies many operational problems.

Creature Comforts: Undifferentiated Heavy Lifting

As described earlier in “Why Is It Called Serverless?”, one of the benefits of a serverless platform is to provide common capabilities that may be difficult or repetitive to implement in each application.

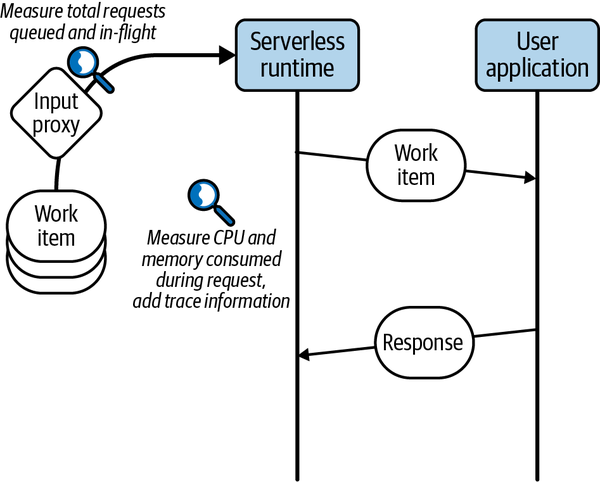

As mentioned in “It’s Not (Just) About the Scale”, once the incoming work to the system has been broken down and recognized by the infrastructure, it’s easy to use that scaffolding to start to assist with the undifferentiated heavy lifting that would otherwise require application code. One example of this is observability: a traditional application needs instrumentation that measures each time a request (unit of work) is made and how long it takes to complete that request. With a serverless infrastructure that manages the units of work for you, it’s easy for the infrastructure to start a clock ticking when the work is handed to your application and to record a latency measurement when your application completes the work.

While it may still be necessary to provide more detailed metrics from within your application (for example, the number of records scanned to respond to a work request), automatic monitoring, visualization, and even alerting on throughput and latency of work units can provide application teams with an easy “zero effort” monitoring baseline. This not only can save application development time, but also can provide guardrails on application deployment for teams who do not have the time or inclination to develop expertise in monitoring and observability.

Similarly, serverless infrastructure may both need and want to implement application tracing on the work units it manages. This tracing serves two different purposes:

- It provides infrastructure users with a way to investigate and determine the source of application latency, including platform-induced latency and latency due to application startup (where applicable).

- It provides infrastructure operators with richer information about underlying platform bottlenecks, including bottlenecks in application scaling and in overall observed latency.

Other common observability features include log collection and aggregation, performance profiling, and stack tracing. Chapter 10 delves into the specifics of using these tools to debug serverless applications in the live ephemeral environment. By controlling the adjacent environment (and, in some cases, the application build process), serverless environments can easily run observability agents alongside the application process to collect these types of data.

Figure 1-4 provides a visualization of the measurement points that can be implemented in a serverless system.

Figure 1-4. Common capabilities

Creature Comforts: Managing Inputs

Because serverless environments provide an infrastructure-controlled ingress point for units of work, the infrastructure itself can be used to implement best practices for exposing services to the rest of the world. One example of this is automatically configuring TLS (including supported ciphers and obtaining and rotating valid certificates if needed) for serverless services that may be called via HTTPS or other server protocols. Moving these responsibilities to the serverless layer allows the infrastructure provider to employ a specialized team that can ensure best practices are followed; the work of this team is then amortized across all the serverless consumers using the platform. Other common capabilities that may extend beyond “undifferentiated heavy lifting” include authorization, policy enforcement, and protocol translation.

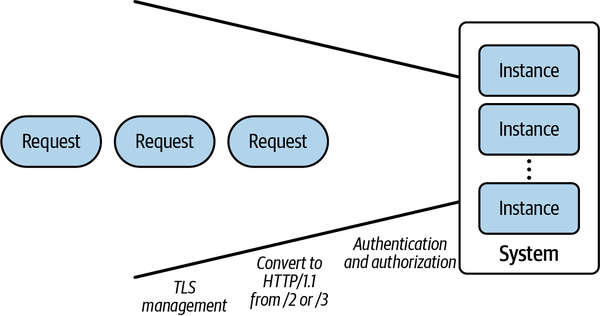

For a visual representation of the input transformations performed by Knative, see Figure 1-5.

Figure 1-5. Managing inputs

Authorization and policy enforcement are crucial capabilities in many application scenarios. In a traditional architecture, this layer might be delegated to an outer application firewall or proxy, or to language-specific middleware. Middleware works well for single-language ecosystems, but maintaining a common level of capability in middleware across multiple languages is difficult. Firewalls and proxies extract this middleware into a common service layer, but the protected application still needs to validate that requests were sent by the firewall rather than by an attacker elsewhere on the internal network. Because serverless platforms control the request path until the work is delivered to the application, application authors do not have to worry about confirming that requests were properly handled by the infrastructure. This is another quality-of-life improvement.

The last quality-of-life improvement for application developers is the opportunity to translate external requests into units of work that may be more easily consumed by the target applications. Many modern protocols are complicated and require substantial libraries to implement correctly and efficiently. When the platform translates from a complex protocol (like HTTP/3 over UDP) to a simpler one (for example, HTTP/1.1, which is widely understood), application developers can select from a wider range of application libraries and programming languages.

Creature Comforts: Managing Process Lifecycle

As part of scaling and distributing work, serverless instances take an active role in managing the lifecycle of the application process, as well as any helper processes that may be spawned by the platform itself. Depending on the platform and the amount of work in-flight, the platform may need to stall (queue) that work until an instance is available to handle the request. Known as a cold start, this can occur even when there are already live application instances (for example, a sudden burst of work could exceed the capacity of the provisioned instances). Some serverless platforms may choose to overprovision instances to act as a buffer for these cases, while others may minimize the number of additional instances to reduce costs.

Typically, an application process within a serverless instance will go through the following lifecycle stages:

- 1. Placement

Before a process can be started, the instance must be allocated to an actual server node. It’s an old joke that there are actually servers in serverless; when choosing one, serverless platforms take several of the following conditions into account:

- Resource availability: Depending on the model and degree of oversubscription allowed, some nodes may not be a suitable destination for a new instance.

- Shared artifacts: Generally, two instances on the same server node can share application code artifacts. Depending on the size of the compiled application, this can result in substantially faster application startup time, as less data needs to be fetched to the node.

- Adjacency: If two instances of the same application are present on the same node, it may be possible to share some resources on the node (memory mappings for shared libraries, just-in-time [JIT] compiled code, etc.). Depending on the language and implementation, these savings can be sizable or nearly nonexistent.

- Contention and adversarial effects: In a multiapplication environment, some applications may compete for limited node resources (for example, L2 cache or memory bandwidth). Sophisticated serverless platforms can detect these applications and schedule them to different nodes. Note that a particularly optimized application may become its own adversary if it uses a large amount of a nonshared resource.

- Tenancy scheduling: In some environments, there may be strict rules about which tenants can share physical resources with other tenants. Strong sandbox controls within a physical node still cannot protect against novel attacks like Spectre or Rowhammer that circumvent the security model.

- 2. Initialization

Once the instance has been placed on a node, the instance needs to prepare the application environment. This may include the following:

- Fetching code artifacts: If not already present, code artifacts will need to be fetched before the application process can start.

- Fetching configuration: In addition to code, some instances may need specific configuration information (including instance-level or shared secrets) loaded into the local filesystem.

- Filesystem mounts: Some serverless systems support mounting shared filesystems (either read-only or read-write) onto instances for managing shared data. Others require the instance to fetch data during startup.

- Network configuration: The newly created instances will need to be registered in some way with the incoming load balancer in order to receive traffic. Depending on the number of load balancers, this may become a broadcast problem, since every load balancer needs to learn about the new instance. In some systems, instances also need to register with a network address translation (NAT) provider for outbound traffic.

- Resource isolation: The instance may need to configure isolation mechanisms like Linux cgroups to ensure that the application uses a fair amount of node resources.

- Security isolation: In a multitenant system, it may be necessary to set up sandboxes or lightweight VMs to prevent users from compromising adjacent tenants.

- Helper processes: The serverless environment may offer additional services such as log or metrics collection, service proxies, or identity agents. Typically, these processes should be configured and ready before the main application process starts.

- Security policies: These may cover both inbound and outbound network traffic policies that restrict what services the application process can see on the network.

- 3. Startup

Once the environment is ready, the application process is started. Typically, the application must perform some sort of setup and internal initialization before it is ready to handle work. This is often indicated by a readiness check, which may be either a call from the process to the outside environment or a health-check request from the environment to the process.

While the placement and initialization phases are largely the responsibility of the serverless platform, the startup phase is largely the responsibility of the application programmer. For some language implementations, the application startup phase can be the majority of the delay attributable to cold starts.

- 4. Serving

Once the application process has indicated that it is ready to receive work, it receives work units based on the platform policies. Some platforms limit in-flight work in a process to a single request at a time, while others allow multiple work items in-flight at once.

Typically, once a process enters the serving state, it will handle multiple work items (either in parallel or sequentially) before terminating. Reusing an active serving process in this way helps amortize the cost of placement, initialization, and startup and helps reduce the number of cold starts experienced by the application.
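The amortization argument can be written as a simple average. If a process handles N work items before it terminates, the one-time cost of placement, initialization, and startup is spread across all of them. The timings in the second line are purely illustrative, not measurements from any platform:

```latex
\bar{t}_{\text{overhead}} = \frac{t_{\text{placement}} + t_{\text{init}} + t_{\text{startup}}}{N}
\qquad\text{e.g.}\qquad
\frac{0.5\,\text{s} + 1\,\text{s} + 2.5\,\text{s}}{1000} = 4\,\text{ms per item}
```

With N = 1 the same process would add the full 4 seconds to its only work item, which is why platforms keep serving processes alive across many requests rather than starting one per request.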

- 5. Shutdown

Once the application process is no longer needed, the platform will signal that the process should exit. A platform may provide some of these guarantees:

- Draining: Before signaling termination, the platform will stop sending new work to the instance and ensure that no units of work are in-flight. This simplifies application shutdown, as the application does not need to block on any active work.

- Graceful shutdown: Some serverless platforms may provide a running process an opportunity to perform actions before shutting down, such as flushing caches, updating snapshots, or recording final application metrics. Not all serverless platforms offer a graceful shutdown period, so it’s important to understand whether these types of activities are guaranteed on your chosen platform.

- 6. Termination

Once the shutdown signal has been sent and any graceful shutdown period has elapsed, the process will be terminated, and all the helper processes, security policies, and other resources allocated during initialization will be cleaned up (possibly going through their own graceful shutdown phases). On platforms that bill by resources used, this is often when billing stops. Until termination is complete, the instance’s resources are generally considered “claimed” by the scheduler, so rapid turnover in instances may affect the serverless scheduler’s decisions.

Summary

Serverless computing and serverless platforms assume certain application behaviors; they will not completely replace existing systems and may not be a good fit for every application. For a variety of common application patterns, however, serverless platforms offer substantial technical benefits, and I expect the fraction of organizations and applications that incorporate serverless to continue to increase. With a helping of theory under our belts, the next chapter focuses on building a small serverless application so you can get a feel for how this theory applies in practice.

1 In “Task Queues”, we will talk briefly about scaling work over time rather than instance counts.

2 “Server units” could either represent virtual/physical machines or server processes running on a single instance. In both cases, serverless aims to enable developers to avoid having to think about either!

3 This is described in Andy Kimball’s blog post “How We Built a Serverless SQL Database”, outlining the architecture of CockroachDB Serverless (which I’ve summarized).
