Chapter 1. The IoT Landscape

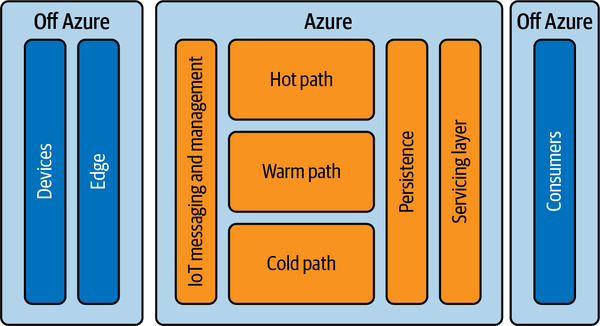

When contemplating the Internet of Things (IoT), you might first picture the countless devices woven into our modern landscape. IoT has permeated practically every part of our lives, from the simplest light bulb to deployments on a monumental scale in smart cities. These devices are aptly dubbed the “things” in IoT. To grasp the whole picture, though, you need to step back to the metaphorical 40,000-foot view from an airplane. From there, you see not only the devices but the elaborate symphony of parts that work in harmonious collaboration around them. That view is the IoT Landscape: a panoramic picture of every element and process that sets these IoT devices in motion. It gives a taxonomy to each individual part, assigning it a purpose and place within the grand design. In this book, I’ll take you through the IoT Landscape specific to Azure, which offers a plethora of Microsoft-managed cloud services, edge components, and software development kits (SDKs) to choose from. So, without further ado, take your first look at the Azure IoT Landscape shown in Figure 1-1.

Figure 1-1. The IoT Landscape for Azure

This book explores each facet of this landscape and the interplay of each part within a comprehensive IoT solution. Think of it as a voyage that starts on the left side (with devices) and generally moves to the right. Along the way, you’ll pass significant junctures, including edge computing, IoT management and messaging, data pathways, data persistence, data servicing, and the expansive sphere of data consumers. To acclimate you to the voyage, let’s begin with an overview of each point on the journey. This prelude will chart your course, starting with devices.

Off Azure

The first section of the Azure IoT Landscape is, oddly enough, the things that exist outside of Azure: devices and edge computing.

IoT Devices

An IoT device is harder to define exactly than you might think. A textbook definition of the “Internet of Things” is a network of interconnected devices that can collect, transmit, and receive data without direct human intervention. Some cases are obvious: a smart light bulb or connected appliance is an IoT device. The line gets blurry, though, with things like televisions or media players. Other devices push the definition in the opposite direction because they are purpose-built to work with humans, like an excavator or a smart toothbrush. Notice what I said: they are purpose-built for a task, like moving dirt or cleaning your teeth. The device has a specialized purpose rather than a general purpose like a laptop or smartphone.

IoT devices generally, though, have some common characteristics. With devices, you almost always have connectivity for getting the device to talk to the internet, sensors for collecting data, data processing on the device to deal with the collected data, and actuators for responding to commands. Combining these things can create a complex or simple device, depending on the context.
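To make those four characteristics concrete, here is a minimal sketch in plain Python of a hypothetical smart thermostat. Everything in it (the `SmartThermostat` class, its fields, and the command format) is an illustrative assumption, not an Azure API: a callable stands in for the sensor, a boolean for the actuator, and a list for the outbound connection.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class SmartThermostat:
    """Hypothetical device that combines the four common parts of an IoT device."""

    read_sensor: Callable[[], float]                  # sensor: collects data
    setpoint_c: float = 21.0
    heater_on: bool = False                           # actuator state
    outbox: List[Dict] = field(default_factory=list)  # stand-in for connectivity

    def step(self) -> None:
        """Read the sensor, decide locally, drive the actuator, and queue telemetry."""
        temp_c = self.read_sensor()
        # On-device processing: a simple rule decides whether to heat.
        self.heater_on = temp_c < self.setpoint_c
        # Connectivity: queue a telemetry message for upload to the cloud.
        self.outbox.append({"temperature_c": temp_c, "heater_on": self.heater_on})

    def handle_command(self, command: Dict) -> None:
        """Respond to a cloud command by changing the device's behavior."""
        if "setpoint_c" in command:
            self.setpoint_c = float(command["setpoint_c"])


if __name__ == "__main__":
    readings = iter([19.5, 22.0])
    thermostat = SmartThermostat(read_sensor=lambda: next(readings))
    thermostat.step()                               # 19.5 < 21.0, so the heater turns on
    thermostat.handle_command({"setpoint_c": 23})
    thermostat.step()                               # 22.0 < 23.0, so it stays on
    print(thermostat.outbox)
```

Real firmware would typically target the device's own platform and use a device SDK for connectivity; the sketch only shows how the parts fit together.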

Because devices vary enormously, from light bulbs to traffic cameras to bulldozers to heart monitors, it’s impossible to address all of them. Even generalizing is daunting because there always seem to be exceptions. In Chapter 2, I’ll take you through Microsoft’s Azure-centric hardware offerings, and we’ll study how they work together for different purposes. I’ll also show you the different software offerings for constrained and unconstrained devices. Constrained devices (also known as resource-constrained or edge devices) are IoT devices with limited computing power, memory, energy, and communication capabilities. They are designed to perform specific tasks with minimal resources and are often deployed in remote or challenging environments where frequent maintenance or resource replenishment might be difficult. Unconstrained devices (cloud-connected or gateway devices) have higher computational power, memory, and communication capabilities than constrained devices.

In Chapter 3, I’ll show you how to get started with exploring IoT devices without needing to invest in hardware right away.

Devices ultimately connect to the internet. They can do that directly in some cases, but in others, they may use a proxy that assists the devices in their work. Such a proxy would be part of edge computing.

Edge Computing

Edge computing is a decentralized approach to data processing where computations occur closer to the data source, reducing latency and enhancing real-time decision making. It involves processing data at or near the devices or local servers rather than sending all data to a centralized cloud server.
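A rough illustration of why processing near the source saves bandwidth: the sketch below, which assumes a hypothetical 1 Hz temperature sensor, collapses ten minutes of raw samples into one summary message before anything is sent upstream. The function name and message fields are made up for illustration.

```python
import json
from statistics import mean
from typing import Dict, List


def summarize_at_edge(readings: List[float], threshold: float) -> Dict:
    """Reduce a batch of raw sensor readings to one compact summary message."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        # Decided locally, so an urgent condition doesn't wait on a cloud round-trip.
        "alert": max(readings) > threshold,
    }


if __name__ == "__main__":
    # Hypothetical ten minutes of 1 Hz temperature samples.
    raw = [20.0 + (i % 5) * 0.1 for i in range(600)]
    summary = summarize_at_edge(raw, threshold=30.0)
    print("raw payload bytes:", len(json.dumps(raw).encode()))
    print("summary payload bytes:", len(json.dumps(summary).encode()))
```

The trade-off is that the cloud no longer sees individual samples, so what to summarize and what to forward raw is a design decision for each solution.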

Edge computing extends the cloud into a data center, rather than treating the cloud as an extension of the data center, which is more of a hybrid model. It is especially valuable for applications that require quick responses, low latency, efficient use of network bandwidth, or support for offline and intermittently connected operation. Edge computing suits scenarios where immediate data processing is essential, such as industrial automation, remote monitoring, and smart cities. IoT, therefore, is a pertinent topic in the scope of edge computing.
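Intermittent connectivity is one scenario where edge logic helps. A common pattern is store-and-forward: buffer messages locally while the link is down and send them in order when it returns. The following is a minimal, hypothetical sketch of that idea; the `send` callable stands in for whatever uplink a device actually uses. Azure IoT Edge provides its own offline buffering, so you would not normally hand-roll this on an IoT Edge device.

```python
from collections import deque
from typing import Callable, Deque, Dict


class StoreAndForward:
    """Buffer messages locally while offline and flush them in order once the link returns."""

    def __init__(self, send: Callable[[Dict], bool], max_buffered: int = 1000) -> None:
        self._send = send  # hypothetical uplink: returns True if the upload succeeded
        # Bounded buffer: when full, the oldest message is dropped to make room.
        self._buffer: Deque[Dict] = deque(maxlen=max_buffered)

    def publish(self, message: Dict) -> None:
        self._buffer.append(message)
        self.flush()

    def flush(self) -> int:
        """Try to upload buffered messages oldest-first; stop at the first failure."""
        sent = 0
        while self._buffer:
            if not self._send(self._buffer[0]):
                break  # still offline: keep this message and everything after it
            self._buffer.popleft()
            sent += 1
        return sent

    def pending(self) -> int:
        return len(self._buffer)
```

Dropping the oldest message when the buffer fills is one policy among several; a device with critical telemetry might instead persist to flash or drop the newest data.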

As of this writing, edge computing is a hot topic, with a flurry of activity in the Microsoft space to bring Azure closer to the edge. Within this context, Microsoft has two major initiatives encompassing many different resources. Specifically for IoT, Azure offers the Azure IoT Edge runtime, a lightweight container orchestration platform for building modular IoT devices and edge appliances. Beyond that, Azure offers Azure Arc, a general-purpose tool for extending the Azure control plane into off-Azure environments. One of its biggest abilities is managing Kubernetes clusters, and users can deploy services like stream processing, SQL Server, machine learning, and messaging to the edge through Arc. IoT Edge runs its modules as Docker-compatible containers, and Arc builds on Kubernetes, which orchestrates containers, so containers are part of both solutions and you can’t ignore them! Chapter 6 goes into detail about the edge options for Azure.

These different technologies make edge computing one of the most exciting parts of IoT solutions. Even if you don’t plan on using edge computing, you can incorporate some of its techniques into your devices. Regardless of how you connect your devices to the internet, they will need a place online where they are managed. That’s the next part of the IoT Landscape.

Azure

Now, we come to the IoT Landscape that exists in Azure. Here, we have IoT messaging and management, data processing, data persistence, and data presentation.

IoT Messaging and Management

IoT messaging and management encompasses a massive set of interrelated topics. A cloud solution like Azure IoT Hub combines them into a platform-as-a-service (PaaS) offering that you can leverage as a solution builder. Here’s what Azure IoT Hub and its related services provide for your devices in terms of IoT messaging and management:

- Device registration and provisioning: Devices must be registered within IoT Hub to establish secure communication. The Azure IoT Hub Device Provisioning Service (DPS) provides mechanisms for automatic device provisioning, enabling devices to join the network securely.

- Secure communication: Devices connect to Azure IoT Hub using secure communication protocols such as MQTT, AMQP, or HTTPS. Security over the internet is maintained through device authentication, authorization, and TLS encryption of whichever of these protocols the device uses.

- Device twins and desired properties: Twinning is a way of tracking device settings in the cloud without having to query each device directly. A “device twin” maintained by Azure IoT Hub represents the device’s state and metadata. Device twins store desired properties, which the cloud application sets, and reported properties, which the device updates.

- Cloud-to-device (C2D) messaging: Azure IoT Hub enables cloud applications to send commands or messages to individual devices or groups of devices. It provides APIs and routing capabilities for these messages. Devices receive these messages and can act on them accordingly.

- Device-to-cloud (D2C) messaging: Devices can send telemetry data, status updates, and other information to the cloud. Azure IoT Hub provides many ways to move this data to downstream data processing engines for analysis and monitoring, like databases, Azure Functions, Logic Apps, and Stream Analytics.

- Device management and monitoring: Azure IoT Hub provides tools to monitor the health and status of connected devices. Devices can report their state, and cloud applications can query this information for troubleshooting and maintenance.

- Edge device management: We already discussed Azure IoT Edge. Azure IoT Hub supports managing IoT devices at the edge, where data processing occurs closer to the data source, and Azure IoT Edge extends this capability by deploying and managing containerized workloads on edge devices.

- Scalability and performance: Azure IoT Hub is designed to handle large numbers of devices and high message throughput. With thousands of devices connected at a time, the potential data deluge is daunting, so IoT Hub offers tiered options for scaling to accommodate diverse IoT scenarios.
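The device twin idea is easier to see in code. The following toy sketch keeps twins in an in-memory dictionary, with desired properties written by the cloud side and reported properties written by the device, and shows how a back end can find out-of-sync devices without contacting any of them. It is not the IoT Hub service or SDK API: the class and method names are hypothetical, and real twins also carry tags and `$version` metadata that this sketch omits.

```python
import copy
from typing import Dict, List


class DeviceTwinRegistry:
    """Toy, in-memory stand-in for a cloud-side twin store (not the IoT Hub API)."""

    def __init__(self) -> None:
        self._twins: Dict[str, Dict] = {}

    def register(self, device_id: str) -> None:
        self._twins[device_id] = {
            "deviceId": device_id,
            "properties": {"desired": {}, "reported": {}},
        }

    def set_desired(self, device_id: str, patch: Dict) -> None:
        """Cloud side: a back-end application writes desired properties."""
        self._twins[device_id]["properties"]["desired"].update(patch)

    def report(self, device_id: str, patch: Dict) -> None:
        """Device side: the device writes reported properties after applying a change."""
        self._twins[device_id]["properties"]["reported"].update(patch)

    def get_twin(self, device_id: str) -> Dict:
        # Return a copy so callers can't mutate the stored twin by accident.
        return copy.deepcopy(self._twins[device_id])

    def out_of_sync(self) -> List[str]:
        """Answered from stored twins alone, without contacting any device."""
        stale = []
        for device_id, twin in self._twins.items():
            desired = twin["properties"]["desired"]
            reported = twin["properties"]["reported"]
            if any(reported.get(key) != value for key, value in desired.items()):
                stale.append(device_id)
        return sorted(stale)
```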

Chapter 4 dives into all things related to device lifecycle management. This process encompasses everything from a device’s inception to the day it’s offloaded and no longer used. It entails device design, device provisioning, device maintenance, and finally, device decommissioning and disposal.

Chapter 5 covers what happens during a device’s main sequence, when it communicates with the cloud through device-to-cloud and cloud-to-device messaging.

As you can see, there’s no one thing that IoT Hub does for managing messaging and devices at scale. It’s the central nervous system of your IoT solution. It brokers the relationship between your potentially thousands of devices and the downstream systems that handle the telemetry and data coming off those devices through data processing.
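As a rough picture of that brokering role: IoT Hub message routing evaluates conditions against each incoming message and delivers it to every matching endpoint, with an optional fallback route for messages that match nothing. The sketch below mimics that behavior with Python lambdas standing in for route queries. Real IoT Hub routes use IoT Hub's own query syntax, and the route and endpoint names here (including `"events"` as a placeholder for the built-in endpoint) are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# (route name, condition, endpoint name); all names here are hypothetical.
Route = Tuple[str, Callable[[Dict], bool], str]


def route_message(message: Dict, routes: List[Route], fallback: str = "events") -> List[str]:
    """Return every endpoint whose condition matches, or the fallback if none match."""
    matched = [endpoint for _name, condition, endpoint in routes if condition(message)]
    return matched or [fallback]


ROUTES: List[Route] = [
    ("critical-alerts", lambda m: m.get("level") == "critical", "alert-queue"),
    ("temperature", lambda m: "temp_c" in m, "stream-analytics"),
]
```

A message can match several routes at once, which is how one telemetry stream can feed both an alerting path and an analytics path.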

Data Processing

Data processing is a crucial component of IoT solutions that involves managing, preparing, and analyzing the vast amounts of data generated by IoT devices. It ensures data is collected, transformed, and used effectively to extract insights and drive meaningful actions.

Data processing involves gathering data from these devices, integrating data from diverse sources into datasets, storing the collected data appropriately in databases or data lakes, cleaning and transforming it to ensure its quality and consistency, and ultimately presenting it with well-designed data schemas so it can be queried and analyzed.

There are many ways to do this, but broadly you can think of them as real-time processing and batch processing. Real-time processing involves immediate analysis of streaming data from IoT devices, enabling quick responses to anomalies and triggering alerts or actions. Batch processing analyzes historical data in predefined batches to uncover trends, patterns, and correlations that offer insights into past events. This approach is particularly valuable for long-term analysis.
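The difference between the two styles shows up clearly in a small sketch: a real-time check looks at each event as it arrives, while a batch job aggregates a stored set of events afterward. The event shape (`device`, `value`) and the alert threshold are assumptions for illustration only.

```python
from statistics import mean
from typing import Dict, List, Optional


def realtime_check(event: Dict, limit: float) -> Optional[str]:
    """Real-time: evaluate each event the moment it arrives and react immediately."""
    if event["value"] > limit:
        return f"ALERT {event['device']}: {event['value']}"
    return None


def batch_summary(events: List[Dict]) -> Dict[str, float]:
    """Batch: aggregate a stored set of events after the fact, one mean per device."""
    by_device: Dict[str, List[float]] = {}
    for event in events:
        by_device.setdefault(event["device"], []).append(event["value"])
    return {device: round(mean(values), 2) for device, values in by_device.items()}
```

The real-time function needs only the current event, while the batch function needs the whole history; that difference largely drives which Azure services fit each style.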

In both cases, data is summarized and consolidated through aggregation, reducing its volume while retaining its informative content. More advanced techniques like machine learning and artificial intelligence are employed to perform complex analytics, identify hidden patterns, and predict future outcomes. The outcome of data processing is actionable insights that guide decision making, optimize operations, and enhance overall efficiency. All of these techniques are part of data architecture.

Data architecture provides a general framework for how one thinks about data processing. It spans from data collection to insightful outcomes. It encompasses methodologies, tools, and strategies for efficiently handling large volumes of data from sources like IoT devices. It looks at data sources, how data is received, how data is integrated, how data is transformed, how data is processed, and how data is presented. When designing data processing architecture, factors such as real-time versus batch processing, data latency, data quality, integration with existing systems, and scalability need to be considered.

Chapter 7 starts the second half of the book, shifting focus to creating scalable data solutions on Azure. Chapter 8 extends the processing concepts by looking at three different architectures commonly used for IoT data.

While I just named two broad categories, real-time and batch-style processing, you can add more nuance with hot, warm, and cold paths. Each of these takes a different approach to data processing within a data architecture.

Hot paths

“Hot path” data refers to the portion of data within a system that requires immediate or real-time processing to facilitate quick decision making, rapid responses, and timely actions. (Hot path is usually thought of as “fast” processing, but it should not be conflated with speed because speed is not the only concern.) Hot path data is processed in real time or near real time, allowing for rapid analysis and decision making. It often contains critical information that, when acted upon swiftly, can prevent or mitigate issues, optimize processes, or enhance user experiences. These actions could be automated responses, user notifications, or system parameter adjustments. In the context of IoT, hot path data entails telemetry events that feed into systems that provide real-time and near real-time responses to data. A canonical example of a hot path telemetry event may be a smoke detector sending a signal to get the fire department’s attention.

Azure provides tooling for managing this through options like Azure Stream Analytics, Azure Functions, Azure Event Grid, Azure Event Hubs, Azure Service Bus, and other real-time processing capabilities. These all work well together to make complete solutions for IoT hot paths. I’ll talk about hot paths in more detail in Chapter 9. Still, there are more than hot paths. There’s something way cooler: warm paths.

Warm paths

Warm path data represents a category that holds a position between “hot path” and “cold path” data regarding its urgency and processing frequency. It requires faster processing than cold path data but not the immediacy of hot path data. As with a hot path, speed is important, but it’s not the only factor. Warm path data provides insights and supports decisions that can tolerate a brief processing delay.

Unlike hot path data that demands immediate action, warm path data is processed with moderate latency, falling into near real-time or slightly delayed analysis. Its purpose is to contribute insights that aid ongoing monitoring, optimization efforts, and decisions requiring timely attention without needing instant reactions. Warm path data is utilized in scenarios where efficiency and optimization are priorities. These situations benefit from insights that are prompt enough to drive action but don’t require an immediate response.

Regarding data processing architecture, warm path data is typically processed using techniques like stream processing that balance real-time insight against a slight processing delay. This ensures that the data becomes actionable insight within a timeframe that aligns with the needs of operational decision making and ongoing optimization. In essence, warm path data lets organizations balance the urgency of hot path data with the longer-term insights derived from cold path data. For IoT, a warm path gives you insight into ongoing operations, monitoring, and optimization within a timeframe that balances prompt analysis with acceptable delay. It provides situational awareness by revealing changes or trends in IoT device behavior, performance, or environmental conditions. See Chapter 10 for more details on warm paths.

Azure supports warm paths largely through time-series analysis, and Azure Data Explorer and Azure Stream Analytics are the primary services that accomplish this. Data Explorer is more of a database service but has the compute capacity and integrated services to make it useful for warm paths. Stream Analytics can also look at historical data in time windows. But for large windows of time, or when data does not need prompt processing, you can consider the next type: cold paths.
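Time windows are the core warm path mechanic. Below is a minimal sketch of a tumbling window (the fixed-size, non-overlapping kind that Stream Analytics also offers), written in plain Python over `(timestamp, value)` pairs. The input shape is an assumption, and Stream Analytics' exact window-boundary semantics may differ from this sketch, which assigns each event to the window starting at or before its timestamp.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tumbling_window_avg(events: Iterable[Tuple[int, float]],
                        window_seconds: int) -> Dict[int, float]:
    """Average values in fixed-size, non-overlapping windows keyed by window start time."""
    sums: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for ts, value in events:
        start = ts - (ts % window_seconds)  # align the timestamp to its window's start
        sums[start] += value
        counts[start] += 1
    return {start: sums[start] / counts[start] for start in sorted(sums)}
```

Widening `window_seconds` moves the result from warm toward cold territory: fewer, coarser results that arrive later.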

Cold paths

Cold path data is a category in data processing and analytics characterized by its focus on long-term storage and historical analysis. It’s not exactly synonymous with batch processing, but it often is implemented as such. Unlike data in the hot or warm path, cold path data is stored and processed with a lower frequency of access. It serves purposes such as historical reporting, trend analysis, and gaining deep insights that don’t demand rapid responses. Processing of cold path data doesn’t require immediate urgency and is subject to longer intervals, typically ranging from hours to days. Its primary utility lies in retrospective analysis, identifying trends, historical patterns, and anomalies that have occurred over a substantial period. It’s also useful as an integration pattern for more traditional workloads that leverage more conventional extract, transform, and load (ETL) styles of integrations.
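To illustrate the ETL style mentioned above, here is a toy batch job that extracts raw JSON lines, transforms them into per-device daily rollups, and loads the result into a list standing in for a warehouse table. The record fields (`device`, `ts`, `temp_c`) are hypothetical.

```python
import json
from collections import defaultdict
from datetime import datetime, timezone
from typing import Dict, Iterable, Iterator, List, Tuple


def extract(lines: Iterable[str]) -> Iterator[Dict]:
    """Extract: parse raw JSON lines, skipping any that are malformed."""
    for line in lines:
        try:
            yield json.loads(line)
        except json.JSONDecodeError:
            continue


def transform(records: Iterable[Dict]) -> List[Dict]:
    """Transform: drop incomplete records, then roll readings up per device and UTC day."""
    buckets: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for record in records:
        if not all(key in record for key in ("device", "ts", "temp_c")):
            continue
        day = datetime.fromtimestamp(record["ts"], tz=timezone.utc).date().isoformat()
        buckets[(record["device"], day)].append(record["temp_c"])
    return [
        {"device": device, "day": day, "readings": len(values), "max_temp_c": max(values)}
        for (device, day), values in sorted(buckets.items())
    ]


def load(rows: List[Dict], table: List[Dict]) -> int:
    """Load: append the rollups to a target table (a list stands in for a warehouse)."""
    table.extend(rows)
    return len(rows)
```

In Azure, tools such as Data Factory or Databricks would play these roles at scale; the three-stage shape stays the same.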

Organizations tend to store cold path data in cost-effective storage solutions because frequent and fast access is not a central requirement. Cold path data also matters where regulatory compliance is essential, because many industries must retain historical data for auditing purposes. For businesses, cold path data contributes to generating historical reports, tracking performance over time, and making informed decisions based on a comprehensive historical context.

For IoT, cold path data’s contribution extends to predictive analytics, such as when machine learning models are trained on historical patterns for forecasting. It aids in root cause analysis, enabling detailed investigations into past incidents or anomalies. Moreover, businesses utilize cold path data to plan for the future by understanding the consequences of past decisions and events. I focus on cold paths in Chapter 10.

In data processing architectures, Azure Data Factory, Azure Synapse, Azure Databricks, and Azure HDInsight provide the compute resources that work with storage to make cold paths work. Cold path data is stored in data warehouses such as Azure Synapse, in data lakes such as Azure Data Lake Storage, in specialized archival systems, or in something as simple as object storage like Azure Blob Storage. All of these are part of data persistence.

Data Persistence

Unless you deal only with transient data, you will need to store it somewhere. Even transient data systems, like message queues, will persist data for a while.

Data persistence in the context of data processing refers to the methods and mechanisms used to store and maintain data beyond its initial processing or input. It ensures that data remains accessible, retrievable, and usable for various purposes such as analysis, reporting, and future processing. Different kinds of data persistence serve various needs in data processing architectures:

- Temporary or volatile storage: Temporary storage involves holding data in memory or cache for immediate processing. This type of persistence is short-lived and is primarily used during the immediate processing stage. Once the processing is complete, data in temporary storage is discarded. It’s suitable for holding data that needs to be accessed frequently and quickly during processing, but it’s not intended for long-term retention. You’ll encounter this kind of storage in services like Azure Service Bus, Azure Event Grid, and stream processing technologies like Azure Stream Analytics. Stream storage, a related form, manages real-time data streams generated by IoT devices, sensors, or other sources, allowing streaming data to be stored and processed immediately for real-time analytics and decision making.

- Raw storage or data lakes: Data lakes are repositories that store raw, unprocessed data in its original format. This form of persistence is ideal for storing diverse data types, such as structured, semi-structured, and unstructured data, without immediate structuring or transformation. Data lakes facilitate future processing and analysis, enabling organizations to derive insights from data as needs arise. Batch processing and ETL tools use data lakes and raw storage like Blob Storage as part of their processing.

- Structured storage or data warehouses: Structured storage, commonly found in data warehouses, involves storing data in a structured format that is optimized for query and analysis. This type of persistence is particularly suitable for processed and transformed data that is ready for reporting, analytics, and business intelligence. Data warehouses provide optimized performance for querying large datasets and generating insights, while databases are useful for querying data in transactional workloads. For IoT, there’s no one database that does everything well, but Azure Cosmos DB and Azure Data Explorer provide some level of transactional storage while Azure Synapse provides more analytic storage.
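The difference between raw and structured persistence can be sketched with the standard library: payloads land untouched in a list standing in for a data lake, and a later step projects them into a typed SQLite table for querying. SQLite is only a local stand-in for a warehouse or database here, and the payload fields are hypothetical.

```python
import json
import sqlite3
from typing import List


def land_raw(lake: List[str], payload: str) -> None:
    """Raw storage: keep the payload exactly as received, for future reprocessing."""
    lake.append(payload)


def load_structured(conn: sqlite3.Connection, lake: List[str]) -> int:
    """Structured storage: project raw payloads into a typed, queryable table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS telemetry (device TEXT, ts INTEGER, temp_c REAL)"
    )
    rows = [(r["device"], r["ts"], r["temp_c"]) for r in map(json.loads, lake)]
    conn.executemany("INSERT INTO telemetry VALUES (?, ?, ?)", rows)
    conn.commit()
    return len(rows)
```

Note that fields the table doesn't model (a firmware version, say) survive only in the raw copy, which is why keeping raw data makes later reprocessing possible.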

While no one chapter is dedicated to data persistence, this book talks about persistence quite a bit, especially in the context of data processing. In an IoT solution, you’re likely to run into many different kinds of data storage. You’ll use these as part of your data movement and processing.

In data processing architectures, especially IoT, combining these data persistence methods creates different processing and analysis stages. Temporary storage aids immediate processing, data lakes store raw data, structured storage enables efficient querying, and archival storage ensures data retention. All of these, at some level, support a data presentation layer.

Data Presentation Layer

In software architecture and system design, a presentation layer, sometimes called a servicing layer, refers to a component or set of features that facilitate the interaction between different parts of a system. It is an intermediary that handles various communication-related tasks, data processing, and exposure to functionality. The primary purpose of a servicing layer is to provide a unified and standardized interface for different clients, allowing them to access services and resources consistently. This layer helps abstract the underlying complexity of the system’s components and provides a cohesive interface for users and other systems to interact with.

By acting as a bridge between clients (consumers) and core functionalities, the servicing layer abstracts technical intricacies, allowing users to interact without needing to understand the inner workings. It manages the exchange of data between these entities, ensuring smooth communication through various protocols and transformations. It’s, in effect, a façade pattern atop complex data processing. A servicing layer provides security for data, APIs for serving data, push deliveries to data subscribers, data caches for download, and many other things that data consumers use.

This layer also addresses cross-cutting concerns such as logging, monitoring, and error handling, which are essential to maintaining a stable and well-monitored environment. The servicing layer can be designed for scalability, enabling the system to handle increased traffic and demand without compromising performance. Its role is vital in creating a simple, secure, and efficient bridge between the data and consumers. Chapter 11 dives into the servicing layer in great detail, where it talks about all the nuances of each of these styles of data exposure.
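A façade can be as small as one method. In this hypothetical sketch, `TelemetryService` hides the backing store behind `latest_readings()`, checks a caller's key, and caches results briefly. The API-key check is a toy stand-in for real authentication, and the backend query function is an assumption supplied by the caller.

```python
import time
from typing import Callable, Dict, List, Set, Tuple


class TelemetryService:
    """Façade over the data layer: callers see one method, not the storage details."""

    def __init__(self, query_store: Callable[[str], List[Dict]],
                 allowed_keys: Set[str], ttl_seconds: float = 30.0) -> None:
        self._query_store = query_store    # hypothetical backend query function
        self._allowed_keys = allowed_keys  # toy stand-in for real authentication
        self._ttl = ttl_seconds
        self._cache: Dict[str, Tuple[float, List[Dict]]] = {}

    def latest_readings(self, api_key: str, device_id: str) -> List[Dict]:
        if api_key not in self._allowed_keys:
            raise PermissionError("unknown API key")
        now = time.monotonic()
        cached = self._cache.get(device_id)
        if cached is not None and now - cached[0] < self._ttl:
            return cached[1]               # cache hit: the backend is not queried
        result = self._query_store(device_id)
        self._cache[device_id] = (now, result)
        return result
```

Because consumers depend only on `latest_readings()`, the backend could move from one store to another without any consumer changing.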

No single service in Azure provides a data presentation layer; however, the services used for it are typically forward-facing. For push-style deliveries, you may use simple file-transfer protocols like FTP. For web apps and data integrations, push-style delivery can be accomplished through webhooks, Azure Web PubSub, and Azure SignalR Service. Other integrations may use APIs, such as those exposed through Azure Functions, an API service, or a tool like Data API builder. All these tools and integration points provide a way for consumers to get the data they need for their purposes.

Data Consumers

In the expansive landscape of IoT, data consumers encompass a diverse set of entities and systems that find value in the data generated by IoT devices. These consumers are instrumental in translating data into meaningful insights and actionable information. Several distinct categories of data consumers emerge within this ecosystem:

- Some consumers demand immediate results from real-time data, acting swiftly on the incoming information to make instantaneous decisions and trigger responses. These real-time analytics and decision systems are essential for predictive maintenance, security breach detection, and event-driven operations. These systems consume APIs and push-style data integrations.

- Operational dashboards and monitoring tools, like Power BI, present real-time data and reporting. These tools provide an easy-to-comprehend snapshot of ongoing operations and performance metrics, supporting operational teams in managing resources effectively and identifying anomalies in a timely manner. They facilitate informed choices and strategy formulation by delivering insights from real-time, historical, and aggregated IoT data.

- Dataset consumers, such as predictive analytics and machine learning models, tap into historical and real-time IoT data, deducing patterns, trends, and insights that serve as a foundation for future predictions. These consumers are vital in optimizing processes, anticipating maintenance requirements, and guiding strategic planning.

- External applications and APIs utilize IoT data for integration into other software systems, applications, or services. They range from third-party applications enhancing functionality to data marketplaces offering access to specific IoT datasets. They are likely to consume APIs and real-time data feeds. Industry apps and consumer apps empower users with insights into their data. These applications allow users to make informed choices and remotely manage their IoT devices.

Each type of data consumer possesses unique demands and purposes. A robust data architecture and a well-crafted servicing layer must be in place to effectively serve this diverse array. This ensures that the data generated by IoT devices is accessible, relevant, and available in formats tailored to the needs of each consumer, enabling them to extract maximum value from the IoT ecosystem. Chapter 12 dives into this topic and gives you some practical examples of each.

Monitoring, Logging, and Security

The IoT Landscape encompasses different domains within the context of IoT, but monitoring, logging, and security are cross-cutting concerns on the Azure IoT Landscape. They’re like fertilizer, water, and sunlight that make crops grow well.

In the context of Microsoft Azure and IoT deployments on the Azure platform, monitoring, logging, and security are three crucial aspects to consider. These aspects are fundamental to ensuring the reliability, visibility, and protection of IoT systems and data. You need to monitor not only your devices but also your software systems and Azure itself.

Monitoring in an Azure IoT solution refers to the ongoing observation and measurement of system components, device behavior, and data flows. It involves using tools and services to track the performance, availability, and health of IoT resources. IoT Hub provides the data, but tools like Azure Monitor provide various monitoring capabilities, including real-time telemetry data visualization. Azure provides Microsoft Defender for IoT, Microsoft Defender for Cloud (formerly Azure Security Center), and Microsoft Sentinel (formerly Azure Sentinel) to gain insights into device activities, detect anomalies, and ensure that your IoT solution functions as expected. By monitoring IoT devices and infrastructure, you can proactively identify issues, optimize performance, and provide a smooth user experience.

Monitoring is based on logging, which systematically records events, activities, and interactions within an IoT solution. Azure IoT solutions can generate substantial data, and effective logging is essential for troubleshooting, auditing, and understanding system behavior. Azure provides Azure Log Analytics and Azure Monitor, which enable you to capture and store logs centrally. These logs can offer valuable insights into device behavior, application interactions, and system events, helping you diagnose problems and analyze historical data for improvements. Chapter 13 talks about how to monitor your Azure estate and how the available tooling captures and logs data.
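Logs are far easier to query centrally when each entry is structured. The sketch below uses Python's standard `logging` module with a small JSON formatter so that fields such as a device ID become searchable. The logger name and the `device_id` and `operation` fields are hypothetical, and how entries are shipped to Log Analytics is outside the scope of this sketch.

```python
import json
import logging
from typing import TextIO


class JsonFormatter(logging.Formatter):
    """Render each record as one JSON object so a log platform can index its fields."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            "time": self.formatTime(record, "%Y-%m-%dT%H:%M:%S"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Copy structured context passed via `extra=` (hypothetical field names).
        for key in ("device_id", "operation"):
            if hasattr(record, key):
                entry[key] = getattr(record, key)
        return json.dumps(entry)


def make_logger(stream: TextIO) -> logging.Logger:
    """Build a logger that writes one JSON line per event to the given stream."""
    logger = logging.getLogger("iot.ingest")
    logger.setLevel(logging.INFO)
    handler = logging.StreamHandler(stream)
    handler.setFormatter(JsonFormatter())
    logger.handlers = [handler]
    logger.propagate = False
    return logger
```

Usage might look like `log.warning("twin update rejected", extra={"device_id": "pump-01"})`, producing a single JSON line that can be filtered by `device_id`.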

Security is a cross-cutting concern in IoT deployments, and Azure offers robust features to protect your IoT solution. Azure IoT provides secure device provisioning, identity management, and authentication using technologies like X.509 certificates or symmetric device keys. Role-based access control (RBAC) allows you to control access to resources based on user roles, ensuring that only authorized individuals can manage and interact with IoT components. As mentioned, Microsoft provides Microsoft Defender for Cloud (formerly Azure Security Center) and Microsoft Sentinel for threat detection and protection, helping you identify and address potential security vulnerabilities in your IoT environment. Chapter 14 enumerates different threats related to IoT devices and offers strategies to mitigate these threats.
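With device keys, IoT Hub authenticates using shared access signature (SAS) tokens: an HMAC-SHA256 signature over the URL-encoded resource URI and an expiry time, keyed with the device's base64-encoded key. The following sketch follows that token format using only the standard library. The hub name, device ID, and key are made up, and in practice the Azure IoT device SDKs generate these tokens for you.

```python
import hmac
import time
from base64 import b64decode, b64encode
from hashlib import sha256
from typing import Optional
from urllib.parse import quote_plus, urlencode


def generate_sas_token(resource_uri: str, key_b64: str, ttl_seconds: int = 3600,
                       policy_name: Optional[str] = None,
                       now: Optional[float] = None) -> str:
    """Build an IoT Hub-style shared access signature (SAS) token from a base64 key."""
    expiry = int((time.time() if now is None else now) + ttl_seconds)
    # String to sign: URL-encoded resource URI, a newline, then the expiry (epoch seconds).
    string_to_sign = f"{quote_plus(resource_uri)}\n{expiry}"
    digest = hmac.new(b64decode(key_b64), string_to_sign.encode("utf-8"), sha256).digest()
    fields = {"sr": resource_uri, "sig": b64encode(digest).decode("ascii"), "se": str(expiry)}
    if policy_name is not None:
        fields["skn"] = policy_name  # only for hub-level shared access policies
    # urlencode() URL-encodes each value, including the base64 signature.
    return "SharedAccessSignature " + urlencode(fields)
```

The short expiry is the point of the design: a leaked token stops working on its own, while the device key itself never leaves the device.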

Monitoring, logging, and security are integral to Azure IoT deployments. Monitoring empowers you to oversee the health and performance of your solution in real time, logging enables effective troubleshooting and historical analysis, and security measures safeguard your IoT environment from unauthorized access and potential threats. These aspects collectively contribute to a reliable, well-managed, and secure IoT ecosystem on the Azure platform.

Conclusion

The last few pages have given you a distilled version of what this book covers. Each component has its role and significance within the IoT Landscape. There’s much to consider, from devices to data pathways and from edge computing to data servicing. Welcome to the forefront of innovation, where Azure IoT offerings stand ready as your gateway to new possibilities.
