Small brains, big data

How neuroscience is benefiting from distributed computing, and how computing might learn from neuroscience.

Addresses and papers (source: Internet Archive Book Images)

When we think about big data, we usually think about the web: the billions of users of social media, the sensors on millions of mobile phones, the thousands of contributions to Wikipedia, and so forth. Due to recent innovations, web-scale data can now also come from a camera pointed at a small but extremely complex object: the brain. New progress in distributed computing is changing how neuroscientists work with the resulting data, and may, in the process, change how we think about computation.

The brain consists of many neurons — a hundred thousand in a fly or larval zebrafish, millions in a mouse, billions in a human. Its function depends on the neurons’ activity, and how they communicate with one another. For a long time, recordings of neural activity were restricted to a few neurons at once, but several recent advances are making it possible to monitor responses across thousands, and in some cases (like the larval zebrafish), the entire brain.

Many of these methods are optical: animals are genetically engineered so that their neurons literally light up when active, and special forms of microscopy capture images of these activity patterns while animals perform a variety of behaviors (see these two papers here and here for an example of such technology, developed at Janelia Farm Research Campus in the labs of Misha Ahrens and Philipp Keller). The resulting data, arriving at terabytes per hour, pose significant challenges for analytics and understanding. They require both low-level processing (“munging”) and high-level analytics. We have to look at each data set in many ways: by relating neural responses to aspects of an animal’s behavior or the experimental protocol, for example, or by identifying patterns in the correlated activity of large ensembles. We never know the answer ahead of time, and sometimes we don’t even know where to start.

Our field requires tools for exploring large data sets interactively, and for flexibility in developing new analyses. Until now, single-workstation solutions (e.g. running MATLAB on one powerful machine) have been the norm in neuroscience, but these solutions scale poorly. Among distributed computing alternatives, we have found that the Apache Spark platform offers key advantages. First, Spark’s abstraction for in-memory caching makes it possible to subject a large data set to multiple queries, each returning within seconds or minutes, which facilitates data exploration. Second, Spark offers powerful, flexible, and intuitive APIs in Scala, Java, and Python. The Python API is particularly appealing because it lets us combine Spark with a variety of existing Python tools for scientific computing (NumPy, SciPy, scikit-learn) and visualization (matplotlib, seaborn, mpld3).

Using Spark as a platform for large-scale computations, we are developing an open source library called Thunder that expresses common workflows for analyzing spatio-temporal data in a modular, user-oriented package, entirely in Python. (This library, and example applications, are described in this recently published paper, written in collaboration with the lab of Misha Ahrens.)

Many of our analyses create statistical “maps” of the brain by relating brain responses to properties of the outside world. If a zebrafish is presented with patterns that are moving in different directions, for instance, we can compute a map that captures how each neuron’s response relates to the different directions. This is much like a map of voting preferences; people vote for candidates, and neurons vote for directions. In another example, we compared neuronal responses to the animal’s swimming, finding that much of the brain responded most when the animal was swimming, but a distinct subset responded when it was not swimming — the function of these neurons remains a mystery.
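To make the "neurons vote for directions" idea concrete, here is a minimal sketch of one common way to summarize direction tuning: a response-weighted vector sum over the stimulus directions. It is an illustration under stated assumptions, not necessarily the method used in Thunder or in the published analyses. The function name `direction_tuning`, the toy neurons, and the four stimulus directions are all hypothetical, and responses are assumed to be non-negative.

```python
import cmath
import math

def direction_tuning(responses, directions_deg):
    """Summarize one neuron's tuning from its mean response per direction.

    Returns (preferred_direction_deg, selectivity), where selectivity is 0
    for a neuron that responds equally to all directions and 1 for a neuron
    that responds to a single direction only. Assumes non-negative responses.
    """
    total = sum(responses)
    if total <= 0:
        return None, 0.0
    # Each direction casts a "vote" weighted by the response it evoked.
    vector = sum(
        r * cmath.exp(1j * math.radians(d))
        for r, d in zip(responses, directions_deg)
    )
    preferred = math.degrees(cmath.phase(vector)) % 360.0
    selectivity = abs(vector) / total
    return preferred, selectivity

# Hypothetical toy data: mean response of three neurons to four directions.
directions = [0, 90, 180, 270]
neurons = {
    "upward": [1.0, 5.0, 1.0, 1.0],    # strongest response at 90 degrees
    "leftward": [1.0, 1.0, 5.0, 1.0],  # strongest response at 180 degrees
    "untuned": [2.0, 2.0, 2.0, 2.0],   # no preference
}

# The "map": one (preferred direction, selectivity) pair per neuron.
tuning_map = {name: direction_tuning(r, directions) for name, r in neurons.items()}
for name, (preferred, selectivity) in tuning_map.items():
    print(name, round(preferred, 1), round(selectivity, 3))
```

In a whole-brain setting the same per-neuron computation would be applied independently to every neuron or pixel, which is what makes this kind of map straightforward to distribute. Note that the preferred direction of an untuned neuron is meaningless, so in practice the selectivity value is used to mask or dim such entries.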

Such maps are static, but our data are fundamentally dynamic because neuronal activity varies over time. A family of approaches based on dimensionality reduction (reviewed here) starts from high-dimensional time-series data and recovers low-dimensional representations that capture key dynamic properties, albeit in a simplified form. These analyses, which examine the entire data set at once, are particularly dependent on distributed computing.
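As a small illustration of the idea, the sketch below applies principal component analysis (PCA), one of the simplest dimensionality reduction methods, to a synthetic neurons-by-time matrix using NumPy on a single machine. It is a sketch under stated assumptions rather than the distributed implementation: the function name `pca_time_series`, the synthetic data, and the choice of two components are all hypothetical.

```python
import numpy as np

def pca_time_series(data, n_components=2):
    """PCA of a (n_neurons, n_timepoints) array via the SVD.

    Returns per-neuron weights, low-dimensional temporal trajectories,
    and the fraction of variance explained by each kept component.
    """
    # Remove each neuron's mean so components describe fluctuations over time.
    centered = data - data.mean(axis=1, keepdims=True)
    u, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained = s**2 / np.sum(s**2)
    return u[:, :n_components], vt[:n_components], explained[:n_components]

# Synthetic example: 50 neurons whose activity mixes two hidden signals.
rng = np.random.default_rng(0)
t = np.linspace(0, 2 * np.pi, 200)
latents = np.vstack([np.sin(t), np.cos(3 * t)])  # shape (2, 200)
mixing = rng.normal(size=(50, 2))                # each neuron's loading on the signals
data = mixing @ latents + 0.01 * rng.normal(size=(50, 200))

weights, trajectories, explained = pca_time_series(data, n_components=2)
print(weights.shape, trajectories.shape, explained.sum())
```

Here `trajectories` holds the two time courses that summarize all 50 neurons, and `weights` says how strongly each neuron participates in each one. The SVD needs the whole matrix at once, which is why analyses of this kind become a distributed computing problem at whole-brain scale.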

Our long-term goal is to use these techniques to uncover principles of neural coding. Most neuroscientists agree that the brain is an engine of computation, and a remarkably effective one: consuming less power than a laptop, the brain can recognize an object in milliseconds, navigate an environment full of obstacles, and coordinate sophisticated motor plans. A long-standing idea is that understanding these capabilities will in turn stimulate advances in artificial intelligence. Indeed, so-called neural networks, including the recent wave of deep belief networks, mimic aspects of brain architecture: they are built from many “neuron”-like nodes that pass signals to one another. Some of these networks solve tasks, like object and voice recognition, impressively well.

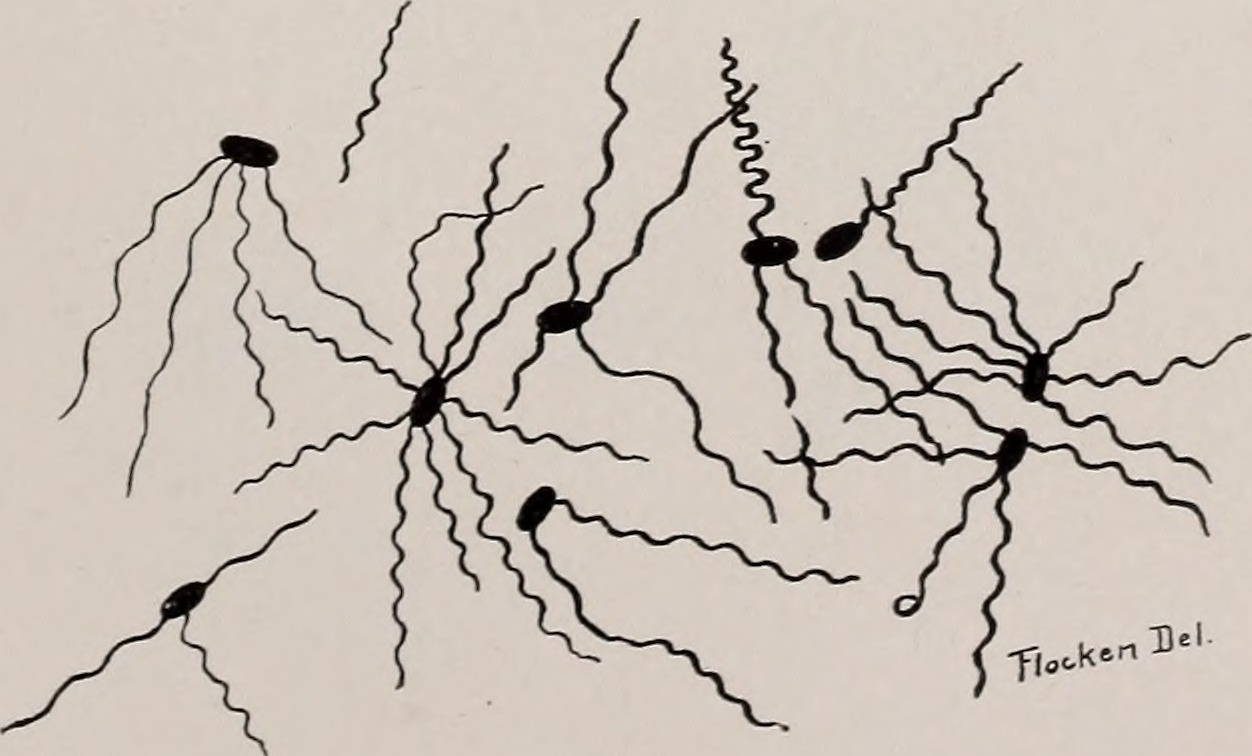

But there remains a significant gap between these networks and real brains. In most artificial networks, each node does essentially the same kind of thing, whereas everywhere we look in the brain we see diversity. There are hundreds or thousands of different kinds of neurons, with diverse morphologies, functions, patterns of connectivity, and forms of communication. Perhaps relatedly, real organisms do not solve just one highly specific task with a clear objective (e.g. face recognition); they flexibly navigate and interact with a dynamic, ever-changing world. The role of such neural diversity, and the fundamental principles of biological computation, remain a mystery, but large-scale efforts to map the activity of entire nervous systems, as well as to systematically characterize neuronal morphology and anatomical connectivity, will help pave the way.

In the shorter term, there is another way neuroscience research might influence computation, data mining, and artificial intelligence. The data neuroscientists are now collecting will begin to rival other sources of large data, not only in size but in complexity, and neuroscience will increasingly both benefit from and inform the broader data science and machine-learning communities. Our mapping analyses, for example, are similar to efforts to learn features from large satellite imagery data or geographic statistics. And insofar as our data are large collections of time series, they might resemble the time-varying user statistics on a website, or the signals from the sensors increasingly appearing on our bodies and in our homes.

Whatever the source, scientists are facing similar challenges at every level (pre-processing, distributed pipelines, algorithms for pattern discovery, visualization), and I increasingly believe our fields can, and should, start tackling these problems together.