6 practical guidelines for implementing conversational AI

How organizations can create more fluid interactions between humans and machines.

RYB color chart from George Field's 1841 "Chromatography; or, A treatise on colours and pigments: and of their powers in painting" (source: Wikimedia Commons)

It has been seven years since Apple unveiled Siri, and three since Jeff Bezos, inspired by Star Trek, introduced Alexa. But the idea of conversational interfaces powered by artificial intelligence has been around for decades. In 1966, MIT professor Joseph Weizenbaum introduced ELIZA—generally seen as the prototype for today’s conversational AI.

Decades later, in a WIRED story, Andrew Leonard proclaimed that “Bots are hot,” further speculating they would soon be able to “find me the best price on that CD, get flowers for my mom [and] keep me posted on the latest developments in Mozambique.” Only the reference to CDs reveals that the story was written in 1996.

Today, companies such as Slack, Starbucks, Mastercard, and Macy’s are piloting and using conversational interfaces for everything from customer service to controlling a connected home to, well, ordering flowers for mom. And if you doubt the value or longevity of this technology, consider that Gartner predicts that by 2019, virtual personal assistants “will have changed the way users interact with devices and become universally accepted as part of everyday life.”

Not all conversational AI is created equal, nor should it be. Conversational AI can come in the form of virtual personal (Alexa, Siri, Cortana, Google Home) or professional (such as X.ai or Skipflag) assistants. They can be built on top of a rules engine or based on machine learning technology. Use cases range from the minute and specific (Taco Bell’s TacoBot) to the general and hypothetically infinite (Alexa, Siri, Cortana, Google Home).

Organizations considering implementing conversational interfaces, whether for personal or professional uses, typically rely on partners for the technology stack, but there are plenty of considerations that extend beyond the technical. While it’s too early in the game to call them “best practices,” here are some guidelines for organizations considering piloting and/or implementing conversational AI:

1. Start with a clear and focused use case

“The focus as a product or brand,” says Amir Shevat, director of developer relations at Slack, “should not be to think, ‘I am building a bot;’ it should be to think: ‘What is the service I want to deliver?’” Beyond that, say Shevat and others, the best place to start is with a thorny problem that could conceivably be mitigated or solved with data, and a lot of it. This doesn’t mean every successful bot should do only one thing, but it’s critical to start with a narrow domain and a clear answer set, and to design for the fact that users typically don’t know what they can and cannot ask.

2. The objective determines the interaction model

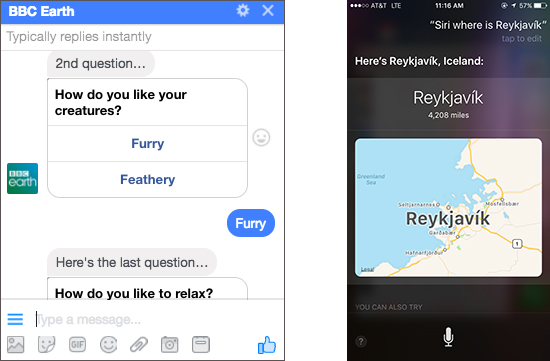

Some conversations lend themselves well to spoken interaction: for example, when you are driving a car or want to turn on the heater in your home. Others, such as asking for a bank balance, may require the privacy and/or precision of text input. But there are other ways to help users interact with bots. Figure 1 illustrates two successful interaction examples.

“Many people still have a misperception of bots just as speaking or typing,” says Chris Mullins of Microsoft. In fact, there are various ways (or modalities) that bots can use to interact with and convey information:

- Speech (Alexa, Siri, Google Home)

- Typing (bots in messaging apps)

- Keyboard support that provides cues that narrow the range of input options

- Cards that display the information visually

“In most successful scenarios,” says Mullins, “we see a mix of modalities win. At the right time, spoken language is perfect. At another time, typing may be perfect. Sometimes you want cards or keyboard support. Conversational modeling is a phenomenally hard problem, and no one has gotten it quite right yet.”

3. Multiple contexts require careful planning and clear choices

If a customer asks a retailer a question such as, “Where can I find a power drill in the store near me?” a developer must consider the context of that question based on the customer’s location. Is she physically in the store? Is she on her phone, or on her computer at home? Developers must design for multiple scenarios and experiences.

Scoping is challenging because each of these contexts may call for a different interaction model. “Interacting with humans is complicated, and conversational modeling is hard,” says Mullins. To do the best job, project teams have to make choices about which scenarios to support at the beginning of the project.

4. Sustained interactions require sustained contextual understanding

There is a difference between giving a single command, such as “Play Beyoncé’s Lemonade” or “Check my bank balance,” and engineering for a sustained interaction between a human and a chatbot. Conversations that require multiple exchanges (“turns”) between human and bot, and that depend on context to be understood, are complex and hard to engineer.

The example in Figure 2 from Kasisto illustrates the complexity of even a relatively simple payment interaction:

Turn 1:

- The user asks Kai (the chatbot) to pay Emily $5.00.

- Kai finds two people named Emily in the user’s contact list and asks which one she means.

Turn 2:

- The user then switches gears and asks how much she has in checking.

- Kai answers, and then says, “Now, where were we?” picking up from the original request to pay Emily $5.00.

At first glance, this looks like a fairly simple transaction, but from an engineering perspective, it requires quite a bit of language and contextual understanding:

- First, Kai must recognize and keep track of the user’s goal — she wants to pay someone.

- Second, Kai must identify who she wants to pay, realize that the user has two friends named Emily, and request clarification on which one is to receive the payment.

- Third, Kai must understand that the single word “Neubig” refers to the previous interaction and represents a request to pay Emily Neubig.

- Fourth, Kai must interpret “What do I have in my checking?” as a new request unrelated to the previous two interactions (out of context).

- Finally, it must answer this new request, pick up the thread, and fulfill the original request, which is to pay Emily $5.00.
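The bookkeeping in the list above can be sketched as a small stack of unfinished goals. This is a minimal illustration, not Kasisto's implementation: the keyword-based intent matching, the second contact "Emily Chen," the checking balance, and the `DialogueManager` and `PaymentGoal` names are all hypothetical. The payment goal stays on the stack while the bot answers the balance question, and a bare reply such as "Neubig" is treated as the answer to the pending clarification.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical data for illustration only; not from any real banking API.
CONTACTS = ["Emily Neubig", "Emily Chen", "Raj Patel"]
CHECKING_BALANCE = 1250.00


@dataclass
class PaymentGoal:
    """An unfinished 'pay someone' request waiting on the dialogue stack."""
    first_name: str
    amount: float


@dataclass
class DialogueManager:
    # Pending goals; the most recent one is last.
    stack: List[PaymentGoal] = field(default_factory=list)

    def handle(self, utterance: str) -> str:
        text = utterance.strip()
        # Toy keyword intent detection; a real bot would use an NLU model.
        pay = re.match(r"pay (\w+) \$([\d.]+)", text, re.IGNORECASE)
        if pay:
            goal = PaymentGoal(pay.group(1).capitalize(), float(pay.group(2)))
            self.stack.append(goal)
            return self._advance(goal)
        if "checking" in text.lower():
            # Digression: answer it, then resume the pending goal if any.
            reply = f"You have ${CHECKING_BALANCE:,.2f} in checking."
            if self.stack:
                reply += " Now, where were we? " + self._advance(self.stack[-1])
            return reply
        if self.stack:
            # A bare reply such as "Neubig" answers the open clarification.
            return self._advance(self.stack[-1], answer=text)
        return "Sorry, I didn't understand that."

    def _advance(self, goal: PaymentGoal, answer: Optional[str] = None) -> str:
        """Try to complete the goal on top of the stack."""
        candidates = [c for c in CONTACTS if c.split()[0] == goal.first_name]
        if answer:
            narrowed = [c for c in candidates if answer.lower() in c.lower()]
            candidates = narrowed or candidates  # unrecognized answer: ask again
        if not candidates:
            self.stack.pop()  # nobody to pay; drop the goal
            return f"I couldn't find {goal.first_name} in your contacts."
        if len(candidates) == 1:
            self.stack.pop()  # goal fulfilled
            return f"Paid {candidates[0]} ${goal.amount:.2f}."
        return f"Which {goal.first_name}: " + " or ".join(candidates) + "?"


bot = DialogueManager()
print(bot.handle("Pay Emily $5.00"))
# Which Emily: Emily Neubig or Emily Chen?
print(bot.handle("What do I have in my checking?"))
# You have $1,250.00 in checking. Now, where were we? Which Emily: Emily Neubig or Emily Chen?
print(bot.handle("Neubig"))
# Paid Emily Neubig $5.00.
```

A stack is one simple way to let the most recent unfinished goal take priority while older ones wait; a production system would typically replace the regular expression and substring checks with a trained intent classifier and slot filler.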

This conversation demonstrates why clear purpose, a narrow answer set, and deep domain expertise are important in chatbot development — because understanding the user’s intent when it is expressed naturally is highly complex and critical to delivering an effective experience.

5. EQ is as important as IQ

Intelligence and clear intention aren’t the only attributes required for successful bots. Detecting emotion, expressed in word choice and tone, is also critical to ensure that conversational experiences are satisfying for users. As a result, many research labs and startups are working on software intended to detect emotional states, whether via images, speech, text, or video.

SRI International’s Speech Technology and Research (STAR) laboratory has developed SenSay Analytics, a platform that claims to sense speaker emotions from audio signals. This is key to understanding when a user is frustrated and may need to be escalated to a human agent, or is in a receptive frame of mind to hear about relevant offers.
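The escalation decision described above can be sketched as a simple routing rule. SenSay's actual interface is not described here, so the `EmotionReading` type, its 0-to-1 `frustration` and `engagement` scores, and the threshold values are hypothetical placeholders for whatever an emotion-detection service actually returns.

```python
from dataclasses import dataclass

# Hypothetical thresholds; in practice these would be tuned on real conversations.
ESCALATE_AT_FRUSTRATION = 0.7
OFFER_MAX_FRUSTRATION = 0.2
OFFER_MIN_ENGAGEMENT = 0.6


@dataclass
class EmotionReading:
    """Scores from a hypothetical emotion detector (not SenSay's actual API)."""
    frustration: float  # 0.0 = calm, 1.0 = very frustrated
    engagement: float   # 0.0 = disengaged, 1.0 = highly engaged


def next_action(reading: EmotionReading, task_done: bool) -> str:
    """Choose what the bot should do after the current turn."""
    # A clearly frustrated user goes to a human before anything else.
    if reading.frustration >= ESCALATE_AT_FRUSTRATION:
        return "escalate_to_human"
    # Only a calm, engaged user whose request is complete hears an offer.
    if (task_done
            and reading.frustration <= OFFER_MAX_FRUSTRATION
            and reading.engagement >= OFFER_MIN_ENGAGEMENT):
        return "offer_relevant_promotion"
    return "continue_conversation"


print(next_action(EmotionReading(frustration=0.85, engagement=0.4), task_done=False))
# escalate_to_human
print(next_action(EmotionReading(frustration=0.1, engagement=0.8), task_done=True))
# offer_relevant_promotion
```

Checking for escalation first means a frustrated user is never shown an offer, whatever the other scores say.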

6. Branding opportunities are tiny but potent

Branding is a critical aspect of the bot’s (and brand’s) success. A poorly executed bot can damage reputation, while a strong brand presence can support the success of the bot. “I would argue that the branding opportunity you have in a conversational interface is relatively small,” says Lars Trieloff of Adobe. “So, leverage the brand in the daily interaction. Make sure it can do one thing really well and any way the customer likes to invoke it.”

We are in the very earliest stages of using conversational interfaces in a meaningful way, and there is still a long way to go. If you’ve ever spent any time revisiting some of the earliest websites, you have an indication of where we are now.

But conversational AI—the ability for machines to interact with us in more humanistic ways—is here to stay. It may look primitive today, but advances in data science, natural language technology, machine learning, and other disciplines have finally created the necessary conditions for a shift from form-based to more fluid communications between humans and machines.

Will conversational interactions ever be equal to or better than human ones? Some types of conversations will never lend themselves easily to machine interactions. But for some uses, they probably will; we’ve already seen massive innovation, and we’ve barely scratched the surface. One thing is clear, says futurist and creative strategist Monika Bielskyte: “We are entering a future without screens. In the future,” she predicts, “the world will be our desktop.”