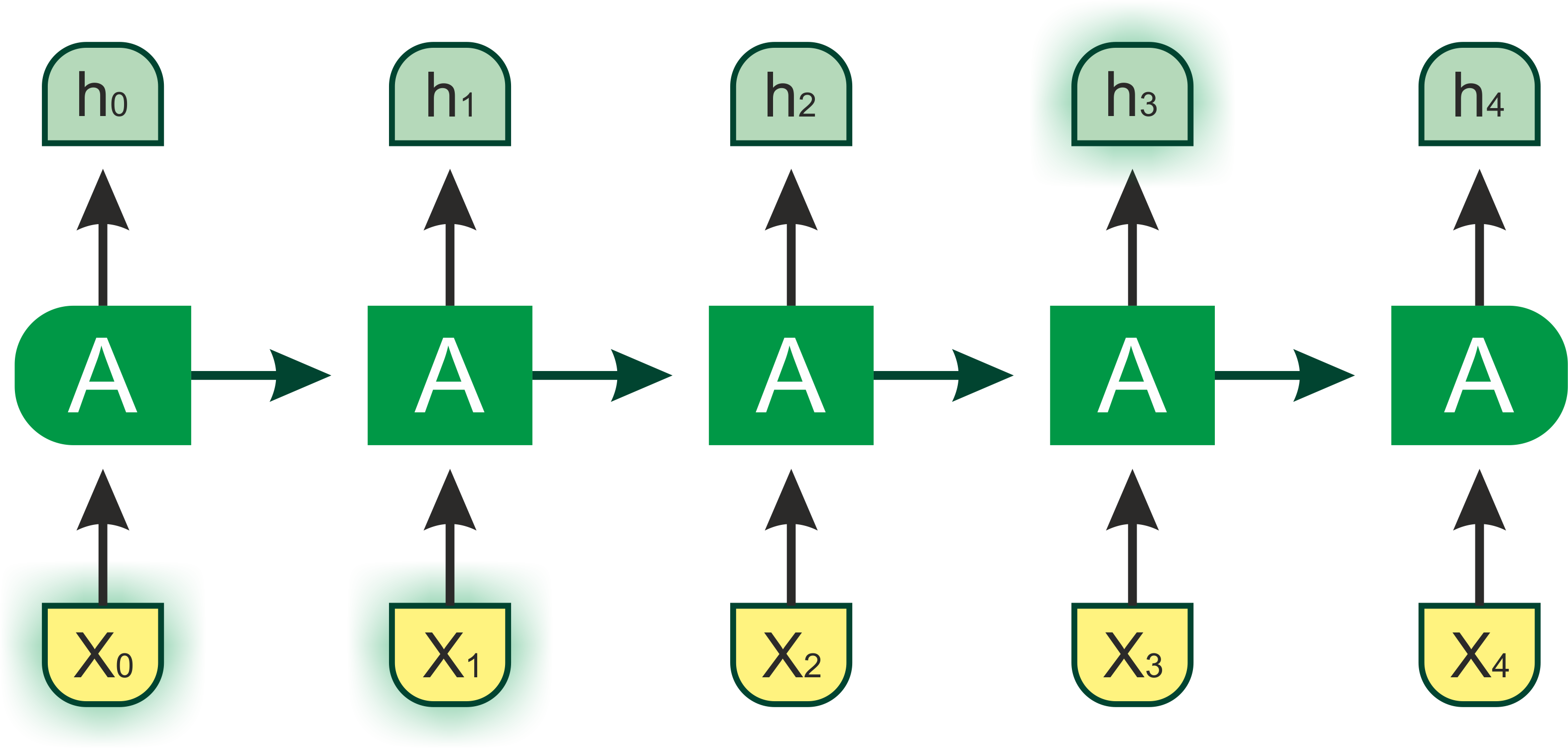

RNNs are powerful and widely used. Often, however, we only need to look at recent information to perform the present task, rather than information that was stored a long time ago. This situation is common in NLP tasks such as language modeling. Let's look at a common example:

Suppose a language model is trying to predict the next word based on the previous words. As humans, if we try to predict the last word in "the sky is blue", then even without further context, the most likely next word ...
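To make the short-range idea concrete, here is a minimal sketch. It is not an RNN but a simpler, count-based trigram model, swapped in purely for illustration: it predicts the next word from only the two most recent words. The tiny corpus and the helper names `train_trigrams` and `predict_next` are hypothetical. For a phrase like "the sky is", the two-word context "sky is" alone is enough to predict "blue".

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, for illustration only.
CORPUS = [
    "the sky is blue",
    "the sky is blue today",
    "the grass is green",
    "in the morning the sky is blue",
]


def train_trigrams(sentences):
    """Count which word follows each pair of consecutive words."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for w1, w2, w3 in zip(words, words[1:], words[2:]):
            counts[(w1, w2)][w3] += 1
    return counts


def predict_next(counts, prefix):
    """Predict the next word using only the last two words of the prefix."""
    words = prefix.split()
    if len(words) < 2:
        return None  # not enough recent context
    context = (words[-2], words[-1])
    followers = counts.get(context)  # .get() does not insert a new key
    if not followers:
        return None  # context never seen in the corpus
    # most_common(1) returns [(word, count)] for the most frequent follower.
    return followers.most_common(1)[0][0]


model = train_trigrams(CORPUS)
print(predict_next(model, "the sky is"))                    # blue
print(predict_next(model, "yesterday at noon the sky is"))  # blue
```

Because only the last two words are consulted, everything earlier in the prefix is ignored, and the prediction does not change. This works here precisely because the relevant information sits right next to the word being predicted.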