As with the Scala interactive shell, an interactive shell is also available for Python. You can start it from the Spark root folder and execute Python code as follows:

$ cd $SPARK_HOME

$ ./bin/pyspark

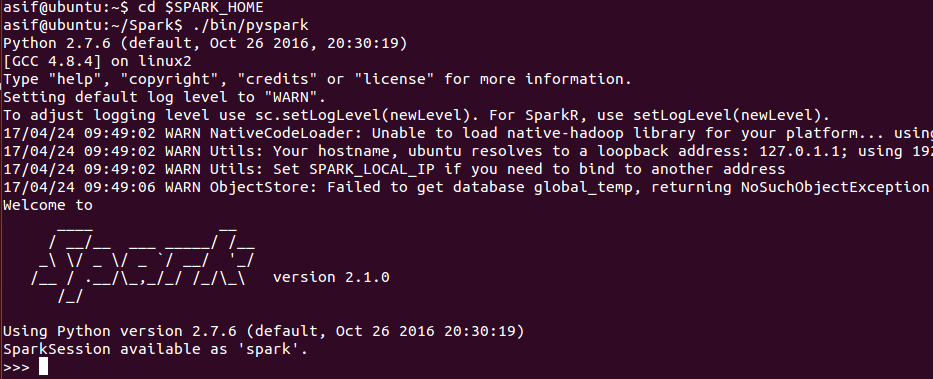

If the command went fine, you should observer the following screen on Terminal (Ubuntu):

Now you can use Spark from the Python interactive shell. The shell may be sufficient for experimentation and development. For production use, however, you should write a standalone application.
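To make the idea of a standalone application concrete, here is a minimal sketch of what such a script might look like. The file name `wordcount_app.py`, the application name, the helper `tokenize`, and the sample sentences are all illustrative assumptions, not part of the original text. The sketch also assumes a Spark version that provides `SparkSession`.

```python
# wordcount_app.py: hypothetical file name for an illustrative standalone script
from operator import add


def tokenize(line):
    """Split a line of text into lowercase words (plain Python, no Spark needed)."""
    return line.lower().split()


def main():
    # Import inside main() so a missing PySpark installation gives a clear hint.
    try:
        from pyspark.sql import SparkSession
    except ImportError:
        print("PySpark was not found on the Python path; check the installation.")
        return

    # Unlike the interactive shell, a standalone script creates its own session.
    spark = SparkSession.builder.appName("WordCountSketch").getOrCreate()
    sc = spark.sparkContext

    # Small in-memory sample data (an assumption; a real job would read files).
    lines = sc.parallelize(["Spark is fast", "PySpark is Spark for Python"])

    # Classic word count: split into words, pair each with 1, sum per word.
    counts = lines.flatMap(tokenize).map(lambda word: (word, 1)).reduceByKey(add)

    for word, count in sorted(counts.collect()):
        print(word, count)

    # Release the resources held by the session.
    spark.stop()


if __name__ == "__main__":
    main()
```

The main difference from the shell session is that the script has to build and stop its own `SparkSession`, whereas the interactive shell provides one ready-made. Keeping `tokenize` as a plain function also makes it possible to check that part of the logic without starting Spark.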

By now, PySpark should be available on the system path. After writing the Python code, you can simply run it using ...