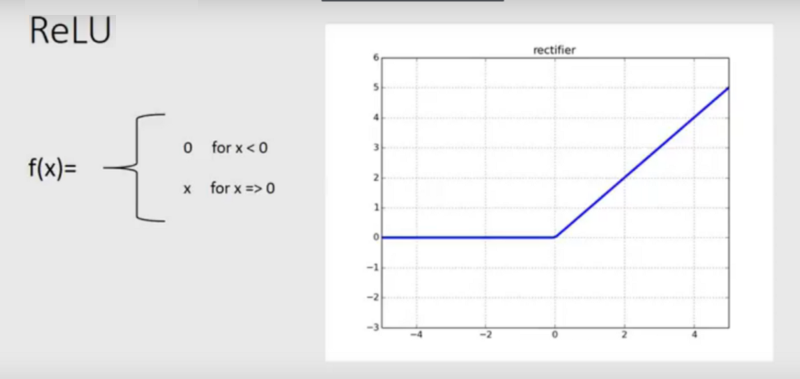

The Rectified Linear Unit (ReLU) is the most popular activation function in the industry. See its equation in Figure 9.35:

Looking at the ReLU equation, you can see that it is simply max(0, x): the output is zero when x is less than zero, and it is linear with a slope of 1 when x is greater than or equal to zero. Krizhevsky and colleagues, in their paper on image classification, reported that using ReLU as the activation function made their network converge about six times faster than the same network with tanh units. ...
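The max(0, x) definition takes only a couple of lines of code. The sketch below is a minimal pure-Python illustration; the function names `relu` and `relu_grad` are hypothetical. The derivative is included because its constant slope of 1 for positive inputs is one reason ReLU trains quickly. The derivative is undefined at exactly x = 0, so the choice of 0 there is an assumption (a common convention), not part of the definition.

```python
def relu(x: float) -> float:
    """Rectified Linear Unit: returns max(0, x)."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for positive inputs, 0 for negative inputs.

    Assumption: the derivative is undefined at x == 0; this sketch
    returns 0 there, which is a common convention in practice.
    """
    return 1.0 if x > 0 else 0.0


inputs = [-2.0, -0.5, 0.0, 0.5, 3.0]
outputs = [relu(v) for v in inputs]
grads = [relu_grad(v) for v in inputs]
print(outputs)  # [0.0, 0.0, 0.0, 0.5, 3.0]
print(grads)    # [0.0, 0.0, 0.0, 1.0, 1.0]
```

Negative inputs are clipped to zero, while positive inputs pass through unchanged with a gradient of exactly 1. Unlike sigmoid or tanh, ReLU does not saturate for large positive values.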