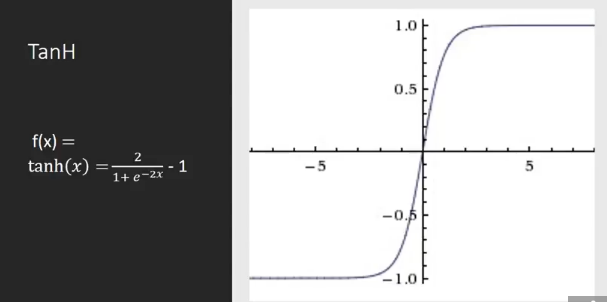

To overcome the problems of the sigmoid function, we introduce another activation function, the hyperbolic tangent (TanH). The equation of TanH is given in Figure 9.34:
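For readers who do not have the figure at hand, the standard textbook definition of the hyperbolic tangent is reproduced here. The symbol $x$ for the input is an assumption and may differ from the notation used in the figure:

$$
\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}
$$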

This function squashes its input into the range -1 to 1, so its output is zero-centered, which makes optimization easier. However, TanH also suffers from the vanishing gradient problem, because its gradient approaches zero for inputs of large magnitude, so we need to look at other activation functions.
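The following is a minimal sketch, not code from this chapter, that illustrates both properties: the bounded, zero-centered output and the shrinking gradient. The function names `tanh` and `tanh_grad` are hypothetical helpers chosen for illustration, and the sketch relies only on Python's standard `math` module.

```python
import math


def tanh(x):
    """Hyperbolic tangent, (e^x - e^-x) / (e^x + e^-x), via the standard library."""
    return math.tanh(x)


def tanh_grad(x):
    """Derivative of tanh with respect to x: 1 - tanh(x)^2."""
    t = math.tanh(x)
    return 1.0 - t * t


if __name__ == "__main__":
    # Outputs stay between -1 and 1 and are symmetric around zero,
    # while the gradient shrinks toward zero as |x| grows.
    for x in (-10.0, -2.0, 0.0, 2.0, 10.0):
        print(f"x={x:6.1f}  tanh={tanh(x): .6f}  grad={tanh_grad(x):.2e}")
```

Running the loop shows the gradient at its largest when the input is zero and very close to zero once the input moves far from the origin, which is the saturation behavior behind the vanishing gradient problem.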