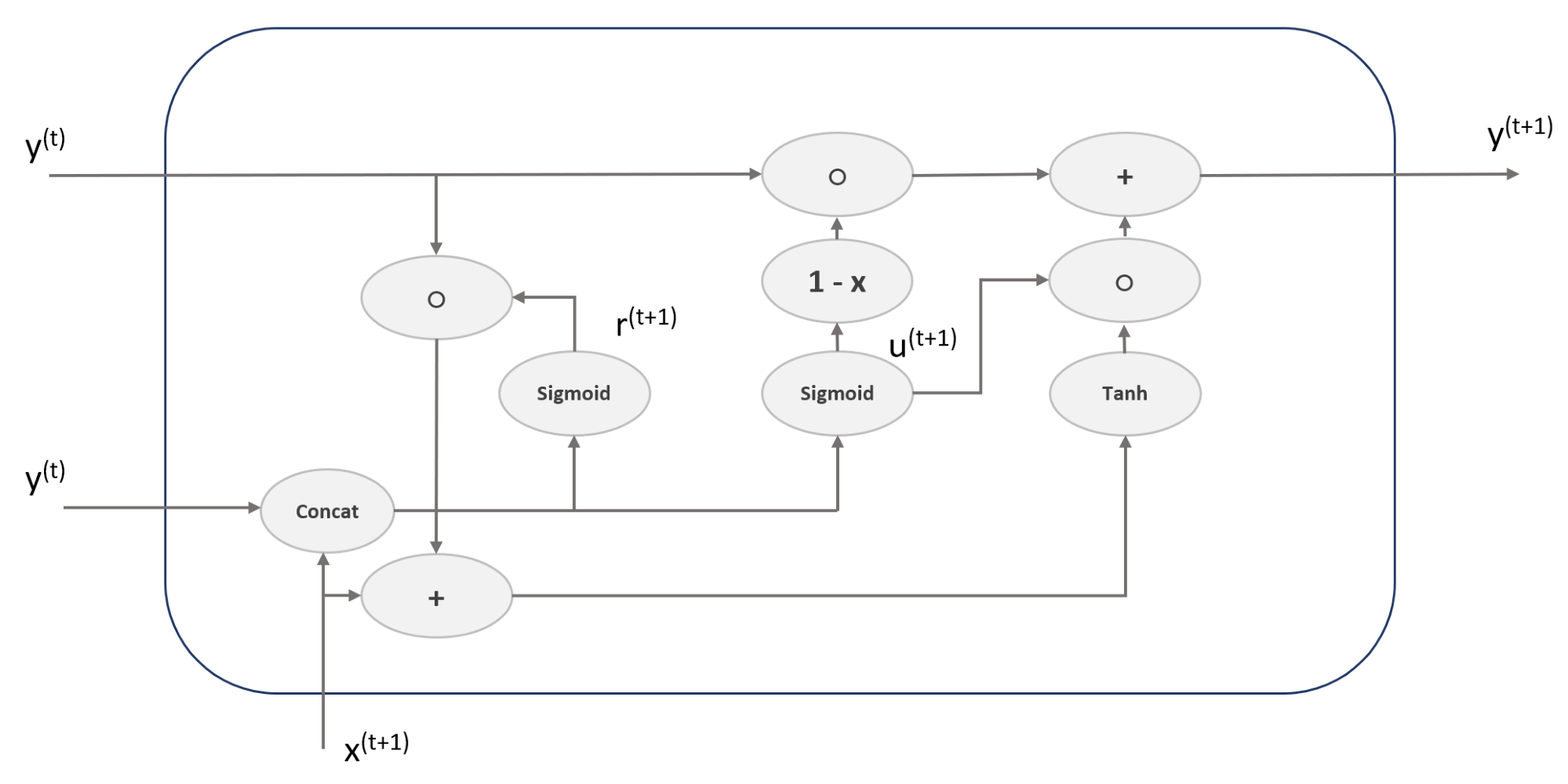

This model, named gated recurrent unit (GRU) and proposed by Cho et al. (in Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation, Cho K., Van Merrienboer B., Gulcehre C., Bahdanau D., Bougares F., Schwenk H., Bengio Y., arXiv:1406.1078 [cs.CL]), can be considered a simplified LSTM with a few variations. The structure of a generic fully gated unit is represented in the following diagram:

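The main differences from an LSTM are the presence of only two gates (a reset gate and an update gate) and the absence of an explicit cell state: the unit carries a single hidden vector that serves as both memory and output. These simplifications can speed up both the training and the prediction phases while avoiding the vanishing gradient ...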
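To make the two-gate structure concrete, here is a minimal sketch of a single GRU time step in plain Python. It assumes the formulation given in the paper by Cho et al. (no bias terms, and a new state computed as `z * h_prev + (1 - z) * h_cand`); other references and libraries swap the roles of `z` and `1 - z`, so the diagram may follow a different convention. The names `gru_step`, `init_params`, and the weight keys `W_r`, `U_r`, `W_z`, `U_z`, `W_h`, `U_h` are hypothetical and chosen only for this illustration.

```python
import math
import random


def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * a for w, a in zip(row, v)) for row in m]


def add(u, v):
    """Element-wise sum of two vectors."""
    return [a + b for a, b in zip(u, v)]


def sigmoid(v):
    """Element-wise logistic function."""
    return [1.0 / (1.0 + math.exp(-a)) for a in v]


def gru_step(x, h_prev, p):
    """One GRU step: returns the new hidden vector (there is no separate cell state)."""
    # Reset gate: how much of the previous state feeds the candidate.
    r = sigmoid(add(matvec(p["W_r"], x), matvec(p["U_r"], h_prev)))
    # Update gate: how much of the previous state is carried over unchanged.
    z = sigmoid(add(matvec(p["W_z"], x), matvec(p["U_z"], h_prev)))
    # Candidate state, computed from the input and the reset-scaled previous state.
    reset_h = [ri * hi for ri, hi in zip(r, h_prev)]
    pre = add(matvec(p["W_h"], x), matvec(p["U_h"], reset_h))
    h_cand = [math.tanh(a) for a in pre]
    # Convex combination of the old state and the candidate (assumed convention).
    return [zi * hp + (1.0 - zi) * hc for zi, hp, hc in zip(z, h_prev, h_cand)]


def init_params(n_in, n_hidden, seed=0):
    """Small random weights for the illustration (hypothetical initialization)."""
    rng = random.Random(seed)

    def mat(rows, cols):
        return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

    params = {}
    for gate in ("r", "z", "h"):
        params["W_" + gate] = mat(n_hidden, n_in)      # input weights
        params["U_" + gate] = mat(n_hidden, n_hidden)  # recurrent weights
    return params


# Run a two-step toy sequence through the unit, starting from a zero state.
params = init_params(n_in=3, n_hidden=4)
h = [0.0] * 4
for x in ([1.0, 0.0, -1.0], [0.5, 0.5, 0.5]):
    h = gru_step(x, h, params)
```

Because the new state is a gated blend of the previous state and a `tanh` candidate, each component of `h` stays within (-1, 1) when the initial state does. The sketch uses three pairs of weight matrices, where an LSTM layer of the same size needs four, which is one way to see where the reduced cost comes from.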