Chapter 6. Setting Up in AWS

In this chapter you’ll create a simple Hadoop cluster running in Elastic Compute Cloud (EC2), the service in Amazon Web Services (AWS) that lets you provision virtual servers, called instances. The cluster will consist of basic installations of HDFS and YARN, which form the foundation for running MapReduce and other analytic workloads.

This chapter assumes you are using a Unix-like operating system on your local computer, such as Linux or macOS. If you are using Windows, some of the steps will vary, particularly those for working with SSH.

Prerequisites

Before you start, you need an AWS account. You can register for one for free.

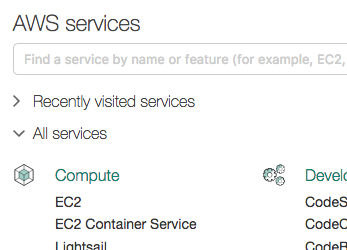

Once you are registered, you will be able to log in to the AWS console, a web interface for using all of the different services under the AWS umbrella. When you log in, you are presented with a dashboard for all of those services. It can be overwhelming, but fortunately, for this chapter you only need to use one service: EC2. Find it on the home page in the console, as shown in Figure 6-1.

Caution

Amazon updates the console layout over time, so the exact instructions here may become inaccurate.

Figure 6-1. EC2 on the AWS console home page

Notice at the top of the page a drop-down menu, as shown in Figure 6-2, with a geographic area selected, such as N. Virginia, N. California, or ...
