This is the final conceptual topic of the chapter. So far, we've covered types of neurons, cost functions, gradient descent, and a mechanism for applying gradient descent across the whole network so that it can learn over repeated iterations.

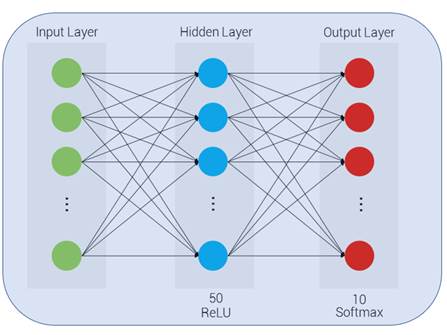

Previously, we saw the input layer and dense or hidden layers of an ANN:

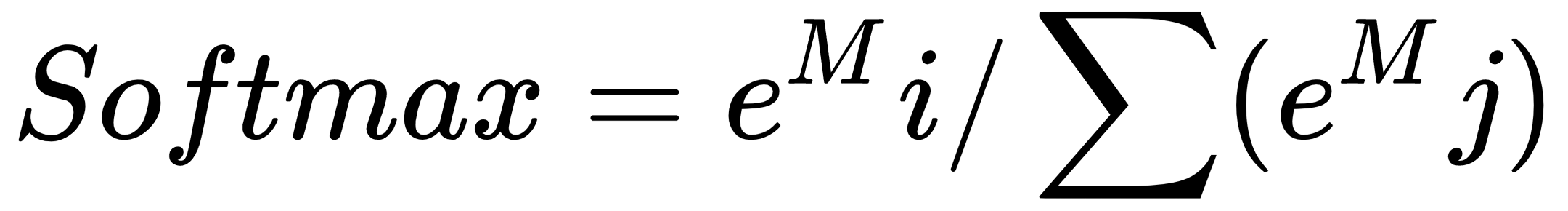

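Softmax is a special kind of neuron that's used in the output layer. It converts the layer's raw scores into a probability for each respective output, so every value lies between 0 and 1 and all of them together sum to 1.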
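As a rough illustration of that idea, here is a minimal sketch of softmax in plain Python. It is not the chapter's own code: the function name `softmax` and the example scores in `raw_scores` are assumptions made up for this sketch. Each score is exponentiated and then divided by the sum of all the exponentials.

```python
import math


def softmax(scores):
    """Turn a list of raw scores into probabilities that sum to 1."""
    # Subtracting the largest score does not change the result,
    # but it keeps math.exp from overflowing on large inputs.
    shift = max(scores)
    exps = [math.exp(s - shift) for s in scores]
    total = sum(exps)
    # Each probability is that score's share of the total.
    return [e / total for e in exps]


# Hypothetical raw outputs of a three-neuron output layer (illustrative only).
raw_scores = [2.0, 1.0, 0.1]
probabilities = softmax(raw_scores)

print(probabilities)       # one probability per output neuron
print(sum(probabilities))  # approximately 1, up to floating-point rounding
```

The largest raw score always receives the largest probability, and the ordering of the scores is preserved, so the predicted class is simply the output with the highest value.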

To understand the softmax equation and its concepts, we will be using some code. Like before, ...