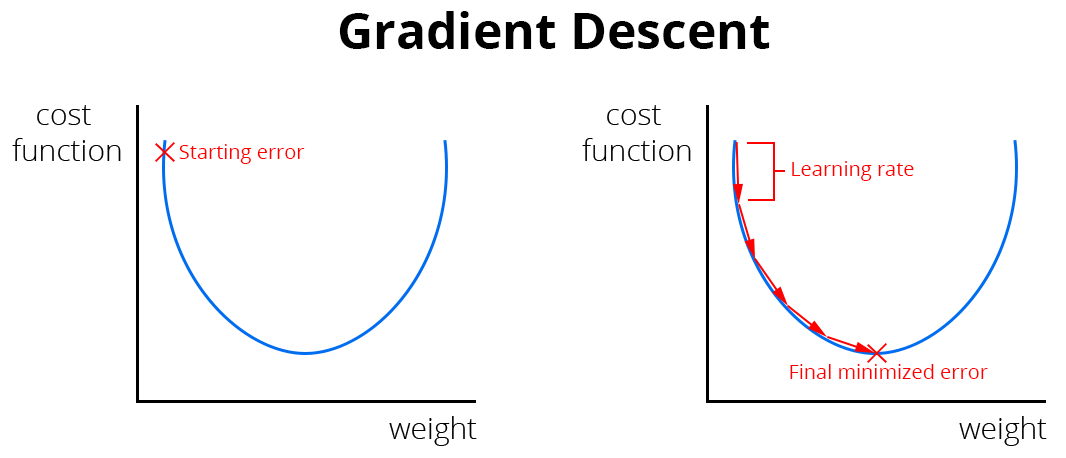

Now that we can make predictions from a given set of parameters and measure how far off those predictions are with the cost function, the next step is to improve them by reducing that error. How do we reduce the error reported by the cost function? By adjusting the weight and bias of our prediction function with an optimization algorithm. Here we'll use gradient descent, which repeatedly nudges the parameters in the direction that lowers the error, so the error shrinks step by step. Here's a graph that illustrates how it works:

We start with a high error caused by random weight and bias values, then we reduce the error by optimizing those parameters until we reach ...
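The loop described above can be sketched in a few lines of plain Python. This is a minimal sketch under assumptions not stated in this section. It assumes a single-feature prediction function `weight * x + bias` and mean squared error as the cost function. The names `predict`, `cost`, and `gradient_descent`, the learning rate, and the number of epochs are illustrative choices. If the prediction or cost function defined earlier differs, the gradient formulas must change to match. Each step applies the usual update rule `parameter = parameter - learning_rate * gradient`.

```python
import random


def predict(x, weight, bias):
    """Assumed prediction function: a single-feature linear model."""
    return weight * x + bias


def cost(xs, ys, weight, bias):
    """Assumed cost function: mean squared error between predictions and targets."""
    n = len(xs)
    return sum((predict(x, weight, bias) - y) ** 2 for x, y in zip(xs, ys)) / n


def gradient_descent(xs, ys, learning_rate=0.05, epochs=1000, seed=0):
    """Fit weight and bias by repeatedly stepping against the gradient of the MSE."""
    rng = random.Random(seed)
    # Start from random parameters, which typically give a high initial error.
    weight = rng.uniform(-1.0, 1.0)
    bias = rng.uniform(-1.0, 1.0)
    n = len(xs)
    history = []  # cost recorded before each update
    for _ in range(epochs):
        history.append(cost(xs, ys, weight, bias))
        # Partial derivatives of the MSE with respect to weight and bias.
        errors = [predict(x, weight, bias) - y for x, y in zip(xs, ys)]
        d_weight = (2.0 / n) * sum(e * x for e, x in zip(errors, xs))
        d_bias = (2.0 / n) * sum(errors)
        # Move each parameter a small step in the direction that lowers the cost.
        weight -= learning_rate * d_weight
        bias -= learning_rate * d_bias
    return weight, bias, history


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [2.0 * x + 1.0 for x in xs]  # illustrative noiseless data from y = 2x + 1
    w, b, history = gradient_descent(xs, ys)
    print(f"weight={w:.3f} bias={b:.3f}")
    print(f"cost: first={history[0]:.3f} last={history[-1]:.6f}")
```

With the small learning rate used here, the recorded cost drops on every iteration and the parameters settle near the line that generated the data. A much larger learning rate can make the updates overshoot the minimum, so the cost grows instead of shrinking.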