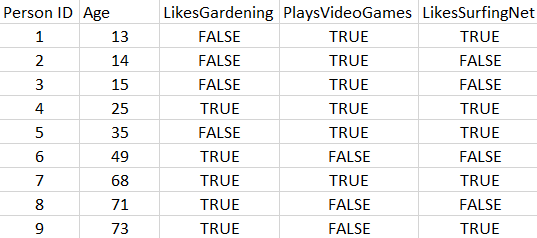

To explain gradient boosting, we will follow the approach of Ben Gorman, a data scientist whose explanation is mathematical yet simple. Suppose we have nine training examples and need to predict a person's age from three features: whether they like gardening, whether they play video games, and whether they like surfing the internet. The data for this is as follows:

When building this model, our objective is to minimize the mean squared error (MSE) between the predicted and actual ages.
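As a reminder, the MSE is the average of the squared differences between the actual and predicted values. One consequence matters for the regression tree used next: if a group of samples must share a single constant prediction, as the samples in one leaf of a tree do, the constant that minimizes MSE is their mean. The following is a minimal Python sketch assuming plain lists of numbers. The helper names `mse` and `best_constant` are hypothetical, and the ages are made-up values for illustration, not the nine training examples from the text.

```python
from statistics import mean


def mse(y_true, y_pred):
    """Mean squared error: the average of (actual - predicted) squared."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def best_constant(y_true):
    """The single constant prediction that minimizes MSE is the mean of the targets."""
    return mean(y_true)


# Hypothetical ages, for illustration only (not the data from the text).
ages = [13, 25, 35]
c = best_constant(ages)

# Shifting the constant away from the mean by s adds exactly s**2 to the MSE,
# so shift 0 (predicting the mean) gives the smallest value.
for shift in (-1, 0, 1):
    print(shift, mse(ages, [c + shift] * len(ages)))
```

This is why a regression tree fitted to minimize MSE predicts, in each leaf, the mean target of the training samples that fall into that leaf.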

Now we will build the model with a regression tree. To start with, if we require at least three samples in each terminal (leaf) node, the first split of ...