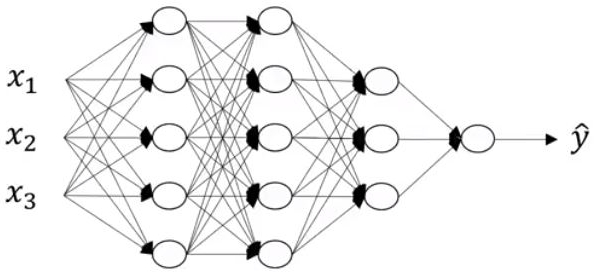

The explanation of the notation is as follows:

- l: Layer index; the network has 4 layers, not counting the input layer (layer 0)

- n[l]: Number of nodes in layer l

For the architecture shown above, the layer sizes are as follows:

- n[0]: Number of nodes in input layer, that is, 3

- n[1]: 5

- n[2]: 5

- n[3]: 3

- n[4]: 1

- a[l]: Activations in layer l

As we already know, each layer computes the following linear combination of its inputs:

$$z = w^{T}X + b$$

Applying the activation function σ to z then gives the layer's output:

- Activation: a = σ(z)

- w[l]: Weight in layer l

- b[l]: Bias in layer l
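The notation above can be made concrete with a short NumPy sketch. It is only an illustration under stated assumptions: σ is taken to be the sigmoid in every layer, the weights are small random values, and each w[l] is stored with shape (n[l-1], n[l]) so that the transpose in z = wᵀX + b works out. The names `layer_sizes`, `init_params`, and `forward` are hypothetical helpers, not part of the original text.

```python
import numpy as np

# Layer sizes n[0]..n[4] for the architecture described above.
layer_sizes = [3, 5, 5, 3, 1]
num_layers = len(layer_sizes) - 1  # 4 layers; the input layer is not counted


def sigmoid(z):
    """Assumed activation function sigma(z) = 1 / (1 + exp(-z))."""
    return 1.0 / (1.0 + np.exp(-z))


def init_params(sizes, seed=0):
    """Create w[l] with shape (n[l-1], n[l]) and b[l] with shape (n[l], 1)."""
    rng = np.random.default_rng(seed)
    params = {}
    for l in range(1, len(sizes)):
        params[f"w{l}"] = rng.standard_normal((sizes[l - 1], sizes[l])) * 0.01
        params[f"b{l}"] = np.zeros((sizes[l], 1))
    return params


def forward(x, params, n_layers):
    """Return the list of activations a[0]..a[n_layers] for one input column x."""
    a = x
    activations = [a]  # a[0] is the input itself
    for l in range(1, n_layers + 1):
        z = params[f"w{l}"].T @ a + params[f"b{l}"]  # z = w^T a + b
        a = sigmoid(z)                               # a = sigma(z)
        activations.append(a)
    return activations


params = init_params(layer_sizes)
x = np.ones((layer_sizes[0], 1))  # a single example with n[0] = 3 features
activations = forward(x, params, num_layers)

for l, a in enumerate(activations):
    print(f"a[{l}] shape: {a.shape}")
```

Each a[l] comes out as a column with n[l] entries, so the printed shapes follow the layer sizes 3, 5, 5, 3, 1.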