Simple RNN

Another way to make order matter in a neural network is to give the network some kind of memory. So far, each of our networks has performed its forward pass with no memory of what came before or after that pass. It's time to change that with a recurrent neural network (RNN):

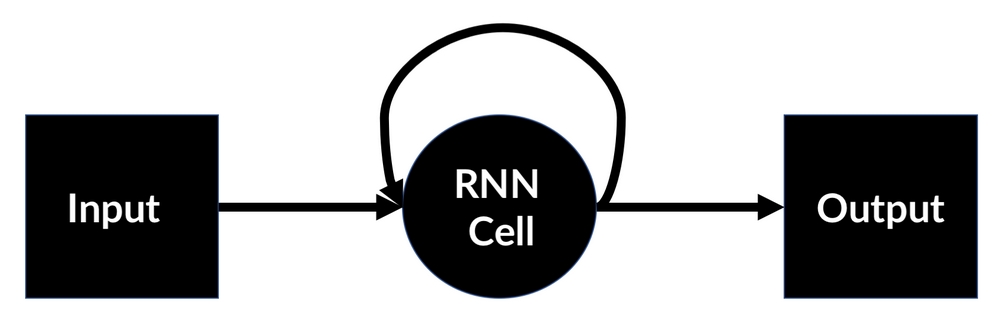

The scheme of an RNN

RNNs contain recurrent layers. Recurrent layers can remember their last activation and use it as their own input:

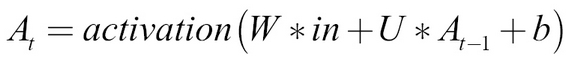

A recurrent layer takes a sequence as an input. For each element, it then computes a matrix multiplication (W * in), just like a ...
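The idea of a layer that feeds its last activation back into itself can be sketched in a few lines of plain Python. This is a minimal illustration, not the book's implementation: the function name `simple_rnn_forward`, the recurrent weight matrix `U`, the bias `b`, the `tanh` activation, and the all-zero starting state are assumptions made for the example. Only the `W * in` product and the reuse of the previous activation come from the text.

```python
import math


def simple_rnn_forward(sequence, W, U, b):
    """Run one recurrent layer over a sequence (illustrative sketch).

    sequence: list of input vectors, each a list of floats
    W: input weights, shape [units][input_dim]
    U: recurrent weights, shape [units][units] (assumed name)
    b: bias vector of length units (assumed)
    Returns one activation vector per sequence element.
    """
    units = len(b)
    state = [0.0] * units  # assumption: the layer starts with an empty memory
    outputs = []
    for x in sequence:
        new_state = []
        for i in range(units):
            total = b[i]
            # W * in: the usual weighted sum over the current input
            total += sum(W[i][j] * x[j] for j in range(len(x)))
            # the layer's previous activation, fed back in as extra input
            total += sum(U[i][k] * state[k] for k in range(units))
            new_state.append(math.tanh(total))  # assumption: tanh activation
        state = new_state
        outputs.append(state)
    return outputs


# One unit, one input feature; the second input is zero on purpose.
activations = simple_rnn_forward(
    sequence=[[1.0], [0.0]],
    W=[[1.0]],
    U=[[1.0]],
    b=[0.0],
)
```

In this toy run the second activation is non-zero even though the second input is zero, because the layer still carries the activation from the first element. Setting `U` to `[[0.0]]` removes that memory and the layer behaves like an ordinary dense layer applied to each element separately.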
