Chapter 1. LLM Fundamentals with LangChain

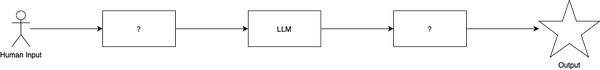

The preface gave you a taste of the power of LLM prompting, where we saw firsthand the impact each prompting technique can have on what you get out of LLMs, especially when techniques are judiciously combined. The challenge in building good LLM applications lies in how to effectively construct the prompt sent to the model and how to process the model's prediction to return an accurate output (Figure 1-1).

Figure 1-1. The challenge in making LLMs a useful part of your application.

If you can solve this problem, you are well on your way to building LLM applications, simple and complex alike. In this chapter, you'll learn more about how LangChain's building blocks map to LLM concepts and how, when combined effectively, they enable you to build LLM applications. But first, a brief primer on why we think it is useful to use LangChain to build LLM applications.

Getting Set Up with LangChain

To follow along with the rest of the chapter, and the chapters to come, we recommend setting up LangChain on your computer first.

First, follow the instructions in the preface for setting up an OpenAI account, if you haven't done so already. If you prefer using a different LLM provider, see the nearby sidebar for alternatives.

Then head over to the API Keys page on the OpenAI website (after logging in to your account), create an API key, and save it. You'll need it soon.

Note

In this book we'll show code examples in both Python and JavaScript. LangChain offers the same functionality in both languages, and the examples for each language are equivalent, so just pick whichever language you're most comfortable with and follow the corresponding code snippets throughout the book.

First, some setup instructions for readers using Python:

- Ensure you have Python installed. See instructions here for your operating system.

- Install Jupyter if you want to run the examples in a notebook environment. You can do this by running the following command in your terminal:

pip install notebook

- Install the LangChain libraries by running the following command in your terminal:

pip install langchain langchain_openai langchain_community langchain-text-splitters langchain-postgres

- Take the OpenAI API key you generated at the beginning of this section and make it available in your terminal environment. You can do this by running the following:

export OPENAI_API_KEY=your-key

Don't forget to replace your-key with the API key you generated previously.

- Open a Jupyter notebook by running this command:

jupyter notebook

You're now ready to follow along with the Python code examples.

And now some instructions for readers using JavaScript:

- Take the OpenAI API key you generated at the beginning of this section and make it available in your terminal environment. You can do this by running the following:

export OPENAI_API_KEY=your-key

Don't forget to replace your-key with the API key you generated previously.

- If you want to run the examples as Node.js scripts, install Node following instructions here.

- Install the LangChain libraries by running the following command in your terminal:

npm install langchain @langchain/core @langchain/openai @langchain/community pg zod

- Take each example, save it as a .mjs file (the examples use ES module imports and top-level await), and run it with node ./file.mjs.

Using LLMs in LangChain

To recap, LLMs are the driving engine behind most generative AI applications. LangChain provides two simple interfaces for interacting with any LLM API provider:

- LLMs

- Chat models

Let’s start with the first one. The LLMs interface simply takes a string prompt as input, sends the input to the model provider, and then returns the model prediction as output.

Let’s import LangChain’s OpenAI LLM wrapper to invoke a model prediction using a simple prompt. First in Python:

from langchain_openai.llms import OpenAI

model = OpenAI(model='gpt-3.5-turbo-instruct')

prompt = 'The sky is'

completion = model.invoke(prompt)

completion

And now in JS:

import {OpenAI} from '@langchain/openai'

const model = new OpenAI({model: 'gpt-3.5-turbo-instruct'})

const prompt = 'The sky is'

const completion = await model.invoke(prompt)

console.log(completion)

And the output:

Blue!

Tip

Notice the model parameter passed to OpenAI. It selects the underlying model to use, and it is the most common parameter to configure when using an LLM or chat model, since most providers offer several models with different trade-offs in capability and cost (larger models are usually more capable, but also more expensive and slower). See here for an overview of the models offered by OpenAI.

Other useful parameters to configure include the following, offered by most providers:

temperature

This one controls how random the sampling used to generate output is. Lower values (for example, 0.1) produce more predictable outputs, while higher values (such as 0.9) generate more creative, or unexpected, results. Different tasks need different values for this parameter. For instance, producing structured output usually benefits from a lower temperature, whereas creative writing tasks do better with a higher value.

max_tokens

This one limits the size (and cost) of the output. A lower value may cause the LLM to stop generating the output before getting to a natural end, so it may appear to have been truncated.
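To make the temperature trade-off concrete, here is a minimal sketch of temperature-scaled softmax, a common way of applying sampling temperature to a model's raw next-token scores (logits). The function name, candidate tokens, and scores are hypothetical. A provider's actual sampling pipeline may include additional steps, such as top-p filtering.

```python
import math

def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by temperature.

    Dividing the logits by a small temperature widens the gaps between them
    (a sharper, more predictable distribution). A large temperature narrows
    the gaps (a flatter distribution and more varied samples).
    """
    if temperature <= 0:
        # This sketch rejects 0. APIs usually treat 0 as (near-)greedy decoding.
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating, for numerical stability.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for three candidate next tokens after "The sky is".
tokens = ["blue", "clear", "falling"]
logits = [2.0, 1.0, 0.1]

for t in (0.1, 0.9):
    probs = softmax_with_temperature(logits, t)
    print(t, {tok: round(p, 3) for tok, p in zip(tokens, probs)})
```

At 0.1, "blue" takes almost all of the probability. At 0.9, roughly 30% is left for the alternatives, so repeated calls are more likely to differ.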

Beyond these, each provider exposes a different set of parameters. We recommend looking at the documentation for the one you choose. For example, you can see OpenAI’s here.

Alternatively, the chat model interface enables back-and-forth conversations between the user and the model. It's a separate interface because popular LLM providers like OpenAI differentiate messages sent to and from the model into user, assistant, and system roles (here, role denotes the type of content the message contains):

- System role: Enables the developer to specify instructions the model should use to answer a user question.

- User role: The individual asking questions and generating the queries sent to the model.

- Assistant role: The model's responses to the user's query.

The chat models interface makes it easier to configure and manage conversations in your AI chatbot application. Here's an example using LangChain's ChatOpenAI model, first in Python:

from langchain_openai.chat_models import ChatOpenAI

from langchain_core.messages import HumanMessage

model = ChatOpenAI()

prompt = [HumanMessage('What is the capital of France?')]

completion = model.invoke(prompt)

completion

And now in JS:

import {ChatOpenAI} from '@langchain/openai'

import {HumanMessage} from '@langchain/core/messages'

const model = new ChatOpenAI()

const prompt = [new HumanMessage('What is the capital of France?')]

const completion = await model.invoke(prompt)

console.log(completion)

And the output:

AIMessage(content='The capital of France is Paris.')

Instead of a single prompt string, chat models make use of different types of chat message interfaces associated with each role mentioned previously. These include the following:

- HumanMessage: A message sent from the perspective of the human, with the user role.

- AIMessage: A message sent from the perspective of the AI the human is interacting with, with the assistant role.

- SystemMessage: A message setting the instructions the AI should follow, with the system role.

- ChatMessage: A message allowing for arbitrary setting of role.
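Under the hood, a chat model integration translates message objects like these into the role-tagged format the provider's API expects. The sketch below imitates that mapping using hypothetical, minimal stand-in classes, not LangChain's own message classes. It produces the list of role and content dictionaries that OpenAI's Chat Completions API accepts.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for LangChain's message classes, for illustration only.
@dataclass
class SystemMessage:
    content: str

@dataclass
class HumanMessage:
    content: str

@dataclass
class AIMessage:
    content: str

@dataclass
class ChatMessage:
    role: str  # an arbitrary role, set explicitly by the caller
    content: str

# Fixed role for each message type; ChatMessage carries its own role.
ROLES = {SystemMessage: "system", HumanMessage: "user", AIMessage: "assistant"}

def to_provider_format(messages):
    """Map each message to a {"role": ..., "content": ...} dict."""
    result = []
    for msg in messages:
        role = msg.role if isinstance(msg, ChatMessage) else ROLES[type(msg)]
        result.append({"role": role, "content": msg.content})
    return result

conversation = [
    SystemMessage("You are a helpful assistant that responds to questions with three exclamation marks."),
    HumanMessage("What is the capital of France?"),
    AIMessage("Paris!!!"),
]
print(to_provider_format(conversation))
```

Including the model's earlier replies as assistant messages is how a multi-turn conversation history gets sent back to the model. In LangChain you pass the real message objects, and the integration handles this kind of translation for you.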

Let’s incorporate a SystemMessage instruction in our example, first in Python:

from langchain_core.messages import AIMessage, HumanMessage, SystemMessage

from langchain_openai.chat_models import ChatOpenAI

model = ChatOpenAI()

system_msg = SystemMessage('You are a helpful assistant that responds to questions with three exclamation marks.')

human_msg = HumanMessage('What is the capital of France?')

completion = model.invoke([system_msg, human_msg])

completion

And now in JS:

import {ChatOpenAI} from '@langchain/openai'

import {HumanMessage, SystemMessage} from '@langchain/core/messages'

const model = new ChatOpenAI()

const prompt = [

new SystemMessage('You are a helpful assistant that responds to questions with three exclamation marks.'),

new HumanMessage('What is the capital of France?')

]

const completion = await model.invoke(prompt)

console.log(completion)

And the output:

AIMessage('Paris!!!')

As you can see, the model obeyed the instruction provided in the SystemMessage even though it wasn’t present in the user’s question. This enables you to pre-configure your AI application to respond in a relatively predictable manner based on the user’s input.

Making LLM Prompts Reusable

The previous section showed how the prompt instruction significantly influences the model’s output. Prompts help the model understand context and generate relevant answers to queries.

Here is an example of a detailed prompt:

Answer the question based on the context below. If the question cannot be answered using the information provided answer with "I don't know". Context: The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face's `transformers` library, or by utilizing OpenAI and Cohere's offerings through the `openai` and `cohere` libraries respectively. Question: Which model providers offer LLMs? Answer:

Although the prompt looks like a simple string, the challenge is figuring out what the text should contain, and how it should vary based on the user’s input. In this example, the Context and Question values are hardcoded, but what if we wanted to pass these in dynamically?

Fortunately, LangChain provides prompt template interfaces that make it easy to construct prompts with dynamic inputs, first in Python:

from langchain_core.prompts import PromptTemplate

template = PromptTemplate.from_template("""Answer the question based on the context below. If the question cannot be answered using the information provided answer with "I don't know".

Context: {context}

Question: {question}

Answer: """)

prompt = template.invoke({

"context": "The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face's `transformers` library, or by utilizing OpenAI and Cohere's offerings through the `openai` and `cohere` libraries respectively.",

"question": "Which model providers offer LLMs?"

})

And in JS:

import {PromptTemplate} from '@langchain/core/prompts'

const template = PromptTemplate.fromTemplate(`Answer the question based on the context below. If the question cannot be answered using the information provided answer with "I don't know".

Context: {context}

Question: {question}

Answer: `)

const prompt = await template.invoke({

context: "The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face's `transformers` library, or by utilizing OpenAI and Cohere's offerings through the `openai` and `cohere` libraries respectively.",

question: "Which model providers offer LLMs?"

})

And the output:

StringPromptValue(text='Answer the question based on the context below. If the question cannot be answered using the information provided answer with "I don\'t know".\n\nContext: The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face\'s `transformers` library, or by utilizing OpenAI and Cohere\'s offerings through the `openai` and `cohere` libraries respectively.\n\nQuestion: Which model providers offer LLMs?\n\nAnswer: ')

That example takes the static prompt from the previous block and makes it dynamic. The template contains the structure of the final prompt alongside the definition of where the dynamic inputs will be inserted.

As such, the template can be used as a recipe to build multiple static, specific prompts. When you format the template with specific values, here context and question, you get a static prompt ready to be passed to an LLM.

As you can see, the context and question arguments are passed in dynamically via the invoke method. By default, LangChain prompts follow Python's f-string syntax for defining dynamic parameters: any word surrounded by curly braces, such as {question}, is a placeholder for a value passed in at runtime. In the example above, {question} was replaced by “Which model providers offer LLMs?”.
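Because the default syntax follows Python's own str.format and f-string conventions, plain Python can show what formatting a template does. This is a standard-library sketch, not LangChain's implementation. string.Formatter().parse lists the placeholder names, which is roughly what a template treats as its input variables, and str.format fills them in. The helper input_variables and the shortened context text are hypothetical. As with f-strings, a literal brace must be written doubled ({{ or }}).

```python
from string import Formatter

template = (
    "Answer the question based on the context below.\n\n"
    "Context: {context}\n\n"
    "Question: {question}\n\n"
    "Answer: "
)

def input_variables(tmpl):
    """Return placeholder names in order of appearance, without duplicates."""
    names = []
    for _literal, field_name, _spec, _conversion in Formatter().parse(tmpl):
        # field_name is None for trailing literal text with no placeholder.
        if field_name and field_name not in names:
            names.append(field_name)
    return names

print(input_variables(template))  # ['context', 'question']

# Filling the placeholders yields a static prompt ready to send to a model.
prompt = template.format(
    context="LLMs are offered by OpenAI and Cohere, among others.",
    question="Which model providers offer LLMs?",
)
print(prompt)
```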

Let's see how we'd feed this into an OpenAI LLM using LangChain, first in Python:

from langchain_openai.llms import OpenAI

from langchain_core.prompts import PromptTemplate

# both `template` and `model` can be reused many times

template = PromptTemplate.from_template("""Answer the question based on the context below. If the question cannot be answered using the information provided answer with "I don't know".

Context: {context}

Question: {question}

Answer: """)

model = OpenAI()

# `prompt` and `completion` are the results of using template and model once

prompt = template.invoke({

"context": "The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face's `transformers` library, or by utilizing OpenAI and Cohere's offerings through the `openai` and `cohere` libraries respectively.",

"question": "Which model providers offer LLMs?"

})

completion = model.invoke(prompt)

And in JS:

import {PromptTemplate} from '@langchain/core/prompts'

import {OpenAI} from '@langchain/openai'

const model = new OpenAI()

const template = PromptTemplate.fromTemplate(`Answer the question based on the context below. If the question cannot be answered using the information provided answer with "I don't know".

Context: {context}

Question: {question}

Answer: `)

const prompt = await template.invoke({

context: "The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face's `transformers` library, or by utilizing OpenAI and Cohere's offerings through the `openai` and `cohere` libraries respectively.",

question: "Which model providers offer LLMs?"

})

const completion = await model.invoke(prompt)

And the output:

Hugging Face's `transformers` library, OpenAI using the `openai` library, and Cohere using the `cohere` library offer LLMs.

If you’re looking to build an AI chat application, the chat prompt template can be used instead to provide dynamic inputs based on the role of the chat message. First in Python:

from langchain_core.prompts import ChatPromptTemplate

template = ChatPromptTemplate.from_messages([

('system', 'Answer the question based on the context below. If the question cannot be answered using the information provided answer with "I don\'t know".'),

('human', 'Context: {context}'),

('human', 'Question: {question}'),

])

prompt = template.invoke({

"context": "The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face's `transformers` library, or by utilizing OpenAI and Cohere's offerings through the `openai` and `cohere` libraries respectively.",

"question": "Which model providers offer LLMs?"

})

And in JS:

import {ChatPromptTemplate} from '@langchain/core/prompts'

const template = ChatPromptTemplate.fromMessages([

['system', 'Answer the question based on the context below. If the question cannot be answered using the information provided answer with "I don\'t know".'],

['human', 'Context: {context}'],

['human', 'Question: {question}'],

])

const prompt = await template.invoke({

context: "The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face's `transformers` library, or by utilizing OpenAI and Cohere's offerings through the `openai` and `cohere` libraries respectively.",

question: "Which model providers offer LLMs?"

})

And the output:

ChatPromptValue(messages=[SystemMessage(content='Answer the question based on the context below. If the question cannot be answered using the information provided answer with "I don\'t know".'), HumanMessage(content="Context: The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face\'s `transformers` library, or by utilizing OpenAI and Cohere\'s offerings through the `openai` and `cohere` libraries respectively."), HumanMessage(content='Question: Which model providers offer LLMs?')])

Notice how the prompt contains instructions in a SystemMessage and two HumanMessages that contain dynamic context and question variables. You can still format the template in the same way, and get back a static prompt that you can pass to a large language model for a prediction output. First in Python:

from langchain_openai.chat_models import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

# both `template` and `model` can be reused many times

template = ChatPromptTemplate.from_messages([

('system', 'Answer the question based on the context below. If the question cannot be answered using the information provided answer with "I don\'t know".'),

('human', 'Context: {context}'),

('human', 'Question: {question}'),

])

model = ChatOpenAI()

# `prompt` and `completion` are the results of using template and model once

prompt = template.invoke({

"context": "The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face's `transformers` library, or by utilizing OpenAI and Cohere's offerings through the `openai` and `cohere` libraries respectively.",

"question": "Which model providers offer LLMs?"

})

completion = model.invoke(prompt)

And in JS:

import {ChatPromptTemplate} from '@langchain/core/prompts'

import {ChatOpenAI} from '@langchain/openai'

const model = new ChatOpenAI()

const template = ChatPromptTemplate.fromMessages([

['system', 'Answer the question based on the context below. If the question cannot be answered using the information provided answer with "I don\'t know".'],

['human', 'Context: {context}'],

['human', 'Question: {question}'],

])

const prompt = await template.invoke({

context: "The most recent advancements in NLP are being driven by Large Language Models (LLMs). These models outperform their smaller counterparts and have become invaluable for developers who are creating applications with NLP capabilities. Developers can tap into these models through Hugging Face's `transformers` library, or by utilizing OpenAI and Cohere's offerings through the `openai` and `cohere` libraries respectively.",

question: "Which model providers offer LLMs?"

})

const completion = await model.invoke(prompt)

And the output:

AIMessage(content="Hugging Face's `transformers` library, OpenAI using the `openai` library, and Cohere using the `cohere` library offer LLMs.")

Getting Specific Formats out of LLMs

Plain text outputs are useful, but there may be use cases where you need the LLM to generate a structured output, that is, output in a machine-readable format, such as JSON, XML or CSV, or even in a programming language such as Python or JavaScript. This is very useful when you intend to hand that output off to some other piece of code, making an LLM play a part in your larger application.

JSON Output

The most common format to generate with LLMs is JSON, which you can then, for instance:

- Send over the wire to your frontend code

- Save to a database

When generating JSON, the first task is to define the schema you want the LLM to respect when producing the output. Then, you should include that schema in the prompt, along with the text you want to use as the source. Let’s see an example, first in Python:

from langchain_openai import ChatOpenAI

from pydantic import BaseModel, Field

class AnswerWithJustification(BaseModel):

    '''An answer to the user question along with justification for the answer.'''

    answer: str = Field(description="The answer to the user's question")

    justification: str = Field(description="Justification for the answer")

llm = ChatOpenAI(model="gpt-3.5-turbo-0125", temperature=0)

structured_llm = llm.with_structured_output(AnswerWithJustification)

structured_llm.invoke("What weighs more, a pound of bricks or a pound of feathers")

And in JS:

import {ChatOpenAI} from '@langchain/openai'

import {z} from "zod";

const answerSchema = z

.object({

answer: z.string().describe("The answer to the user's question"),

justification: z.string().describe("Justification for the answer"),

})

.describe("An answer to the user question along with justification for the answer.");

const model = new ChatOpenAI({model: "gpt-3.5-turbo-0125", temperature: 0}).withStructuredOutput(answerSchema)

console.log(await model.invoke("What weighs more, a pound of bricks or a pound of feathers"))

And the output:

{

answer: "They weigh the same",

justification: "Both a pound of bricks and a pound of feathers weigh one pound. The weight is the same, but the volu"... 42 more characters

}

So, first define a schema. In Python this is easiest to do with Pydantic (a library used for validating data against schemas). In JS this is easiest to do with Zod (an equivalent library). The with_structured_output method (withStructuredOutput in JS) will use that schema for two things:

- The schema will be converted to a JSON Schema object (a JSON format used to describe the shape of JSON data, that is, the names, types, and descriptions of its fields), which will be sent to the LLM. For each LLM, LangChain picks the most suitable method to do this, usually function calling or prompting.

- The schema will also be used to validate the output returned by the LLM before returning it. This ensures that any output you receive conforms to the schema you passed in.
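The two steps can be illustrated with the standard library alone. The sketch below uses a hand-written JSON Schema for the AnswerWithJustification shape and a deliberately minimal, hypothetical validate helper that checks a raw JSON reply against it. The schema LangChain generates from a Pydantic or Zod class may differ in its details, and real validation is done by Pydantic or Zod, not by code like this.

```python
import json

# Hand-written JSON Schema describing the AnswerWithJustification shape.
answer_schema = {
    "title": "AnswerWithJustification",
    "description": "An answer to the user question along with justification for the answer.",
    "type": "object",
    "properties": {
        "answer": {"type": "string", "description": "The answer to the user's question"},
        "justification": {"type": "string", "description": "Justification for the answer"},
    },
    "required": ["answer", "justification"],
}

def validate(raw_reply, schema):
    """Parse a JSON string, then check required keys and string types.

    Covers only the features this schema uses. Raises ValueError on mismatch.
    """
    data = json.loads(raw_reply)  # json.JSONDecodeError is a ValueError subclass
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key in schema["required"]:
        if key not in data:
            raise ValueError(f"missing required field: {key}")
    for key, spec in schema["properties"].items():
        if key in data and spec["type"] == "string" and not isinstance(data[key], str):
            raise ValueError(f"field {key} must be a string")
    return data

reply = '{"answer": "They weigh the same", "justification": "Both weigh one pound."}'
print(validate(reply, answer_schema)["answer"])  # They weigh the same
```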

Other Machine-Readable Formats with Output Parsers

You can also use an LLM or Chat Model to produce output in other formats, such as CSV or XML. This is where output parsers come in handy. Output parsers are classes that help you structure large language model responses. They serve two functions:

- Providing format instructions: Output parsers can be used to inject additional instructions into the prompt that guide the LLM to output text in a format the parser knows how to parse.

- Validating and parsing output: The main function is to take the textual output of the LLM or chat model and render it in a more structured format, such as a list, XML, and so on. This can include removing extraneous information, correcting incomplete output, and validating the parsed values.

Here’s an example of how an output parser works, first in Python:

from langchain_core.output_parsers import CommaSeparatedListOutputParser

parser = CommaSeparatedListOutputParser()

items = parser.invoke("apple, banana, cherry")

items

And in JS:

import {CommaSeparatedListOutputParser} from '@langchain/core/output_parsers'

const parser = new CommaSeparatedListOutputParser()

console.log(await parser.invoke("apple, banana, cherry"))

And the output:

['apple', 'banana', 'cherry']
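To show both jobs of an output parser side by side, here is a hypothetical, stripped-down parser written in plain Python. Its method names (format_instructions and parse) and its instruction wording are assumptions made for illustration. LangChain's parsers expose their own equivalents, such as get_format_instructions() and invoke().

```python
class ToyCommaListParser:
    """Hypothetical parser illustrating the two jobs of an output parser."""

    def format_instructions(self):
        # Job 1: text to add to the prompt so the model replies in a parseable format.
        return "Respond only with a comma-separated list of values, for example: red, green, blue"

    def parse(self, text):
        # Job 2: turn raw model text into a Python list, dropping stray
        # whitespace, a trailing period, and empty items.
        cleaned = text.strip().rstrip(".")
        return [item.strip() for item in cleaned.split(",") if item.strip()]

parser = ToyCommaListParser()
prompt = "List three fruits.\n" + parser.format_instructions()
print(parser.parse(" apple, banana , cherry. "))  # ['apple', 'banana', 'cherry']
```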

LangChain provides a variety of output parsers for various use cases, including CSV, XML, and more. We’ll see how to combine output parsers with models and prompts in the next section.

Assembling the Many Pieces of an LLM Application

The key components you've learned about so far are essential building blocks of the LangChain framework. This brings us to the critical question: how do you combine them effectively to build your LLM application?

Using the Runnable Interface

As you may have noticed, all the code examples so far use a similar interface, and the invoke() method, to generate outputs from the model (or prompt template, or output parser). All components have the following:

- A common interface with these methods:

  - invoke: Transforms a single input into an output.

  - batch: Efficiently transforms multiple inputs into multiple outputs.

  - stream: Streams output from a single input as it's produced.

- Built-in utilities for retries, fallbacks, schemas, and runtime configurability.

- In Python, each of the three methods above has an asyncio equivalent: ainvoke, abatch, and astream.

As such, all components behave the same way, and the interface you learn for one of them applies to all. First in Python:

from langchain_openai.llms import OpenAI

model = OpenAI()

completion = model.invoke('Hi there!')

# Hi!

completions = model.batch(['Hi there!', 'Bye!'])

# ['Hi!', 'See you!']

for token in model.stream('Bye!'):

    print(token)

# Good

# bye

# !

And in JS:

import {OpenAI} from '@langchain/openai'

const model = new OpenAI()

const completion = await model.invoke('Hi there!')

// Hi!

const completions = await model.batch(['Hi there!', 'Bye!'])

// ['Hi!', 'See you!']

for await (const token of await model.stream('Bye!')) {

console.log(token)

// Good

// bye

// !

}

There you see how the three main methods work:

- invoke() takes a single input and returns a single output.

- batch() takes a list of inputs and returns a list of outputs.

- stream() takes a single input and returns an iterator of parts of the output as they become available.

In some cases, if the underlying component doesn’t support iterative output, there will be a single part containing all output.
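One way to picture this shared interface is as a base class where invoke is the only method a component must implement. Here, batch calls invoke on each input concurrently through a thread pool, and stream falls back to yielding the whole result as a single part, as just described. The class names ToyRunnable, Shout, and WordStreamer are hypothetical. This is a simplification for illustration, not LangChain's Runnable class.

```python
from concurrent.futures import ThreadPoolExecutor

class ToyRunnable:
    """Hypothetical base class sketching the invoke/batch/stream contract."""

    def invoke(self, value):
        raise NotImplementedError

    def batch(self, values):
        # Run invoke on every input concurrently. pool.map keeps input order.
        with ThreadPoolExecutor() as pool:
            return list(pool.map(self.invoke, values))

    def stream(self, value):
        # Fallback for components without incremental output: one single part.
        yield self.invoke(value)

class Shout(ToyRunnable):
    def invoke(self, value):
        return value.upper()

class WordStreamer(ToyRunnable):
    def invoke(self, value):
        return "".join(self.stream(value))

    def stream(self, value):
        # Overrides the fallback to emit the output word by word.
        for i, word in enumerate(value.split()):
            yield word if i == 0 else " " + word

print(Shout().invoke("hi there!"))                # HI THERE!
print(Shout().batch(["hi", "bye"]))               # ['HI', 'BYE']
print(list(Shout().stream("bye!")))               # ['BYE!']
print(list(WordStreamer().stream("good bye !")))  # ['good', ' bye', ' !']
```

Because every component honors the same three methods, code that calls them does not need to know which kind of component it is talking to.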

You can combine these components in two ways:

- Imperative: Call them directly, for example with model.invoke(...).

- Declarative: Use LangChain Expression Language (LCEL), covered in an upcoming section.

Table 1-1 summarizes their differences, and we'll see each in action next.

| | Imperative | Declarative |
|---|---|---|
| Syntax | All of Python or JavaScript | LCEL |
| Parallel execution | Python: with threads or coroutines; JavaScript: with Promise.all | Automatic |
| Streaming | With the yield keyword | Automatic |
| Async execution | With async functions | Automatic |

Imperative Composition

Imperative composition is just a fancy name for writing the code you’re used to writing, composing these components into functions and classes. Here’s an example combining prompts, models, and output parsers, first in Python:

from langchain_openai.chat_models import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

from langchain_core.runnables import chain

# the building blocks

template = ChatPromptTemplate.from_messages([

('system', 'You are a helpful assistant.'),

('human', '{question}'),

])

model = ChatOpenAI()

# combine them in a function

# @chain decorator adds the same Runnable interface for any function you write

@chain

def chatbot(values):

    prompt = template.invoke(values)

    return model.invoke(prompt)

# use it

result = chatbot.invoke({

"question": "Which model providers offer LLMs?"

})

And in JS:

import {ChatOpenAI} from '@langchain/openai'

import {ChatPromptTemplate} from '@langchain/core/prompts'

import {RunnableLambda} from '@langchain/core/runnables'

// the building blocks

const template = ChatPromptTemplate.fromMessages([

['system', 'You are a helpful assistant.'],

['human', '{question}'],

])

const model = new ChatOpenAI()

// combine them in a function

// RunnableLambda adds the same Runnable interface for any function you write

const chatbot = RunnableLambda.from(async values => {

const prompt = await template.invoke(values)

return await model.invoke(prompt)

})

// use it

const result = await chatbot.invoke({

"question": "Which model providers offer LLMs?"

})

And the output:

AIMessage(content="Hugging Face's `transformers` library, OpenAI using the `openai` library, and Cohere using the `cohere` library offer LLMs.")

The preceding is a complete example of a chatbot, using a prompt and a chat model. As you can see, it uses familiar Python (or JavaScript) syntax and supports any custom logic you might want to add in that function.

On the other hand, if you want to enable streaming or async support, you’d have to modify your function to support it. For example, streaming support can be added as follows, in Python first:

@chain

def chatbot(values):

    prompt = template.invoke(values)

    for token in model.stream(prompt):

        yield token

for part in chatbot.stream({

    "question": "Which model providers offer LLMs?"

}):

    print(part)

And in JS:

const chatbot = RunnableLambda.from(async function* (values) {

const prompt = await template.invoke(values)

for await (const token of await model.stream(prompt)) {

yield token

}

})

for await (const token of await chatbot.stream({

"question": "Which model providers offer LLMs?"

})) {

console.log(token)

}

And the output:

AIMessageChunk(content="Hugging")

AIMessageChunk(content=" Face's")

AIMessageChunk(content=" `transformers`")

...

So, either in JS or Python, you can enable streaming for your custom function by yielding the values you want to stream, and then calling it with stream.
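This mechanism is ordinary generator behavior. In the plain-Python sketch below, fake_model_stream is a hypothetical stand-in for model.stream that yields pieces of a canned answer, and build_prompt is a hypothetical stand-in for the prompt template. Because chatbot_stream re-yields each piece as soon as it arrives, the caller can print parts before the full answer exists. No LangChain objects are involved.

```python
import time

def fake_model_stream(prompt):
    """Hypothetical stand-in for a streaming model: yields canned chunks."""
    for chunk in ["Hugging", " Face's", " `transformers`", " library"]:
        time.sleep(0.01)  # simulate latency between chunks
        yield chunk

def build_prompt(values):
    """Hypothetical stand-in for a prompt template."""
    return f"Question: {values['question']}"

def chatbot_stream(values):
    prompt = build_prompt(values)
    # Pass each chunk on immediately instead of collecting them first.
    for chunk in fake_model_stream(prompt):
        yield chunk

received = []
for part in chatbot_stream({"question": "Which model providers offer LLMs?"}):
    received.append(part)
    print(part)
```

Writing yield from fake_model_stream(prompt) inside chatbot_stream would be an equivalent shorthand for the loop.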

And for asynchronous execution you’d rewrite your function like this, in Python:

@chain

async def chatbot(values):

    prompt = await template.ainvoke(values)

    return await model.ainvoke(prompt)

result = await chatbot.ainvoke({

"question": "Which model providers offer LLMs?"

})

# > AIMessage(content="Hugging Face's `transformers` library, OpenAI using the `openai` library, and Cohere using the `cohere` library offer LLMs.")

This applies to Python only, as asynchronous execution is the only option in JavaScript.
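Async functions pay off when several calls can overlap. This is the coroutine route to parallel execution listed in the imperative column of the comparison table. The sketch below uses a hypothetical fake_ainvoke coroutine that sleeps to simulate a slow model call. asyncio.gather runs three of these calls concurrently, so the total time stays close to a single call's delay rather than three times it.

```python
import asyncio
import time

async def fake_ainvoke(question):
    """Hypothetical stand-in for model.ainvoke: waits, then returns a reply."""
    await asyncio.sleep(0.2)  # simulate waiting on the network
    return f"Answer to: {question}"

async def main():
    questions = ["What is LangChain?", "What is LCEL?", "What is a prompt template?"]
    start = time.perf_counter()
    # gather schedules all three coroutines at once and waits for every result.
    answers = await asyncio.gather(*(fake_ainvoke(q) for q in questions))
    elapsed = time.perf_counter() - start
    return answers, elapsed

answers, elapsed = asyncio.run(main())
print(answers)
print(f"{elapsed:.2f}s")  # roughly 0.2s, not 0.6s
```

This runs as a script. In a Jupyter notebook an event loop is already running, so asyncio.run raises an error there. Use answers, elapsed = await main() instead.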

Declarative Composition

LangChain Expression Language (LCEL) is a declarative language for composing LangChain components. LangChain compiles LCEL compositions to an optimized execution plan, with automatic parallelization, streaming, tracing, and async support.

Let’s see the same example using LCEL, first in Python:

from langchain_openai.chat_models import ChatOpenAI

from langchain_core.prompts import ChatPromptTemplate

# the building blocks

template = ChatPromptTemplate.from_messages([

('system', 'You are a helpful assistant.'),

('human', '{question}'),

])

model = ChatOpenAI()

# combine them with the | operator

chatbot = template | model

# use it

result = chatbot.invoke({

"question": "Which model providers offer LLMs?"

})

And in JS:

import {ChatOpenAI} from '@langchain/openai'

import {ChatPromptTemplate} from '@langchain/core/prompts'


// the building blocks

const template = ChatPromptTemplate.fromMessages([

['system', 'You are a helpful assistant.'],

['human', '{question}'],

])

const model = new ChatOpenAI()

// combine them with the pipe() method

const chatbot = template.pipe(model)

// use it

const result = await chatbot.invoke({

"question": "Which model providers offer LLMs?"

})

And the output:

AIMessage(content="Hugging Face's `transformers` library, OpenAI using the `openai` library, and Cohere using the `cohere` library offer LLMs.")

Crucially, the last line is the same between the two examples—that is, you use the function and the LCEL sequence in the same way, with invoke/stream/batch. And in this version, you don’t need to do anything else to use streaming, first in Python:

chatbot = template | model

for part in chatbot.stream({

"question": "Which model providers offer LLMs?"

}):

print(part)

# > AIMessageChunk(content="Hugging")

# > AIMessageChunk(content=" Face's")

# > AIMessageChunk(content=" `transformers`")

# ...

And in JS:

const chatbot = template.pipe(model)

for await (const token of await chatbot.stream({

"question": "Which model providers offer LLMs?"

})) {

console.log(token)

}

And, for Python only, it’s the same for using asynchronous methods:

chatbot = template | model

result = await chatbot.ainvoke({

"question": "Which model providers offer LLMs?"

})
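The | operator itself is plain Python operator overloading. The sketch below shows the idea: defining __or__ lets a | b build a new object whose invoke feeds the output of a into b, and whose stream passes through the chunks from b. The Step class and the two stand-in steps (for a prompt template and a model) are hypothetical. This is far simpler than LangChain's actual RunnableSequence.

```python
class Step:
    """Hypothetical composable step wrapping a plain function."""

    def __init__(self, fn, stream_fn=None):
        self.fn = fn
        self.stream_fn = stream_fn

    def invoke(self, value):
        return self.fn(value)

    def stream(self, value):
        if self.stream_fn is None:
            yield self.invoke(value)  # no incremental output: a single chunk
        else:
            yield from self.stream_fn(value)

    def __or__(self, other):
        # a | b returns a new Step that runs a, then b.
        def stream_fn(value):
            # Simplification: the first step runs to completion, the last one streams.
            yield from other.stream(self.invoke(value))
        return Step(lambda value: other.invoke(self.invoke(value)), stream_fn)

# Hypothetical stand-ins for a prompt template and a chat model.
template = Step(lambda values: f"Question: {values['question']}")
model = Step(
    lambda prompt: f"Echo of [{prompt}]",
    stream_fn=lambda prompt: iter(["Echo of ", f"[{prompt}]"]),
)

chatbot = template | model
print(chatbot.invoke({"question": "Which model providers offer LLMs?"}))
for part in chatbot.stream({"question": "Hi?"}):
    print(part)
```

As in the LCEL examples, the composed chatbot is used through the same invoke and stream methods as its parts, with no extra streaming code written for the sequence itself.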

Summary

In this chapter, you’ve learned about the building blocks and key components necessary to build LLM applications using LangChain. LLM applications are essentially a chain consisting of the large language model to make predictions, the prompt instruction(s) to guide the model towards a desired output, and an optional output parser to transform the format of the model’s output.

All LangChain components share the same interface with invoke, stream, and batch methods to handle various inputs and outputs. They can either be combined and executed imperatively by calling them directly or declaratively using LangChain Expression Language (LCEL).

The imperative approach is useful if you intend to write a lot of custom logic, whereas the declarative approach is useful for simply assembling existing components with limited customization.

In the next chapter, you’ll learn how to provide external data to your AI chatbot as context, so that you can build an LLM application that enables you to “chat” with your data.
