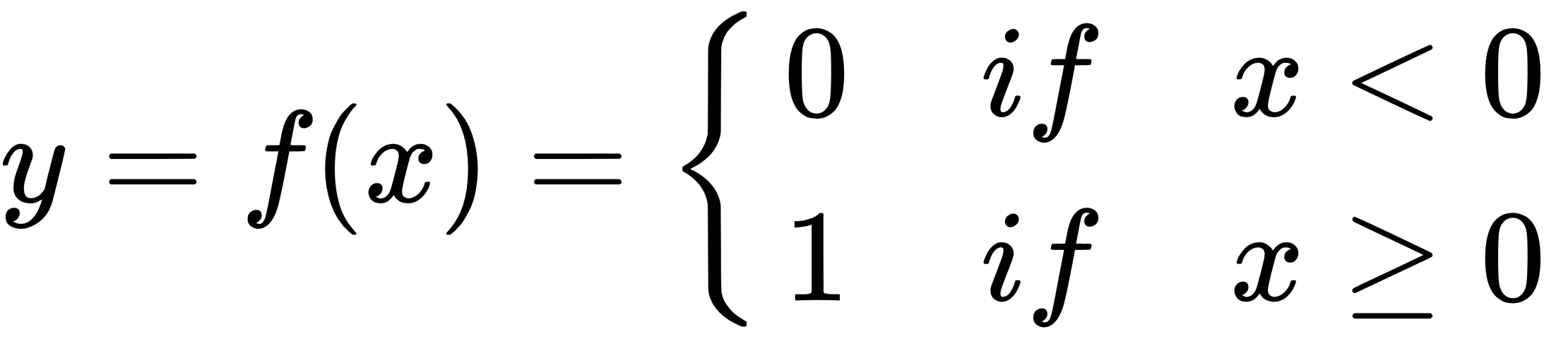

The Rectified Linear Unit (ReLU) has been the most widely used activation function since 2015. It is a simple threshold condition that is cheap to compute, which gives it advantages over the other functions. The function is defined by the following formula:

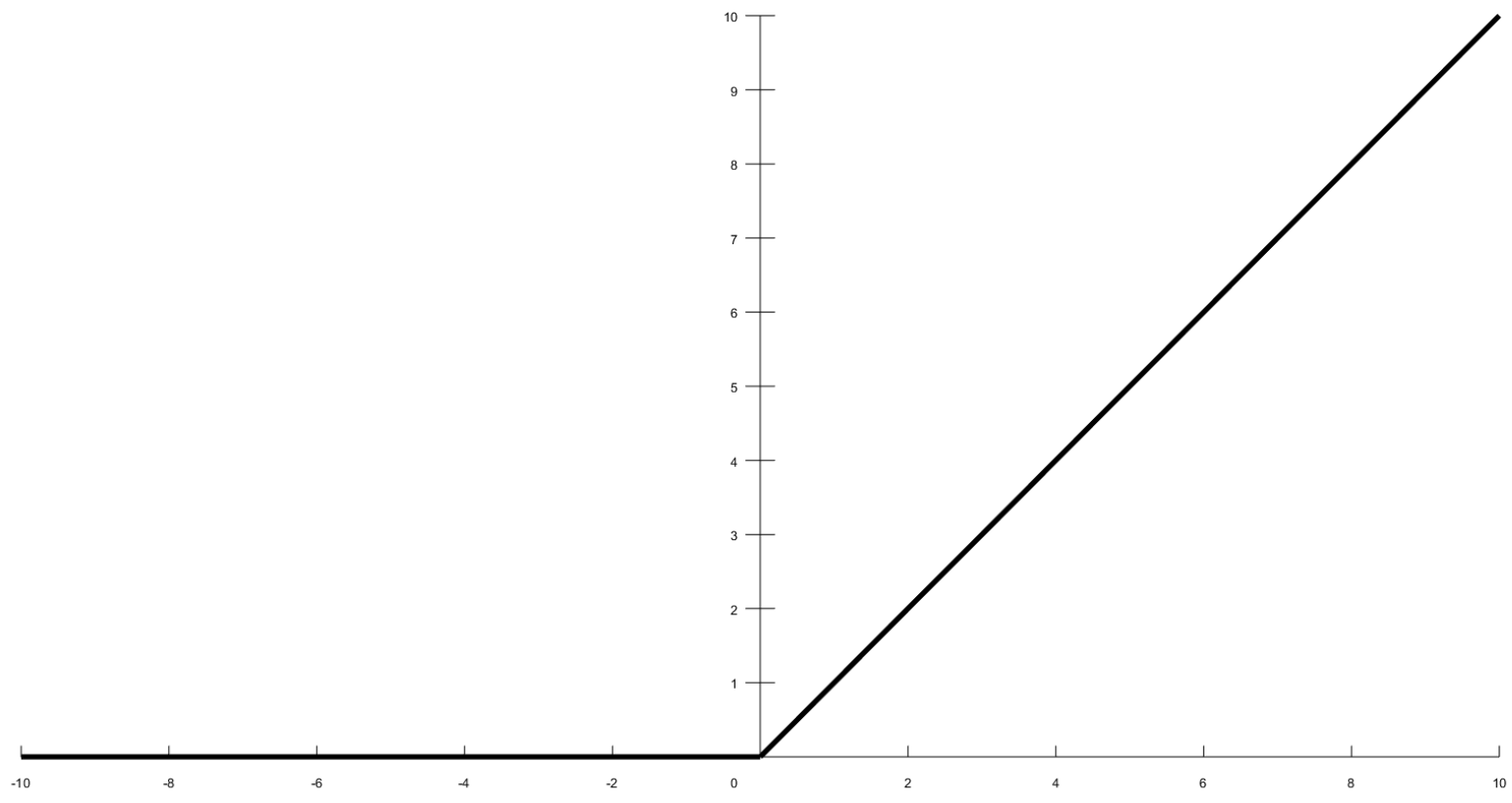

The following screenshot shows a ReLU activation function:

The output ranges from 0 to infinity: negative inputs map to 0, and positive inputs pass through unchanged. ReLU is widely used in deep neural networks for computer vision and speech recognition. There are various other activation functions as well, but we've covered the most important ones here.
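To make the output range concrete, here is a minimal sketch in plain Python. It assumes the formula shown earlier is the standard definition f(x) = max(0, x). The function name `relu` and the sample inputs are illustrative choices, not taken from any particular library.

```python
def relu(x: float) -> float:
    """Return x for positive inputs and 0 otherwise, assuming f(x) = max(0, x)."""
    return max(0.0, x)


# Hypothetical sample inputs covering negative, zero, and positive values.
inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
outputs = [relu(x) for x in inputs]

print(outputs)  # [0.0, 0.0, 0.0, 0.5, 2.0]
```

Every negative input is clipped to 0, while positive inputs come back unchanged, so the output never drops below 0 and has no upper bound. In practice, deep learning frameworks apply the same rule element-wise to whole tensors rather than to single numbers.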