Chapter 1. Introduction to Service Mesh

In this chapter, we explore the notion of a service mesh and the vast ecosystem that has emerged in support of service mesh solutions. Organizations face many challenges when managing services, especially in a cloud native environment. We are introducing service mesh as a key solution on your cloud native journey because we believe that a service mesh should be a serious consideration for managing complex interactions between services. An understanding of service mesh and its ecosystem will help you choose an appropriate implementation for your cloud solution.

Challenges in Managing Microservices

Microservices are an architecture and development approach that breaks down business functions into individually deployable and managed services. We view microservices as loosely coupled components of an application that communicate with each other using well-defined APIs. A key characteristic of microservices is that you should be able to update them independently of one another, which enables smaller and more frequent deployments. Having many loosely coupled services that change independently and frequently does promote agility, but it also presents a number of management challenges:

Observing interactions between services can be complex when you have many distributed, loosely coupled components.

Traffic management at each service endpoint becomes more important to enable specialized routing for A/B testing or canary deployments without impacting clients within the system.

Securing communication by encrypting the data flows is more complicated when the services are decoupled with different binary processes and possibly written in different languages.

Managing timeouts and communication failures between the services can lead to cascading failures and is more difficult to do correctly when the services are distributed.

Many of these challenges can be resolved directly in the service code. However, adjusting the service code puts a massive burden on you to properly code solutions to these problems, and it requires every microservice owner to agree on the same solution approach to ensure consistency. Solutions to these types of problems are complex, and relying on application code changes to provide them is extremely error prone. Removing the burden of codifying solutions to these problems and reducing the operational costs of managing microservices are the primary reasons we have seen the introduction of the service mesh.
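To make that burden concrete, here is a minimal sketch of the kind of timeout and retry handling that each service owner would otherwise have to write and maintain by hand. The names `call_with_retries` and `CallFailed`, along with every parameter, are hypothetical and chosen only for illustration; a production version would also need to consider idempotency, jitter, and retry budgets.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CallFailed(Exception):
    """Raised when every attempt to reach the remote service has failed."""


def call_with_retries(
    call: Callable[[float], T],
    attempts: int = 3,
    timeout_s: float = 1.0,
    backoff_s: float = 0.1,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Invoke call(timeout_s), retrying on connection errors and timeouts.

    `call` is a hypothetical stand-in for any client function that accepts a
    per-request timeout and raises ConnectionError or TimeoutError on failure.
    """
    last_error: Optional[Exception] = None
    for attempt in range(attempts):
        try:
            return call(timeout_s)
        except (ConnectionError, TimeoutError) as err:
            last_error = err
            if attempt < attempts - 1:
                # Exponential backoff between attempts: 0.1s, 0.2s, 0.4s, ...
                sleep(backoff_s * (2 ** attempt))
    raise CallFailed(f"gave up after {attempts} attempts") from last_error
```

Every service, in every language used by the organization, would need an equivalent of this logic with matching defaults, which is exactly the consistency problem described above.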

What Is a Service Mesh Anyway?

A service mesh is a programmable framework that allows you to observe, secure, and connect microservices. It doesn’t establish connectivity between microservices, but instead has policies and controls that are applied on top of an existing network to govern how microservices interact. Generally a service mesh is agnostic to the language of the application and can be applied to existing applications usually with little to no code changes.

A service mesh, ultimately, shifts implementation responsibilities out of the application and moves them into the network. This is accomplished by injecting behavior and controls alongside the application, which are then applied to the network. This is how you can accomplish things such as metrics collection, communication tracing, and secure communication without changing the applications themselves. As stated earlier, a service mesh is a programmable framework. This means that you can declare your intentions, and the mesh will ensure that your declared intentions are applied to the services and network. A service mesh simplifies the application code, making it easier to write, support, and develop by removing complex logic that normally must be bundled in the application itself. Ultimately, a service mesh allows you to innovate faster.

How Does a Service Mesh Work?

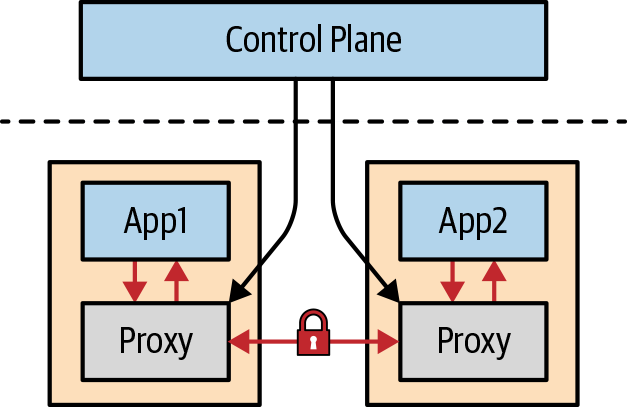

Many service mesh implementations have the same general reference architecture (see Figure 1-1). A service mesh will have a control plane to program the mesh, and client-side proxies in the data plane (shown below the dashed line) that are within the request path and serve as the control point for securing, observing, and routing decisions between services. The control plane transfers configurations to the proxies in their native format. The client-side proxies are attached to each application within the mesh. Each proxy intercepts all inbound and outbound traffic to and from its associated application. By intercepting traffic, the proxies can inject behavior on the communication flows between services. The following behaviors are commonly found in a service mesh implementation:

Traffic shaping with dynamic routing controls between services

Resiliency support for service communication such as circuit breakers, timeouts, and retries

Observability of traffic between services

Tracing of communication flows

Secure communication between services
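As one illustration of the resiliency behaviors in this list, the following is a minimal sketch of a circuit breaker, the sort of logic a proxy can apply on behalf of an application. The class and parameter names (`CircuitBreaker`, `max_failures`, `reset_after_s`) are hypothetical, and real proxies implement considerably more elaborate policies.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(Exception):
    """Raised when the breaker rejects a call without trying the upstream."""


class CircuitBreaker:
    """Stop calling an upstream after repeated failures, then retry later."""

    def __init__(self, max_failures: int = 3, reset_after_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_failures = max_failures
        self.reset_after_s = reset_after_s
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self.reset_after_s:
                # Open state: fail fast instead of piling onto a sick upstream.
                raise CircuitOpenError("upstream temporarily ejected")
            # Cool-down elapsed: allow a trial call (half-open state). One
            # more failure will reopen the circuit immediately.
            self._opened_at = None
            self._failures = self.max_failures - 1
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self._failures >= self.max_failures:
                self._opened_at = self._clock()
            raise
        # Any success closes the circuit and clears the failure count.
        self._failures = 0
        return result
```

Failing fast in the open state is what keeps one unhealthy service from dragging down its callers, which is the cascading-failure problem mentioned earlier in the chapter.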

In Figure 1-1, you can see that the communication between two applications such as App1 and App2 is executed via the proxies rather than directly between the applications themselves, as indicated by the red arrows. Because communication is routed between the proxies, the proxies serve as a key control point for performing complicated tasks such as initiating transport layer security (TLS) handshakes for encrypted communication (shown on the red line with the lock in Figure 1-1). Since the communication is performed between the proxies, there is no need to embed complex networking logic in the applications themselves. Each service mesh implementation has its own set of features, but they all share this general approach.

Figure 1-1. Anatomy of a service mesh
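The reference architecture can be modeled in a few lines of code. The sketch below is a toy, in-memory illustration only: `Mesh` plays the role of the control plane, `SidecarProxy` the data plane, and all names are hypothetical. It shows the control plane pushing weighted routes to every proxy and the proxies recording metrics as traffic passes through them. Encryption and real networking are deliberately left out.

```python
import random
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

Handler = Callable[[str], str]
Routes = Dict[str, List[Tuple[str, int]]]


@dataclass
class SidecarProxy:
    """Data plane: sits in the request path of exactly one application."""
    app_name: str
    app: Handler
    routes: Routes = field(default_factory=dict)
    metrics: Counter = field(default_factory=Counter)

    def outbound(self, mesh: "Mesh", service: str, request: str) -> str:
        # Choose a destination by weight, using routes the control plane pushed.
        targets = self.routes.get(service, [(service, 100)])
        names = [name for name, _ in targets]
        weights = [weight for _, weight in targets]
        chosen = mesh.rng.choices(names, weights=weights, k=1)[0]
        self.metrics[f"outbound:{chosen}"] += 1
        # Traffic goes proxy to proxy, never application to application.
        return mesh.proxies[chosen].inbound(request)

    def inbound(self, request: str) -> str:
        self.metrics["inbound"] += 1
        return self.app(request)


@dataclass
class Mesh:
    """Control plane: holds declared intent and pushes it to every proxy."""
    proxies: Dict[str, SidecarProxy] = field(default_factory=dict)
    routes: Routes = field(default_factory=dict)
    rng: random.Random = field(default_factory=lambda: random.Random(0))

    def attach(self, app_name: str, app: Handler) -> SidecarProxy:
        # New proxies start with a copy of the routes declared so far.
        proxy = SidecarProxy(app_name, app, routes=dict(self.routes))
        self.proxies[app_name] = proxy
        return proxy

    def set_route(self, service: str, targets: List[Tuple[str, int]]) -> None:
        self.routes[service] = list(targets)
        for proxy in self.proxies.values():
            proxy.routes[service] = list(targets)
```

For example, after attaching `app1`, `app2-v1`, and `app2-v2`, calling `mesh.set_route("app2", [("app2-v1", 90), ("app2-v2", 10)])` would send roughly one in ten of App1's requests to the second version without any change to App1 itself.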

The Service Mesh Ecosystem

You might find navigating the service mesh ecosystem a bit daunting because there are many different implementation choices. While most choices share the same reference architecture shown in Figure 1-1, there are variations in approach and project structure you should consider when making your service mesh selection. Here are some questions to ask yourself when selecting a service mesh implementation:

Is it an open source project governed by a diverse contributor base?

Does it use a proprietary proxy?

Is the project part of a foundation?

Does it contain the feature set that you need and want?

The fact that there are many service mesh options validates the interest in service mesh, and it shows that the community has not yet selected a de facto standard, as we have seen with other projects such as Kubernetes for container orchestration. Your answers to these questions will have an impact on the type of service mesh that you prefer, whether it is a single vendor-controlled project or a multivendor, open source project. Let’s take a moment to review the service mesh ecosystem and describe each implementation so that you have a better understanding of what is available.

Envoy

The Envoy proxy is an open source project originally created by the folks at Lyft. The Envoy proxy is an edge and service proxy that was custom built to deal with the complexities and challenges of cloud native applications. While Envoy itself does not constitute a service mesh, it is definitely a key component of the service mesh ecosystem. What you will see from exploring the service mesh implementations is that the client-side proxy from the reference architecture in Figure 1-1 is often implemented using an Envoy proxy.

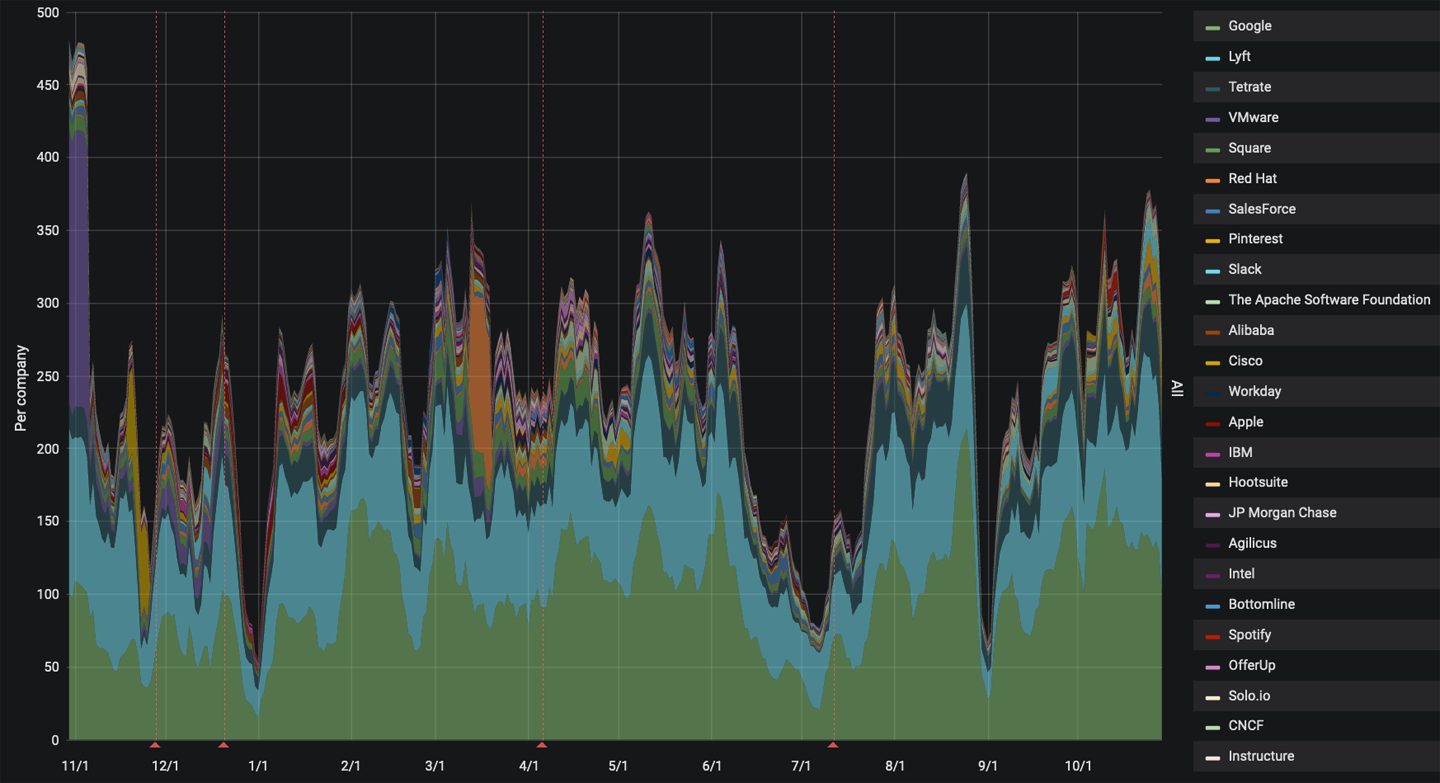

Envoy is one of the six graduated projects in the Cloud Native Computing Foundation (CNCF). The CNCF is part of the Linux Foundation, and it hosts a number of open source projects that are used to manage modern cloud native solutions. The fact that Envoy is a CNCF graduated project is an indicator that it has a strong community, with adopters using the Envoy proxy in production settings. Although Envoy was originally created by Lyft, the open source project has grown into a diverse community, as shown by the company contributions in the CNCF DevStats graph in Figure 1-2.

Figure 1-2. Envoy 12-month contribution distribution

Istio

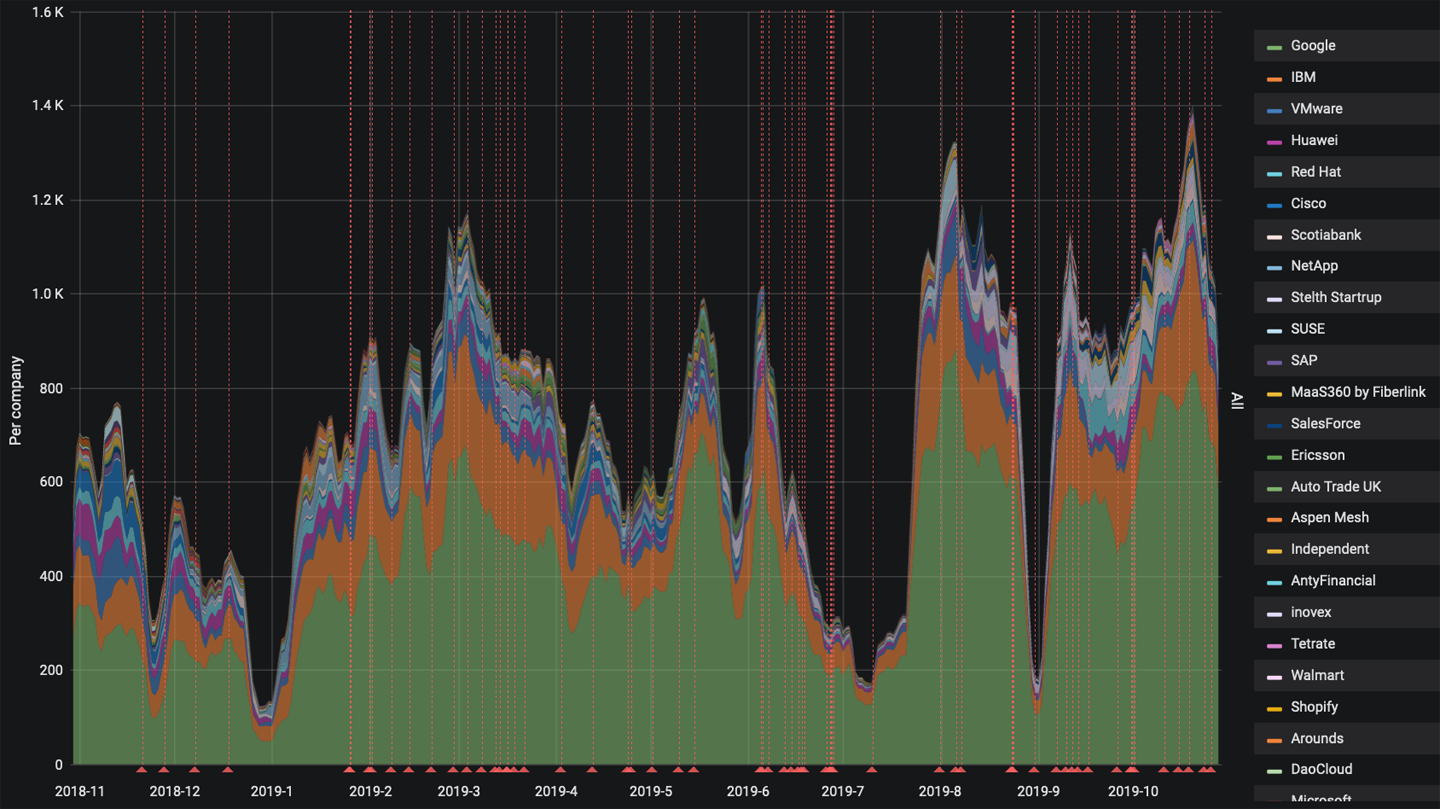

The Istio project is an open source project cofounded by IBM, Google, and Lyft in 2017. Istio makes it possible to connect, secure, and observe your microservices while being language agnostic. Istio has grown to include contributions from companies beyond its original cofounders, companies such as VMware, Cisco, and Huawei, among others. Figure 1-3 shows company contributions over the past 12 months using the CNCF DevStats tool. As of this writing, the open source project Knative also builds upon the Istio project, providing tools and capabilities to build, deploy, and manage serverless workloads. Istio itself builds upon many other open source projects such as Envoy, Kubernetes, Jaeger, and Prometheus. Istio is listed as part of the CNCF Cloud Native Landscape, under the Service Mesh category.

Figure 1-3. Istio 12-month contribution distribution

The Istio control plane extends the Kubernetes API server and utilizes the popular Envoy proxy for its client-side proxies. Istio supports mutual TLS authentication (mTLS) communication between services, traffic shifting, mesh gateways, monitoring and metrics with Prometheus and Grafana, as well as custom policy injection. Istio has installation profiles such as demo and production to make it easier to provision and configure the Istio control plane for specific use cases.
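To give a feel for what traffic shifting looks like as declared intent, here is a sketch that builds an Istio `VirtualService` resource as a plain Python dictionary and prints it as JSON, a format the Kubernetes API accepts alongside YAML. The helper name `traffic_shift_manifest`, the `reviews` host, and the `v1`/`v2` subsets are hypothetical. The `apiVersion` value is an assumption that varies by Istio release, and the subsets would also need to be defined in a matching `DestinationRule`, which is not shown.

```python
import json
from typing import Any, Dict


def traffic_shift_manifest(host: str, weights: Dict[str, int]) -> Dict[str, Any]:
    """Build a VirtualService that splits traffic for `host` across subsets."""
    if sum(weights.values()) != 100:
        raise ValueError("route weights must add up to 100")
    return {
        # Assumption: adjust the API version to match your Istio release.
        "apiVersion": "networking.istio.io/v1beta1",
        "kind": "VirtualService",
        "metadata": {"name": f"{host}-traffic-shift"},
        "spec": {
            "hosts": [host],
            "http": [{
                "route": [
                    {
                        "destination": {"host": host, "subset": subset},
                        "weight": weight,
                    }
                    for subset, weight in weights.items()
                ]
            }],
        },
    }


if __name__ == "__main__":
    # Send 90% of requests to subset v1 and 10% to subset v2.
    manifest = traffic_shift_manifest("reviews", {"v1": 90, "v2": 10})
    print(json.dumps(manifest, indent=2))
```

The application code never sees these weights; the control plane translates the resource into configuration for the Envoy proxies.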

Consul Connect

Consul Connect is a service mesh developed by HashiCorp. Consul Connect extends HashiCorp’s existing Consul offering, which has service discovery as a primary feature as well as other built-in features such as a key-value store, health checking, and service segmentation for secure TLS communication between services. Consul Connect is available as an open source project, with HashiCorp itself being the predominant contributor. HashiCorp also sells an enterprise offering of Consul Connect with support. At the time of writing, Consul Connect had not been contributed to the CNCF or another foundation. Consul is listed as part of the CNCF Cloud Native Landscape under the Service Mesh category.

Consul Connect uses Envoy as the sidecar proxy and the Consul server as the control plane for programming the sidecars. Consul Connect includes secure mTLS support between microservices and observability using Prometheus and Grafana projects. The secure connectivity support uses the HashiCorp Vault product for managing the security certificates. Recently, HashiCorp has introduced Layer 7 (L7) traffic management and mesh gateways into Consul Connect as beta features.

Linkerd

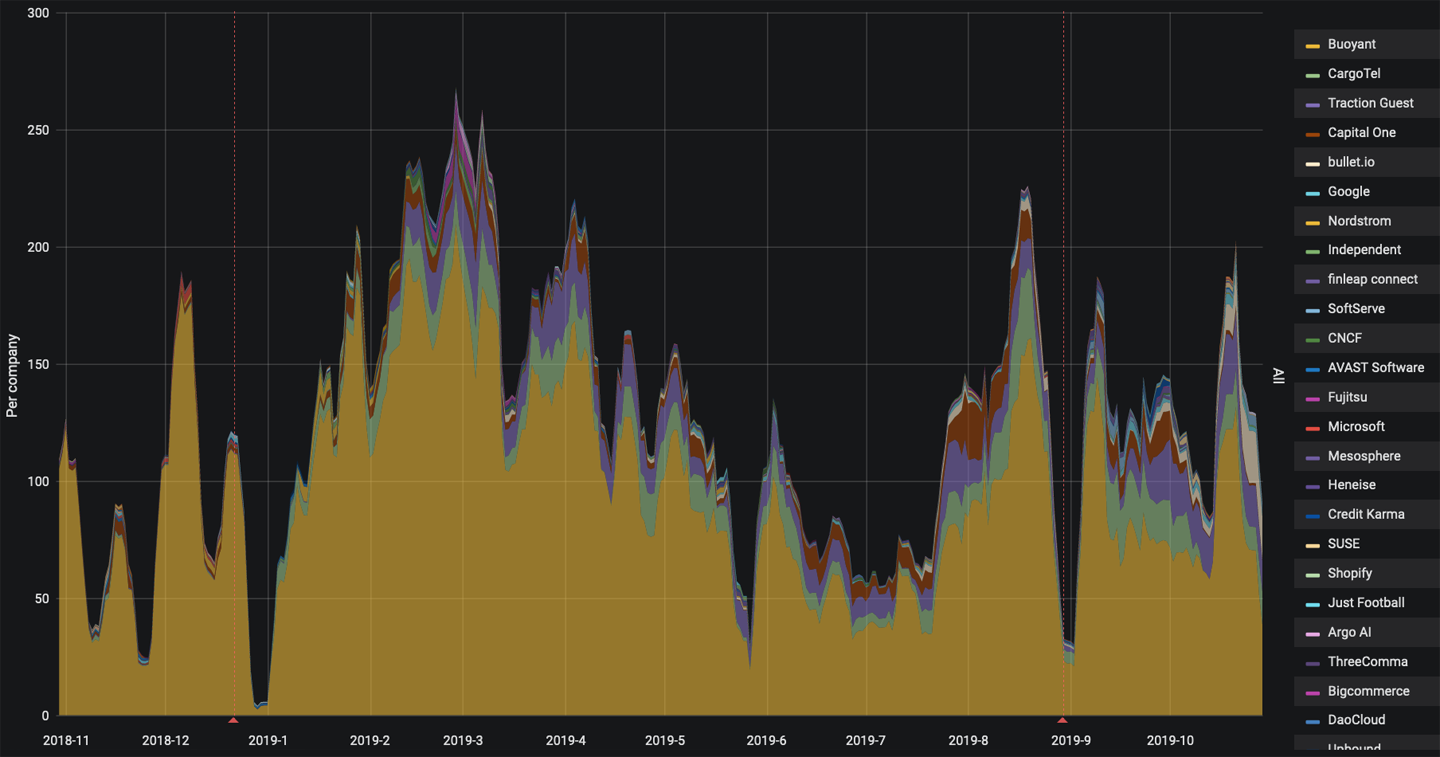

The Linkerd service mesh project is an open source project as well as a CNCF incubating project, focused on providing an ultralightweight mesh implementation with a minimalist design. The predominant contributors to the Linkerd project are from Buoyant, as shown in the 12-month CNCF DevStats company contribution graph in Figure 1-4. Linkerd has the key capabilities of a service mesh, including observability using Prometheus and Grafana, secure mTLS communication, and, more recently, support for service traffic shifting. The client-side proxy used with Linkerd was developed specifically for and within the Linkerd project itself, and is written in Rust. Linkerd provides an injector that injects proxies during a Kubernetes pod deployment based on an annotation in the Kubernetes pod specification. Linkerd also includes a user interface (UI) dashboard for viewing and configuring the mesh settings.

Figure 1-4. Linkerd one-year contribution distribution
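Annotation-driven injection can be sketched as a function that inspects a pod specification and adds a proxy container when the pod opts in. This is a simplified illustration and not Linkerd's actual injector, which does considerably more (for example, setting up traffic redirection). The function name `inject_proxy` and the placeholder image are hypothetical; the `linkerd.io/inject: enabled` annotation and the `linkerd-proxy` container name follow Linkerd's conventions.

```python
import copy
from typing import Any, Dict

INJECT_ANNOTATION = "linkerd.io/inject"


def inject_proxy(pod: Dict[str, Any],
                 proxy_image: str = "example.invalid/proxy:dev") -> Dict[str, Any]:
    """Return a pod spec with a sidecar added if the pod is annotated for it.

    `proxy_image` is a placeholder value, not a real image reference.
    """
    annotations = pod.get("metadata", {}).get("annotations", {})
    if annotations.get(INJECT_ANNOTATION) != "enabled":
        # Pods that have not opted in are returned untouched.
        return pod
    patched = copy.deepcopy(pod)  # never mutate the caller's object
    containers = patched.setdefault("spec", {}).setdefault("containers", [])
    # Idempotent: do not add a second proxy if one is already present.
    if not any(c.get("name") == "linkerd-proxy" for c in containers):
        containers.append({"name": "linkerd-proxy", "image": proxy_image})
    return patched
```

Because the decision is driven by an annotation, teams can opt workloads into the mesh one at a time without touching the application container.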

App Mesh

App Mesh is a cloud service hosted by Amazon Web Services (AWS) to provide a service mesh with application-level networking support for compute services within AWS such as Amazon ECS, AWS Fargate, Amazon EKS, and Amazon EC2. As the project URL suggests, AWS App Mesh is a closed source, managed control plane that is proprietary to AWS. App Mesh utilizes the Envoy proxy for the sidecar proxies within the mesh; this has the benefit that it may be compatible with other AWS partners and open source tools. It appears that App Mesh’s API has routing concepts similar to those of the Istio control plane, which is unsurprising since Istio also serves as a control plane for the Envoy proxy. As of this writing, secure mTLS communication support between services is not implemented but is a road map item. The focus of App Mesh appears to be primarily traffic routing and observability.

Kong

Kong’s service mesh builds upon the Kong edge capabilities for managing APIs and has delivered these capabilities throughout the entire mesh. Though Kong is an open source project, it appears that its contributions are heavily dominated by Kong members. Kong is not a member of a foundation, but is listed as part of the CNCF Cloud Native Landscape under the API Gateway category. Kong does provide Kong Enterprise, which is a paid product with support.

Much like all the other service mesh implementations, Kong has both a control plane to program and manage the mesh as well as a client-side proxy. In Kong’s case the client-side proxy is unique to the Kong project. Kong includes support for end-to-end mTLS encryption between services. Kong promotes its extensibility feature as a key advantage. You can extend the Kong proxy using Lua plug-ins to inject custom behavior at the proxies.

AspenMesh

AspenMesh differs from the other service mesh implementations in that it is a supported distribution of the Istio project. AspenMesh does have many open source projects on GitHub, but its primary direction is not to build a new service mesh implementation but to harden and support an open source service mesh implementation through a paid offering. AspenMesh hosts components of Istio such as Prometheus and Jaeger, making it easier to get started and use over time. It has features above and beyond the Istio base project, such as a UI and dashboard for viewing and managing Istio resources. AspenMesh has introduced additional tools such as Istio Vet, which is used to detect and resolve misconfigurations within an instance of Istio. AspenMesh is an example of the new markets that are emerging to support and build upon an open source service mesh implementation such as Istio.

Service Mesh Interface

Service Mesh Interface (SMI) is a relatively new specification that was announced at KubeCon EU 2019. SMI is spearheaded by Microsoft with a number of backing partners such as Linkerd, HashiCorp, Solo.io, and VMware. SMI is not a service mesh implementation; however, SMI is attempting to be a common interface or abstraction for other service mesh implementations. If you are familiar with Kubernetes, SMI is similar in concept to what Kubernetes has with Container Runtime Interface (CRI), which provides an abstraction for the container runtime in Kubernetes with implementations such as Docker and containerd. While SMI may not be immediately applicable for you, it is an area worth watching to see where the community may head as far as finding a common ground for service mesh implementations.

Conclusion

The service mesh ecosystem is vibrant, and you have learned that there are many open source projects as well as vendor-specific projects that provide implementations for a service mesh. As we continue to explore service mesh more deeply, we will turn our attention to the Istio project. We have selected the Istio project because it uses the Envoy proxy, it is rich in features, it has a diverse open source community, and, most important, we both have experience with the project.
