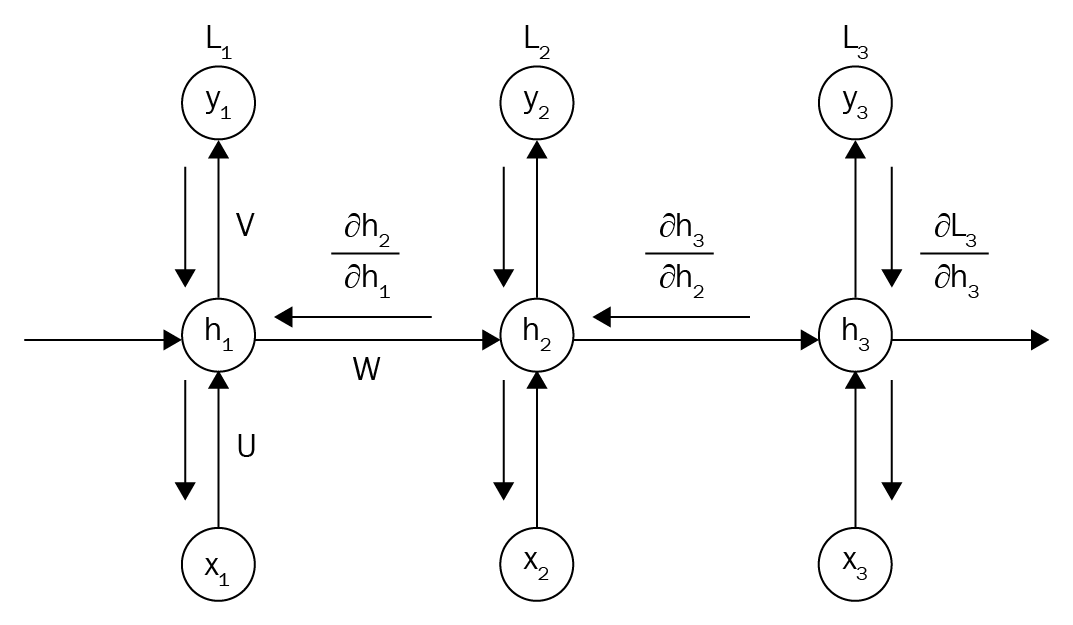

Now, how do we train RNNs? Just as with ordinary neural networks, we can train RNNs using backpropagation. But in an RNN, the hidden state at each time step depends on all the earlier time steps, so the gradient of the loss at each output depends not only on the current time step but also on the previous time steps. We call this backpropagation through time (BPTT). It is basically the same as backpropagation, except that it is applied to an RNN unrolled over its time steps. To see how it works in an RNN, let's consider the unrolled version of an RNN, as shown in the following diagram:

In the preceding diagram, L1, L2, and L3 are the losses at each time step. Now, ...
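As a rough illustration of the idea, here is a minimal NumPy sketch of BPTT for a small vanilla RNN. It is not taken from the text: the weight names `U` (input to hidden), `W` (hidden to hidden), and `V` (hidden to output), the tanh activation, and the softmax cross-entropy loss are all assumptions made for the example. The total loss is taken to be the sum of the per-step losses, and the backward loop walks the time steps in reverse so that each step receives the gradient flowing back from later steps.

```python
import numpy as np

def rnn_bptt(xs, ys, U, W, V, h0):
    """Forward pass and BPTT for a vanilla RNN (illustrative sketch).

    xs: list of input vectors, one per time step.
    ys: list of integer class targets, one per time step.
    U, W, V: assumed names for the input-to-hidden, hidden-to-hidden,
             and hidden-to-output weight matrices.
    Returns the total loss and the gradients (dU, dW, dV).
    """
    T = len(xs)
    hs = {-1: h0}   # hidden states; hs[-1] is the initial state
    ps = {}         # softmax probabilities at each time step
    loss = 0.0

    # Forward pass: unroll the RNN over all time steps.
    for t in range(T):
        hs[t] = np.tanh(U @ xs[t] + W @ hs[t - 1])
        logits = V @ hs[t]
        e = np.exp(logits - logits.max())   # numerically stable softmax
        ps[t] = e / e.sum()
        loss += -np.log(ps[t][ys[t]])       # per-step loss, summed

    # Backward pass: go through the time steps in reverse order.
    dU, dW, dV = np.zeros_like(U), np.zeros_like(W), np.zeros_like(V)
    dh_next = np.zeros_like(h0)             # gradient arriving from step t+1
    for t in reversed(range(T)):
        dlogits = ps[t].copy()
        dlogits[ys[t]] -= 1.0               # gradient of softmax cross-entropy
        dV += np.outer(dlogits, hs[t])
        # The hidden state gets gradient from its own output and from the future.
        dh = V.T @ dlogits + dh_next
        dz = (1.0 - hs[t] ** 2) * dh        # backprop through tanh
        dU += np.outer(dz, xs[t])
        dW += np.outer(dz, hs[t - 1])
        dh_next = W.T @ dz                  # pass gradient back to step t-1
    return loss, (dU, dW, dV)
```

Because the same `U`, `W`, and `V` are shared across all time steps, their gradients are accumulated with `+=` over the whole sequence. The `dh_next` term is what distinguishes this from per-step backpropagation: it carries the contribution of later losses back to earlier hidden states.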