Training computation can be parallelized or distributed in two ways: model parallelism or data parallelism.

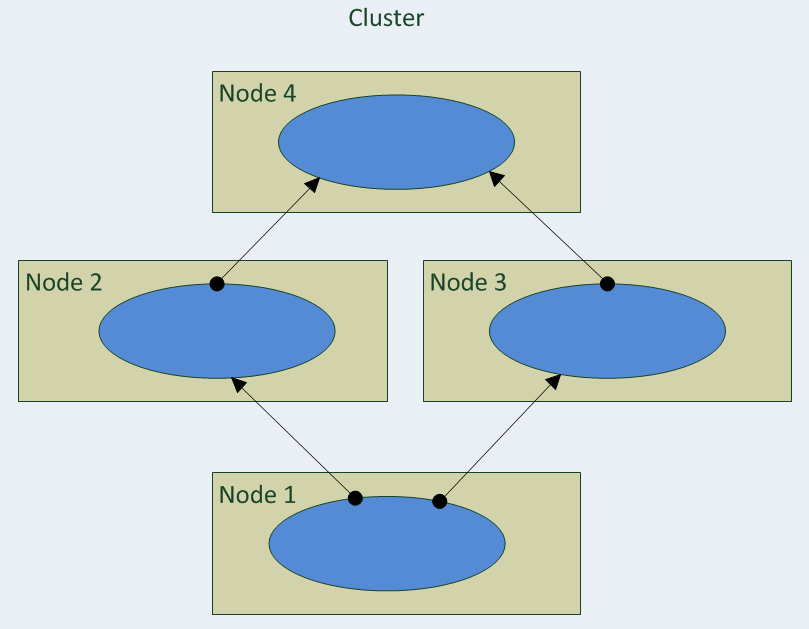

In model parallelism (see the following diagram), different nodes of the cluster are responsible for the computation in different parts of a single MNN. One approach is to assign each layer of the network to a different node.
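The layer-per-node approach can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the `LayerNode` class, the `forward_pipeline` function, the ReLU activation, and the weight values are all hypothetical, and the "nodes" are ordinary objects in one process rather than machines in a cluster.

```python
class LayerNode:
    """Stands in for one cluster node that owns the weights of a single layer."""

    def __init__(self, weights, biases):
        self.weights = weights  # one row of input weights per output unit
        self.biases = biases

    def forward(self, inputs):
        # Dense layer followed by ReLU, using only this node's own parameters.
        outputs = []
        for row, bias in zip(self.weights, self.biases):
            z = sum(w * x for w, x in zip(row, inputs)) + bias
            outputs.append(max(0.0, z))
        return outputs


def forward_pipeline(nodes, inputs):
    """Pass activations from node to node, one layer at a time."""
    activations = inputs
    for node in nodes:
        # In a real cluster this hop would be a network transfer.
        activations = node.forward(activations)
    return activations


# Two layers of one network, each placed on a different (simulated) node.
node_a = LayerNode(weights=[[1.0, -1.0], [0.5, 0.5]], biases=[0.0, 1.0])
node_b = LayerNode(weights=[[2.0, 1.0]], biases=[-1.0])

output = forward_pipeline([node_a, node_b], [3.0, 1.0])
print(output)  # [6.0]
```

No single node ever holds the whole model: each one sees only its own layer's parameters and the activations handed to it by the previous node.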

In data parallelism (see the following diagram), each cluster node holds a complete copy of the network model but receives a different subset of the training data. The results from each node are then combined, as demonstrated in the following ...
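As a rough sketch of the idea, the following plain Python simulates data parallelism for a one-variable linear model. The text does not say how the per-node results are combined, so this example assumes one common choice: averaging the gradients computed on each shard, weighted by shard size. The function names, the toy data, and the learning rate are hypothetical, and the "nodes" are simulated by a loop in a single process.

```python
def gradient(w, b, shard):
    """Gradient of mean squared error for y_hat = w * x + b on one shard."""
    n = len(shard)
    gw = sum(2 * (w * x + b - y) * x for x, y in shard) / n
    gb = sum(2 * (w * x + b - y) for x, y in shard) / n
    return gw, gb


def data_parallel_step(w, b, shards, lr):
    """One training step: every simulated node gets the same (w, b) and its own shard."""
    # Each entry would be computed on a separate node in a real cluster.
    grads = [gradient(w, b, shard) for shard in shards]
    total = sum(len(shard) for shard in shards)
    # Combine (assumed strategy): average the gradients, weighted by shard size.
    gw = sum(g[0] * len(s) for g, s in zip(grads, shards)) / total
    gb = sum(g[1] * len(s) for g, s in zip(grads, shards)) / total
    return w - lr * gw, b - lr * gb


data = [(float(x), 3.0 * x + 1.0) for x in range(8)]  # toy data from y = 3x + 1
shards = [data[0:4], data[4:8]]                        # two simulated nodes

w, b = 0.0, 0.0
for _ in range(5000):
    w, b = data_parallel_step(w, b, shards, lr=0.01)
```

With size-weighted averaging, the combined gradient matches the gradient computed over the full data set on one machine, so the sharded run follows the same training trajectory up to floating-point rounding. Other combination schemes, such as averaging the parameters after several local steps, trade that equivalence for less communication.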