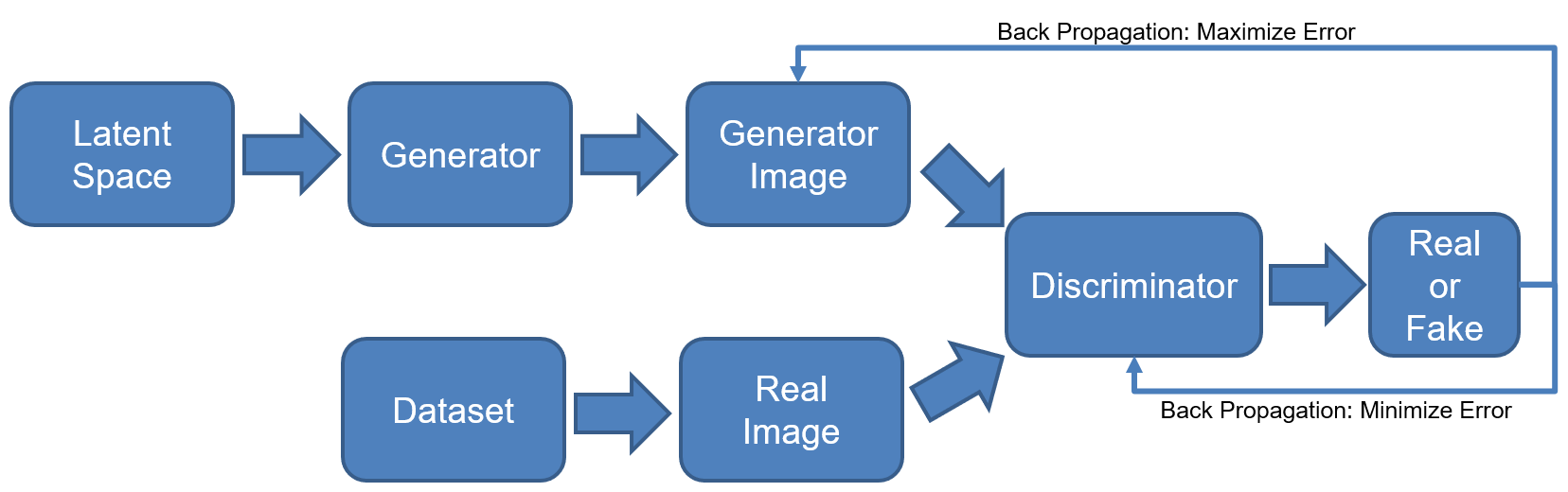

We've talked about the MiniMax problem at work here. By sampling two mini-batches at every epoch, one of real data and one of generated samples, the GAN architecture is able to simultaneously train the discriminator to minimize its classification error and the generator to maximize that same error.
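To make the tug-of-war concrete, here is a minimal sketch of the standard GAN value function, V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))], estimated from two mini-batches. The function name `value_function` and the discriminator scores below are hypothetical values chosen for illustration, not output from a trained model. The discriminator wants this value to be high; the generator wants it to be low.

```python
import math


def value_function(d_real, d_fake):
    """Mini-batch estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator outputs (probability of "real") on real samples.
    d_fake: discriminator outputs on generated samples.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# Hypothetical discriminator scores for illustration only.
d_real = [0.9, 0.8, 0.95]          # mini-batch of real samples
d_fake_weak = [0.1, 0.2, 0.05]     # fakes the discriminator spots easily
d_fake_strong = [0.5, 0.6, 0.45]   # fakes that partly fool the discriminator

v_weak = value_function(d_real, d_fake_weak)
v_strong = value_function(d_real, d_fake_strong)

# A better generator pushes the value down; a better discriminator pushes it up.
print(f"V with a weak generator:   {v_weak:.4f}")
print(f"V with a strong generator: {v_strong:.4f}")
```

When the discriminator can no longer tell the two mini-batches apart and outputs 0.5 for every sample, the value settles at -log 4, which is the equilibrium of the minimax game.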

In each chapter, we'll revisit what it means to train a GAN. Generative models are notoriously difficult to train well, and GANs are no different in this respect. Throughout this book, you will learn tips and tricks for getting your models to converge and produce good results.