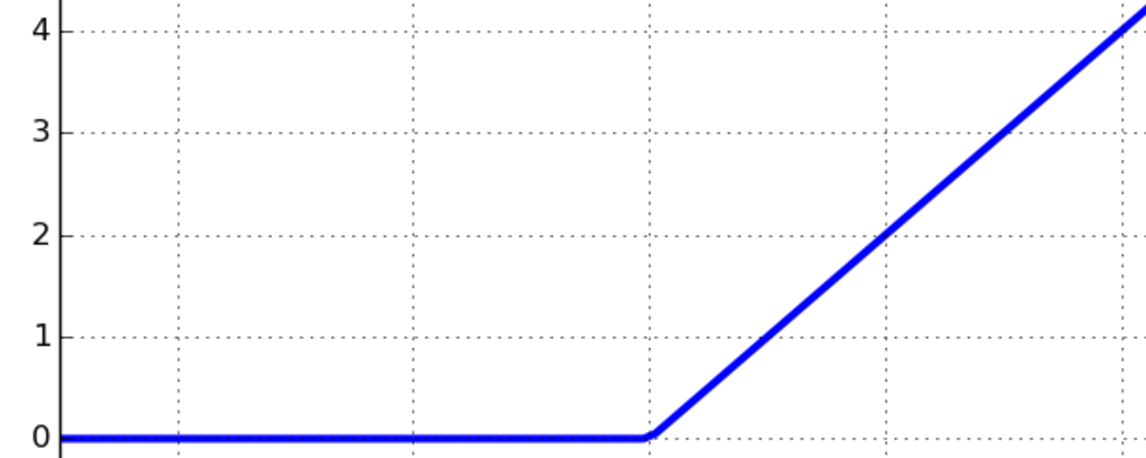

The sigmoid is not the only kind of smooth activation function used for neural networks. Recently, a very simple function called the rectified linear unit (ReLU) has become very popular because it produces very good experimental results. A ReLU is simply defined as $f(x) = \max(0, x)$, and the nonlinear function is represented in the following graph. As the graph shows, the function is zero for negative values and grows linearly for positive values.
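To make the definition concrete, here is a minimal sketch of ReLU in plain Python. The function name `relu` and the sample inputs are illustrative assumptions, not taken from any particular library; deep learning frameworks ship their own vectorized versions.

```python
def relu(x: float) -> float:
    """Rectified linear unit: returns 0 for negative inputs, x otherwise."""
    return max(0.0, x)


# Hypothetical sample inputs spanning negative, zero, and positive values.
samples = [-2.0, -0.5, 0.0, 0.5, 2.0]
outputs = [relu(v) for v in samples]

print(outputs)  # [0.0, 0.0, 0.0, 0.5, 2.0]
```

Negative inputs are clipped to zero, while positive inputs pass through unchanged, which matches the shape described above.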
