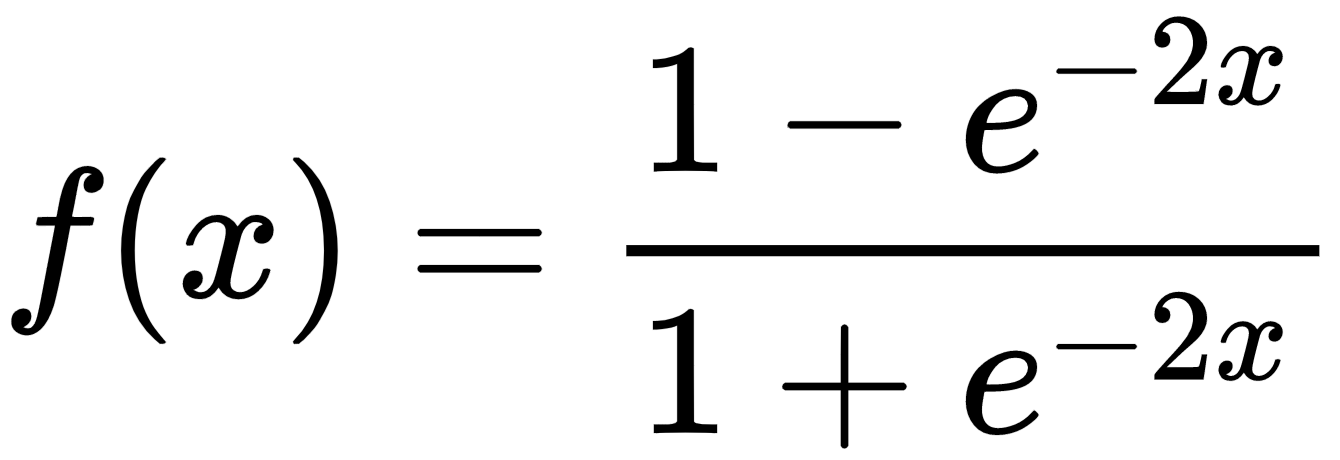

The mathematical formula of tanh is as follows:

$$
\tanh(x) = \frac{e^{x} - e^{-x}}{e^{x} + e^{-x}}
$$

Its output is zero-centered, with a range of -1 to 1. This makes optimization easier, so in practice it is often preferred over the sigmoid activation function. However, it still suffers from the vanishing gradient problem: its derivative, $1 - \tanh^2(x)$, approaches zero as $|x|$ grows, so saturated units pass back almost no gradient.
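
As a rough illustration of these properties, the sketch below evaluates tanh and its derivative, 1 - tanh(x)^2, at a few points. The function names `tanh` and `tanh_grad` and the sample inputs are assumptions chosen for this example, and it uses only Python's standard library.

```python
import math


def tanh(x: float) -> float:
    """tanh from its exponential definition (illustrative helper)."""
    # Algebraically equal to (e^x - e^-x) / (e^x + e^-x), but written
    # with exp(-2|x|) so that large |x| does not overflow.
    e = math.exp(-2.0 * abs(x))
    t = (1.0 - e) / (1.0 + e)
    return t if x >= 0 else -t


def tanh_grad(x: float) -> float:
    """Derivative of tanh: 1 - tanh(x)^2 (illustrative helper)."""
    return 1.0 - tanh(x) ** 2


if __name__ == "__main__":
    # Outputs are symmetric around zero; gradients shrink as |x| grows.
    for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
        print(f"x={x:+.1f}  tanh={tanh(x):+.4f}  grad={tanh_grad(x):.6f}")
```

Running it should show the outputs staying within (-1, 1) and symmetric around zero, while the gradient is largest at x = 0 and shrinks toward zero at the extremes, which is the saturation behind the vanishing gradient problem.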