Chapter 10. Pipelines and MLOps

In previous chapters, we demonstrated how to perform each individual step of a typical ML pipeline, including data ingestion, analysis, and feature engineering, as well as model training, tuning, and deployment.

In this chapter, we tie everything together into repeatable and automated pipelines using a complete machine learning operations (MLOps) solution with SageMaker Pipelines. We also discuss various pipeline-orchestration options, including AWS Step Functions, Kubeflow Pipelines, Apache Airflow, MLflow, and TensorFlow Extended (TFX).

We then dive deep into automatically triggering our SageMaker Pipelines when new code is committed, when new data arrives, or on a fixed schedule. We describe how to rerun a pipeline when we detect statistical changes in our deployed model, such as data drift or model bias. We also discuss the concept of human-in-the-loop workflows, which can help improve our model accuracy.

Machine Learning Operations

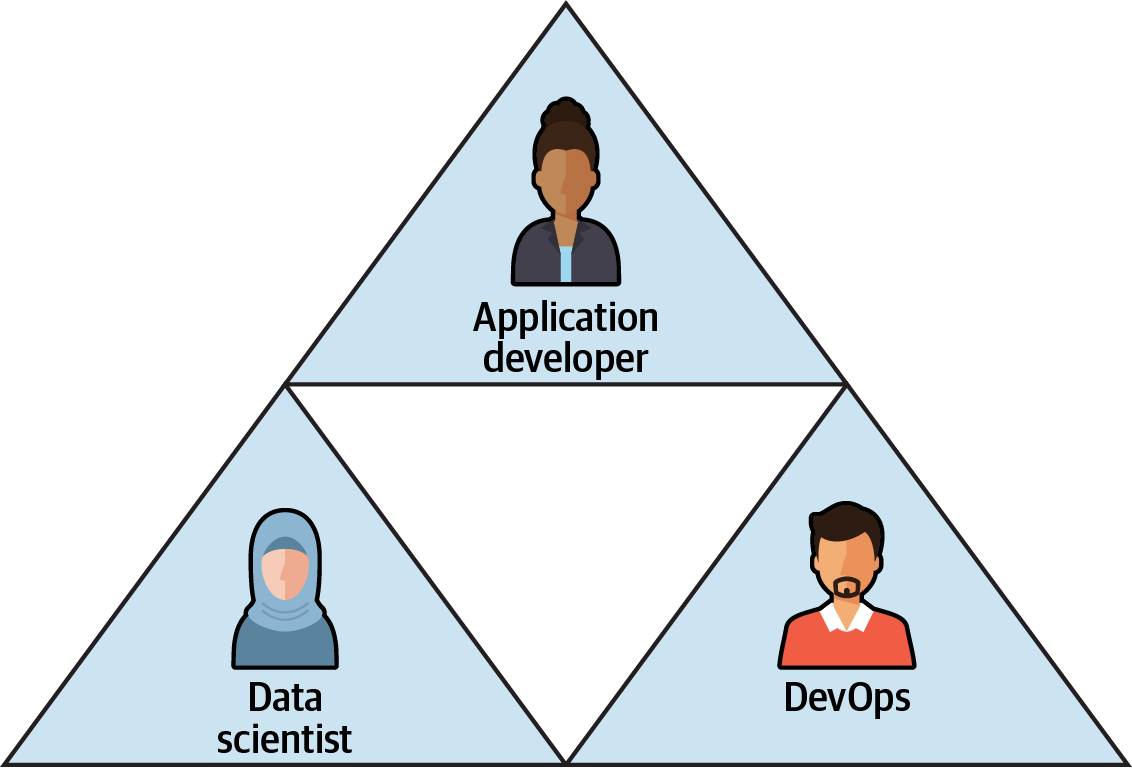

The complete model development life cycle typically requires close collaboration between the application, data science, and DevOps teams to successfully productionize our models, as shown in Figure 10-1.

Figure 10-1. Productionizing machine learning applications requires collaboration between teams.

Typically, the data scientist delivers the trained model, the DevOps engineer manages the infrastructure that hosts the ...
