Chapter 16. Logistic Regression

A lot of people say there’s a fine line between genius and insanity. I don’t think there’s a fine line, I actually think there’s a yawning gulf.

Bill Bailey

In Chapter 1, we briefly looked at the problem of trying to predict which DataSciencester users paid for premium accounts. Here we’ll revisit that problem.

The Problem

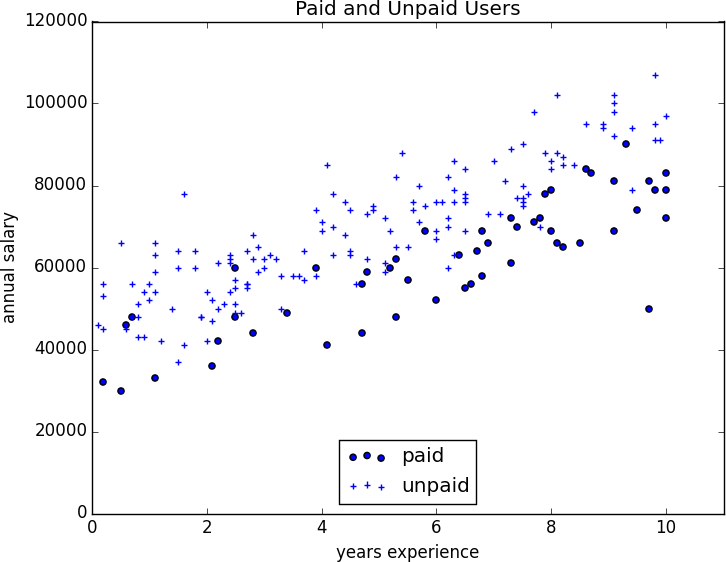

We have an anonymized dataset of about 200 users, containing each user’s salary, her years of experience as a data scientist, and whether she paid for a premium account (Figure 16-1). As is typical with categorical variables, we represent the dependent variable as either 0 (no premium account) or 1 (premium account).

As usual, our data is a list of rows [experience, salary, paid_account]. Let’s turn it into the format we need:

```python
xs = [[1.0] + row[:2] for row in data]  # [1, experience, salary]
ys = [row[2] for row in data]           # paid_account
```
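To make the reshaping concrete, here is the same transformation applied to a few made-up rows. The values are hypothetical and not taken from the actual dataset. Each input row gains a leading constant 1.0, which gives the model an intercept term, and the last column becomes the 0/1 label:

```python
# Hypothetical sample rows in the form [experience, salary, paid_account]
data = [
    [0.7, 48000, 1],
    [1.9, 48000, 0],
    [2.5, 60000, 1],
]

# Prepend a constant 1.0 (for the intercept) to experience and salary
xs = [[1.0] + row[:2] for row in data]  # [1, experience, salary]
# Keep the 0/1 premium-account flag as the target
ys = [row[2] for row in data]           # paid_account

print(xs)  # [[1.0, 0.7, 48000], [1.0, 1.9, 48000], [1.0, 2.5, 60000]]
print(ys)  # [1, 0, 1]
```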

An obvious first attempt is to use linear regression and find the best model:
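Written out with the features in `xs`, and assuming the usual additive error term ε, such a linear model would be:

```latex
\text{paid\_account} = \beta_0 + \beta_1 \, \text{experience} + \beta_2 \, \text{salary} + \varepsilon
```

Here β₀ multiplies the constant 1.0 prepended to each row, so it acts as the intercept.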

Figure 16-1. Paid and unpaid users

And certainly, there’s nothing preventing us from modeling the problem this way. The results are shown in Figure 16-2:

```python
from matplotlib import pyplot as plt
from scratch.working_with_data import rescale
from scratch.multiple_regression import least_squares_fit, predict
from scratch.gradient_descent import gradient_step
```
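If the `scratch` modules are not at hand, the linear fit can be sketched in plain Python. The helpers below (`rescale_columns`, `least_squares_gd`, `predict_linear`) are hypothetical stand-ins written for this illustration. They play the roles that `rescale`, `least_squares_fit`, and `predict` presumably play here, but their signatures and details (full-batch gradient descent, sample standard deviation) are our own assumptions, not the book's implementation. The rows and labels are made up.

```python
from typing import List

Vector = List[float]


def dot(v: Vector, w: Vector) -> float:
    """Sum of elementwise products of two equal-length vectors."""
    return sum(v_i * w_i for v_i, w_i in zip(v, w))


def predict_linear(x: Vector, beta: Vector) -> float:
    """Linear prediction: beta_0 * 1 + beta_1 * experience + beta_2 * salary."""
    return dot(x, beta)


def rescale_columns(rows: List[Vector]) -> List[Vector]:
    """Shift each column to mean 0 and scale it to (sample) standard deviation 1.

    Columns with zero spread, such as the constant 1.0 column, are left unchanged.
    """
    n, dim = len(rows), len(rows[0])
    means = [sum(row[j] for row in rows) / n for j in range(dim)]
    stdevs = [(sum((row[j] - means[j]) ** 2 for row in rows) / (n - 1)) ** 0.5
              for j in range(dim)]
    return [[(row[j] - means[j]) / stdevs[j] if stdevs[j] > 0 else row[j]
             for j in range(dim)]
            for row in rows]


def least_squares_gd(xs: List[Vector], ys: Vector,
                     learning_rate: float = 0.1,
                     num_steps: int = 5000) -> Vector:
    """Minimize mean squared error with plain (full-batch) gradient descent."""
    n, dim = len(xs), len(xs[0])
    beta = [0.0] * dim
    for _ in range(num_steps):
        errors = [predict_linear(x, beta) - y for x, y in zip(xs, ys)]
        # d/d(beta_j) of (1/n) * sum(error**2) is (2/n) * sum(error * x_j)
        grad = [2 * sum(e * x[j] for e, x in zip(errors, xs)) / n
                for j in range(dim)]
        beta = [b - learning_rate * g for b, g in zip(beta, grad)]
    return beta


# Hypothetical rows in the [1, experience, salary] format
xs = [[1.0, 0.7, 48000], [1.0, 1.9, 48000], [1.0, 2.5, 60000],
      [1.0, 4.2, 63000], [1.0, 6.0, 76000], [1.0, 6.5, 69000]]
ys = [1, 0, 1, 0, 0, 1]  # hypothetical paid_account labels

rescaled_xs = rescale_columns(xs)
beta = least_squares_gd(rescaled_xs, ys)
predictions = [predict_linear(x_i, beta) for x_i in rescaled_xs]
print(beta)
print(predictions)
```

Rescaling matters because salary is measured in tens of thousands while experience is in single-digit years; putting both columns on a mean-0, standard-deviation-1 scale lets a single learning rate work for every coefficient. Because the rescaled feature columns average to zero, the fitted intercept also ends up equal to the mean of `ys`.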
