Aligning Performance Objectives with Compliance Regulations

Meeting both compliance and performance objectives requires structure and discipline. Compliance is usually a functional requirement, while performance is most often a non-functional requirement. A structured process achieves better overall performance by defining and tracking functional and non-functional requirements together. Both objectives can be met by following the process outlined in the remainder of this report. This process includes the following steps:

- Define the business goals for performance.

- Identify constraints. These include:

  - Business constraints

  - Regulatory and compliance constraints

- Design and develop for performance goals.

- Execute performance measurement and testing.

- Implement performance monitoring.

- Mitigate risks.

1. Define the Business Goals for Performance

Ultimately, the goal of system development is to meet the business goals of your organization. Business goals include meeting compliance objectives. Without the business, information technology is irrelevant.

Understanding the business goals must be the first step, and understanding the system performance goals and expectations is of primary importance. For example, if the business goals of a financial services provider include executing more financial transactions (e.g., money transfers) in a shorter period of time, the business problem translates to clear performance expectations: the transactions per second (TPS) rate required from the system can be calculated. The process of defining the business performance goals must be disciplined and thorough, and it must include the business partners.
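As a worked illustration of turning a business goal into a TPS target, the sketch below divides a transaction volume by the time window available and applies a peak-to-average factor. The function name, the volume of 1,800,000 transfers, the 8-hour window, and the peak factor of 3 are all hypothetical values chosen for the example, not figures from this report.

```python
def required_tps(transactions: int, window_seconds: float, peak_factor: float = 1.0) -> float:
    """Transactions per second needed to process `transactions` within
    `window_seconds`, scaled by a peak-to-average load factor."""
    if transactions < 0:
        raise ValueError("transactions must be non-negative")
    if window_seconds <= 0:
        raise ValueError("window_seconds must be positive")
    if peak_factor < 1.0:
        raise ValueError("peak_factor must be at least 1.0")
    return transactions / window_seconds * peak_factor


# Hypothetical goal: 1,800,000 money transfers in an 8-hour business day.
average_tps = required_tps(1_800_000, 8 * 3600)
# Hypothetical assumption: the busiest period runs at 3x the average rate.
peak_tps = required_tps(1_800_000, 8 * 3600, peak_factor=3.0)
```

Agreeing on the volume, window, and peak factor with the business partners is the part that matters; the arithmetic itself is trivial once those inputs are fixed.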

The business motivation for visibility into performance goals must also be captured. This will translate into the reporting requirements and metrics used by the IT department, internal business users, and external customers. The metrics and reporting requirements can be used to define the reports and dashboards used when monitoring the system and business transactions.

2. Identify Constraints

Once performance goals have been established, project constraints must be well understood. Constraints typically include resources (hardware, software, network), geography (location of users and infrastructure), time (operating windows), and regulatory compliance requirements regarding access control, confidentiality, and logging.

Understanding, defining, and documenting constraints requires communication with business partners. As constraints are constantly changing, staying current with emerging regulations is also critical. Depending on the size of the organization, an internal compliance team may be responsible for identifying and auditing systems for compliance. In other cases, outside agencies can be used.

2a. Identifying Business Constraints

As part of the business requirements phase, functional and non-functional requirements are defined and documented. Functional requirements define what the system must do. Non-functional requirements define how the system must do it. Business constraints may be subtle. For example, marketing campaigns can affect the way a system is implemented. Consider the scenario of a marketing campaign banner image that is presented to a user upon logging in to a secure home page. The image for the banner may be selected from multiple campaigns depending on rules defined by the business. The retrieval of the image requires selection of the campaign by the rules implemented by the business. This flexibility results in targeted marketing campaigns based on user characteristics and behavior. The constraint is the need to process the business rules during the page rendering process. This constraint requires additional processing and must be considered in the design of the system.
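The banner scenario above can be sketched as a small rule evaluation that runs on every page render. This is a minimal illustration only: the `Campaign` type, the rule functions, the user attributes, and the image paths are hypothetical, and a real rules engine would likely be more elaborate.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Campaign:
    name: str
    banner_url: str
    applies_to: Callable[[Dict], bool]  # business rule evaluated per request


def select_banner(user: Dict, campaigns: List[Campaign], default_url: str) -> str:
    """Return the banner of the first campaign whose rule matches the user."""
    for campaign in campaigns:  # list order encodes campaign priority
        if campaign.applies_to(user):
            return campaign.banner_url
    return default_url


# Hypothetical campaigns keyed on hypothetical user attributes.
CAMPAIGNS = [
    Campaign("premium-upsell", "/img/premium.png",
             lambda u: u.get("balance", 0) >= 50_000),
    Campaign("new-user", "/img/welcome.png",
             lambda u: u.get("days_since_signup", 999) <= 30),
]
```

Each rule adds work to the rendering path, which is why the number and cost of the rules belong in the design discussion.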

2b. Identifying Regulatory and Compliance Constraints

Access control, confidentiality, and logging are the primary compliance requirements that must be defined, documented, and implemented in such a way as to minimize performance impact.

Access control is often implemented using role-based access models. Depending on the implementation model, achieving robust performance may be difficult. Access control must be enforced at both the authentication layer and the services layer. In many cases, back-end transactions are required to verify access to the service being called. This level of access control must be implemented with performance in mind, minimizing the number of back-end transactions needed to ensure compliance. This can be a challenging model to implement.
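One possible way to reduce back-end access checks is to cache a user's roles for a short time. The sketch below is an assumption-laden illustration, not a prescribed design: `CachedRoleChecker`, the `fetch_roles` callback, and the 60-second default lifetime are hypothetical. The cache lifetime bounds how long a revoked role could still be honored, so the acceptable value would need to come from the compliance policy.

```python
import time
from typing import Callable, Dict, FrozenSet, Iterable, Tuple


class CachedRoleChecker:
    """Role-based access check that caches back-end role lookups."""

    def __init__(self, fetch_roles: Callable[[str], Iterable[str]],
                 ttl_seconds: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        self._fetch_roles = fetch_roles  # hypothetical back-end call
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache: Dict[str, Tuple[float, FrozenSet[str]]] = {}

    def roles_for(self, user_id: str) -> FrozenSet[str]:
        now = self._clock()
        entry = self._cache.get(user_id)
        if entry is not None and now - entry[0] < self._ttl:
            return entry[1]  # fresh entry: no back-end transaction
        roles = frozenset(self._fetch_roles(user_id))
        self._cache[user_id] = (now, roles)
        return roles

    def is_allowed(self, user_id: str, required_role: str) -> bool:
        return required_role in self.roles_for(user_id)
```

With this shape, repeated service calls by the same user within the lifetime window cost one back-end lookup instead of one per call.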

Confidentiality is typically addressed via encryption, both for passwords and for confidential data. Confidential data cannot be stored or transmitted in clear text. Regulations dictate the security policies that must be followed to ensure compliance.
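For passwords specifically, a common practice is to store a salted, deliberately slow hash rather than reversibly encrypted text. The sketch below uses PBKDF2 from Python's standard library as one example of that practice; it is a technique chosen for this illustration, not one named by this report. The function names and the default iteration count are assumptions, and the actual algorithm and parameters should follow the applicable security policy.

```python
import hashlib
import hmac
import secrets
from typing import Tuple

DEFAULT_ITERATIONS = 600_000  # assumption: set this from your own security policy


def hash_password(password: str,
                  iterations: int = DEFAULT_ITERATIONS) -> Tuple[bytes, int, bytes]:
    """Return (salt, iterations, digest) for storage; the password is not kept."""
    salt = secrets.token_bytes(16)  # unique random salt per password
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, iterations, digest


def verify_password(password: str, salt: bytes, iterations: int, expected: bytes) -> bool:
    """Recompute the digest and compare it in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return hmac.compare_digest(candidate, expected)
```

The iteration count is a direct trade between resistance to guessing and login latency, which makes it a performance parameter as well as a security one.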

Logging may be required to ensure compliance. Synchronous logging implementations can slow down performance. A common technique to reduce the performance impact is to leverage asynchronous logging and auditing techniques.
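A minimal sketch of asynchronous logging, assuming Python's standard `logging` module: the request thread only places the record on an in-memory queue, and a background listener thread writes it to the real (possibly slow) handler. The helper name `start_async_audit_logger` and the logger name `audit` are hypothetical.

```python
import logging
import logging.handlers
import queue
from typing import Tuple


def start_async_audit_logger(
    target_handler: logging.Handler, name: str = "audit"
) -> Tuple[logging.Logger, logging.handlers.QueueListener]:
    """Return a logger whose records are written by a background thread."""
    log_queue: queue.Queue = queue.Queue(-1)  # unbounded in-memory queue
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    logger.propagate = False  # keep audit records out of the root logger
    # Callers only enqueue the record, so they do not wait on file or network I/O.
    logger.addHandler(logging.handlers.QueueHandler(log_queue))
    # The listener thread drains the queue and hands records to the real handler.
    listener = logging.handlers.QueueListener(log_queue, target_handler)
    listener.start()
    return logger, listener
```

Call `listener.stop()` during shutdown so queued records are flushed. Records still in the queue are lost if the process dies abruptly, so whether an in-memory queue is acceptable for audit data depends on the compliance requirement.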

3. Design and Develop for Performance Goals

When designing a system, performance must be a priority. Understanding the demands that may be placed on particular functions, batch jobs, or components should be at the top of a developer’s to-do list when designing and building systems. Early in the design process, developers should test code and components for performance, especially for complex distributed architectures. For example, if 50 services are going to be built using a framework including web services, middleware, databases, and legacy systems, a proof-of-concept (POC) performance test should be part of the design process. After building out two or three key transactions based on the proposed architecture, run the test. This will help determine if the design will scale to support the expected transaction load before the entire system is built.

Many strategies can be designed into the system to ensure optimal performance. Some examples include asynchronous logging and caching of user attributes and shared system data. When a requirement affects only certain customers, be judicious about where its cost is paid: it may be worth considering multiple code sets depending on the requirements of key customers. For example, if 90% of users won’t see a benefit from preloading data, the code to preload and cache data should be built in such a way as to only support the 10% of users that will see the performance benefit.
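The selective preloading idea can be sketched as follows. This is an illustration under stated assumptions: `SelectivePreloader`, the `loader` callback, and the `benefits_from_preload` predicate are hypothetical names, and how to decide which users benefit is left to the business rules.

```python
from typing import Callable, Dict


class SelectivePreloader:
    """Preload data at login only for users expected to benefit;
    everyone else is loaded lazily on first access."""

    def __init__(self, loader: Callable[[str], dict],
                 benefits_from_preload: Callable[[str], bool]):
        self._loader = loader                   # hypothetical expensive lookup
        self._benefits = benefits_from_preload  # hypothetical business rule
        self._cache: Dict[str, dict] = {}

    def on_login(self, user_id: str) -> None:
        # Pay the preload cost only for the minority of users who gain from it.
        if self._benefits(user_id):
            self._cache[user_id] = self._loader(user_id)

    def get(self, user_id: str) -> dict:
        if user_id not in self._cache:  # lazy path for all other users
            self._cache[user_id] = self._loader(user_id)
        return self._cache[user_id]
```

Both paths return the same data; only the moment at which the loading cost is paid differs.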

4. Execute Performance Measurement and Testing

Performance measurement requires discipline to ensure accuracy. In order to identify and establish specific tests, the performance engineering (PE) team must model real-world performance expectations using a workload characterization model. This provides a starting point for the testing process. The team can modify and tune the model as successive test runs provide additional information. After defining the workload characterization model, the team needs to define a set of user profiles that determine the application pathways that typical classes of users will follow. These profiles are combined with estimates from business and technical groups throughout the organization to define the targeted performance behavior criteria. These profiles may also be used in conjunction with predefined performance SLAs as defined by the business.
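A workload characterization model can start as little more than a table of user profiles and their share of the load. The sketch below is a simplified illustration: the `UserProfile` fields, the profile names, and all numbers in the usage note are hypothetical, and a real model would usually also capture think times and transaction mixes per pathway.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class UserProfile:
    name: str
    share: float                 # fraction of concurrent users in this class
    tx_per_user_per_hour: float  # estimated transactions per user per hour


def target_tps_by_profile(profiles: List[UserProfile],
                          concurrent_users: int) -> Dict[str, float]:
    """Translate profile shares and rates into a target TPS per profile."""
    total_share = sum(p.share for p in profiles)
    if abs(total_share - 1.0) > 1e-9:
        raise ValueError("profile shares must sum to 1.0")
    return {
        p.name: concurrent_users * p.share * p.tx_per_user_per_hour / 3600
        for p in profiles
    }
```

For example, with 1,200 concurrent users split into 75% browsing users at 12 transactions per hour and 25% transfer users at 36 transactions per hour, each profile contributes a target of 3 TPS. Estimates like these are the starting point that successive test runs then refine.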

Once the profiles are developed and the SLAs determined, the performance test team needs to develop the typical test scenarios that will be modeled and executed. In addition, the performance test environment must be identified and established. This may require acquiring hardware and software, or can be leveraged from an existing or shared environment. At a minimum, the test environment should closely represent the production environment, though it may be a scaled-down version.

The next critical part of performance testing is identifying the quantity and quality of test data required for the performance test runs. This can be determined by answering several questions: Are the test scenarios destructive to the test bed of data? Can the database be populated in such a way that a snapshot can be captured before any test run and restored between test runs? Can the test scenarios create the data that they require as part of a setup script, or does the business complexity of the data require that it be created one time up front and then cleaned up as part of the test scenarios? One major risk to the test data effort, if using an approach leveraging actual test scripts, is that one of the test scripts may fail during the course of the test runs and the data will have to be recreated anyway, using external tools or utilities.
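The snapshot-and-restore option can be illustrated with SQLite, whose Python driver exposes a backup call. This is a small-scale sketch only, assuming an SQLite test database; the helper names are hypothetical, and a production-sized database would use its own vendor tooling for snapshots.

```python
import sqlite3


def take_snapshot(db: sqlite3.Connection) -> sqlite3.Connection:
    """Copy the committed state of `db` into a new in-memory database."""
    snapshot = sqlite3.connect(":memory:")
    db.backup(snapshot)
    return snapshot


def restore_snapshot(snapshot: sqlite3.Connection, db: sqlite3.Connection) -> None:
    """Overwrite `db` with the snapshot taken before the test run."""
    db.rollback()  # discard any uncommitted changes left behind by a failed test
    snapshot.backup(db)
```

Taking the snapshot once after the data is seeded, and restoring it between runs, keeps every run starting from the same test bed even when the scenarios are destructive.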

As soon as these test artifacts have been identified, modeled, and developed, the performance test can begin with an initial test run, modeling a small subset of the potential user population. This is used to shake out any issues with the test scripts or test data used by the test scripts. It also validates the targeted test execution environment including the performance test tool(s), test environment, system under test (SUT) configuration, and initial test profile configuration parameters. In effect, this initial test is a “smoke test” of the performance test runtime environment.

Once the PE smoke test executes successfully, it is time to reset the environment and data and run the first of a series of test scenarios. This first scenario will provide significant information and test results that the performance test team can use to define the performance test suites.

The performance test is considered complete when the test team has captured results for all of the test scenarios making up the test suite. The results must correspond to a repeatable set of system configuration parameters as well as a test bed of data.

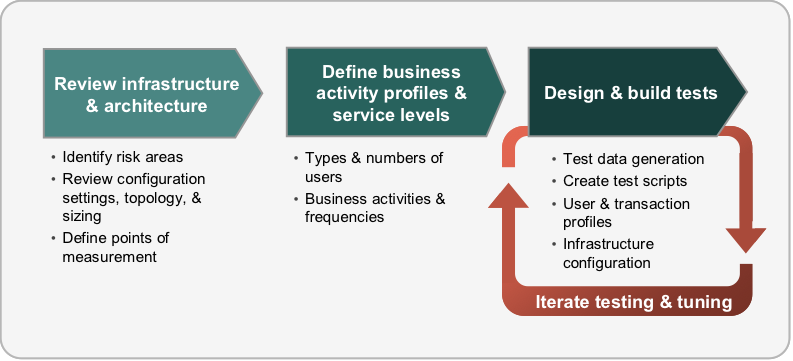

The following diagram outlines the overall approach used for assessing the performance and scalability of a given system. These activities represent a best-practices model for conducting performance and scalability assessments.

Each test iteration attempts to identify a system impediment or prove a particular hypothesis. The testing philosophy is to vary one element, then observe and analyze the results. For example, if results of a test are unsatisfactory, the team may choose to tune a particular configuration parameter and then rerun the test.
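The vary-one-element philosophy can be expressed as a simple test-plan generator: each planned run differs from the baseline configuration in exactly one parameter. The function name and the configuration parameters in the usage note are hypothetical.

```python
from typing import Any, Dict, List


def one_factor_variations(baseline: Dict[str, Any],
                          candidates: Dict[str, List[Any]]) -> List[Dict[str, Any]]:
    """Build test configurations that each change a single parameter
    relative to the baseline, so any difference in results can be
    attributed to that one change."""
    runs = []
    for param, values in candidates.items():
        if param not in baseline:
            raise KeyError(f"unknown parameter: {param}")
        for value in values:
            if value == baseline[param]:
                continue  # identical to the baseline run
            run = dict(baseline)  # copy, then vary exactly one element
            run[param] = value
            runs.append(run)
    return runs
```

For a baseline of `{"db_pool_size": 20, "jvm_heap_gb": 4}` and candidates `{"db_pool_size": [20, 40], "jvm_heap_gb": [8]}`, this yields two runs: one with the pool size raised to 40 and one with the heap raised to 8 GB, each leaving the other parameter at its baseline value.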

5. Implement Performance Monitoring

The increased complexity of today’s distributed and web-based architectures has made it a challenge to achieve reliability, maintainability, and availability at the levels that were typical of traditional systems implementations. The goal of systems management and production performance monitoring is to enable measurable business benefits by providing visibility into key measures of system quality.

To be proactive, companies need to implement controls and measures that either enable awareness of potential problems or target the problems themselves. Application performance monitoring (APM) not only ensures that a system can support service levels such as response time, scalability, and performance, but, more importantly, proactively enables the business to know when a problem will arise. When difficulties occur, PE, coupled with APM, can isolate bottlenecks and dramatically reduce time to resolution. Performance monitoring allows proactive troubleshooting of problems when they occur and facilitates developing repairs or “workarounds” to minimize business disruption.

Organizations can implement production performance monitoring to solve performance problems, and leverage it to inhibit unforeseen performance issues. It establishes controls and measures to sound alarms when unexpected issues appear, and isolates them. Unfortunately, the nature of distributed systems development has made it challenging to build in the monitors and controls needed to isolate bottlenecks, and to report on metrics at each step in distributed transaction processing. In fact, this has been the bane of traditional systems management. However, tools and techniques have matured to provide end-to-end transactional visibility, measurement, and monitoring.
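As a minimal illustration of a control that sounds an alarm, the sketch below compares a latency percentile against a service-level threshold. It uses the nearest-rank percentile definition; the function names and thresholds are hypothetical, and commercial APM tools provide far richer versions of this check.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile of the samples, for 0 < pct <= 100."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based nearest rank
    return ordered[rank - 1]


def sla_breached(latencies_ms: Sequence[float], pct: float, threshold_ms: float) -> bool:
    """True when the chosen latency percentile exceeds the SLA threshold."""
    return percentile(latencies_ms, pct) > threshold_ms
```

Alerting on a high percentile rather than the average helps surface slow outliers that an average would hide.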

Aspects of these tools include dashboards, performance monitoring databases, and root cause analysis relationships allowing tracing and correlation of transactions across the distributed system. Dashboard views provide extensive business and system process information, allowing executives to monitor, measure, and prepare based on forecasted and actual metrics. By enabling both coarse and granular views of key business services, they allow organizations to more effectively manage customer expectations and business process service levels, and plan to meet and exceed business goals. In short, they deliver the right information to the right people, at the right time. It is important to define what needs to be measured based on the needs of the business and IT.

Understanding application performance and scalability characteristics enables organizations to measure and monitor business impacts and service levels, further understand the end user experience, and map dependencies between application service levels and the underlying infrastructure. The integration of business, end user, and system perspectives enables management of the business at a service and application level.

6. Mitigate Risk

As risks are identified through analysis of test results and application performance monitors, the impact of these risks must be categorized. Sample categories include:

Business impact

- Regulatory impacts for outages

- High financial impact for outages

- Application supports multiple lines of business

- Application classified as business critical

- Application supports contractual SLAs

User population

- Application has geographically diverse users (domestic, international)

- High rate of user population or concurrency growth expected

Transaction volumes

- “Flash” events may dramatically increase volumes
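One way to make the categorization repeatable is to record the factors above as flags and score them. The sketch below is purely illustrative: the field names mirror some of the sample categories, but the weights and tier cut-offs are hypothetical and would need to be agreed on with the business.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskProfile:
    regulatory_impact: bool = False
    high_financial_impact: bool = False
    contractual_slas: bool = False
    geographically_diverse_users: bool = False
    flash_events: bool = False


# Hypothetical weights: higher means a larger impact if the risk materializes.
WEIGHTS = {
    "regulatory_impact": 3,
    "high_financial_impact": 3,
    "contractual_slas": 2,
    "geographically_diverse_users": 1,
    "flash_events": 1,
}


def risk_score(profile: RiskProfile) -> int:
    """Sum the weights of every factor that applies to the application."""
    return sum(weight for factor, weight in WEIGHTS.items() if getattr(profile, factor))


def risk_tier(score: int) -> str:
    """Map a score to a tier using hypothetical cut-offs."""
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"
```

A score like this is only a triage aid for ordering mitigation work; it does not replace analysis of the individual risks.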

As risks are identified, specific solutions and recommendations must be developed to minimize and resolve these issues. The release and deployment model will influence how and when a particular solution or change is implemented. For example, if caching is going to be added, will this be implemented in a single release or will components be deployed in successive releases? Less code-invasive changes, such as hardware configuration or changes isolated to a single tier (e.g., additional database indexes), can often be handled in minor or emergency releases.

Development Methodology Considerations

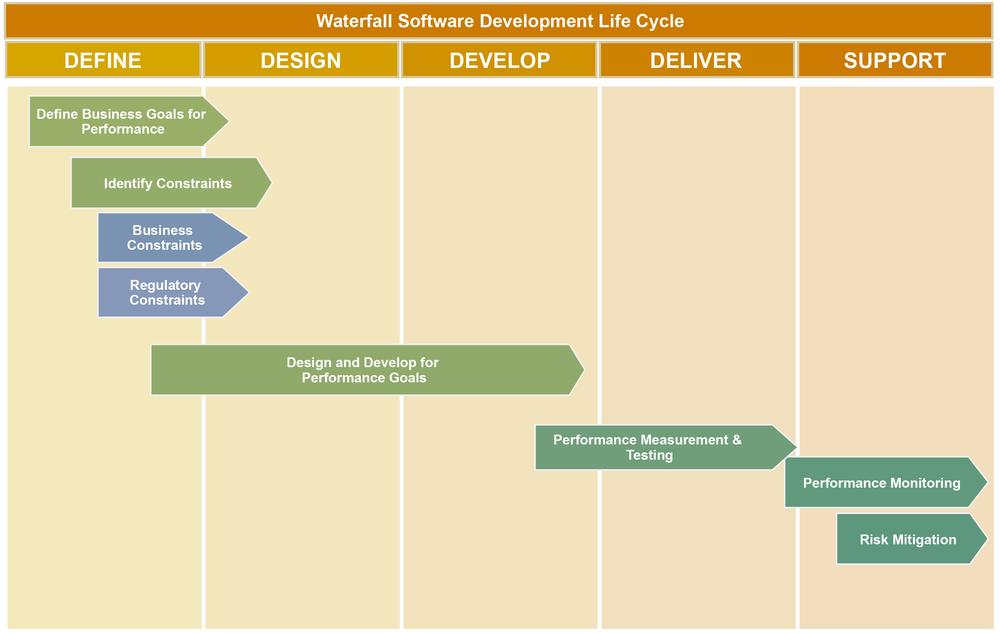

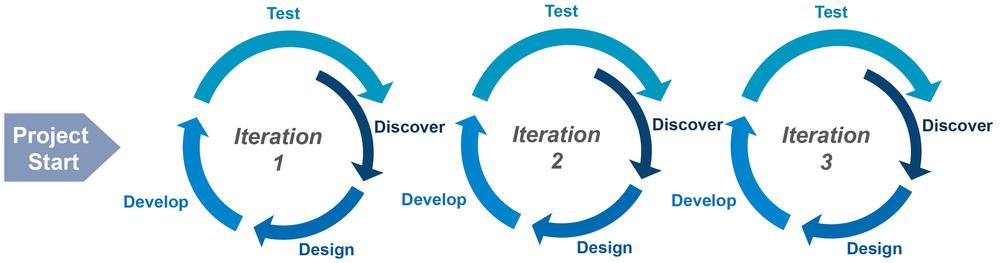

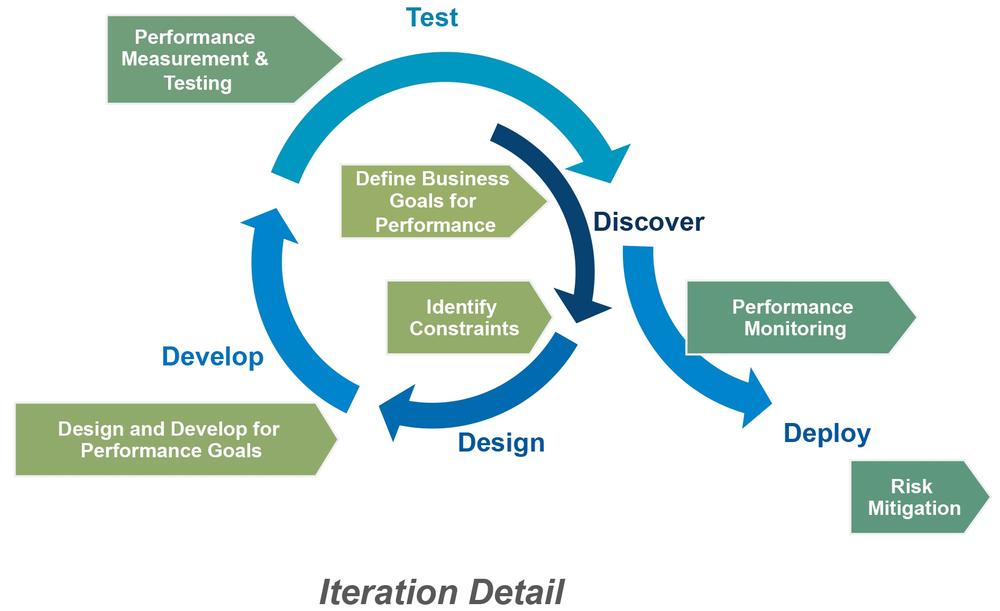

Software development methodologies vary by implementation and framework. Depending on the standards defined for an organization, the methodology followed may be dictated by the enterprise, or, if multiple methodologies are supported, it may depend on the requirements and demands of the project. The process for achieving performance goals while addressing compliance requirements is applicable to and consistent across multiple methodologies, as portrayed in the accompanying diagrams.

Waterfall

The waterfall model is still followed by very large organizations for many critical system implementations. This progressive development process provides a disciplined structure, as well as checkpoints, to support a predictable set of requirements and releases. This disciplined and rigid methodology requires both functional and non-functional requirements to be captured during the requirements phase and applied to the full development life cycle. Compliance requirements are typically captured as functional requirements, while the non-functional requirements include performance and scalability.

Iterative Development: Agile and Scrum

Functional compliance requirements and performance can also be effectively addressed when following agile and Scrum methodologies.

Many companies, including high-tech organizations and startups, have adopted agile as their primary development methodology. Flexible and iterative development allows functional and non-functional requirements to be addressed in multiple iterations. Ideally, compliance requirements are captured as functional requirements in the early iterations.

Iterative and agile methods allow building of software in the form of completed, finished, and ready-for-use iterations or blocks, beginning with the blocks perceived to be of the highest value to the customer.

Scrum is an agile development model based on multiple small teams working independently. Within each iteration, certain steps must be followed to ensure the performance goals are defined, tested, and monitored.

Following the disciplined process discussed above will enable you to meet both performance and compliance objectives. This process is applicable to multiple development methodologies. By understanding the business needs, the system workload, and the reporting requirements, you’ll be able to measure and monitor real-world performance. This will help you meet your performance and compliance goals and gain visibility into key measures of system quality, all while proactively mitigating risks.
