Chapter 1. Security in the Modern Organization

In this chapter, you will learn the following:

- Why security is becoming ever more critical in the modern age
- What is meant by cloud native security
- Where security fits in the modern organization
- What the purpose of security is
- What DevSecOps really is
- How to measure the impact of security
- The underlying principles of security

This foundation is critical for you to compellingly articulate why investment in security is and will continue to be mandatory, and how the advent of the cloud has not invalidated the fundamental principles of security but has transformed how they are applied.

1.1 Why Security Is Critical

Seeing as you’re reading this, you probably already believe in the criticality of security; however, it’s important to understand how security continues to be ever more important day to day and year to year.

Life in the 21st century is digital first—our lives revolve around the internet and technology. Everyone’s personal information is given to and stored by trusted third parties. We all believe that it will be handled safely and securely. What recent history has shown us, however, is that security breaches are the norm; they are to be expected. This information is the gold filling the 21st-century bank vaults of technology titans. Where you have concentrations of wealth, you must scale your security to match.

Human instinct makes us believe that to go slowly is to go safely, which often manifests as lengthy security assessments, full multiweek penetration tests on every release, and security being the slowest moving part on the path to production.

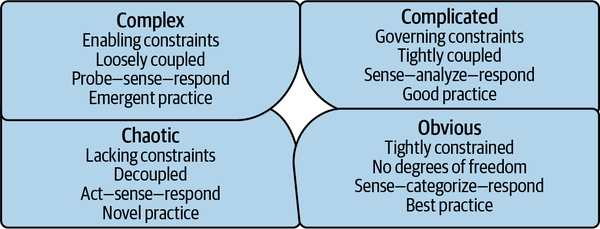

This is actively harmful in two ways. First, the systems that businesses operate are inherently complex. Complexity theory and other models of complexity, such as the Cynefin framework, shown in Figure 1-1, teach us that it is impossible to think our way through a complex system. No amount of reading code and looking at architecture diagrams can allow you to fully understand all the possibilities and potential vulnerabilities within a system. Being able to react and apply fixes quickly when issues are discovered, such as the Log4j vulnerability in December 2021, results in a superior security posture when compared to lengthy, time-intensive review cycles.

Figure 1-1. Cynefin framework

But even if it were possible with sufficient time to root out all security vulnerabilities, for a business, moving slowly in the 21st century is a recipe for disaster. The level of expectation set by the Googles, Microsofts, and Amazons of the world has laid down a gauntlet. Move fast or die. Security teams are caught between two unstoppable forces: the business imperative for agility through speed and the exponential growth in breach impacts.

When a breach happens, the business suffers in a number of ways, including:

- Reputational damage
- Legal liabilities
- Fines and other financial penalties
- Operational instability and loss of revenue
- Loss of opportunity

The vast majority of businesses are either already in the cloud or are exploring how they can migrate their estates. With the near ubiquity of cloud infrastructure, both governments and regulators are investing significantly in their understanding of how companies are using the cloud. Examples such as the General Data Protection Regulation (GDPR) and the California Consumer Privacy Act are just the leading edge of a wave of increased security expectations, controls, and scrutiny. Over time, the damage suffered by a business from a breach will exponentially and catastrophically increase. Our principles of security are not invalidated by this new reality, but how they are applied, embedded, and upheld needs to fundamentally transform.

1.2 What Is Meant by Cloud Native Security?

A common trope of the technology industry is that definitions become loose over time. In this book, cloud native is defined as leveraging technology purpose-built to unlock the value of, and accelerate your adoption of, the cloud. Here is a list of common properties of cloud native solutions:

- It is automation friendly and should be fully configurable through infrastructure as code (IaC).
- It does not place unnecessary, artificial constraints on your architecture. For example, per-machine pricing is not considered a cloud native pricing model.
- It elastically scales. As your systems and applications grow in size, the solution scales in lockstep.
- It natively supports the higher-level compute offerings on the cloud platforms. It should support serverless and containerized workloads, as well as the plethora of managed service offerings.

In this book, where possible, the recipes use the managed services provided by the cloud platforms themselves. They have all the previous properties, are purpose-built to support customers in their cloud journey, and are easily integrated into your estate.

IT security has existed for as long as something of value has been stored on a computer: as soon as there was value, it needed defending. As an industry, IT has proven able to undergo seismic shifts with frightening regularity; embracing cloud native is simply the most recent. As more value is poured into technology and systems, the potential gain from attacking them increases, so our security must increase in kind. The cloud can bring so much good, but with it come new challenges that will need cloud native people, processes, and tools to overcome.

The Beginnings of the Cloud

Back in 2006, Amazon first announced Amazon Web Services (AWS), offering pay-as-you-go technology to businesses. Over the intervening 15 years, a tectonic shift fundamentally altered how companies approach technology. Historically, businesses ordered and managed hardware themselves, investing huge sums of capital up front and employing large teams to perform “undifferentiated heavy lifting” to operate this infrastructure. What Amazon started, followed in 2008 by Google and 2010 by Microsoft, allowed businesses to provision new infrastructure on demand in seconds, as opposed to waiting months for the hardware to arrive and be racked, configured, and released for use.

Infrastructure became a commodity, like water or electricity. This enabled businesses to rapidly experiment, become more agile, and see technology as a business differentiator rather than a cost center. Over time, the cornucopia of services offered by the Cloud Service Providers (CSPs) has grown to encompass almost everything a business could need, with more being released every day. Nearly every company on the planet, including the most ancient of enterprises, is cloud first. The cloud is here to stay and will fundamentally define how businesses consume technology in the future.

Old Practices in the New Reality

When something as transformational as cloud computing occurs, best practices require time to emerge. In the intervening gap, old practices are applied to the new reality. The security tools and processes which served us well in the pre-cloud age were not built to contend with the new normal. The pace of change posed by the cloud presented new security challenges the industry was not equipped to face. Through effort, time, and experimentation, it is now understood how to achieve our security objectives by working with, not against, the cloud. You can now have cloud native security.

Cloud native security is built around the following fundamental advantages of cloud computing:

- Pay for consumption

In a cloud native world, expect to only pay for services as you use them, not for idle time.

- Economies of scale

As the CSP operates at hyperscale, it can achieve things that cannot be done in isolation, including lower pricing, operational excellence, and superior security postures.

- No capacity planning

Cloud resources are made to be elastic; they can scale up and down based on demand rather than having to go through the effort-intensive and often inaccurate process of capacity planning.

- Unlock speed and agility

By allowing companies and teams to rapidly experiment, change their mind, and move quickly, the cloud allows for capturing business value that would be impossible otherwise.

- Stop spending money on “undifferentiated heavy lifting”

Rather than focus on activities that cannot differentiate you from your competition, allow the CSP to focus on those tasks while you focus on core competencies.

- Span the globe

The CSP allows businesses to scale geographically on demand by having locations all over the world that operate identically.

The processes and tools that constitute cloud native security enable, rather than constrain, the consumption and promised benefits of the cloud.

1.3 Where Security Fits in the Modern Organization

Historically, security has operated as a gatekeeper, often as part of change advisory boards (CABs), acting as judge, jury, and executioner for system changes. This siloed approach can only take you so far. The waste incurred by long feedback loops, long lead times, and slow pace of change is incompatible with a digital-first reality.

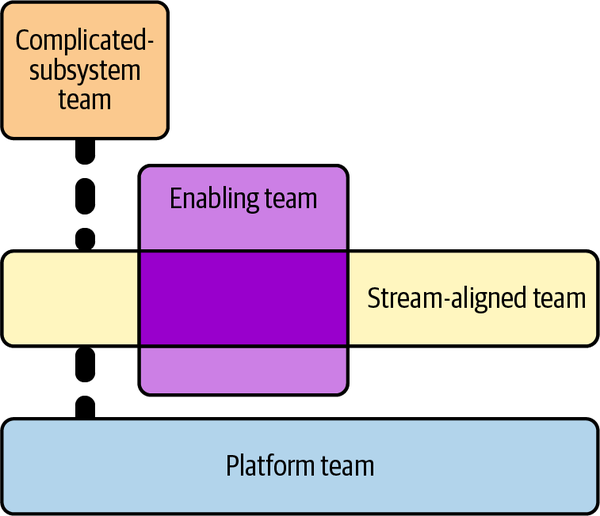

By looking to block rather than enable change, the security and delivery teams are forced into a state of eternal conflict, creating friction that breeds animosity and prevents the business from achieving its goals. Team Topologies, by Matthew Skelton and Manuel Pais (IT Revolution Press), examines the four team archetypes that are fundamental to exceptional technology performance: enabling teams, platform teams, complicated-subsystem teams, and stream-aligned teams, as shown in Figure 1-2.

Figure 1-2. Team topologies

Stream-aligned teams are how the business directly delivers value. They build the systems and services that allow the business to function, interact with customers, and compete in the market.

Complicated-subsystem teams look after systems that require their own deep domain expertise, such as a risk calculation system in an insurance company.

Platform teams produce compelling internal products that accelerate stream-aligned teams, such as an opinionated cloud platform, which is the focus of Chapter 2.

Enablement teams are domain specialists who look to impart their expertise to other teams within the business.

Simply put, all other teams are there to enable the stream-aligned teams. Security needs to operate as an enablement team; i.e., its members are domain experts who actively collaborate with other teams. It is unrealistic to expect every engineer in a company to become a security expert, but it is entirely feasible to expect, and embed, a base level of security competency in all engineers. Even in a highly automated world, developing systems is knowledge work: it is people who determine the success of your security initiatives.

It is through this enablement lens that many of the recipes in this cookbook make the most sense. Through working with enterprises around the world, I have seen that the paradigm shift from gatekeeper to enabler can be difficult to undertake; the animosity and lack of trust between security and delivery built over many years are powerful inhibitors of change. However, to take full advantage of cloud native security, this shift must happen, or misaligned incentives will scupper any and all progress.

1.4 The Purpose of Modern Security

Security operates in the domain of risk. Perfect security is not a realistic or achievable goal; at any one time, you cannot provide services and be known to be immune to all threats. This reality is even reflected in how fines are handed out following breaches: penalties are often substantially reduced if reasonable attempts were made to prevent the breach. So, if you cannot achieve complete security, then what is your north star? What is your goal?

At the macro level, your goal is to make commercially reasonable efforts to minimize the chance of security incidents. What is deemed commercially reasonable varies wildly among companies. As common sense would predict, startups often have a significantly higher risk tolerance for security than regulated enterprises. What is important to keep in mind is that an enterprise’s much lower risk tolerance does not mean it must move more slowly than a startup because of overbearing security concerns. Throughout this book you will see how, with the correct principles and recipes in place, you need not handicap your stream-aligned teams.

At the micro level, your goal is to ensure that a single change does not present an intolerable amount of risk. Again, what is tolerable is highly context specific, but thankfully, techniques to minimize risk are often universal. Later in this chapter, as I discuss DevSecOps, I will drill into what properties of changes allow you to minimize the risk and how embracing a DevSecOps culture is required for aligning teams around security objectives.

1.5 DevSecOps

Before I can dive into what DevSecOps is, you first need to understand its precursor, DevOps.

What Is DevOps?

At its heart, DevOps is a cultural transformation of software delivery. It is heavily influenced by lean theory and is most simply described as bringing together what historically were two disparate silos, development and operations, hence DevOps, or the commonly used soundbite, “You build it, you run it.”

To put it into numbers, the 2019 Accelerate State of DevOps Report found that, compared with low performers, elite teams operating in a DevOps model:

- deploy code 208 times more frequently
- have 106 times faster lead time from commit to deploy
- recover from incidents 2,604 times faster
- have one-seventh the change failure rate

As you can see from the numbers, DevOps was revolutionary, not evolutionary, and DevSecOps has the same potential.

Understanding these numbers is crucial for modern security, as it allows for alignment around a common set of constraints: security objectives need to be achieved without preventing teams from becoming elite performers. Being elite for lead time means that changes are in production within an hour, so mandatory security tests that take a day to complete are incompatible with the future direction of the company. A classic example of this in the enterprise is a mandatory penetration test before every release; although the goal of the penetration test is valuable, the activity itself and its place in the process need to change. The increasingly popular approach of bug bounties is a potential replacement for penetration tests. The challenges that security teams now face are the same ones that operations teams faced at the birth of DevOps in the late 2000s.

It’s crucial to set the context, as it leads to the right conversations, ideas, problems, and solutions, and so to the right outcomes. As you can see, the engineering and cultural principles that companies need merely to survive today force wide-scale changes in security; the reality of those changes is what the industry calls DevSecOps.

Two of the seminal texts in DevOps, The Phoenix Project (by Gene Kim et al., IT Revolution Press) and The Unicorn Project (by Gene Kim, IT Revolution Press), set out “the Three Ways” and “the Five Ideals,” respectively, as underlying principles. I’ll examine them briefly here, as they also underpin DevSecOps.

The Three Ways

- Flow and Systems Thinking

The first way tells us that you need to optimize for the whole system, not simply for one component. Local optimization can often come at the expense of the system as a whole, which leads us to the realization that the most secure system is not necessarily in the best interests of the business. Delaying a critical feature because of a vulnerability is a trade-off that needs to be made on a case-by-case basis.

- Amplify Feedback Loops

The second way tells us that feedback loops are the mechanisms that allow for correction; the shorter they are, the faster you can correct. This points to the potential value of embedding security specialists in teams for short periods, and of adopting tooling that gives rapid feedback on changes, such as static application security testing (SAST) tooling that runs in the IDE.

- Culture of Continual Experimentation and Learning

The third way tells us that you need to embrace risk, as only by taking and learning from risks can you achieve mastery. This leads us to the realization that the technology domain is moving forward ever more rapidly, and you need to move with it, not fight against it. Dogma leads to ruin.

The Five Ideals

- Locality and Simplicity

The first ideal around locality means that you should enable autonomous teams; changes should be able to happen without approval from many people and teams. Teams should own their entire value stream, which is a significant shift from the siloed approach of the past, where handoffs reduced accountability and added waste.

- Focus, Flow, and Joy

The second ideal means that you should be looking to enable teams to focus on their objectives and find flow, as working in a state of flow brings joy. Rather than getting in each other’s way and working in the gatekeeper functions of the past, you need to find how you can help people and teams achieve flow and make the passage of work easy and enjoyable.

- Improvement of Daily Work

Historically, the rush for features has drowned systems and teams in seas of technical debt. Instead, you need to be mindful and enable teams to pay down their technical debt. There may be systems that are in need of decommissioning, systems that have started to struggle to scale, or decisions that have proved less than optimal over time.

- Psychological Safety

People should feel secure and safe to express themselves and should not be blamed for mistakes, which instead are used as opportunities for learning. Through rigorous and meticulous study, Google found that psychological safety is one of the key properties of high-performing teams.

- Customer Focus

Systems fall into one of two categories: core and context. Core generates a durable competitive advantage for the business; context is everything else. For most businesses, security is context: it exists to enable core but is not core itself, as it is not generally a source of competitive advantage. This is why security operates as an enablement team, there to support core in delivering the greatest value.

What Is DevSecOps?

DevSecOps is the natural extension of DevOps into the security domain. You are now charged with the goal of enabling business agility securely. With that shift come people, process, and tool changes, but it is important to understand that DevSecOps is, at its core, a shift in culture. Simply replacing tools in isolation will not allow you to thrive in the new reality, no matter what the vendor might say.

As I said previously, security operates in the domain of risk. When approving and testing a change, you are trying to build confidence that it does not introduce a large amount of risk. This is analogous to functional testing of software: you cannot prove the nonexistence of bugs, but you can pass a confidence threshold that allows you to release into production. Proving that a change contains no security issues is impossible; being confident that a major issue is not introduced is possible. This brings us to the following two properties of a change that impact risk:

- Size of the change

Size is the most critical property of a change to consider when looking at risk. The more you change, the more risk is involved. Like most things in security, size is unfortunately hard to measure definitively, but as a baseline heuristic, lines of code changed are effective more often than not. You want many small changes as opposed to fewer large ones. This allows you to more easily understand which change caused an adverse impact and to more effectively peer-review each change, and it means that one bad change does not block all other changes.

- Lead time for changes

Based on our shared understanding that changes with security vulnerabilities are inevitable, the speed with which you can resolve introduced issues becomes crucial. The total risk posed by a change is capped by the length of time it is exposed and live. When an issue is discovered in production, the lower the lead time, the less the exposure. In reality, the teams that pose the greatest challenge when first embarking on DevSecOps, the pioneers moving the fastest, have the highest potential for security. The days of “move fast and break things” are behind us; today’s mantra is “Better Value, Sooner, Safer, Happier.”
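To make the lead-time argument concrete, here is a minimal sketch of how long a vulnerable change stays live. It assumes work on a fix starts the moment the issue is discovered and that lead time is measured from commit to production; the function name `exposure_window` and the example dates are hypothetical.

```python
from datetime import datetime, timedelta


def exposure_window(deployed_at: datetime, discovered_at: datetime,
                    lead_time: timedelta) -> timedelta:
    """Return how long a vulnerable change stays live in production.

    Assumes work on the fix starts the moment the issue is discovered and that
    lead_time is the team's usual time from commit to production.
    """
    if discovered_at < deployed_at:
        raise ValueError("an issue cannot be discovered before the change is deployed")
    fix_live_at = discovered_at + lead_time
    return fix_live_at - deployed_at


deployed = datetime(2022, 1, 3, 9, 0)
discovered = deployed + timedelta(hours=48)

# A team with a one-hour lead time versus one that ships on a monthly release train.
fast = exposure_window(deployed, discovered, timedelta(hours=1))
slow = exposure_window(deployed, discovered, timedelta(days=30))
```

With the same discovery time, the one-hour team is exposed for 49 hours, while the monthly team is exposed for 32 days; the only variable that differs is lead time.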

Resolving issues with roll forward versus roll back

Upon discovery of an issue, in an ideal world you want to roll forward—introduce a new change to resolve the issue—rather than roll back and revert all changes. An operationally mature team has more options—the same processes and tools that allow them to deploy many times a day give them a scalpel to target and resolve issues. Teams early in their DevOps journey often only have sledgehammers, meaning that the business impact of resolving an issue is much worse.

Continuous integration and continuous delivery

Continuous integration (CI) and continuous delivery (CD) are two foundational patterns that enable DevOps; they are how system change happens. Teams possess a CI/CD pipeline which takes their code changes and applies them to environments. Security teams possessing their own pipelines can rapidly enact change, while hooking into all pipelines in the organization allows them to enact change at scale.

Before I discuss what exactly continuous integration and continuous delivery are, let’s segue briefly into how code is stored.

Version Control

Version control is the process of maintaining many versions of code in parallel. There is a base branch, often called trunk or main, which has a full history of every change that has ever happened. When a team member wishes to make a change, they make a new branch, make their changes independently, and merge them back into the base branch.

Companies will have at least one version control system they use, most commonly Git hosted on GitHub, GitLab, or Bitbucket. Becoming familiar with how version control operates is a required skill for the modern security engineer.

What is continuous integration?

Continuous integration is the practice of regularly integrating changes into the base branch, at least daily, and testing them against it. Its primary purpose is to check that the proposed changes are compatible with the common code. Often, a variety of checks and tests, including a human peer review, are run against the code before it is allowed to be merged back into the base branch.

As this process allows for barring changes, you can embed security tooling that analyzes code and dependencies to stop known issues from being merged and ever reaching a live environment.
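As an illustration of such a gate, the following is a minimal sketch of a dependency check that fails a CI job when a pinned package matches a known advisory. The advisory data in `KNOWN_VULNERABLE`, the package names, and the function names are all hypothetical; in practice you would rely on a maintained scanner backed by a real vulnerability database, and this sketch only shows the gating logic.

```python
import sys

# Hypothetical advisory data: package name -> versions with a known vulnerability.
# A real pipeline would pull this from a maintained vulnerability database.
KNOWN_VULNERABLE = {
    "examplelib": {"1.0.0", "1.0.1"},
    "otherlib": {"2.3.0"},
}


def parse_requirements(text: str) -> dict:
    """Parse pinned 'name==version' lines, skipping blank lines and comments."""
    pins = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        name, sep, version = line.partition("==")
        if not sep:
            # Unpinned dependencies cannot be checked reliably, so treat them as errors.
            raise ValueError(f"unpinned dependency: {line!r}")
        pins[name.strip().lower()] = version.strip()
    return pins


def find_vulnerable(pins: dict, advisories: dict) -> list:
    """Return 'name==version' strings for every pin that matches an advisory."""
    return sorted(
        f"{name}=={version}"
        for name, version in pins.items()
        if version in advisories.get(name, set())
    )


def gate(requirements_text: str, advisories: dict = KNOWN_VULNERABLE) -> int:
    """Return a process exit code; a non-zero code fails the CI job and blocks the merge."""
    findings = find_vulnerable(parse_requirements(requirements_text), advisories)
    for finding in findings:
        print(f"BLOCKED: {finding} has a known vulnerability", file=sys.stderr)
    return 1 if findings else 0


# In a CI job this might be invoked as:
#     sys.exit(gate(open("requirements.txt").read()))
```

Returning an exit code rather than raising keeps the check compatible with any CI system, since they all treat a non-zero exit as a failed step.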

What is continuous delivery?

Continuous delivery is the practice of having the common code maintained in a deployable state; i.e., the team is able to perform a production release at any time. The intent is to make releasing a business decision rather than a technical one. As the code exists to fulfill a business need, it makes sense for this decision to be purely business driven.

This approach runs in opposition to significant human oversight on changes. A mandatory human-operated penetration test before release means that continuous delivery cannot be achieved, and the business loses agility as its ability to react is constrained.

What is continuous deployment?

Continuous delivery and deployment are often confused, as they are very closely related. Continuous deployment is the practice of performing an automated production release when new code is merged into the shared common code. By building the apparatus around this, teams can be elite and release tens to hundreds of times a day.

The level of automation required shifts almost 100% of quality control onto tooling, with the sole human interaction being the peer review. Teams reaching for this goal need a mature, fully automated DevSecOps tool chain.

CI/CD pipelines

As mentioned previously, teams possess CI/CD pipelines, which are how change is applied to environments. Ideally, these pipelines are the only way to make production changes, and they provide the vector for embedding practices across all teams in an organization. As long as you can automate something, it can become part of a pipeline and be run against every change as it makes its way to production, and even after. Pipelines become the bedrock for the technical aspects of the DevSecOps cultural shift.

Want to start running dependency checks of imported packages? Embed it into the pipeline. Want to run static code analysis before allowing the code to be merged? Embed it into the pipeline. Want to check infrastructure configuration before it’s live in the cloud? Embed it into the pipeline.
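The pattern of embedding each new practice into the pipeline can be sketched as a list of independent checks that every change passes through. This is a minimal sketch under stated assumptions: the `Change` shape, the deny list, and all three rules are hypothetical stand-ins for real dependency scanners, SAST tools, and IaC policy engines.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Change:
    """Hypothetical, simplified view of a change moving through a pipeline."""
    dependencies: Dict[str, str] = field(default_factory=dict)   # name -> version
    source_files: Dict[str, str] = field(default_factory=dict)   # path -> contents
    iac_resources: List[dict] = field(default_factory=list)      # declared cloud resources


@dataclass
class CheckResult:
    name: str
    passed: bool
    detail: str = ""


Check = Callable[[Change], CheckResult]

# Hypothetical deny list standing in for a real dependency scanner.
BANNED_DEPENDENCIES = {("examplelib", "1.0.0")}


def dependency_check(change: Change) -> CheckResult:
    bad = sorted(f"{n}=={v}" for n, v in change.dependencies.items()
                 if (n, v) in BANNED_DEPENDENCIES)
    return CheckResult("dependency", not bad, ", ".join(bad))


def static_analysis_check(change: Change) -> CheckResult:
    # Toy rule using a crude substring match; real SAST tools parse the code.
    bad = sorted(path for path, src in change.source_files.items() if "eval(" in src)
    return CheckResult("static-analysis", not bad, ", ".join(bad))


def iac_check(change: Change) -> CheckResult:
    # Toy rule: every declared storage bucket must enable encryption.
    bad = sorted(r["name"] for r in change.iac_resources
                 if r.get("type") == "bucket" and not r.get("encrypted"))
    return CheckResult("iac", not bad, ", ".join(bad))


# "Embed it into the pipeline" amounts to appending another check to this list.
PIPELINE_CHECKS: List[Check] = [dependency_check, static_analysis_check, iac_check]


def run_pipeline(change: Change,
                 checks: List[Check] = PIPELINE_CHECKS) -> Tuple[bool, List[CheckResult]]:
    """Run every check so the team gets all feedback at once, then gate on the results."""
    results = [check(change) for check in checks]
    return all(r.passed for r in results), results
```

Running every check before deciding, rather than stopping at the first failure, shortens the feedback loop: a team sees every problem with a change in one pass.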

Additionally, these pipelines operate as information radiators. As all change goes through them, they become the obvious choice for where to surface information from. As I am now broaching the topic of measuring the impact of security, many of the metrics are observed from the pipelines themselves.

1.6 How to Measure the Impact of Security

I often find myself quoting Peter Drucker: “What gets measured, gets managed.” With that in mind, how can you tackle measuring the impact of security? This has often proved to be a vexing question for many chief information security officers (CISOs), as security is only ever top of mind when something has gone wrong. While I do not believe I have the one true answer, let’s discuss some ideas and heuristics that are often used.

Time to Notify for Known Vulnerabilities

As modern systems are built on the shoulders of giants—i.e., software is built depending on frameworks and libraries from innumerable developers and companies—we need an ability to notify teams when one of their dependencies is known to have a potential vulnerability.

For example, I’m building a serverless function in Python, and I have used the latest version of a library. Two days after that code is deployed into production, a vulnerability is identified and raised against the library. How long a wait is acceptable before my team is notified of the vulnerability?
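One way to track this metric is sketched below: it measures the delay between an advisory being published and the owning team being notified, and the share of notifications that met a target. The 24-hour `NOTIFY_SLA` is an assumed placeholder rather than a recommendation, and the function names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Iterable, Tuple

# Assumed target; what is acceptable is a business decision, not a given.
NOTIFY_SLA = timedelta(hours=24)


def time_to_notify(advisory_published: datetime, team_notified: datetime) -> timedelta:
    """Delay between a vulnerability being published and the owning team hearing about it."""
    if team_notified < advisory_published:
        raise ValueError("notification cannot precede publication")
    return team_notified - advisory_published


def share_within_sla(events: Iterable[Tuple[datetime, datetime]],
                     sla: timedelta = NOTIFY_SLA) -> float:
    """Fraction of (published, notified) pairs where the team was told within the SLA."""
    delays = [time_to_notify(published, notified) for published, notified in events]
    if not delays:
        raise ValueError("no notification events to measure")
    return sum(d <= sla for d in delays) / len(delays)
```

Reporting the share within target, rather than only an average, keeps a handful of very slow notifications from hiding behind many fast ones.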

Time to Fix a Known Vulnerability

Coupled to the notification time, what is an acceptable wait for the vulnerability to be fixed? Fixing in this context can take a few different guises: the simplest is deploying a new version of the function with a patched, updated library; slightly more complicated is decommissioning the function until a patch is available; and potentially most complicated is authoring the library fix yourself.

The selection of the solution is context specific, but the metric will help drive maturity and will produce concrete examples around what risk is truly tolerable from the business.
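A minimal sketch of summarizing time to fix follows, assuming each record holds a hypothetical vulnerability ID, the time the team was notified, and the time the fix was live in production. It reports the median alongside the maximum, because the worst case is what determines the longest exposure.

```python
from datetime import datetime
from statistics import median
from typing import Dict, List, Tuple

# Each record: (vulnerability id, team notified at, fix live in production at).
Record = Tuple[str, datetime, datetime]


def hours_to_fix(records: List[Record]) -> List[float]:
    return [(fixed - notified).total_seconds() / 3600 for _, notified, fixed in records]


def fix_time_summary(records: List[Record]) -> Dict[str, float]:
    """Summarize time to fix; the maximum matters because one slow fix is one long exposure."""
    hours = hours_to_fix(records)
    if not hours:
        raise ValueError("no fixed vulnerabilities to summarize")
    return {"count": len(hours), "median_hours": median(hours), "max_hours": max(hours)}
```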

Service Impacts Incurred Through Security Vulnerabilities

Often the quickest way to close a potential security threat is to turn something off, whether literally flicking a switch or making something inaccessible. As an organization operationally matures, the service impact of fixing a security issue should be negligible. As talked about previously, you want to roll forward fixes, thereby not impacting service availability, but there will be cases along the journey where it is better to place the service in a degraded state while the fix is applied. Improvements in this metric are correlated with increased operational maturity.

Attempted Breaches Prevented

Modern tooling is sophisticated enough to identify breaches as they are being attempted or to retroactively identify past breach attempts. To make the impact of investment in security more tangible, understanding how many potential incidents have been prevented is a powerful metric to obtain. It is important, however, that there is nuance in the measurement, and being able to drill down to the component level is crucial. For example, decommissioning infrastructure reduces your attack surface and so decreases the aggregate count, as would simply fewer attempts being made, yet either could be misconstrued as a loss in tooling efficacy.
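The component-level drill-down can be sketched as follows. The event schema (a `component` and an `action` key) is hypothetical, and the notes are deliberately simple; the point is that a falling aggregate is broken down so a decommissioned component is not mistaken for degraded detection.

```python
from collections import Counter
from typing import Dict, Iterable, Set, Tuple


def blocked_by_component(events: Iterable[dict]) -> Counter:
    """Count blocked attempts per component; the event schema here is hypothetical."""
    return Counter(e["component"] for e in events if e["action"] == "blocked")


def explain_change(before: Counter, after: Counter,
                   decommissioned: Set[str]) -> Dict[str, Tuple[int, int, str]]:
    """Break an aggregate change down by component so a drop is not misread."""
    report = {}
    for component in sorted(set(before) | set(after)):
        if component in decommissioned:
            note = "decommissioned: smaller attack surface"
        elif after[component] < before[component]:
            note = "fewer blocks: check whether attempts fell or detection degraded"
        else:
            note = "stable or rising"
        report[component] = (before[component], after[component], note)
    return report
```

In this scheme, an aggregate that halves can turn out to be entirely explained by one decommissioned component, while the remaining components show steady or rising blocks.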

Compliance Statistics

Having a robust set of controls that define compliant cloud resource configurations is crucial in a scalable security approach, as you will see in greater detail later in the book. For now, consider an AWS organization with hundreds of S3 buckets spread across tens of accounts: you should be able to track and report on how many of them have sufficient levels of server-side encryption enabled. By tracking this metric across many resource types and configuration options, you can understand your baseline security posture at scale and show the impact of security initiatives.
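Once bucket configurations have been collected (for example, through a service such as AWS Config; the collection step is not shown), the reporting itself is simple arithmetic. This is a minimal sketch in which the record shape is hypothetical and the control treats only SSE-KMS as "sufficient," which is an assumption you would replace with your own policy. `"aws:kms"` and `"AES256"` are values S3 uses to report SSE-KMS and SSE-S3 encryption, respectively.

```python
from collections import defaultdict
from typing import Dict, List

# Assumption: this organization's control deems only SSE-KMS "sufficient".
# "aws:kms" (SSE-KMS) and "AES256" (SSE-S3) are values S3 uses to report encryption.
SUFFICIENT_SSE = {"aws:kms"}


def is_compliant(bucket: dict) -> bool:
    return bucket.get("sse") in SUFFICIENT_SSE


def overall_compliance(buckets: List[dict]) -> float:
    """Percentage of buckets meeting the encryption control."""
    if not buckets:
        raise ValueError("no buckets to assess")
    return 100.0 * sum(is_compliant(b) for b in buckets) / len(buckets)


def compliance_by_account(buckets: List[dict]) -> Dict[str, float]:
    """Per-account percentages, so owners of lagging accounts can be targeted."""
    by_account = defaultdict(list)
    for b in buckets:
        by_account[b["account"]].append(b)
    return {account: overall_compliance(bs) for account, bs in sorted(by_account.items())}
```

Breaking the figure down per account turns a single organization-wide percentage into a list of specific teams to work with.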

Percentage of Changes Rejected

As part of a security team’s enablement objective, you need to determine its efficacy over time. Teams should come to understand the security context they operate within, and doing things securely should become the default. A metaphor I like for this is that developers are like lightning: they pursue the path of least resistance. If you can make the secure path the one of least resistance, you will observe the percentage of changes rejected on security grounds decrease over time.
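A minimal sketch of tracking this percentage month by month follows, assuming each change is recorded as a hypothetical `("YYYY-MM", rejected_for_security)` pair. The trend test is intentionally crude; a real report would smooth out noise from months with few changes.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def rejection_rate_by_month(changes: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Percentage of changes rejected on security grounds, per 'YYYY-MM' month."""
    counts = defaultdict(lambda: [0, 0])  # month -> [rejected, total]
    for month, rejected in changes:
        counts[month][1] += 1
        if rejected:
            counts[month][0] += 1
    return {month: round(100.0 * r / t, 1) for month, (r, t) in sorted(counts.items())}


def trending_down(rates: Dict[str, float]) -> bool:
    """Crude trend test: each month's rate is no higher than the previous month's."""
    values = list(rates.values())
    return all(later <= earlier for earlier, later in zip(values, values[1:]))
```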

1.7 The Principles of Security

By establishing principles, a common set of beliefs, and embedding them through action, you make significant progress on two pivotal goals. First, you strengthen a culture that takes security seriously. Second, you build the foundations for autonomy. Fundamentally, scaling is achieved by giving people the tools and mental models required to make the correct decisions. It is not enough for people to be able to parrot answers back; they need to be able to arrive at the same answer independently. To that end, let’s look at a starting set of principles.

Least Privilege

Often the first principle that comes to mind when discussing security, the principle of least privilege is that actors in the system, both human and robot, have enough privilege to perform their jobs and no more. For example, a human cannot make changes to production environments without using the CI/CD pipeline, or a system cannot provision infrastructure in regions that are not needed for the application.

Currently this is hard to achieve and maintain. As I have already discussed, the business needs to be agile, which means that the scope of permissions someone requires can rapidly change. The most common issue I’ve seen is that although getting extended permissions is normally streamlined and fairly trivial, permissions are rarely revoked or challenged. Often what was least privileged in the past is now overly privileged due to a decrease in scope. We’ll evaluate recipes later in the book around both the initial creation of permission sets and their ongoing maintenance.
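The ongoing-maintenance half of the problem can be sketched as comparing what a principal is granted with what it has actually used during an observation window. This assumes you already have a list of used actions, such as the last-accessed information that cloud IAM services can report. The function name is hypothetical, and the matching uses Python's `fnmatch` as an approximation of IAM-style `*` and `?` wildcards, not as a full policy evaluator.

```python
from fnmatch import fnmatchcase
from typing import Dict, Iterable, List


def review_permissions(granted: Iterable[str], used: Iterable[str]) -> Dict[str, List[str]]:
    """Flag wildcard grants and grants not exercised during an observation window.

    Action names are lowercased because IAM-style action names are case-insensitive.
    """
    granted = {g.lower() for g in granted}
    used = {u.lower() for u in used}
    wildcards = sorted(g for g in granted if "*" in g)
    unused = sorted(
        g for g in granted
        # A grant counts as used if any observed action matches it (wildcards included).
        if not any(fnmatchcase(u, g) for u in used)
    )
    return {"wildcards": wildcards, "unused": unused}
```

Unused grants are candidates for revocation, and wildcard grants are candidates for narrowing, which together address permissions drifting from least privileged to overly privileged.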

Only as Strong as Your Weakest Link

Your security posture is not determined by your strongest point but by your weakest. Having a castle doesn’t help keep you safe if you leave the gate unlocked and open. When you look at how to implement cloud native security, you need to make sure you’re focusing on the weak points, not reinforcing areas of strength.

There’s no value in investing significant time in finely tuned identity and access management (IAM) policies if users are not ubiquitously using multifactor authentication (MFA).

Defense in Depth

This principle is closely related to the concept of weakest links. To have a robust security posture, you need layered solutions. For example, company systems are often only accessible over a virtual private network (VPN), and the intent is that the VPN is only accessible by authenticated users; however, you should not implicitly trust that all users coming from the VPN address space have been authenticated. Otherwise, a compromise in one system cascades, and the potential impact of a breach is magnified.

Network design, discussed in Chapter 5, offers another example. Applications in the cloud have distinct identities that define their access; beneath those sit firewall rules that use IP address ranges to allow groups of systems to communicate; and beneath those are the routes that dictate where traffic can flow. Together these layers progressively reduce the blast radius of a breach: a compromise in one layer does not negate all the controls.
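To make the layering concrete, here is a minimal model, not an implementation of any cloud's networking. It assumes a hypothetical destination named `orders-db` with made-up identities and CIDR ranges; in a real environment these layers live in separate services (IAM, firewall rules, route tables). Each layer is evaluated independently, and traffic flows only if all of them permit it.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    source_identity: str  # workload identity of the caller
    source_ip: str
    destination: str      # name of the target application


# Hypothetical policy data for a single destination, "orders-db".
# Layer 1: which workload identities may call the destination.
IDENTITY_ALLOWLIST = {"orders-db": {"orders-service"}}
# Layer 2: firewall rules admitting a narrow group of source addresses.
FIREWALL_ALLOWED_CIDRS = {"orders-db": [ipaddress.ip_network("10.1.0.0/24")]}
# Layer 3: routes that make the destination reachable at all.
ROUTABLE_SOURCE_CIDRS = {"orders-db": [ipaddress.ip_network("10.1.0.0/16")]}


def layer_results(req: Request) -> dict[str, bool]:
    """Evaluate each control layer on its own, without trusting the others."""
    ip = ipaddress.ip_address(req.source_ip)
    return {
        "identity": req.source_identity in IDENTITY_ALLOWLIST.get(req.destination, set()),
        "firewall": any(ip in net for net in FIREWALL_ALLOWED_CIDRS.get(req.destination, [])),
        "routing": any(ip in net for net in ROUTABLE_SOURCE_CIDRS.get(req.destination, [])),
    }


def is_allowed(req: Request) -> bool:
    """Traffic flows only if every layer permits it (deny by default)."""
    return all(layer_results(req).values())


if __name__ == "__main__":
    legit = Request("orders-service", "10.1.0.5", "orders-db")
    stolen_identity = Request("orders-service", "10.2.0.9", "orders-db")
    compromised_host = Request("reporting-service", "10.1.0.7", "orders-db")
    print(is_allowed(legit))             # True
    print(is_allowed(stolen_identity))   # False: firewall and routing block it
    print(is_allowed(compromised_host))  # False: identity layer blocks it
```

The two failing requests illustrate the point of the paragraph: a stolen identity alone, or a foothold on a host inside the allowed range alone, is not enough to reach the database, because the remaining layers still hold.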

Security Is Job Zero

A phrase initially coined by Amazon, this principle speaks to how security is the first step in the process. Although I have already discussed how not everyone can be a security expert, everyone must become security conscious, literate, and cognizant. Enabling people to look through a security lens is the most critical aspect of a security team’s work, which we’ll discuss as part of the enablement strategies in Chapter 4.

Culturally, security has to be the basis from which both technical and social systems are built. An insecure foundation will undermine anything built on top of it. Bad password management undoes password complexity requirements. Unencrypted traffic can make encryption at rest pointless.

You can’t out-engineer a culture that doesn’t value security at its core.

Quality Is Built In

This principle goes hand in hand with security as job zero. Historically, security was a “bolt-on”: once the functionality was built, it was then made secure, to varying levels of efficacy. In a world centered on the need for business agility, it is hard to see how this “bolt-on” approach, even when it was effective in preventing incidents, could allow teams to be agile and effective. Security is an aspect of system quality; following the preceding principle, it is the alpha quality, and without a secure foundation a change should never see the light of day. Code, architectures, and systems need to be designed to allow for security, meaning that security must be prioritized and invested in from day one.

Businesses can be myopic in the pursuit of new functionality, and under pressure to hit release dates, they often deprioritize security. This technical debt accrues over time and becomes incredibly expensive to pay back, costing orders of magnitude more than building security in at the start would have.

DevSecOps initiatives, tooling, and processes like threat modeling make security a first-class concern before a line of code is written. When security standards are enforced from the beginning, security is no longer work that can be dropped under schedule pressure; it’s part of the standard operating procedure.

Chapter Summary

Let’s review the learning objectives.

Modern life means that ever more value is created digitally, and with that come greater incentives for cybercriminals and, as regulation increases, greater damages from a breach. As attacks grow in sophistication, so must our defenses. Cloud native security is security principles applied in true symbiosis with the cloud, ensuring that you are building fit-for-purpose processes, using the right tools, and making sure your people understand the new reality.

Security is an enablement function in a modern organization, as opposed to the gatekeeper position it often previously occupied. It needs to allow change to flow quickly, easily, and safely. Security exists to manage risk at both the macro and micro levels. Risk is introduced through change, so being able to understand change at scale is critical to managing risk. Smaller, more frequent changes are far less risky than bigger, less frequent ones.

DevSecOps is a cultural shift that transforms how security works in concert with delivery teams. You cannot achieve DevSecOps by buying a new tool; instead, it is a deep-rooted change that starts and ends with people. I discussed a few quantitative measures that, used together, show how security is maturing in your organization, such as the percentage of compliant infrastructure, the speed with which issues are rectified, and the number of potential breaches negated.

The fundamental principles of security have not changed in decades; what has changed is how they are applied. From least privilege to defense in depth, understanding these principles enables you to form a security strategy and see how the recipes in this book stem from a strong, principled foundation.

With the introduction done, we’ll now look at the recipes that allow you to establish a solid foundation in the cloud. As with any building, if the foundation is shaky, everything built on top will quickly come crashing down around you.
