Chapter 4. Multi-Container Pods

The previous chapters explained how to manage single-container Pods. That’s the norm, as you’ll want to run a microservice inside of a single Pod to reinforce separation of concerns and increase cohesion. Technically, however, a Pod allows you to configure and run multiple containers. The section “Multi-Container Pods” of the CKAD curriculum addresses this concern.

In this chapter, we’ll discuss the need for multi-container Pods, their relevant use cases, and the design patterns that emerged in the Kubernetes community. The exam outline specifically mentions three design patterns: the sidecar, the adapter, and the ambassador. We’ll make sure to get a good grasp of their application with the help of representative examples.

We’ll also talk about init containers. Init containers help with transitioning the runtime environment into an expected state so that the application can work properly. While they’re not explicitly mentioned in the CKAD curriculum, I think it’s important to cover the concept, as it falls under the topic of multi-container Pods.

At a high level, this chapter covers the following concepts:

- Pod
- Container
- Volume
- Design patterns

Note

This chapter will use the concept of a Volume. Refer to Chapter 8 for more information if you’re not familiar with Kubernetes’ persistent storage options.

Defining Multiple Containers in a Pod

How to design a Pod appropriately isn’t always apparent, especially to Kubernetes beginners. Upon reading the Kubernetes user documentation and tutorials on the internet, you’ll quickly find out that you can create a Pod that runs multiple containers at the same time. The question often arises, “Should I deploy my microservices stack to a single Pod with multiple containers, or should I create multiple Pods, each running a single microservice?” The short answer is to operate a single microservice per Pod. This modus operandi promotes a decentralized, decoupled, and distributed architecture. Furthermore, it helps with rolling out new versions of a microservice without necessarily interrupting other parts of the system.

So what’s the point of running multiple containers in a Pod then? There are two common use cases. Sometimes, you’ll want to initialize your Pod by executing setup scripts, commands, or any other kind of preconfiguration procedure before the application container should start. This logic runs in a so-called init container. Other times, you’ll want to provide helper functionality that runs alongside the application container to avoid the need to bake the logic into application code. For example, you may want to massage the log output produced by the application. Containers running helper logic are called sidecars.

Init Containers

Init containers provide initialization logic that runs before the main application even starts. To draw an analogy, let’s look at a similar concept in programming languages. Many programming languages, especially object-oriented ones like Java or C++, come with constructors or, in Java’s case, static initializer blocks. Those language constructs initialize fields, validate data, and set the stage before an object can be used. Not all classes need a constructor, but they are equipped with the capability.

In Kubernetes, this functionality can be achieved with the help of init containers. Init containers are always started before the main application containers and have their own lifecycle. To split up the initialization logic, you can distribute the work across multiple init containers, which run one at a time in the order they are defined in the manifest. Each one must complete successfully before the next one starts. Of course, initialization logic can fail. If an init container produces an error, the kubelet restarts it until it succeeds, unless the Pod’s restartPolicy is Never, in which case the whole Pod is marked as failed. Whenever the Pod itself is restarted, all init containers run again in sequential order. Thus, to prevent any side effects, making init container logic idempotent is a good practice. Figure 4-1 shows a Pod with two init containers and the main application.

Figure 4-1. Sequential and atomic lifecycle of init containers in a Pod
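The advice to keep init logic idempotent can be made concrete with a small shell sketch. It is not taken from the book’s examples: the function name write_config and the scratch directory are assumptions for illustration, and the JSON payload simply mirrors the configuration used later in Example 4-1. The point is that running the setup twice leaves the same state as running it once.

```shell
#!/bin/sh
# Hypothetical init script; write_config and the paths are illustrative names.
# Running it repeatedly produces the same end state as running it once.

write_config() {
  config_dir="$1"
  config_file="$config_dir/config.json"

  # mkdir -p succeeds whether or not the directory already exists.
  mkdir -p "$config_dir" || return 1

  # Write to a temporary file first, then rename it into place, so that a
  # retry after an interrupted run never sees a half-written config file.
  tmp_file="$config_file.tmp"
  printf '%s\n' '{"dbConfig":{"host":"localhost","port":5432,"dbName":"customers"}}' \
    > "$tmp_file" || return 1
  mv "$tmp_file" "$config_file"
}

# Demo run against a scratch directory instead of /usr/shared/app.
demo_dir="$(mktemp -d)"
write_config "$demo_dir/app"
write_config "$demo_dir/app"   # second run: same result, no error
cat "$demo_dir/app/config.json"
```

In an init container, a script along these lines would target the shared Volume mount path rather than a temporary directory.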

In the past couple of chapters, we’ve explored how to define a container within a Pod. You simply specify its configuration under spec.containers. For init containers, Kubernetes provides a separate section: spec.initContainers. Init containers are always executed before the main application containers, regardless of the definition order in the manifest. The manifest shown in Example 4-1 defines an init container and a main application container. The init container sets up a configuration file in the directory /usr/shared/app. This directory has been shared through a Volume so that it can be referenced by a Node.js-based application running in the main container.

Example 4-1. A Pod defining an init container

    apiVersion: v1
    kind: Pod
    metadata:
      name: business-app
    spec:
      initContainers:
      - name: configurer
        image: busybox:1.32.0
        command: ['sh', '-c', 'echo Configuring application... && mkdir -p /usr/shared/app && echo -e "{\"dbConfig\":{\"host\":\"localhost\",\"port\":5432,\"dbName\":\"customers\"}}" > /usr/shared/app/config.json']
        volumeMounts:
        - name: configdir
          mountPath: "/usr/shared/app"
      containers:
      - image: bmuschko/nodejs-read-config:1.0.0
        name: web
        ports:
        - containerPort: 8080
        volumeMounts:
        - name: configdir
          mountPath: "/usr/shared/app"
      volumes:
      - name: configdir
        emptyDir: {}

When starting the Pod, you’ll see that the status column of the get command provides information on init containers as well. The prefix Init: signifies that an init container is in the process of being executed. The status portion after the colon character shows the number of init containers completed versus the overall number of init containers configured:

    $ kubectl create -f init.yaml
    pod/business-app created
    $ kubectl get pod business-app
    NAME           READY   STATUS     RESTARTS   AGE
    business-app   0/1     Init:0/1   0          2s
    $ kubectl get pod business-app
    NAME           READY   STATUS    RESTARTS   AGE
    business-app   1/1     Running   0          8s

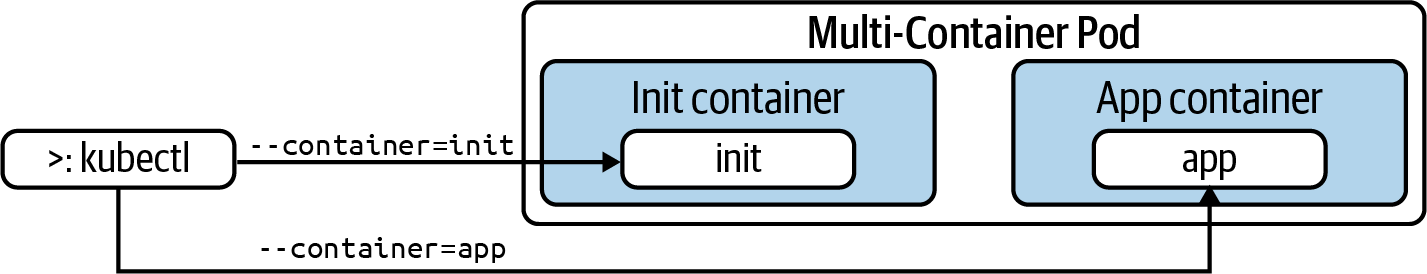

Errors can occur during the execution of init containers. You can always retrieve the logs of an init container by using the --container command-line option (or -c in its short form), as shown in Figure 4-2.

Figure 4-2. Targeting a specific container

The following command renders the logs of the configurer init container, which equates to the echo command we configured in the YAML manifest:

    $ kubectl logs business-app -c configurer
    Configuring application...

The Sidecar Pattern

The lifecycle of an init container looks as follows: it starts up, runs its logic, then terminates once the work has been done. Init containers are not meant to keep running over a longer period of time. There are scenarios that call for a different usage pattern. For example, you may want to create a Pod that runs multiple containers continuously alongside one another.

Typically, there are two categories of containers: the container that runs the application and another container that provides helper functionality to the primary application. In the Kubernetes space, the container providing helper functionality is called a sidecar. Among the most common uses of a sidecar container are file synchronization, logging, and watching for changes. Sidecars usually operate asynchronously and are not part of the main traffic or public API of the primary application.

To illustrate the behavior of a sidecar, we’ll consider the following use case. The main application container runs a web server—in this case, NGINX. Once started, the web server produces two standard logfiles. The file /var/log/nginx/access.log captures requests to the web server’s endpoint. The other file, /var/log/nginx/error.log, records failures while processing incoming requests.

As part of the Pod’s functionality, we’ll want to implement a monitoring service. The sidecar container polls the file error.log periodically and checks if any failures have been discovered. More specifically, the service tries to find failures assigned to the error log level, indicated by [error] in the log file. If an error is found, the monitoring service will react to it. For example, it could send a notification to the administrators of the system. We’ll keep the functionality as simple as possible. The monitoring service will simply render an error message to standard output. The file exchange between the main application container and the sidecar container happens through a Volume, as shown in Figure 4-3.

Figure 4-3. The sidecar pattern in action

The YAML manifest shown in Example 4-2 sets up the described scenario. The trickiest portion of the code is the lengthy shell command. The command runs an infinite loop. In each iteration, we inspect the contents of the file error.log, grep for an error, and potentially act on it. The loop then sleeps for 10 seconds before the next iteration.

Example 4-2. An exemplary sidecar pattern implementation

    apiVersion: v1
    kind: Pod
    metadata:
      name: webserver
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: logs-vol
          mountPath: /var/log/nginx
      - name: sidecar
        image: busybox
        command: ["sh", "-c", "while true; do if [ \"$(cat /var/log/nginx/error.log | grep 'error')\" != \"\" ]; then echo 'Error discovered!'; fi; sleep 10; done"]
        volumeMounts:
        - name: logs-vol
          mountPath: /var/log/nginx
      volumes:
      - name: logs-vol
        emptyDir: {}

When starting up the Pod, you’ll notice that the overall number of containers shows 2. Once all containers have started, the Pod signals a Running status:

    $ kubectl create -f sidecar.yaml
    pod/webserver created
    $ kubectl get pods webserver
    NAME        READY   STATUS              RESTARTS   AGE
    webserver   0/2     ContainerCreating   0          4s
    $ kubectl get pods webserver
    NAME        READY   STATUS    RESTARTS   AGE
    webserver   2/2     Running   0          5s

You will find that error.log does not contain any failure to begin with. It starts out as an empty file. With the following commands, you’ll provoke an error on purpose. After waiting for at least 10 seconds, you’ll find the expected message on the terminal, which you can query for with the logs command:

    $ kubectl logs webserver -c sidecar
    $ kubectl exec webserver -it -c sidecar -- /bin/sh
    / # wget -O- localhost?unknown
    Connecting to localhost (127.0.0.1:80)
    wget: server returned error: HTTP/1.1 404 Not Found
    / # cat /var/log/nginx/error.log
    2020/07/18 17:26:46 [error] 29#29: *2 open() "/usr/share/nginx/html/unknown" \
    failed (2: No such file or directory), client: 127.0.0.1, server: localhost, \
    request: "GET /unknown HTTP/1.1", host: "localhost"
    / # exit
    $ kubectl logs webserver -c sidecar
    Error discovered!
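The one-line command in Example 4-2 is easier to follow when spread over several lines. The following is a sketch of the same polling logic, not the manifest’s exact command: the function names has_errors and monitor are assumptions, and the check matches the literal string [error] rather than the broader pattern 'error' used in the manifest.

```shell
#!/bin/sh
# Readable version of the sidecar polling loop; function names are illustrative.

# Succeeds (exit code 0) when the log file contains an [error] entry.
# A missing file counts as "no errors" rather than as a failure.
has_errors() {
  log_file="$1"
  [ -f "$log_file" ] && grep -q '\[error\]' "$log_file"
}

# Polls the log file forever and reports whenever an error entry is present.
monitor() {
  log_file="$1"
  interval="${2:-10}"   # seconds between checks, defaults to 10
  while true; do
    if has_errors "$log_file"; then
      echo 'Error discovered!'
    fi
    sleep "$interval"
  done
}

# Inside the sidecar container this could be started as:
#   monitor /var/log/nginx/error.log 10
```

Like the original command, this sketch reports the error on every iteration for as long as the entry remains in the file. A real monitoring service would likely remember which entries it has already seen.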

The Adapter Pattern

As application developers, we want to focus on implementing business logic. For example, as part of a two-week sprint, say we’re tasked with adding a shopping cart feature. In addition to the functional requirements, we also have to think about operational aspects like exposing administrative endpoints or crafting meaningful and properly formatted log output. It’s easy to fall into the habit of simply rolling all aspects into the application code, making it more complex and harder to maintain. Cross-cutting concerns in particular need to be replicated across multiple applications and are often copied and pasted from one code base to another.

In Kubernetes, we can avoid bundling cross-cutting concerns into the application code by running them in another container apart from the main application container. The adapter pattern transforms the output produced by the application to make it consumable in the format needed by another part of the system. Figure 4-4 illustrates a concrete example of the adapter pattern.

Figure 4-4. The adapter pattern in action

The business application running in the main container produces timestamped information (in this case, the available disk space) and writes it to the file diskspace.txt. As part of the architecture, we want to consume the file from a third-party monitoring application. The problem is that the external application requires the information to exclude the timestamp. Now, we could change the logging format to avoid writing the timestamp, but what do we do if we actually want to know when the log entry has been written? This is where the adapter pattern can help. A sidecar container executes transformation logic that turns the log entries into the format needed by the external system without having to change application logic.

The YAML manifest shown in Example 4-3 illustrates what this implementation of the adapter pattern could look like. The app container produces a new log entry every five seconds. The transformer container consumes the contents of the file, removes the timestamp, and writes it to a new file. Both containers have access to the same mount path through a Volume.

Example 4-3. An exemplary adapter pattern implementation

    apiVersion: v1
    kind: Pod
    metadata:
      name: adapter
    spec:
      containers:
      - args:
        - /bin/sh
        - -c
        - 'while true; do echo "$(date) | $(du -sh ~)" >> /var/logs/diskspace.txt; sleep 5; done;'
        image: busybox
        name: app
        volumeMounts:
        - name: config-volume
          mountPath: /var/logs
      - image: busybox
        name: transformer
        args:
        - /bin/sh
        - -c
        - 'sleep 20; while true; do while read LINE; do echo "$LINE" | cut -f2 -d"|" >> $(date +%Y-%m-%d-%H-%M-%S)-transformed.txt; done < /var/logs/diskspace.txt; sleep 20; done;'
        volumeMounts:
        - name: config-volume
          mountPath: /var/logs
      volumes:
      - name: config-volume
        emptyDir: {}

After creating the Pod, we’ll find two running containers. We should be able to locate the original file, /var/logs/diskspace.txt, after shelling into the transformer container. The transformed data exists in a separate file in the container’s working directory, which is the root directory in this case:

    $ kubectl create -f adapter.yaml
    pod/adapter created
    $ kubectl get pods adapter
    NAME      READY   STATUS    RESTARTS   AGE
    adapter   2/2     Running   0          10s
    $ kubectl exec adapter --container=transformer -it -- /bin/sh
    / # cat /var/logs/diskspace.txt
    Sun Jul 19 20:28:07 UTC 2020 | 4.0K /root
    Sun Jul 19 20:28:12 UTC 2020 | 4.0K /root
    / # ls -l
    total 40
    -rw-r--r-- 1 root root 60 Jul 19 20:28 2020-07-19-20-28-28-transformed.txt
    ...
    / # cat 2020-07-19-20-28-28-transformed.txt
    4.0K /root
    4.0K /root
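The core of the transformer is the cut invocation that drops the timestamp. The sketch below isolates that step so it can be tried outside the cluster. The function name strip_timestamp is an assumption, and the sketch uses -f2- instead of the manifest’s -f2 so that a payload containing a pipe character of its own is not truncated.

```shell
#!/bin/sh
# Sketch of the transformation step; strip_timestamp is an illustrative name.

# Reads "timestamp | payload" lines on stdin and prints only the payload.
# -d'|' splits each line on the pipe character; -f2- keeps every field after
# the first one, so additional pipes inside the payload are preserved.
strip_timestamp() {
  cut -d'|' -f2-
}

# Sample line in the format written by the app container.
printf '%s\n' 'Sun Jul 19 20:28:07 UTC 2020 | 4.0K /root' | strip_timestamp
```

The output keeps the single space that follows the pipe in the input line. Whether that matters depends on what the consuming system expects.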

The Ambassador Pattern

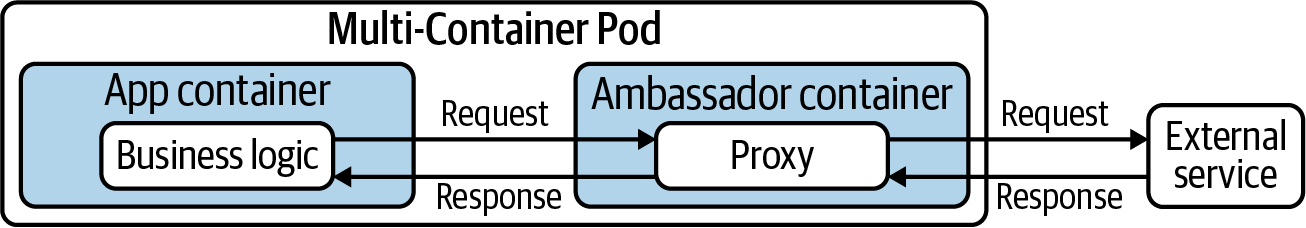

Another important design pattern covered by the CKAD is the ambassador pattern. The ambassador pattern provides a proxy for communicating with external services.

There are many use cases that can justify the introduction of the ambassador pattern. The overarching goal is to hide and/or abstract the complexity of interacting with other parts of the system. Typical responsibilities include retry logic upon a request failure, security concerns like providing authentication or authorization, or monitoring latency or resource usage. Figure 4-5 shows the higher-level picture.

Figure 4-5. The ambassador pattern in action

In this example, we’ll want to implement rate-limiting functionality for HTTP(S) calls to an external service. For example, the requirements for the rate limiter could say that an application can only make a maximum of 5 calls every 15 minutes. Instead of tightly coupling the rate-limiting logic to the application code, it will be provided by an ambassador container. Any calls made from the business application need to be funneled through the ambassador container. Example 4-4 shows a Node.js-based rate limiter implementation that makes calls to the external service Postman Echo.

Example 4-4. Node.js HTTP rate limiter implementation

    const express = require('express');
    const app = express();
    const rateLimit = require('express-rate-limit');
    const https = require('https');

    const rateLimiter = rateLimit({
      windowMs: 15 * 60 * 1000,
      max: 5,
      message:
        'Too many requests have been made from this IP, please try again after an hour'
    });

    app.get('/test', rateLimiter, function (req, res) {
      console.log('Received request...');
      var id = req.query.id;
      var url = 'https://postman-echo.com/get?test=' + id;
      console.log("Calling URL %s", url);

      https.get(url, (resp) => {
        let data = '';

        resp.on('data', (chunk) => {
          data += chunk;
        });

        resp.on('end', () => {
          res.send(data);
        });

      }).on("error", (err) => {
        res.send(err.message);
      });
    })

    var server = app.listen(8081, function () {
      var port = server.address().port
      console.log("Ambassador listening on port %s...", port)
    })

The corresponding Pod shown in Example 4-5 runs the main application container on a different port than the ambassador container. Every call to the HTTP endpoint of the container named business-app would delegate to the HTTP endpoint of the container named ambassador. It’s important to mention that containers running inside of the same Pod can communicate via localhost. No additional networking configuration is required.

Example 4-5. An exemplary ambassador pattern implementation

    apiVersion: v1
    kind: Pod
    metadata:
      name: rate-limiter
    spec:
      containers:
      - name: business-app
        image: bmuschko/nodejs-business-app:1.0.0
        ports:
        - containerPort: 8080
      - name: ambassador
        image: bmuschko/nodejs-ambassador:1.0.0
        ports:
        - containerPort: 8081

Let’s test the functionality. First, we’ll create the Pod, shell into the container that runs the business application, and execute a series of curl commands. The first five calls will be allowed to the external service. On the sixth call, we’ll receive an error message, as the rate limit has been reached within the given time frame:

    $ kubectl create -f ambassador.yaml
    pod/rate-limiter created
    $ kubectl get pods rate-limiter
    NAME           READY   STATUS    RESTARTS   AGE
    rate-limiter   2/2     Running   0          5s
    $ kubectl exec rate-limiter -it -c business-app -- /bin/sh
    # curl localhost:8080/test
    {"args":{"test":"123"},"headers":{"x-forwarded-proto":"https", \
    "x-forwarded-port":"443","host":"postman-echo.com", \
    "x-amzn-trace-id":"Root=1-5f177dba-e736991e882d12fcffd23f34"}, \
    "url":"https://postman-echo.com/get?test=123"}
    ...
    # curl localhost:8080/test
    Too many requests have been made from this IP, please try again after an hour
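The behavior observed above (five calls pass, the sixth is rejected) follows from the rule of at most 5 calls per 15-minute window. The sketch below illustrates that rule with a simple fixed-window counter in shell. It is not how the express-rate-limit library works internally, it does not track clients separately, and all variable and function names are assumptions made for illustration.

```shell
#!/bin/sh
# Fixed-window rate limiter sketch; all names are illustrative.

WINDOW_SECONDS=900   # 15 minutes, matching windowMs in Example 4-4
MAX_CALLS=5          # matching max in Example 4-4

window_start=0       # epoch second at which the current window began
call_count=0         # calls counted in the current window

# allow_request TS: succeeds if a call at epoch second TS is within the limit.
allow_request() {
  ts="$1"
  # Start a fresh window once the current one has expired.
  if [ $((ts - window_start)) -ge "$WINDOW_SECONDS" ]; then
    window_start="$ts"
    call_count=0
  fi
  # Reject the call if the window is already full.
  if [ "$call_count" -ge "$MAX_CALLS" ]; then
    return 1
  fi
  call_count=$((call_count + 1))
  return 0
}

# Six calls at the same instant: the first five pass, the sixth is rejected.
now="$(date +%s)"
for i in 1 2 3 4 5 6; do
  if allow_request "$now"; then
    echo "call $i: allowed"
  else
    echo "call $i: Too many requests"
  fi
done
```

Keeping this logic in the ambassador container means the business application only needs to know the local endpoint it calls, not the limits that apply to the external service.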

Summary

Real-world scenarios call for running multiple containers inside of a Pod. An init container helps with setting the stage for the main application container by executing initialization logic. Once the initialization logic has been processed, the container terminates. The main application container only starts if the init container completed successfully.

Kubernetes enables implementing software engineering best practices like separation of concerns and the single-responsibility principle. Cross-cutting concerns or helper functionality can be run in a so-called sidecar container. A sidecar container lives alongside the main application container within the same Pod and fulfills this exact role.

We talked about other design patterns that involve multiple containers per Pod: the adapter pattern and the ambassador pattern. The adapter pattern helps with “translating” data produced by the application so that it becomes consumable by third-party services. The ambassador pattern acts as a proxy for the application container when communicating with external services by abstracting the “how.”

Exam Essentials

- Understand the need for running multiple containers in a Pod

  Pods can run multiple containers. You will need to understand the difference between init containers and sidecar containers and their respective lifecycles. Practice accessing a specific container in a multi-container Pod with the help of the command-line option --container.

- Know how to create an init container

  Init containers see a lot of use in enterprise Kubernetes cluster environments. Understand the need for using them in their respective scenarios. Practice defining a Pod with one or even more init containers and observe their linear execution when creating the Pod. It’s important to experience the behavior of a Pod in failure situations that occur in an init container.

- Understand sidecar patterns and how to implement them

  Sidecar containers are best understood by implementing a scenario for one of the established patterns. Based on what you’ve learned, come up with your own applicable use case and create a multi-container Pod to solve it. It’s helpful to be able to identify sidecar patterns and understand why they are important in practice and how to stand them up yourself. While implementing your own sidecars, you may notice that you have to brush up on your knowledge of bash.

Sample Exercises

Solutions to these exercises are available in the Appendix.

- Create a YAML manifest for a Pod named complex-pod. The main application container named app should use the image nginx and expose the container port 80. Modify the YAML manifest so that the Pod defines an init container named setup that uses the image busybox. The init container runs the command wget -O- google.com.
- Create the Pod from the YAML manifest.
- Download the logs of the init container. You should see the output of the wget command.
- Open an interactive shell to the main application container and run the ls command. Exit out of the container.
- Force-delete the Pod.
- Create a YAML manifest for a Pod named data-exchange. The main application container named main-app should use the image busybox. The container runs a command that writes a new file every 30 seconds in an infinite loop in the directory /var/app/data. The filename follows the pattern {counter++}-data.txt. The variable counter is incremented every interval and starts with the value 1.
- Modify the YAML manifest by adding a sidecar container named sidecar. The sidecar container uses the image busybox and runs a command that counts the number of files produced by the main-app container every 60 seconds in an infinite loop. The command writes the number of files to standard output.
- Define a Volume of type emptyDir. Mount the path /var/app/data for both containers.
- Create the Pod. Tail the logs of the sidecar container.
- Delete the Pod.
