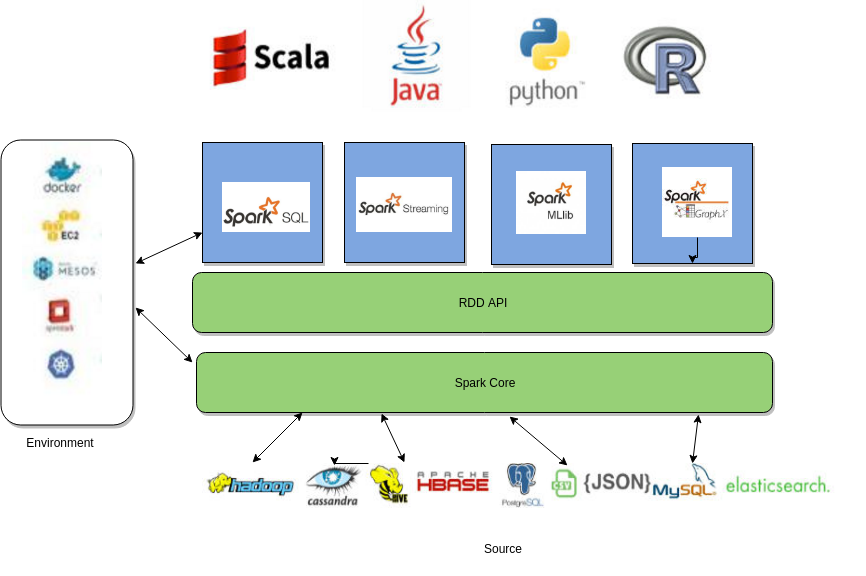

As discussed previously, Spark can be used for various purposes, such as real-time processing, machine learning, graph processing, and so on. Spark consists of independent components that can be used as each use case requires. The following figures give a brief overview of Spark's ecosystem:

- Spark core: Spark core is the base, general-purpose layer of the Spark ecosystem. It contains the basic, common functionality used by all the layers built on top of it. This means that any performance improvement in the core is automatically inherited by all the components above it. RDD, which is the main abstraction ...
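To make the layering idea concrete, here is a minimal Python sketch under stated assumptions: it is a toy in-memory model, not Spark itself. `ToyRDD`, `sql_like_select_where`, and `ml_like_mean` are hypothetical names and are not part of Spark's API. The two "components" call only the core's `map`, `filter`, and `collect`, so a speed-up made inside `collect` would benefit both of them without any change to their code.

```python
from typing import Callable, Iterable, List, Optional


class ToyRDD:
    """Hypothetical stand-in for a core dataset abstraction (NOT Spark's RDD API).

    Transformations are only recorded; nothing runs until collect() is called.
    """

    def __init__(self, source: Iterable, ops: Optional[List[Callable]] = None):
        self._source = list(source)
        self._ops = ops or []

    def map(self, fn: Callable) -> "ToyRDD":
        # Record the transformation and return a new dataset; the original is unchanged.
        return ToyRDD(self._source, self._ops + [lambda it: map(fn, it)])

    def filter(self, pred: Callable) -> "ToyRDD":
        return ToyRDD(self._source, self._ops + [lambda it: filter(pred, it)])

    def collect(self) -> list:
        # The single execution path shared by every higher-level component:
        # optimizing this method speeds up everything built on top of it.
        it = iter(self._source)
        for op in self._ops:
            it = op(it)
        return list(it)


# Two hypothetical "components" that rely only on the core API above.
def sql_like_select_where(rows: ToyRDD, column: str, value) -> list:
    return rows.filter(lambda r: r[column] == value).collect()


def ml_like_mean(values: ToyRDD) -> float:
    data = values.collect()
    return sum(data) / len(data)


if __name__ == "__main__":
    rows = ToyRDD([{"name": "a", "dept": "x"}, {"name": "b", "dept": "y"}])
    print(sql_like_select_where(rows, "dept", "x"))
    print(ml_like_mean(ToyRDD([1, 2, 3, 4]).map(lambda x: x * 2)))
```

The design choice being illustrated is that higher layers never execute data themselves; they describe work and hand it to the core, which is why improvements to the core propagate upward.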