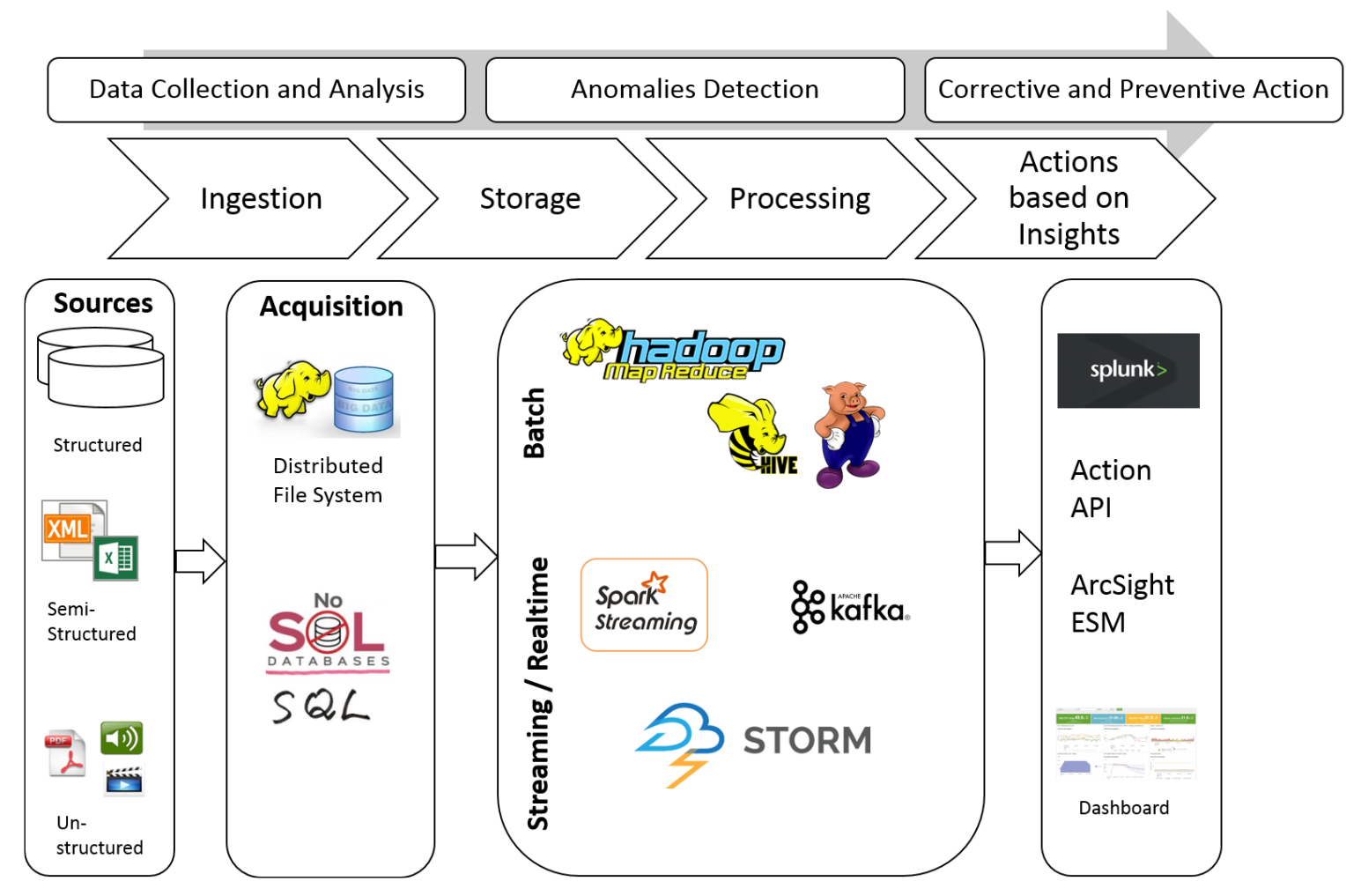

In typical Big Data environments, a layered architecture is implemented. Layers within the data processing pipeline help in decoupling various stages through which the data passes to protect the critical infrastructure. The data flows through ingestion, storage, processing, and an actionize cycle, which is depicted in the following figure along with popular frameworks used for implementing the workflow:

Most of the components shown in this figure are open source and are the result of collaborative efforts by a large community. A detailed ...