Cross-entropy was described in Chapter 9, Getting Your Neurons to Work. The first input comes from layer 2: it is the result of the preceding nodes' computation on x-input. The second input, y-input, comes directly from the input node, as implemented in the following code:

    with tf.name_scope('total'):
        cross_entropy = tf.reduce_mean(diff)
    tf.summary.scalar('cross_entropy', cross_entropy)
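To make the reduction step concrete, the following plain-Python sketch mimics what `tf.reduce_mean(diff)` produces. It assumes that `diff` holds one softmax cross-entropy value per training example, which this excerpt does not show. The helper functions, labels, and logits are hypothetical and serve only as an illustration; this is not the chapter's TensorFlow code.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(one_hot_label, logits):
    """Cross-entropy between a one-hot label and softmax(logits)."""
    probs = softmax(logits)
    return -sum(t * math.log(p) for t, p in zip(one_hot_label, probs))

# Hypothetical mini-batch: two examples, three classes.
labels = [[1, 0, 0], [0, 1, 0]]
logits = [[2.0, 0.5, 0.1], [0.2, 0.3, 3.0]]

# Assumed stand-in for `diff`: one loss value per example.
diff = [cross_entropy(t, z) for t, z in zip(labels, logits)]

# Plain-Python equivalent of tf.reduce_mean(diff): average into one scalar.
mean_cross_entropy = sum(diff) / len(diff)
print(diff, mean_cross_entropy)
```

The first example is classified correctly and contributes a small loss, while the second puts most of its probability on the wrong class and contributes a large one. The mean is the single scalar that a summary such as `tf.summary.scalar` would record at each step.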

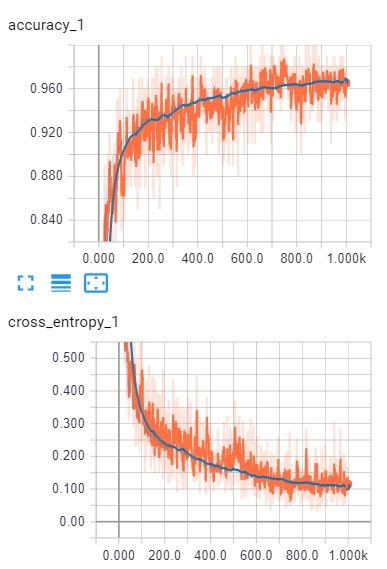

TensorFlow measures its progress, as shown in the cross_entropy_1 and accuracy_1 charts that follow:

Note how smoothly the cross-entropy value, which serves as the loss function, descends along a gradient (slope) as training progresses. ...
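The downward slope can be reproduced in miniature. The sketch below is a hypothetical illustration, not the chapter's model: it applies plain gradient descent directly to the logits of a single example (no weights or layers) and records the cross-entropy at each step. The label, starting logits, and learning rate are assumed values chosen only for the demonstration.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(one_hot_label, probs):
    """Cross-entropy between a one-hot label and predicted probabilities."""
    return -sum(t * math.log(p) for t, p in zip(one_hot_label, probs))

# Assumed toy setup: one example, three classes, true class is index 1.
label = [0, 1, 0]
logits = [1.0, 0.0, 2.0]
learning_rate = 0.5

losses = []
for step in range(20):
    probs = softmax(logits)
    losses.append(cross_entropy(label, probs))
    # For softmax cross-entropy, the gradient with respect to the
    # logits is (probs - label); step against it.
    logits = [z - learning_rate * (p - t)
              for z, p, t in zip(logits, probs, label)]

# Print every fifth recorded loss to show the trend.
for step, loss in enumerate(losses):
    if step % 5 == 0:
        print(step, round(loss, 4))
```

Each recorded loss is lower than the one before it, which is the same qualitative behavior the cross-entropy chart displays over training steps.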