Fine-tuning follows pretraining, and apart from the input preprocessing, the two steps are very similar. Instead of creating a masked sequence, we simply feed the BERT model the unmodified task-specific input together with its target output, and fine-tune all the parameters in an end-to-end fashion. Therefore, the model that we use in the fine-tuning phase is the same model that we'll use in the actual production environment.
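To make the difference in input preprocessing concrete, here is a minimal sketch in plain Python. The helper names `make_pretraining_example` and `make_finetuning_example` are hypothetical and exist only for this illustration. The masking is also simplified: a selected token is always replaced with `[MASK]`, whereas the original BERT recipe sometimes keeps the token or swaps in a random one.

```python
import random

CLS, SEP, MASK = "[CLS]", "[SEP]", "[MASK]"


def make_pretraining_example(tokens, mask_prob=0.15, seed=0):
    """Masked-LM style input: hide some tokens and ask the model to recover them.

    Simplified assumption: a selected token is always replaced with [MASK].
    """
    rng = random.Random(seed)
    inputs, targets = [CLS], [None]
    for tok in tokens:
        if rng.random() < mask_prob:
            inputs.append(MASK)   # the model sees a placeholder...
            targets.append(tok)   # ...and must predict the original token
        else:
            inputs.append(tok)
            targets.append(None)  # no prediction target at unmasked positions
    inputs.append(SEP)
    targets.append(None)
    return inputs, targets


def make_finetuning_example(tokens, label):
    """Fine-tuning input: the sequence is left unmodified and paired with a task label."""
    return [CLS] + list(tokens) + [SEP], label


if __name__ == "__main__":
    sentence = "the movie was great".split()
    print(make_pretraining_example(sentence))
    print(make_finetuning_example(sentence, label=1))
```

In both cases the model receives a sequence wrapped in `[CLS]` and `[SEP]`; only the way the input and target are built differs, which is why the same network can be reused across the two phases.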

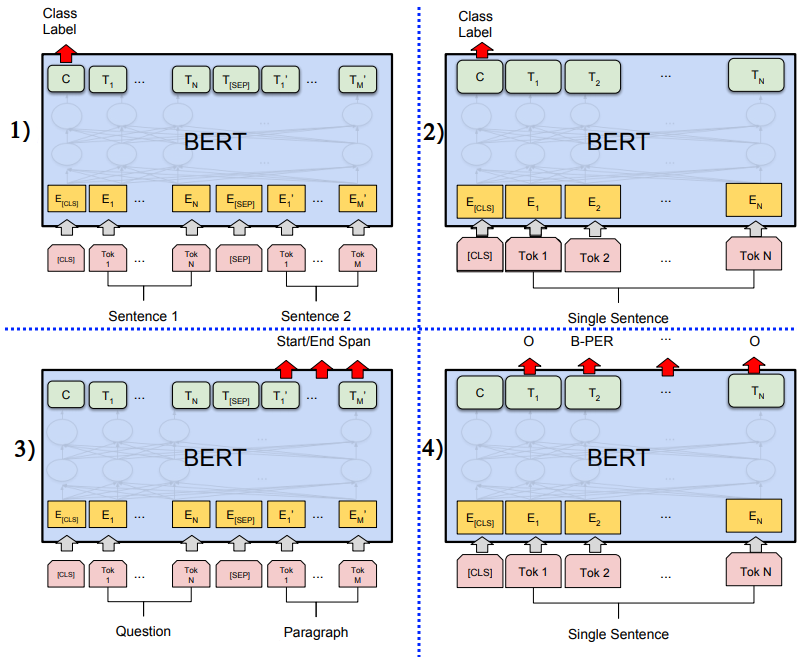

The following diagram shows how to solve several different types of task with BERT:

Let's discuss them:

- The top-left scenario ...