Chapter 1. Applied Machine Learning and Why It Fails

There are many reasons for the gap between the potential and the actual applications of ML in industry, but at its core, the failure stems from the unique challenges of operationalizing ML, commonly known as MLOps. Although companies across the globe have standardized the practice of DevOps (the workflow and automation of putting code into production systems), MLOps presents a distinct set of problems.

In the practice of DevOps, the code is the product; what is written by the engineer defines precisely how the system acts and what the customer sees. The code is easy to track, manage, update, and correct. But MLOps isn’t as straightforward; code is only half of the solution, and in many cases, much less than half. MLOps requires the seamless cooperation, coordination, and trust of code and data, a problem that has not been fully tackled and standardized by the industry.

When an ML model is put into production, teams must access not only the code that created it and the model artifact itself, but also the data it was trained on and the data it makes predictions on after deployment. Because a model is essentially an interpretation of a set of data points, any change to that original dataset, by definition, produces a new model. And as the model makes predictions in the real world, any discrepancy between the distribution of the data the model was trained on and the data it predicts on (commonly referred to as data drift) can cause the model to underperform and require retraining. Too small a sample, and your model may underfit the data, failing to converge on an accurate understanding of its distribution. Too much biased data, and your model may overfit, becoming overconfident in (and sometimes memorizing) the training data but failing to generalize to the real world. This introduces multiple issues in current analytic architectures, from data tooling, storage, and compute to human resource utilization.
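To make data drift concrete: one common way to compare a feature’s training distribution with its production distribution is the two-sample Kolmogorov-Smirnov (KS) statistic, the largest vertical gap between the two empirical cumulative distribution functions. The sketch below computes it in plain Python. The 0.2 alert threshold and the sample data are illustrative assumptions, not recommended values; in practice, `scipy.stats.ks_2samp` returns the same statistic along with a p-value.

```python
from bisect import bisect_right


def ks_statistic(train_values, live_values):
    """Largest gap between the empirical CDFs of training data and live (production) data."""
    train = sorted(train_values)
    live = sorted(live_values)
    max_gap = 0.0
    # Both empirical CDFs are step functions, so the largest gap occurs at an observed value
    for x in train + live:
        cdf_train = bisect_right(train, x) / len(train)
        cdf_live = bisect_right(live, x) / len(live)
        max_gap = max(max_gap, abs(cdf_train - cdf_live))
    return max_gap


def has_drifted(train_values, live_values, threshold=0.2):
    # The threshold is an illustrative assumption; tune it per feature and use case
    return ks_statistic(train_values, live_values) > threshold


training_feature = [float(i) for i in range(100)]      # values seen during training
stable_feature = [float(i) + 0.5 for i in range(100)]  # production data, barely shifted
shifted_feature = [float(i) + 50 for i in range(100)]  # production data, shifted by 50

print(has_drifted(training_feature, stable_feature))   # False
print(has_drifted(training_feature, shifted_feature))  # True
```

A drift check like this only flags that a distribution moved; deciding whether the move actually hurts the model still requires monitoring its predictions.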

Data Science Tools Were Not Built for Big Data

Talk to any data scientist, and they will tell you that their day consists mostly of SQL, Python, and R. These languages, which have matured over years of development, enable rapid experimentation and testing, exactly what a data scientist needs in the early stages of building an ML model. Python and R are extremely flexible and natively streamline iterative workflows. In Example 1-1, we see how a data scientist could quickly run through 50 randomly sampled ML model configurations in only a handful of lines of code.

Example 1-1. Rapid prototyping in Python with only a few lines of code

from pprint import pprint
import random

def train_model(hp_vals):
    # Hypothetical placeholder: substitute your own model-training routine here
    pass

# Define the hyperparameter search space
hyper_params = {
    'num_trees': [5, 10, 20, 40],
    'max_depth': [4, 12, 18, 24],
    'train_test_split': [(0.8, 0.2), (0.7, 0.3), (0.55, 0.45)],
}

# Train 50 models, each on a randomly sampled combination of hyperparameters
for _ in range(50):
    hp_vals = {}
    for hp in hyper_params:
        hp_vals[hp] = random.choice(hyper_params[hp])
    print('-' * 50)
    print('\nTraining model using the following hyperparameters:')
    pprint(hp_vals)
    train_model(hp_vals)
    print('Done Training\n')
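Because this search space has only 4 × 4 × 3 = 48 distinct combinations, 50 random draws with replacement necessarily contain repeated configurations. The sketch below reuses the same `hyper_params` dictionary, enumerates the full grid with `itertools.product` (the same exhaustive enumeration that scikit-learn’s `GridSearchCV` and `ParameterGrid` perform), and samples from it without replacement so no configuration is trained twice. The sample size of 20 and the random seed are arbitrary choices.

```python
import itertools
import random

# The same search space as Example 1-1
hyper_params = {
    'num_trees': [5, 10, 20, 40],
    'max_depth': [4, 12, 18, 24],
    'train_test_split': [(0.8, 0.2), (0.7, 0.3), (0.55, 0.45)],
}

# Enumerate every combination: 4 * 4 * 3 = 48 configurations
grid = [dict(zip(hyper_params, values))
        for values in itertools.product(*hyper_params.values())]
print(len(grid))  # 48

# Random search without replacement: 20 distinct configurations, no duplicates
random.seed(0)
trials = random.sample(grid, k=20)
for hp_vals in trials:
    print(hp_vals)  # a call such as train_model(hp_vals) would go here
```

Exhaustive grids grow multiplicatively with every hyperparameter added, which is why random sampling of a fixed budget of trials is a common alternative.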

In data science, however, the major trend has been toward building models on larger datasets that cannot fit in memory. This creates an issue for data scientists, because Python and R were natively built for, and are best suited to, in-memory use cases. The most popular data science framework in Python is pandas, an in-memory data computation and analysis library that makes common data science tasks incredibly simple: imputing missing data (handling null values through statistical replacement), creating statistical summaries, and applying custom mathematical functions. It works well with scikit-learn, one of the most popular and easy-to-use ML frameworks in Python. Combining these two tools enables data scientists to rapidly prototype algorithms, experiment with ideas, and come to conclusions faster and with less effort.
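As a small illustration of the imputation task, the sketch below fills missing values (`None`) with the median of the observed values. It is written in plain Python to stay self-contained; with pandas, the same step is typically a single call along the lines of `df['income'].fillna(df['income'].median())`. The `income` data here is hypothetical.

```python
from statistics import median


def impute_median(values):
    """Replace missing entries (None) with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


incomes = [52_000, None, 61_000, 48_000, None, 75_000]
print(impute_median(incomes))
# [52000, 56500.0, 61000, 48000, 56500.0, 75000]
```

The median is used here rather than the mean because it is less sensitive to a few extreme values; which statistic is appropriate depends on the data.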

Pandas and scikit-learn work well as long as the data is only a few gigabytes in size. Because these tools run on a single machine, they cannot distribute the computational load across many machines in parallel. Once your data exceeds the capacity of your personal machine or server, you begin to see degraded performance and even system failures. As the amount of collected data continues to explode, the work required increasingly exceeds the capacity of these tools.
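A quick back-of-the-envelope calculation shows how fast data outgrows a single machine. A purely numeric table stored as 64-bit floats needs 8 bytes per value, so its raw size is rows × columns × 8 bytes. The table dimensions below are hypothetical, and the estimate ignores the index, string columns, and the temporary copies many operations create, so actual peak memory usage is usually higher; pandas reports a DataFrame’s actual footprint via `DataFrame.memory_usage(deep=True)`.

```python
def numeric_table_size_gb(rows, columns, bytes_per_value=8):
    """Raw in-memory size in gigabytes (10**9 bytes) of a purely numeric table."""
    return rows * columns * bytes_per_value / 1e9


# Hypothetical training table: 500 million rows x 40 float64 feature columns
print(numeric_table_size_gb(500_000_000, 40))  # 160.0

# The same table sampled down to 1% (5 million rows) fits in a typical laptop's memory
print(numeric_table_size_gb(5_000_000, 40))    # 1.6
```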

Given this limitation, data scientists often resort to training models on subsets of their data. They can use statistical sampling techniques to preserve the most important characteristics of the data, but this practice often leads to lower-quality models, because the model has been fit to (exposed to) less information.
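One such statistical technique is stratified sampling: instead of drawing rows uniformly at random, draw the same fraction from each group (stratum) so that rare but important groups survive the cut. The sketch below assumes rows are dictionaries and uses a hypothetical `fraud` label as the stratum. Keeping at least one row per stratum is a design choice that slightly over-represents very small groups.

```python
import random
from collections import defaultdict


def stratified_sample(rows, key, fraction, seed=42):
    """Sample the same fraction from every stratum so rare groups are kept."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for row in rows:
        strata[key(row)].append(row)
    sample = []
    for group in strata.values():
        k = max(1, round(len(group) * fraction))  # keep at least one row per stratum
        sample.extend(rng.sample(group, k))
    return sample


# Hypothetical labeled data: 10 fraud rows and 990 non-fraud rows
rows = [{'id': i, 'fraud': i < 10} for i in range(1000)]
subset = stratified_sample(rows, key=lambda r: r['fraud'], fraction=0.1)
print(len(subset), sum(r['fraud'] for r in subset))  # 100 1
```

A simple random 10% sample could easily contain no fraud rows at all; stratifying guarantees each class is represented in proportion.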

Generally speaking, exposing your model to higher-quality or larger quantities of training data will often increase accuracy more than modifying the algorithm.

Another tactic is partial learning (also called incremental or out-of-core learning), a practice in which a subset of data is brought into memory to train a model, then discarded and replaced with another subset to continue training. This iterative process eventually exposes the model to the entire dataset, but it can be expensive and time-consuming. Many teams don’t have the time or resources to employ this methodology, so they stick with subsampling the original dataset.
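The sketch below shows the shape of partial learning for a one-feature linear model trained with stochastic gradient descent (SGD): each chunk is loaded, used to update the weights, and then dropped, so memory use depends on the chunk size rather than the dataset size. The data generator, learning rate, and chunk sizes are hypothetical stand-ins; scikit-learn offers the same pattern through the `partial_fit` method on estimators such as `SGDRegressor`.

```python
import random


def load_chunks(n_chunks, chunk_size, seed=0):
    """Hypothetical stand-in for reading a large dataset one chunk at a time.

    Yields lists of (x, y) points drawn from y = 3x + 2 plus a little noise."""
    rng = random.Random(seed)
    for _ in range(n_chunks):
        chunk = []
        for _ in range(chunk_size):
            x = rng.uniform(-1, 1)
            chunk.append((x, 3 * x + 2 + rng.gauss(0, 0.1)))
        yield chunk


def update_on_chunk(weights, chunk, learning_rate=0.1):
    """Run one SGD pass over a chunk for the model y = w*x + b and return new weights."""
    w, b = weights
    for x, y in chunk:
        error = (w * x + b) - y         # prediction minus target
        w -= learning_rate * error * x  # gradient of 0.5 * error**2 w.r.t. w
        b -= learning_rate * error      # gradient of 0.5 * error**2 w.r.t. b
    return w, b


weights = (0.0, 0.0)
for chunk in load_chunks(n_chunks=20, chunk_size=500):
    # Only the current chunk is held in memory; it is discarded after the update
    weights = update_on_chunk(weights, chunk)

print(weights)  # close to the true values (3.0, 2.0)
```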

But what is the purpose of storing all this data if data scientists cannot use it to improve the business?

Spark

A common response to this issue is the introduction of Spark into the data science ecosystem. Apache Spark is a popular open source project for processing massive amounts of data in parallel. It was originally built in Scala and now has APIs for Java, Python, and R. Many infrastructure engineers are comfortable setting up cluster environments for Spark processing, and significant community support exists for the tool.

Spark, however, does not solve the entire issue. First, Spark is not simple to use: its programming paradigm differs greatly from traditional Python programming and often requires specialized engineers to implement properly. Spark also does not natively support all of Python and R programmers’ favorite analytics tools. If, for example, a Python developer using Spark wants to use statsmodels, a popular statistical framework, that Python package must be installed on every Spark executor in the cluster, in the same version the data scientist is working with; otherwise, unforeseen errors may occur.
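To see why distributed code looks different from everyday Python, consider computing a mean. In a distributed engine, the data is split into partitions, each worker summarizes its own partition (a map step), and the partial results are merged (a reduce step), so the computation has to be re-expressed as mergeable (sum, count) pairs. The sketch below mimics that shape in plain Python. It is not Spark’s API; the thread pool merely stands in for executors on separate machines, and because of Python’s global interpreter lock it does not give true CPU parallelism.

```python
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def partition(data, n_parts):
    """Split data into roughly equal partitions, like blocks spread across a cluster."""
    size = -(-len(data) // n_parts)  # ceiling division
    return [data[i:i + size] for i in range(0, len(data), size)]


def map_partition(part):
    # Map step: each worker summarizes only its own partition
    return (sum(part), len(part))


def combine(a, b):
    # Reduce step: merge two partial summaries; the merge must be associative
    return (a[0] + b[0], a[1] + b[1])


data = list(range(1, 1_000_001))
parts = partition(data, n_parts=8)
with ThreadPoolExecutor(max_workers=8) as pool:
    partials = list(pool.map(map_partition, parts))
total, count = reduce(combine, partials)
print(total / count)  # 500000.5
```

A one-line `sum(data) / len(data)` would not work once the data is spread across machines, which is why porting a notebook to a distributed engine is rarely a mechanical translation.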

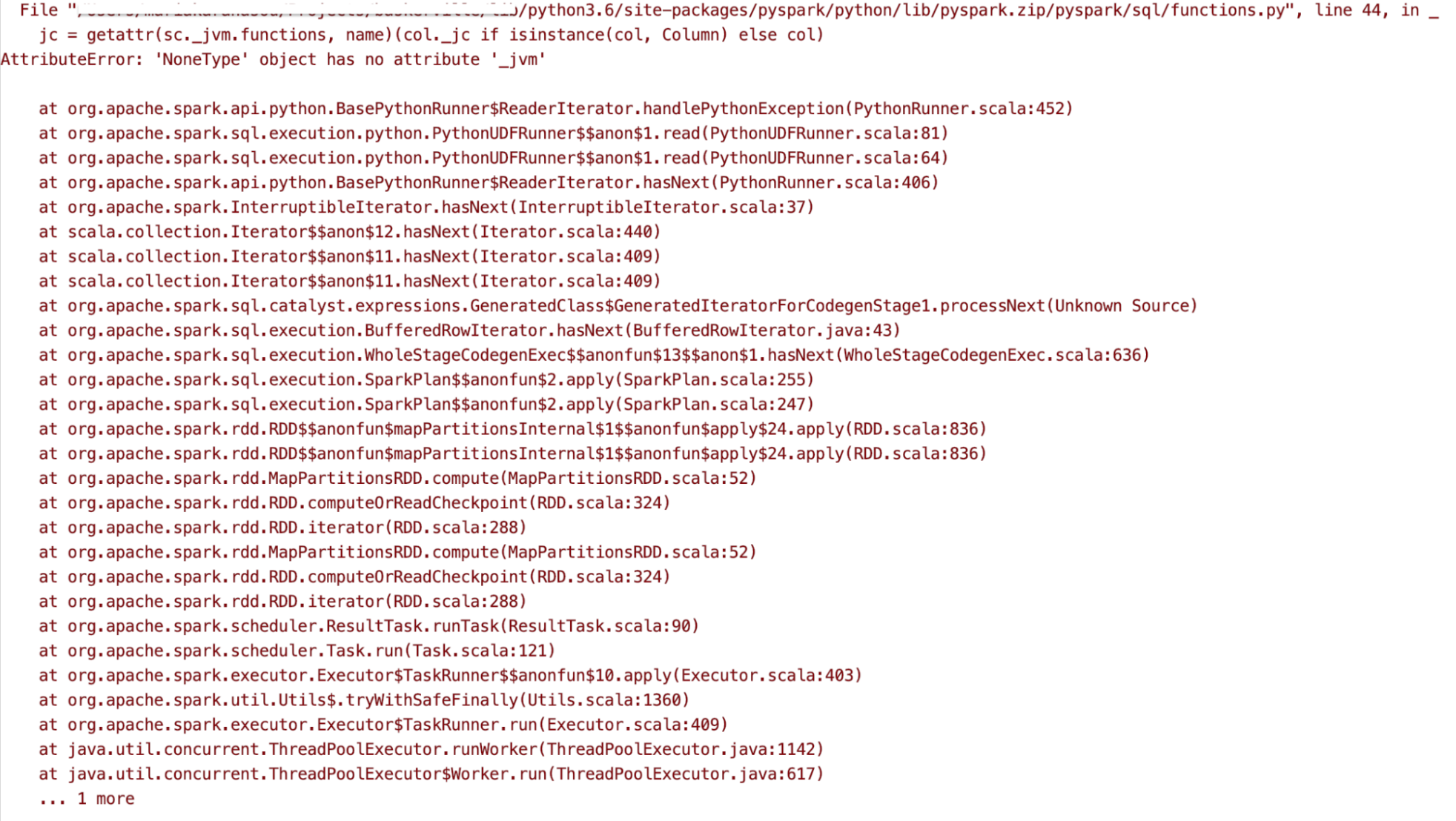

PySpark and RSpark, the Python and R API wrappers for Spark, also have notorious difficulty in interpreting error messages. Simple syntax errors are often hidden under hundreds of lines of Scala stack traces. Figure 1-1 shows a typical stack trace of a Python PySpark error. Much of it is Java and Scala code, which does not leave the data scientist with many avenues for debugging or explanation.

Many of these issues have been front and center for the Apache Spark team, which has been working hard to mitigate them—improving error messages, adding pandas API syntax to Spark dataframes, and enabling Conda (the most popular Python package manager) files for dependency management. But this is not a catchall solution, and Spark was fundamentally written for functional programming and large data pipelines meant to be written once and run in batch, not constantly iterated upon.

Figure 1-1. Common PySpark stack trace

Shifting to Spark for production data science will likely require another person, such as a data engineer, to take the data scientist’s work and rewrite it entirely in Spark to scale the workload. This is a major hurdle for large companies and a contributing factor to the often-cited failure of 80% of data science initiatives.

Dask

One proposed solution is to use Dask, a pure Python implementation of a distributed compute framework. Dask has strong support for tools like pandas, NumPy, and scikit-learn, and it supports traditional data science workflows. Dask also works well with Python workflow orchestration tools like Prefect, which lets data scientists build end-to-end workflows using tools they understand well.

But Dask is not a full solution to the issue either. To start, Dask provides no support for R, Java, or Scala, leaving many engineers without familiar tools to work with. Additionally, Dask is not a full drop-in replacement for pandas and does not support the full capabilities of Python-based statistical and ML tools. Other projects (for example, Modin) have stepped in to pursue full pandas compatibility, but these are young systems that do not yet offer the maturity large enterprises need before they trust and adopt them.

The infrastructure needs of Dask present another potential hurdle. Using Dask to its full potential requires a cluster of machines capable of running Dask workloads. Because Dask is relatively new to the space, companies may not have the resources to deploy and support a Dask cluster, and far more infrastructure engineers are familiar with Spark than with Dask.

Companies such as Coiled are beginning to offer Dask as a service, managing all of the infrastructure for you. But these companies, much like Dask itself, are young and may not meet the strict security or stability requirements of a given organization. Dask also adds yet another tool to the ever-growing toolbox needed to bring data science out of the back room and into production.

Data Tooling Explosions

The preceding discussion may have exposed you to multiple projects and technologies you had never heard of before. That is indicative of another major hurdle to large organizations’ efforts to leverage data science: the explosion of data science tools.

Both Python and R significantly lower the barrier to developing new packages compared with many other programming languages. The major advantage of this is that the open source community constantly creates new projects that build on each other and continuously ease the daily lives of programmers, data scientists, and engineers. The difficulties come when organizations need to support this ever-growing list of tools, many of which have conflicting dependencies and untested stability and security. Supporting this web of tooling and infrastructure is a never-ending task that becomes a job in and of itself, taking resources away from the core goal: putting ML models into production.
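Conflicting dependencies often come down to arithmetic on version ranges. In the sketch below, three hypothetical tools each require a shared library within a `[minimum, maximum)` version range; if the intersection of those ranges is empty, no single environment can satisfy all of them. Version parsing here is deliberately simplistic (dotted integers only); real installers such as pip follow PEP 440, which the `packaging` library implements.

```python
def parse(version):
    """Turn '1.21.0' into (1, 21, 0) so versions compare numerically."""
    return tuple(int(part) for part in version.split('.'))


def compatible_range(requirements):
    """Intersect [min, max) version ranges; return None if no version satisfies all."""
    low = max(parse(lo) for lo, _ in requirements.values())
    high = min(parse(hi) for _, hi in requirements.values())
    return (low, high) if low < high else None


# Hypothetical tools that each pin the same shared library to a version range
needs_shared_lib = {
    'tool_a': ('1.20.0', '1.23.0'),
    'tool_b': ('1.22.0', '2.0.0'),
    'tool_c': ('1.24.0', '2.0.0'),
}
pair = {name: needs_shared_lib[name] for name in ('tool_a', 'tool_b')}
print(compatible_range(pair))              # ((1, 22, 0), (1, 23, 0))
print(compatible_range(needs_shared_lib))  # None: tool_a and tool_c cannot coexist
```

Every tool added to the stack adds another range to intersect, which is one reason the support burden grows faster than the tool count.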

Simply moving data from a data warehouse, data lake, or multiple original sources to an analytic environment raises a wealth of concerns and opportunities for human error, and organizations often turn to a plethora of startups and open source projects to manage it. Picking the proper tools, training a team on how to use them, and supporting their reliability is a job for an entire team, for which there may not be a budget. Because many organizations store their raw data in data lakes and data warehouses that are separate from the data stores their data scientists use, this single task can be a major barrier to streamlined ML.

Disparate Datasets and Poor Data Architectures

ML fails at organizations primarily because of improper storage, management, and ownership of raw data, and because of how that data is moved. Data science is, at its core, an iterative and experimental field of study. Support for rapid testing and for trial and error is core to its success in business. If data scientists must wait hours or days to access the data they need, and every change to a request means another wait, progress will be slow or impossible.

In many modern data architectures, refined data is stored either in data warehouses that are not designed around the needs of data scientists and analysts, or in data lakes that hold so much data, in so many stages of refinement, that locating, accessing, transforming, and experimenting with it is difficult. Opaque changes in upstream data sources and a lack of visibility into data lineage can reduce the efficiency of data scientists who depend on data engineers to provide clean data for their modeling. If the underlying data sent to data scientists changes between model-training iterations, catching that change can be extremely challenging.
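One lightweight way to catch silent upstream changes is to record a fingerprint of the exact training data alongside every model and compare it on the next iteration. The sketch below hashes each record with SHA-256 and then hashes the sorted record digests, so reordered rows or columns produce the same fingerprint while any changed value does not. The record layout is hypothetical, records must be JSON-serializable, and a mismatch only tells you that the data changed, not what changed.

```python
import hashlib
import json


def fingerprint(rows):
    """Order-insensitive SHA-256 fingerprint of a list of dict records.

    Keys are sorted within each row so column order does not matter, and the
    row digests are sorted so row order does not matter either."""
    row_digests = sorted(
        hashlib.sha256(json.dumps(row, sort_keys=True).encode()).hexdigest()
        for row in rows
    )
    return hashlib.sha256(''.join(row_digests).encode()).hexdigest()


training_v1 = [{'customer_id': 1, 'income': 52000}, {'customer_id': 2, 'income': 61000}]
# Same data, delivered in a different row order
training_v1_reordered = list(reversed(training_v1))
# Upstream change: one value was silently altered
training_v2 = [{'customer_id': 1, 'income': 52000}, {'customer_id': 2, 'income': 60000}]

print(fingerprint(training_v1) == fingerprint(training_v1_reordered))  # True
print(fingerprint(training_v1) == fingerprint(training_v2))            # False
```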

The enterprise data warehouse (EDW) offers advantages including performance and concurrency, reliability, and strong security. Commercial EDWs such as Snowflake, Amazon Redshift, and Vertica usually come with a strong SQL interface familiar to data engineers and architects.

But data warehouses were not originally architected for the complex, iterative computations that state-of-the-art data science requires, and they have struggled to support that need. Just a few years ago, they generally didn’t support time-series, semi-structured, or streaming data. They often ran on specialized hardware, which made scaling expensive, and they required slow batch extract, transform, and load (ETL) processes to refine the data and get it into the hands of data analysts. Even now, many analytical databases at the heart of data warehouses support only a subset of the data formats needed for data science. The structured data they store is focused on the needs of business intelligence, not data science. These limitations have become a major bottleneck to achieving production ML.

In an attempt to mitigate the shortcomings of the data warehouse, the data lake was introduced in the 2010s. Data lakes supported streaming and time-series data, natively handled varied data structures, including semi-structured data (such as JSON, Avro, and logs), and scaled cheaply because they ran on commodity hardware.
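Semi-structured records carry their own nested structure, which does not map directly onto the fixed columns of a warehouse table. The sketch below flattens nested JSON objects into dotted column names, a common preprocessing step before loading such data into a tabular schema. The event record is hypothetical, and lists are left as-is because they have no single obvious column representation; pandas provides similar behavior through `pandas.json_normalize`.

```python
import json


def flatten(record, prefix=''):
    """Flatten nested JSON objects into dotted column names for a tabular schema."""
    flat = {}
    for key, value in record.items():
        column = f'{prefix}{key}'
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f'{column}.'))
        else:
            flat[column] = value  # scalars and lists are kept as-is
    return flat


event = json.loads('{"user": {"id": 7, "plan": "pro"}, "action": "login", "tags": ["web"]}')
print(flatten(event))
# {'user.id': 7, 'user.plan': 'pro', 'action': 'login', 'tags': ['web']}
```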

But the data lake also came with major hurdles. Data lakes were complex and required specialized, highly paid engineers to operate. Their performance was poor, particularly with concurrent users: they bogged down and even failed with as few as 10 simultaneous requests. Over time, some other data lake weaknesses, such as the lack of ACID compliance (atomicity, consistency, isolation, and durability) and weak metadata management, have been addressed.

Most important, however, data lakes could not, and still struggle to, natively support the tools that data scientists need. Data scientists still routinely take subsets of data out of the lake, combine those with data from the data warehouse or other sources, and process those combined subsets in memory by using Python and R. Data lakes solved one problem: centralizing a company’s massive aggregation of raw data. But they did not solve the data scientists’ problem: scaling the workflow they were used to.
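The extract-and-combine pattern described above looks roughly like the sketch below. Two in-memory SQLite databases stand in, hypothetically, for a data lake and a data warehouse; a filtered subset is pulled out of each system, and the join happens in Python memory, outside both. At real scale, every extract must fit in that memory, and the join logic lives in a notebook rather than in a governed system.

```python
import sqlite3

# Two in-memory SQLite databases as hypothetical stand-ins for a data lake and a warehouse
lake = sqlite3.connect(':memory:')
lake.execute('CREATE TABLE clicks (customer_id INTEGER, clicks INTEGER)')
lake.executemany('INSERT INTO clicks VALUES (?, ?)', [(1, 30), (2, 5), (3, 12)])

warehouse = sqlite3.connect(':memory:')
warehouse.execute('CREATE TABLE customers (customer_id INTEGER, region TEXT)')
warehouse.executemany('INSERT INTO customers VALUES (?, ?)', [(1, 'EU'), (2, 'US'), (3, 'EU')])

# Step 1: pull a subset out of each system
clicks = dict(lake.execute('SELECT customer_id, clicks FROM clicks WHERE clicks >= 10'))
regions = dict(warehouse.execute('SELECT customer_id, region FROM customers'))

# Step 2: join the two extracts in Python memory, outside either database
combined = [(cid, regions[cid], n) for cid, n in sorted(clicks.items()) if cid in regions]
print(combined)  # [(1, 'EU', 30), (3, 'EU', 12)]

lake.close()
warehouse.close()
```

If both tables lived in the same system, the same result would be a single SQL `JOIN`, with no data leaving the database.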

Complexities of Data Science and the Cost of Skills

Last, but certainly not least, is the technical depth needed to actually perform adequate data science. As a field, data science and ML have exploded in popularity, and with that popularity, a plethora of easy-to-use open source tools have emerged. But in practice, these tools do not usually address complex, company-specific business problems. When using ML to determine who gets a small business loan, for example, it’s simple enough to train a scikit-learn model and analyze its SHapley Additive exPlanations (SHAP) values, but only a trained data scientist can help you understand whether your model is inheriting biases from your historical data, or where specifically (and why) your model is underperforming.
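For intuition about what SHAP values are, the sketch below computes exact Shapley values by brute force: each feature’s value is its weighted average marginal contribution to the model’s output over every subset of the other features, with absent features set to a baseline. The loan-scoring model, its three features, and the baseline applicant are all hypothetical. Libraries such as `shap` use faster algorithms and several different ways of handling absent features; this version enumerates all 2^n subsets and is only practical for a handful of features.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, x, baseline):
    """Exact Shapley values by enumerating every subset of the other features.

    Features outside a subset are replaced by their baseline value."""
    n = len(x)

    def value(subset):
        point = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(point)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        contribution = 0.0
        for size in range(n):
            for subset in combinations(others, size):
                # Standard Shapley weight: |S|! * (n - |S| - 1)! / n!
                weight = factorial(size) * factorial(n - size - 1) / factorial(n)
                contribution += weight * (value(set(subset) | {i}) - value(set(subset)))
        phi.append(contribution)
    return phi


# Hypothetical loan-scoring model over (income, debt_ratio, years_in_business)
def loan_score(features):
    income, debt_ratio, years = features
    return 0.5 * income - 2.0 * debt_ratio + 0.3 * years


applicant = [4.0, 0.5, 2.0]
average_applicant = [3.0, 0.4, 5.0]
print(shapley_values(loan_score, applicant, average_applicant))
# roughly [0.5, -0.2, -0.9], up to floating-point rounding
```

For a linear model, each value reduces to weight × (feature − baseline), and the values sum to the difference between the applicant’s score and the baseline score. Computing the numbers is the easy part; judging whether they reveal bias inherited from historical data still requires expertise.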

Truly powerful data science requires mastery of data and statistics, a strong ability to program, and a keen understanding of storytelling and data visualization.

Few people combine all three skill sets, and as a result, good data scientists are extraordinarily expensive to employ.

To mitigate that cost, an increasing number of architectures are trying to support the concept of a citizen data scientist: someone with a deep understanding of a specific business and its problems, often a business analyst, who also has a basic grasp of applicable data science concepts. This role is generally filled by someone who uses SQL daily and understands data visualization tools, but who typically is not proficient in programming languages like Python or R and lacks the depth of expertise expected of someone who has spent years training in data science.

At this point, we’ve looked at the reasons ML projects fall short of their potential. The remainder of this report walks through the evolution of data warehouses and data lakes into a unified architecture, an evolution that supports successful ML implementations across many industries. Importantly, we’ll dive into in-database ML, a new concept that will fundamentally change the way enterprises use ML and democratize its usage. Finally, we’ll see how this unified architecture helps companies productionize ML pipelines and take their data scientists out of the back room.

Get Accelerate Machine Learning with a Unified Analytics Architecture now with the O’Reilly learning platform.
