Topic models: Past, present, and future

David Blei, co-creator of one of the most popular tools in text mining and machine learning, discusses the origins and applications of topic models.

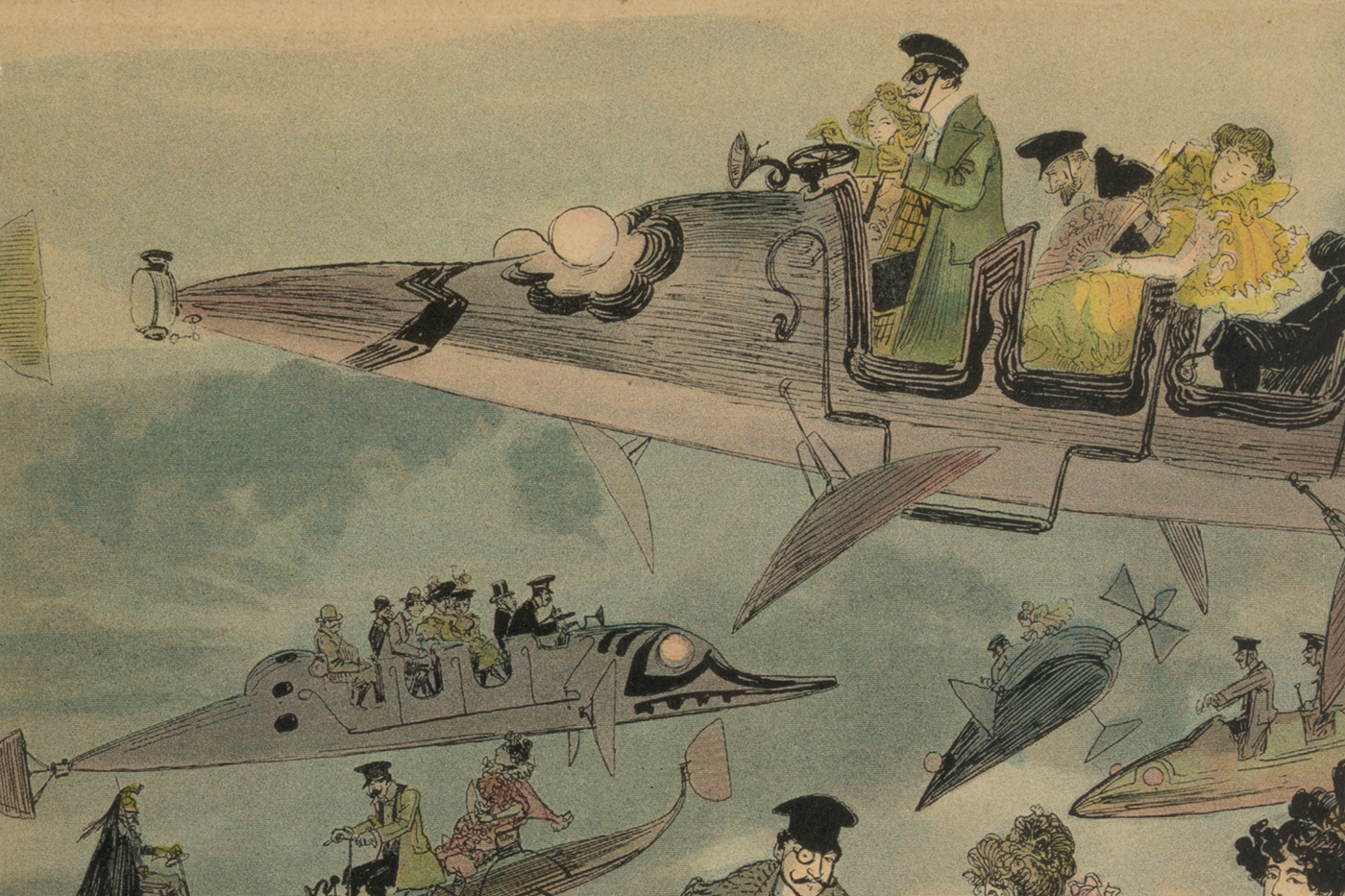

Futuristic Air Travel in Paris (source: Library of Congress)

I don’t remember when I first came across topic models, but I do remember being an early proponent of them in industry. I came to appreciate how useful they were for exploring and navigating large amounts of unstructured text, and was able to use them, with some success, in consulting projects. When an MCMC algorithm came out, I even cooked up a Java program that I came to rely on (up until Mallet came along).

I recently sat down with David Blei, co-author of the seminal paper on topic models and still one of the leading researchers in the field. We talked about the origins of topic models, their applications, improvements to the underlying algorithms, and his new role in training data scientists at Columbia University.

Generating features for other machine learning tasks

Blei regularly interacts with companies that use ideas from his group’s research projects. He noted that people in industry often use topic models for “feature generation.” An added bonus is that the features topic models produce are easy to explain and interpret:

“You might analyze a bunch of New York Times articles for example, and there’ll be an article about sports and business, and you get a representation of that article that says this is an article and it’s about sports and business. Of course, the ideas of sports and business were also discovered by the algorithm, but that representation, it turns out, is also useful for prediction. My understanding when I speak to people at different startup companies and other more established companies is that a lot of technology companies are using topic modeling to generate this representation of documents in terms of the discovered topics, and then using that representation in other algorithms for things like classification or other things.”
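To make the idea of topic-based features concrete, here is a minimal sketch in pure Python. It fits a small topic model with a collapsed Gibbs sampler (one MCMC approach) and returns, for each document, a vector of topic proportions that could be handed to a classifier. The toy corpus, the function name `fit_lda_gibbs`, and the hyperparameter values are all assumptions made for illustration; this is not the method or code used by Blei's group or by the companies he mentions.

```python
import random


def fit_lda_gibbs(docs, num_topics, alpha=0.1, beta=0.01, iters=200, seed=0):
    """Fit a topic model with collapsed Gibbs sampling (illustrative sketch).

    docs is a list of token lists. Returns one list of topic proportions
    per document; these are the "features" for downstream models.
    """
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    vocab_size = len(vocab)

    doc_topic = [[0] * num_topics for _ in docs]               # tokens in doc d assigned to topic k
    topic_word = [[0] * vocab_size for _ in range(num_topics)]  # times word v is assigned to topic k
    topic_total = [0] * num_topics                              # total tokens assigned to topic k
    assignments = []

    # Start from a random topic assignment for every token.
    for d, doc in enumerate(docs):
        z_d = []
        for w in doc:
            k = rng.randrange(num_topics)
            z_d.append(k)
            doc_topic[d][k] += 1
            topic_word[k][word_id[w]] += 1
            topic_total[k] += 1
        assignments.append(z_d)

    for _ in range(iters):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v = word_id[w]
                k = assignments[d][i]
                # Remove the token's current assignment from the counts.
                doc_topic[d][k] -= 1
                topic_word[k][v] -= 1
                topic_total[k] -= 1
                # Resample its topic given all other assignments.
                weights = [
                    (doc_topic[d][t] + alpha)
                    * (topic_word[t][v] + beta)
                    / (topic_total[t] + vocab_size * beta)
                    for t in range(num_topics)
                ]
                k = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = k
                doc_topic[d][k] += 1
                topic_word[k][v] += 1
                topic_total[k] += 1

    # Smoothed topic proportions per document: the feature vectors.
    features = []
    for d, doc in enumerate(docs):
        denom = len(doc) + num_topics * alpha
        features.append([(doc_topic[d][t] + alpha) / denom for t in range(num_topics)])
    return features


# Hypothetical toy corpus: two sports-like and two business-like documents.
docs = [
    "team game score win season coach".split(),
    "coach team win playoff game".split(),
    "market stock profit company shares".split(),
    "company profit market earnings stock".split(),
]
features = fit_lda_gibbs(docs, num_topics=2)
for row in features:
    print([round(p, 2) for p in row])
```

Each row is a short, interpretable description of one document (how much of it belongs to each discovered topic), which is what makes this kind of representation convenient as input to classification or other prediction tasks.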

Scaling to large corpora

The early algorithms that came out of the academic research community couldn’t handle large numbers of unstructured documents. I remember having problems fitting topic models to 100,000 documents (a relatively modest corpus by today’s standards). But things changed around 2010, Blei explained:

“At some point, Google showed me that they fit a topic model to a billion documents and a million topics, something I couldn’t have done, and were using it as features for various things … Before 2010, it was hard for us to analyze with our modest academic clusters … to analyze 100,000 documents. That was a big deal for us — if we analyzed 100,000 documents, that was a big piece of analysis. After that work, we now regularly can analyze half a million documents, three million documents, five million documents — and with a computer cluster, we can analyze billions of documents. This was a big change. This changed the scale at which we could do these kinds of analyses and, further, our algorithm generalized to many different kinds of statistical models.

“That’s just a little story, part of a bigger story in scaling up machine learning. I think a lot of the interest in statistical machine learning and data science right now is thanks both to it being a rich field that provides tools that help us understand and exploit patterns in data, but also that we’ve all been working hard to bring it up to date to modern data set sizes. This idea, which is called stochastic optimization, is one of the cornerstones of scaling up machine learning. What’s amazing about this is, that idea is from 1951. It’s Robbins and Monro, 1951, this little eight page mathematics paper.”
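The stochastic optimization idea Blei refers to can be illustrated on a toy problem. The sketch below is not topic model inference; it only shows the Robbins-Monro pattern of following noisy gradient estimates, one data point at a time, with step sizes that shrink over time (here 1/n, which satisfies the usual conditions that the steps sum to infinity while their squares sum to a finite value). The function names and the Gaussian data stream are assumptions chosen for illustration.

```python
import random


def robbins_monro(noisy_gradient, x0, num_steps):
    """Iterate x <- x - a_n * g_n(x), where g_n is a noisy gradient estimate."""
    x = x0
    for n in range(1, num_steps + 1):
        step = 1.0 / n  # decreasing step size a_n
        x -= step * noisy_gradient(x)
    return x


# Hypothetical data stream: noisy observations centered on 3.0.
rng = random.Random(0)
samples = [rng.gauss(3.0, 1.0) for _ in range(10_000)]
stream = iter(samples)


def noisy_gradient(x):
    # Gradient of 0.5 * (x - y)**2 computed from a single observation y.
    # Averaged over the data, it points toward the mean of the stream.
    return x - next(stream)


estimate = robbins_monro(noisy_gradient, x0=0.0, num_steps=len(samples))
print(round(estimate, 3))
```

Each update touches only one observation, so the cost per step does not grow with the size of the data set. That property, applied to subsampled documents rather than single numbers, is the general reason this family of methods helps with very large corpora.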

Explosion of work in industry and academia

I think it’s fair to say that topic models are now used by data analysts across a wide range of disciplines and companies. In recent years, topic modeling has become a technique that researchers in the humanities and social sciences have come to rely on. Blei marvels at the number of people using topic models in their work:

“It’s funny, I remember at first I was aware of all the different extensions, and at some point that changed. I no longer can keep my hand on everything. I can’t know about all the different topic modeling papers that are out there, and so I get asked a lot of questions, ‘Hey, has anybody written a paper about this or this?’ I usually have to answer, ‘I’m not sure.’ I know what my students are up to, and when something has a lot of traction, of course, I know about it, but at some point I lost my own handle of the topic modeling literature because it was growing so fast. … I think that the success stories for topic modeling that I find most compelling are the ones where people in the social sciences and in political science and in the digital humanities are using these tools to help them with their close reading of large archives of documents.”

You can listen to our entire interview in the SoundCloud player above, or subscribe through TuneIn, iTunes, SoundCloud, or RSS.